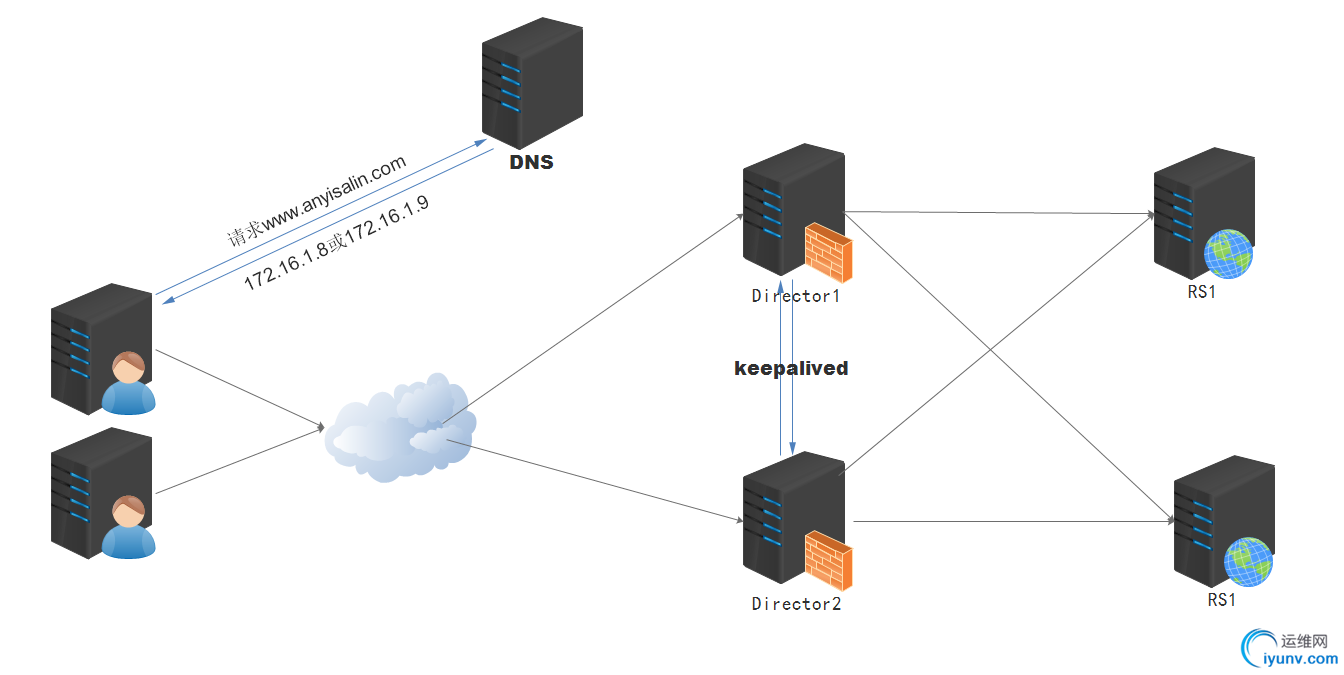

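LVS Series: High Availability and Load Balancing for Directors with LVS + Keepalived and DNS Round Robin

Preface

This is the third post in the LVS series. The first two covered basic LVS usage; this one walks through a more complex experiment. Without further ado, let's begin!

What is Keepalived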

What is Keepalived ?

Keepalived is a routing software written in C. The main goal of this project is to provide simple and robust facilities for loadbalancing and high-availability to Linux system and Linux based infrastructures. Loadbalancing framework relies on well-known and widely used Linux Virtual Server (IPVS) kernel module providing Layer4 loadbalancing. Keepalived implements a set of checkers to dynamically and adaptively maintain and manage loadbalanced server pool according their health. On the other hand high-availability is achieved by VRRP protocol. VRRP is a fundamental brick for router failover. In addition, Keepalived implements a set of hooks to the VRRP finite state machine providing low-level and high-speed protocol interactions. Keepalived frameworks can be used independently or all together to provide resilient infrastructures. (Quoted from the official documentation.)

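In short, keepalived is a project written in C whose main goal is to provide load balancing and high availability for Linux systems. For load balancing it relies on the Linux Virtual Server (IPVS) kernel module, which works at layer 4, and it adds a set of checkers that monitor the health of the hosts in the load-balanced pool and maintain the pool dynamically. High availability is achieved through VRRP (Virtual Router Redundancy Protocol).

For details on VRRP, see the H3C technical white paper on VRRP and RFC 3768: Virtual Router Redundancy Protocol (VRRP).

Experiment overview

LVS performs very well but is missing several features: for example, it cannot health-check the backend servers, and the director easily becomes a single point of failure. The third-party tool keepalived can supply both. In this experiment we use keepalived to make the LVS directors highly available, with the two directors acting as master and backup for each other, so that both can accept client requests distributed by DNS round robin over A records and forward them to the backend hosts. The result is high availability and load balancing for the Director.

Experiment topology

The diagram is not very expressive; the experiment uses the DR model.

In the lab environment, VIP1 is 172.16.1.8 and VIP2 is 172.16.1.9.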

| Host | IP address | Role |
| --- | --- | --- |
| director1.anyisalin.com | VIP1, VIP2, DIP: 172.16.1.2 | Director1 |
| director2.anyisalin.com | VIP1, VIP2, DIP: 172.16.1.3 | Director2 |
| rs1.anyisalin.com | VIP, RIP: 172.16.1.4 | RealServer 1 |
| rs2.anyisalin.com | VIP, RIP: 172.16.1.5 | RealServer 2 |
| ns.anyisalin.com | IP: 172.16.1.10 | DNS |

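Note: SELinux and iptables are disabled on every host in this experiment.

Experiment steps

Configure Keepalived (1)

Make the Directors' VIPs master and backup for each other

The following operations are all performed on director1.

[iyunv@director1 ~]# ntpdate 0.centos.pool.ntp.org #synchronize the time

[iyunv@director1 ~]# yum install -y keepalived &> /dev/null && echo success #install keepalived

success

[iyunv@director1 ~]# vim /etc/keepalived/keepalived.conf #change the relevant part of the configuration file as follows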

vrrp_instance VI_1 {
    state MASTER
    interface eth0
    virtual_router_id 51
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        172.16.1.8 dev eth0 label eth0:0
    }
}

vrrp_instance VI_2 {
    state BACKUP
    interface eth0
    virtual_router_id 52
    priority 99
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 2222
    }
    virtual_ipaddress {
        172.16.1.9 dev eth0 label eth0:1
    }
}

The following operations are all performed on director2.

[iyunv@director2 ~]# ntpdate 0.centos.pool.ntp.org #synchronize the time

[iyunv@director2 ~]# yum install -y keepalived &> /dev/null && echo success #install keepalived

success

[iyunv@director2 ~]# vim /etc/keepalived/keepalived.conf #change the relevant part of the configuration file as follows

vrrp_instance VI_1 {
    state BACKUP
    interface eth0
    virtual_router_id 51
    priority 99
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        172.16.1.8 dev eth0 label eth0:0
    }
}

vrrp_instance VI_2 {
    state MASTER
    interface eth0
    virtual_router_id 52
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 2222
    }
    virtual_ipaddress {
        172.16.1.9 dev eth0 label eth0:1
    }
}

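Start keepalived on director1 and director2 at the same time.

[iyunv@director1 ~]# service keepalived start

[iyunv@director2 ~]# service keepalived start

Test

In the default state each director holds the VIP for which it is MASTER: director1 holds 172.16.1.8 and director2 holds 172.16.1.9.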

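When we stop the keepalived service on director1, its VIP (172.16.1.8) automatically moves to director2.

When we start the keepalived service on director1 again, the VIP moves back to director1, because director1 has the higher priority for that instance.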
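The original screenshots of this test are not available, so here is one way to watch the failover from the command line. It is a minimal sketch rather than the author's procedure: `held_vips` is a hypothetical helper name, and it assumes the one-line-per-address output format of `ip -o -4 addr show`.

```shell
#!/bin/sh
# held_vips: read `ip -o -4 addr show` output on stdin and print whichever of
# the two VIPs from this experiment are present. Hypothetical helper.
held_vips() {
    awk '$3 == "inet" {
        split($4, a, "/")    # drop the prefix length after the slash
        if (a[1] == "172.16.1.8" || a[1] == "172.16.1.9") print a[1]
    }'
}

# Usage on a director (requires the iproute tools):
#   ip -o -4 addr show dev eth0 | held_vips
```

Running it on both directors before and after `service keepalived stop` should show the VIP disappearing from one host and appearing on the other.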

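Configure LVS

Configure Keepalived (2)

We use the DR model here. Because keepalived generates the rules itself by calling the ipvs interface, we do not need ipvsadm to create them; we only use the ipvsadm command to inspect the resulting ipvs rules.

The following operations must be performed on both director1 and director2. To keep the post short, director2 is not shown.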
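The configuration used in this step was published as a screenshot that has not survived, so the following is only a sketch of what a matching `virtual_server` section could look like, not the author's actual file. The addresses come from the table above; everything else is an assumption: HTTP on port 80, `rr` scheduling, a sorry server on 127.0.0.1:80, the timing values, and the `nb_get_retry` keyword as used by the keepalived 1.2.x releases of that era. `emit_virtual_server` is a hypothetical helper name.

```shell
#!/bin/sh
# Print a keepalived virtual_server block for one VIP (DR mode).
# Assumed, not from the original post: port 80, rr scheduling, sorry server
# on 127.0.0.1:80, and all timing values.
emit_virtual_server() {
    vip=$1
    cat <<EOF
virtual_server $vip 80 {
    delay_loop 6
    lb_algo rr
    lb_kind DR
    protocol TCP
    sorry_server 127.0.0.1 80
EOF
    # One real_server entry per RIP, each with an HTTP health check
    for rip in 172.16.1.4 172.16.1.5; do
        cat <<EOF
    real_server $rip 80 {
        weight 1
        HTTP_GET {
            url {
                path /
                status_code 200
            }
            connect_timeout 3
            nb_get_retry 3
            delay_before_retry 3
        }
    }
EOF
    done
    echo "}"
}

# Print one block per VIP; review the output before adding it to
# /etc/keepalived/keepalived.conf on each director.
emit_virtual_server 172.16.1.8
emit_virtual_server 172.16.1.9
```

After keepalived is restarted with such a section in place, `ipvsadm -Ln` on the director should list both VIPs with the two real servers behind each.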

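Test LVS

Test director1 and director2

When we stop the web service on rs1, the health check detects the failure and removes rs1 from the pool automatically.

When we stop the web service on both rs1 and rs2, the sorry server is enabled automatically.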
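To follow these changes without reading the full `ipvsadm` table each time, the output can be reduced to the services and their current real servers. This is a small sketch under the assumption that the usual `ipvsadm -Ln` layout is in use; `list_real_servers` is a hypothetical helper name.

```shell
#!/bin/sh
# list_real_servers: read `ipvsadm -Ln` output on stdin and print each
# virtual service followed by the real servers currently attached to it.
list_real_servers() {
    awk '
        $1 == "TCP" || $1 == "UDP"  { print "service " $2 }   # virtual service line
        $1 == "->" && $2 ~ /^[0-9]/ { print "  real " $2 }    # real server line, header skipped
    '
}

# Usage on a director (as root):
#   ipvsadm -Ln | list_real_servers
```

With rs1 stopped, only 172.16.1.5:80 should remain under each service; with both stopped, one would expect only the sorry server address to be listed.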

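Configure DNS

There is not much to say about the DNS setup; if you are interested, see my blog post "DNS and BIND 配置指南" (a DNS and BIND configuration guide).

The following operations are all performed on ns.

[iyunv@ns /]# yum install bind bind-utils -y --nogpgcheck &> /dev/null && echo success #install bind

success

[iyunv@ns /]# vim /etc/named.conf #change the main configuration file as follows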

options {
    directory "/var/named";
};

zone "." IN {
    type hint;
    file "named.ca";
};

include "/etc/named.rfc1912.zones";
include "/etc/named.root.key";

[iyunv@ns /]# vim /etc/named.rfc1912.zones #append the following to the end of the file

zone "anyisalin.com" IN {
    type master;
    file "anyisalin.com.zone";
};

[iyunv@ns /]# vim /var/named/anyisalin.com.zone #create the zone file

$TTL 600
$ORIGIN anyisalin.com.
@       IN SOA  ns.anyisalin.com. admin.anyisalin.com. (
                20160409    ; serial
                1D          ; refresh
                5M          ; retry
                1W          ; expire
                1D          ; negative-cache TTL
                )
@       IN NS   ns
ns      IN A    172.16.1.10
www     IN A    172.16.1.8
www     IN A    172.16.1.9

[iyunv@ns /]# service named start #start named

Generating /etc/rndc.key: [ OK ]

Starting named: [ OK ]

Test DNS round robin

DNS round robin is working: www.anyisalin.com resolves to both 172.16.1.8 and 172.16.1.9.
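The rotation can be checked by querying the name several times and counting which address is returned first. The snippet below is a sketch rather than the author's test; `first_answer_counts` is a hypothetical helper name, and the order in which BIND rotates the records depends on its version and `rrset-order` setting.

```shell
#!/bin/sh
# first_answer_counts: read one IP address per line on stdin and print
# "address count" for each distinct address.
first_answer_counts() {
    sort | uniq -c | awk '{ print $2, $1 }'
}

# Usage from any host that can reach the name server (requires dig from bind-utils):
#   for i in 1 2 3 4; do
#       dig +short www.anyisalin.com @172.16.1.10 | head -n 1
#   done | first_answer_counts
```

If both VIPs show up with similar counts, clients are being spread across the two directors.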

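Final test

After all these experiments, let's combine the earlier results in one final test. I pointed my local machine's DNS server at 172.16.1.10 for testing, starting from the default state.

We then forcibly stop keepalived on director2, and access is still unaffected. At this point director1 has taken over director2's IP.

Summary

We used DNS round robin to balance load across the LVS Directors and keepalived to make the Directors highly available, while the Directors themselves balance load across the backend real servers. Taken together, this is a fairly complete architecture. There is still a lot this post does not cover, but I am short on time and will leave it here; thank you for your understanding. The LVS series may end with this post.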
