This article is the follow-up to hadoop 2.x-HDFS HA --Part I: abstraction, and covers these topics:

2.installation HA

2.1 manual failover

2.2 auto failover

3.conclusion

2.installation HA

2.1 manual failover

In this mode you fail the cluster over by hand with a few commands. The drawback is that it's hard to know when or why a failure occurred. Of course, this is better than nothing :)

Here are the role assignments in my cluster:

| host | nn | jn | dn |
| --- | --- | --- | --- |
| hd1 | y | y | y |
| hd2 | y | y | y |

Yes, in general an odd number of journalnodes is recommended to get the most fault tolerance out of them, but this cluster only validates the HA function, so two is acceptable here.

Building on the configs in install hadoop-2.5 without HDFS HA /Federation, the following properties need to be added or altered:

| mode | property to be added/altered | value | abstract |
| --- | --- | --- | --- |
| HA manual failover | dfs.nameservices | mycluster | logical name of this name service |
| | dfs.ha.namenodes.mycluster | nn1,nn2 | the key is formatted as dfs.ha.namenodes.#serviceName#; this name service contains two namenodes |
| | dfs.namenode.rpc-address.mycluster.nn1 | hd1:8020 | the internal communication addr |
| | dfs.namenode.rpc-address.mycluster.nn2 | hd2:8020 | |
| | dfs.namenode.http-address.mycluster.nn1 | hd1:50070 | the web ui address |
| | dfs.namenode.http-address.mycluster.nn2 | hd2:50070 | |
| | dfs.namenode.shared.edits.dir | qjournal://hd1:8485;hd2:8485/mycluster | |
| | dfs.client.failover.proxy.provider.mycluster | org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider | |
| | dfs.ha.fencing.methods | sshfence | |
| | dfs.ha.fencing.ssh.private-key-files | /home/hadoop/.ssh/id_rsa | |
| | dfs.ha.fencing.ssh.connect-timeout | 10000 | |
| | fs.defaultFS | hdfs://mycluster | the suffix of this value must be the same as the property 'dfs.nameservices' set in hdfs-site.xml |
| | dfs.journalnode.edits.dir | /usr/local/hadoop/data-2.5.1/journal | |
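Before starting any daemon, it can help to read a few of these properties back through the client configuration. The sketch below assumes the `hdfs` command is on the PATH and picks up the edited config directory. The function name `show_ha_conf` and the selection of keys are my own choices, not part of Hadoop.

```shell
# Print the effective value of a few HA-related keys.
# Assumes `hdfs` is on PATH and reads the edited core-site.xml / hdfs-site.xml.
show_ha_conf() {
    for key in dfs.nameservices \
               dfs.ha.namenodes.mycluster \
               dfs.namenode.shared.edits.dir \
               fs.defaultFS; do
        # `hdfs getconf -confKey <key>` prints the value of a single key
        printf '%s = %s\n' "$key" "$(hdfs getconf -confKey "$key")"
    done
}
```

Calling `show_ha_conf` on hd1 and hd2 should print the same values on both hosts. If fs.defaultFS does not end in the name given by dfs.nameservices, clients will not find the name service.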

2.1.2 steps to startup

The steps below must be run in the order given.

2.1.2.1 startup journalnode

Go to every journalnode host and run

sbin/hadoop-daemon.sh start journalnode

2.1.2.2 go to the first NN, format it, then start it

hdfs namenode -format

hadoop-daemon.sh start namenode

Then go to the remaining NN (the one that was not formatted) and fetch the fs image from the first NN by running

bin/hdfs namenode -bootstrapStandby

sbin/hadoop-daemon.sh start namenode

2.1.2.3 spawn datanode

sbin/hadoop-daemons.sh start datanode
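The ordered steps above can be sketched as one script driven from a single host. This is only an illustration under several assumptions: passwordless ssh between the hosts, the same install path on hd1 and hd2, and a login environment on each host that already has JAVA_HOME set. The helpers `run_on` and `start_ha_cluster` and the `DRY_RUN` switch are hypothetical names I made up.

```shell
# Install path taken from the prompts in this article; override if yours differs.
HADOOP_HOME=${HADOOP_HOME:-/usr/local/hadoop/hadoop-2.5.1}

# Hypothetical helper: run a command on a host from the Hadoop home.
# With DRY_RUN=1 the ssh command is printed instead of executed.
run_on() {
    host=$1
    shift
    ${DRY_RUN:+echo} ssh "$host" "cd $HADOOP_HOME && $*"
}

start_ha_cluster() {
    # 1. journalnodes first, on every JN host
    for jn in hd1 hd2; do
        run_on "$jn" sbin/hadoop-daemon.sh start journalnode
    done
    # 2. format (first-time setup only, this wipes metadata) and start the first NN
    run_on hd1 bin/hdfs namenode -format
    run_on hd1 sbin/hadoop-daemon.sh start namenode
    # 3. copy the fs image to the second NN, then start it
    run_on hd2 bin/hdfs namenode -bootstrapStandby
    run_on hd2 sbin/hadoop-daemon.sh start namenode
    # 4. datanodes last
    run_on hd1 sbin/hadoop-daemons.sh start datanode
}
```

Running `DRY_RUN=1 start_ha_cluster` first prints the seven ssh commands in order, so you can review them before doing anything destructive.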

Now both namenodes are in the 'standby' state. (Yes, this is the default behaviour in manual mode; if you want an active namenode to be chosen for you, use the auto-failover mode described in section 2.2 of this page instead.)

From here you can use a few commands to transition a namenode from standby to active and vice versa

hadoop@ubuntu:/usr/local/hadoop/hadoop-2.5.1/etc/hadoop-ha-manual$ hdfs haadmin

Usage: DFSHAAdmin [-ns <nameserviceId>]

[-transitionToActive <serviceId> [--forceactive]]

[-transitionToStandby <serviceId>]

[-failover [--forcefence] [--forceactive] <serviceId> <serviceId>]

[-getServiceState <serviceId>]

[-checkHealth <serviceId>]

[-help <command>]

a. transition the standby namenode nn1 to active; prints nothing if it's already active

hdfs haadmin -transitionToActive nn1

b. check whether nn1 is in the active state

hdfs haadmin -getServiceState nn1

This returns 'active'.

c. then check its health

hdfs haadmin -checkHealth nn1

This prints nothing if it's healthy; otherwise a connection exception is shown.

d. yes, you can also fail over from one namenode to the other so that the other becomes active

hdfs haadmin -failover nn1 nn2

This switches the active role from nn1 (active) to nn2 (standby). If you specify the option '--forcefence', the namenode nn1 will also be killed for fencing, so use it with care.

e. stop-dfs.sh shuts down all HDFS processes in the cluster, and start-dfs.sh starts them all up again

stop-dfs.sh

start-dfs.sh

hdfs haadmin -transitionToActive nn1

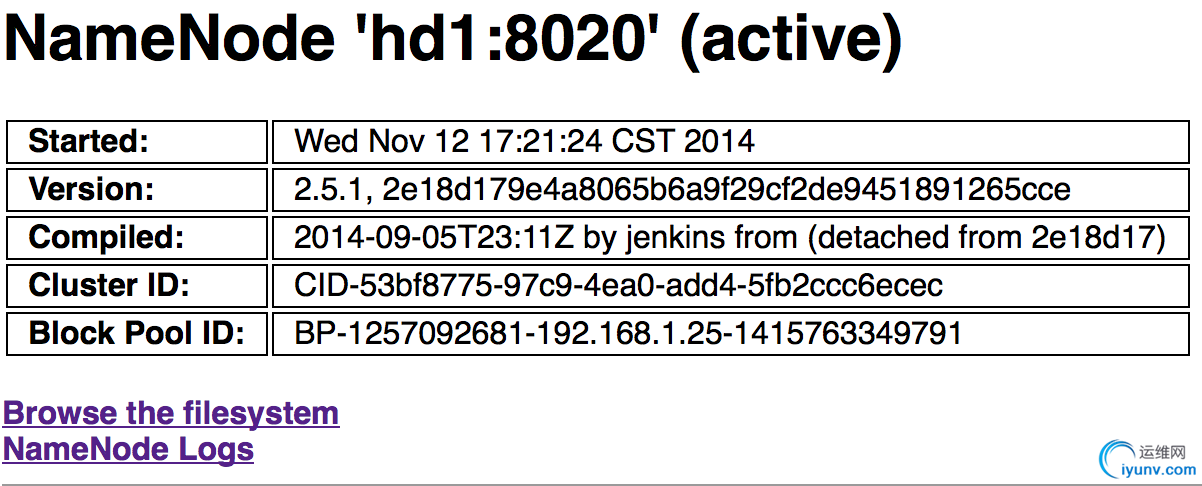

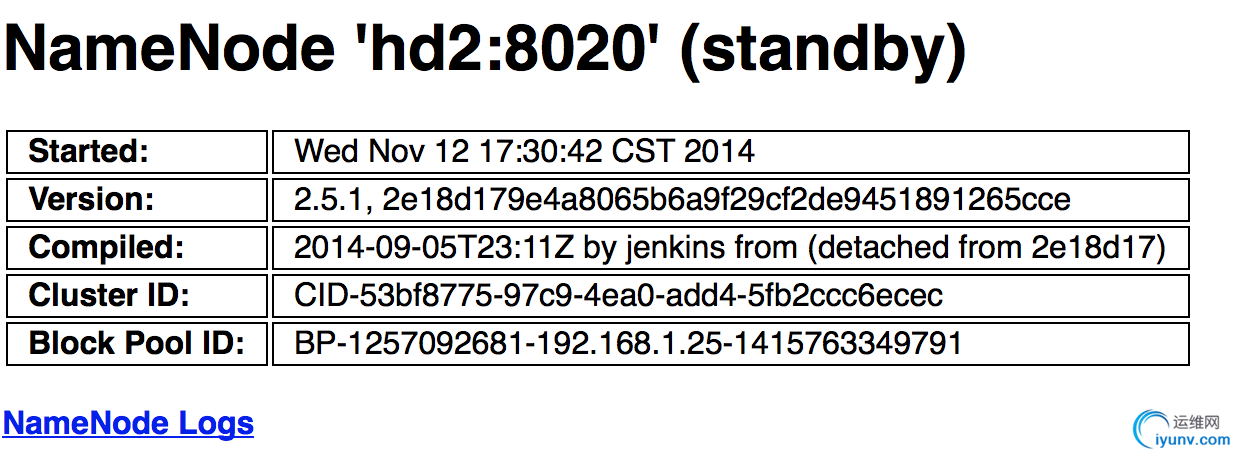

By now we can see that nn1 is 'active' and nn2 is 'standby'.
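The same check can be done from the command line instead of the web ui. The sketch below loops over the namenode IDs with `hdfs haadmin -getServiceState`. The function name `print_nn_states` is my own, and reporting a non-zero exit code as "unreachable" is an assumption about how you want a dead namenode displayed.

```shell
# Print the HA state of each namenode ID passed as an argument.
print_nn_states() {
    for nn in "$@"; do
        # -getServiceState prints the state of the given ID (e.g. active or standby)
        state=$(hdfs haadmin -getServiceState "$nn" 2>/dev/null) || state="unreachable"
        printf '%s: %s\n' "$nn" "$state"
    done
}
```

For this cluster the call would be `print_nn_states nn1 nn2`.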

Below is the order in which the daemons are started and stopped:

start order:

hadoop@ubuntu:/usr/local/hadoop/hadoop-2.5.1$ start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

Starting namenodes on [hd1 hd2]

hd1: starting namenode, logging to /usr/local/hadoop/hadoop-2.5.1/logs/hadoop-hadoop-namenode-ubuntu.out

hd2: starting namenode, logging to /usr/local/hadoop/hadoop-2.5.1/logs/hadoop-hadoop-namenode-bfadmin.out

hd1: datanode running as process 1539. Stop it first.

hd2: datanode running as process 3081. Stop it first.

Starting journal nodes [hd1 hd2]

hd1: journalnode running as process 1862. Stop it first.

hd2: starting journalnode, logging to /usr/local/hadoop/hadoop-2.5.1/logs/hadoop-hadoop-journalnode-bfadmin.out

Starting ZK Failover Controllers on NN hosts [hd1 hd2]

hd1: zkfc running as process 2090. Stop it first.

hd2: zkfc running as process 3388. Stop it first.

starting yarn daemons

starting resourcemanager, logging to /usr/local/hadoop/hadoop-2.5.1/logs/yarn-hadoop-resourcemanager-ubuntu.out

hd2: starting nodemanager, logging to /usr/local/hadoop/hadoop-2.5.1/logs/yarn-hadoop-nodemanager-bfadmin.out

hd1: starting nodemanager, logging to /usr/local/hadoop/hadoop-2.5.1/logs/yarn-hadoop-nodemanager-ubuntu.out

shutdown order (same as startup):

hadoop@ubuntu:/usr/local/hadoop/hadoop-2.5.1$ stop-all.sh

This script is Deprecated. Instead use stop-dfs.sh and stop-yarn.sh

Stopping namenodes on [hd1 hd2]

hd1: stopping namenode

hd2: stopping namenode

hd1: stopping datanode

hd2: stopping datanode

Stopping journal nodes [hd1 hd2]

hd1: stopping journalnode

hd2: stopping journalnode

Stopping ZK Failover Controllers on NN hosts [hd1 hd2]

hd1: stopping zkfc

hd2: stopping zkfc

stopping yarn daemons

no resourcemanager to stop

hd1: no nodemanager to stop

hd2: no nodemanager to stop

no proxyserver to stop

--------------

Now you can test a failover case:

hdfs dfs -put test.txt /

kill #process-of-nn1#

hdfs haadmin -transitionToActive nn2

# check whether nn1's edits were synchronised to nn2: yes, of course, you will see the file listed there correctly

hdfs dfs -ls /

------------------

Below is a test that kills a journalnode to check the cluster's robustness.

Stopping hd1's journalnode causes the namenode on the same host (hd1) to shut down:

2014-11-12 16:45:15,102 WARN org.apache.hadoop.hdfs.qjournal.client.QuorumJournalManager: Took 10008ms to send a batch of 1 edits (17 bytes) to remote journal 192.168.1.25:8485

2014-11-12 16:45:15,102 FATAL org.apache.hadoop.hdfs.server.namenode.FSEditLog: Error: flush failed for required journal (JournalAndStream(mgr=QJM to [192.168.1.25:8485, 192.168.1.30:8485], stream=QuorumOutputStream starting at txid 261))

org.apache.hadoop.hdfs.qjournal.client.QuorumException: Got too many exceptions to achieve quorum size 2/2. 1 successful responses:

192.168.1.30:8485: null [success]

1 exceptions thrown:

192.168.1.25:8485: Call From ubuntu/192.168.1.25 to hd1:8485 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused

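Note the message: "Got too many exceptions to achieve quorum size 2/2. 1 successful responses ... 1 exceptions thrown". With only two journalnodes the write quorum is 2, so losing a single journalnode already makes the quorum unreachable and the namenode aborts.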
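The "quorum size 2/2" in that message follows from simple majority arithmetic, which also shows why an odd number of journalnodes is recommended. The helper names `quorum_size` and `tolerated_failures` below are my own; they only restate the majority rule and are not part of Hadoop.

```shell
# Majority needed among n journalnodes for an edit to be accepted.
quorum_size() {
    echo $(( $1 / 2 + 1 ))
}

# Number of journalnodes that may fail while a majority is still reachable.
tolerated_failures() {
    echo $(( ($1 - 1) / 2 ))
}

for n in 2 3 4 5; do
    echo "$n journalnodes: quorum $(quorum_size "$n"), tolerates $(tolerated_failures "$n") failure(s)"
done
```

Two journalnodes tolerate no failure at all, three tolerate one, and a fourth adds nothing over three.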

=============

2.2 auto failover

The so-called 'auto failover' is the opposite of 'manual failover': it uses a coordination system (i.e. zookeeper) to recover automatically when a namenode fails, e.g. because of hardware faults or software bugs. When such a problem occurs, HA detects that the active namenode (not the standby) has failed, and the standby nn then takes over the 'active' role.

Here are the configs needed in addition to those for manual failover:

| property to be added/altered | value | abstract |
| --- | --- | --- |
| dfs.ha.automatic-failover.enabled | true | auto failover when possible |
| ha.zookeeper.quorum | hd1:2181,hd2:2181 | yes, as you can see, both the journalnode and zookeeper roles are even in number here, but that's ok for a test |

The zkfc roles (zkfc is the zk client that runs alongside each namenode to detect failures) are assigned like this:

| host | nn | jn | dn | zkfc(new) |
| --- | --- | --- | --- | --- |
| hd1 | y | y | y | y |
| hd2 | y | y | y | y |

2.2.1 steps to startup

a. format zk (the zookeeper servers must already be running)

hdfs zkfc -formatZK

b.start all

start-dfs.sh

This starts the nn, jn, dn and zkfc processes.

Now just do whatever you want; auto failover will work properly. Enjoy!
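One way to see auto failover at work is to kill the active namenode and poll the other one until it reports 'active'. The sketch below uses only `hdfs haadmin -getServiceState`. The function name `wait_for_active`, the one-second poll interval and the default 60-second timeout are my own assumptions.

```shell
# Poll a namenode until it reports "active" or the timeout (in seconds) expires.
# Returns 0 on success and 1 on timeout.
wait_for_active() {
    nn=$1
    timeout=${2:-60}
    waited=0
    while [ "$waited" -lt "$timeout" ]; do
        if [ "$(hdfs haadmin -getServiceState "$nn" 2>/dev/null)" = "active" ]; then
            echo "$nn became active after about ${waited}s"
            return 0
        fi
        sleep 1
        waited=$((waited + 1))
    done
    echo "$nn did not become active within ${timeout}s" >&2
    return 1
}
```

For example, with nn1 active, kill its namenode process on hd1 and then run `wait_for_active nn2 60` on hd2.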

ref:

HDFS High Availability Using the Quorum Journal Manager

Hadoop 2.0 NameNode HA and Federation in Practice (Hadoop 2.0 NameNode HA和Federation实践)