
Energy Proportional Datacenter Networks

A couple of weeks back Greg Linden sent me an interesting paper called Energy Proportional Datacenter Networks. The term energy proportionality was coined by Luiz Barroso and Urs Hölzle in an excellent paper titled The Case for Energy-Proportional Computing. The core principle behind energy proportionality is that computing equipment should consume power in proportion to its utilization level. For example, a computing component that consumes N watts at full load should consume X/100*N watts when running at X% load. This may seem like an obviously important concept but, when the idea was first proposed back in 2007, it was not uncommon for a server running at 0% load to consume 80% of full load power. Even today, you can occasionally find servers that poor. The incredible difficulty of maintaining near 100% server utilization makes energy proportionality a particularly important concept.
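The proportionality definition above can be sketched with a simple linear power model. This is an assumption for illustration, not a model taken from either paper: power at 0% load is some fraction of full-load power, and the remainder scales linearly with utilization. The function name and the 150W figure are illustrative; an idle fraction of 0.0 is the ideal proportional case, and 0.8 matches the 2007-era example.

```python
def power_watts(util: float, p_max: float, idle_fraction: float) -> float:
    """Linear power model (assumed): idle draw plus a load-dependent share.

    util:          utilization in [0, 1]
    p_max:         power draw at full load, in watts
    idle_fraction: power at 0% load as a fraction of p_max
                   (0.0 means perfectly energy proportional)
    """
    if not 0.0 <= util <= 1.0:
        raise ValueError("util must be in [0, 1]")
    p_idle = idle_fraction * p_max
    return p_idle + (p_max - p_idle) * util


if __name__ == "__main__":
    # Compare an ideal proportional server with the 2007-era example (80% idle draw).
    for label, idle in [("ideal", 0.0), ("2007-era", 0.8)]:
        for util in (0.0, 0.3, 1.0):
            print(f"{label:9s} util={util:.0%}: {power_watts(util, 150.0, idle):6.1f} W")
```

At 30% load the ideal server draws 45W, while the 2007-era server still draws 129W.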

One of the wonderful aspects of our industry is that, when an attribute becomes important, we focus on it. Power consumption as a whole has become important and, as a consequence, average full load server power has been declining for the last couple of years. It is now easy to buy a commodity server under 150W. And, with energy proportionality now getting industry attention, this metric is also improving fast. Today a small percentage of the server market has just barely crossed the 50% power proportionality line, which is to say that when idle, these servers consume less than 50% of their full load power.

This is great progress that we’re all happy to see. However, it’s very clear we are never going to achieve 100% power proportionality, so raising server utilization will remain the most important lever we have in reducing wasted power. This is one of the key advantages of cloud computing. When a large number of workloads with non-correlated utilization peaks and valleys are brought together, the overall peak to trough load ratio is dramatically flattened. Looked at simplistically, aggregating workloads with dissimilar peak resource consumption levels tends to reduce the peak to trough ratio. Resources must be available for peak consumption, but utilization goes up and down with the workload, so reducing the difference between peak and trough aggregate load levels cuts costs, saves energy, and is better for the environment.
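The flattening effect described above can be shown with two made-up load profiles, one peaking by day and the other by night. The six-sample profiles and function names are hypothetical; the point is only that summing workloads whose peaks fall at different times shrinks the peak to trough ratio.

```python
def peak_to_trough(load: list[float]) -> float:
    """Ratio of the highest to the lowest load sample."""
    return max(load) / min(load)


def aggregate(*workloads: list[float]) -> list[float]:
    """Sum several workloads sample by sample (same time grid assumed)."""
    return [sum(samples) for samples in zip(*workloads)]


if __name__ == "__main__":
    # Hypothetical daily profiles: one peaks by day, the other by night.
    daytime = [10.0, 40.0, 90.0, 100.0, 50.0, 20.0]
    nighttime = [90.0, 70.0, 30.0, 10.0, 50.0, 80.0]
    combined = aggregate(daytime, nighttime)  # [100, 110, 120, 110, 100, 100]
    print(peak_to_trough(daytime))    # 10.0
    print(peak_to_trough(nighttime))  # 9.0
    print(peak_to_trough(combined))   # 1.2
```

Each workload alone swings by roughly 10x, but the aggregate needs capacity for a peak only 1.2x its trough, so far less of the provisioned capacity sits idle.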

Understanding the importance of power proportionality, it’s natural to be excited by the Energy Proportional Datacenter Networks paper. In this paper, the authors observe that “if the system is 15% utilized (servers and network) and the servers are fully power-proportional, the network will consume nearly 50% of the overall power.” Without careful reading, this could lead one to believe that network power consumption is a significant industry problem requiring immediate, top-priority action. But the statement has two problems. The first is the assumption that “full energy proportionality” is even possible. There will always be some overhead in having a server running. And we are currently so far from this 100% proportional goal that any conclusion that follows from this assumption is unrealistic and potentially misleading.

The second issue is perhaps more important: in this scenario the entire data center is running at 15% utilization. 15% utilization means that all the energy (and capital) that went into the datacenter power distribution system, all the mechanical systems, and the servers themselves is only 15% utilized. The power consumption is actually a tiny part of the problem. The real problem is that, at this utilization level, most of the resources in a nearly $100M investment are being wasted. There are many poorly utilized data centers running at 15% or worse utilization, but I argue the solution to this problem is to increase utilization. Power proportionality is very important and many of us are working hard to find ways to improve it. But power proportionality won’t reduce the importance of ensuring that datacenter resources are near fully utilized.

Just as power proportionality will never be fully realized, 100% utilization will never be realized either, so clearly we need to do both. However, it’s important to remember that the gain from increasing utilization by 10% is far more than the possible gain from improving power proportionality by 10%. Both are important, but utilization is by far the strongest lever in reducing cost and the impact of computing on the environment.
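The comparison above can be illustrated with energy per unit of useful work under an assumed linear power model, reading "10%" as 10 percentage points. The starting point (15% utilization, 50% idle draw) and the function name are illustrative choices, not figures from the paper.

```python
def energy_per_unit_work(util: float, idle_fraction: float) -> float:
    """Relative energy per unit of useful work under a linear power model.

    Power is normalized so full load = 1.0; useful work is proportional to util.
    """
    if util <= 0.0:
        raise ValueError("util must be positive")
    power = idle_fraction + (1.0 - idle_fraction) * util
    return power / util


if __name__ == "__main__":
    base = energy_per_unit_work(0.15, 0.5)         # 15% utilized, 50% idle draw
    better_prop = energy_per_unit_work(0.15, 0.4)  # proportionality improved 10 points
    better_util = energy_per_unit_work(0.25, 0.5)  # utilization raised 10 points
    print(f"+10 pts proportionality: {1 - better_prop / base:.0%} less energy per unit work")  # 15%
    print(f"+10 pts utilization:     {1 - better_util / base:.0%} less energy per unit work")  # 35%
```

This counts energy only. The capital in the facility is also amortized over utilization, so including it would strengthen the case for utilization further.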

Returning to the negative impact of networking gear on overall datacenter power consumption, let’s look more closely. It’s easy to get upset when you look at net gear power consumption. It is prodigious. For example, a Juniper EX8200 consumes nearly 10kW. That’s roughly as much power as an entire rack of servers (rack power varies greatly, but 8 to 10kW is pretty common these days). A fully configured Cisco Nexus 7000 requires 8 full 5kW circuits to be provisioned. That’s 40kW, or roughly as much power provisioned to a single network device as would be required by 4 average racks of servers. These numbers are incredibly large individually, but it’s important to remember that there really isn’t all that much networking gear in a datacenter relative to the number of servers.

To get precise, let’s build a model of the power required in a large datacenter to understand networking gear power consumption relative to the rest of the facility. In this model, we’re going to build a medium to large sized datacenter with 45,000 servers. Let’s assume these servers are operating at an industry leading 50% power proportionality and consume only 150W each at full load. This facility has a PUE of 1.5, which is to say that for every watt of power delivered to IT equipment (servers, storage and networking equipment), there is ½ watt lost in power distribution and powering mechanical systems. PUEs as low as 1.2 are possible but rare, and PUEs as poor as 3.0 are possible and unfortunately quite common. The industry average is currently 2.0, which says that in an average datacenter, for every watt delivered to the IT load, 1 watt is spent on overhead (power distribution, cooling, lighting, etc.).
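Since PUE is total facility power divided by IT power, the overhead per IT watt is simply PUE minus 1. A small sketch at the PUE values mentioned above; the function name is illustrative.

```python
def facility_power(it_load_mw: float, pue: float) -> float:
    """Total facility power implied by an IT load and a PUE (PUE = total / IT)."""
    return it_load_mw * pue


if __name__ == "__main__":
    # Overhead watts per watt of IT load at the PUE values mentioned in the text.
    for pue in (1.2, 1.5, 2.0, 3.0):
        overhead = facility_power(1.0, pue) - 1.0
        print(f"PUE {pue}: {overhead:.1f} W of overhead per IT watt")
```

At PUE 1.5 that is 0.5W of overhead per IT watt; at the 2.0 industry average it is a full watt.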

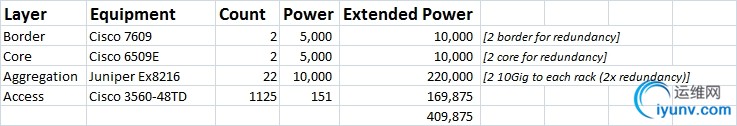

For networking, we’ll first build a conventional, over-subscribed, hierarchical network. In this design, we’ll use Cisco 3560 as a top of rack (TOR) switch. We’ll connect these TORs 2-wayredundant to Juniper Ex8216 at the aggregation layer. We’ll connect the aggregation layer to Cisco 6509E in the core were we need only 1 device for a network of this size but we use 2 for redundancy. We also have two border devices in the model:

Using these numbers as input we get the following:

· Total DC Power: 10.74MW (1.5 * IT load)

· IT Load: 7.16MW (netgear + servers and storage)

o Servers & Storage: 6.75MW (45,000 * 150W)

o Networking gear: 0.41 MW (from table above)

This would have the networking gear consuming only 3.8% of the overall power consumed by the facility (0.41/10.74). If we were running at 0% utilization, which I truly hope is far worse than anyone’s worst case today, what networking consumption would we see? Using the numbers above with the servers at 50% power proportionality (unusually good), we would have the networking gear at 7.2% of overall facility power (0.41/((6.75*0.5+0.41)*1.5)).
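The two percentages above follow from one formula: network power divided by total IT power times PUE, where idle servers under 50% proportionality draw half their full-load power and network gear is treated as constant. A minimal sketch of that arithmetic; the function and parameter names are illustrative.

```python
def network_share(net_mw: float, server_mw: float, pue: float,
                  server_load_fraction: float = 1.0) -> float:
    """Network power as a fraction of total facility power.

    net_mw:               networking gear power (treated as constant with load)
    server_mw:            servers and storage power at full load
    pue:                  facility PUE applied to the whole IT load
    server_load_fraction: server power drawn relative to full load
                          (0.5 = idle server at 50% power proportionality)
    """
    it_load = server_mw * server_load_fraction + net_mw
    return net_mw / (it_load * pue)


if __name__ == "__main__":
    servers_mw = 45_000 * 150 / 1e6  # 6.75 MW
    net_mw = 0.41                    # hierarchical network, from the table
    print(f"full load:   {network_share(net_mw, servers_mw, 1.5):.1%}")       # 3.8%
    print(f"0% utilized: {network_share(net_mw, servers_mw, 1.5, 0.5):.1%}")  # 7.2%
```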

This data argues strongly that networking is not the biggest problem, or anywhere close. We like improvements wherever we can get them, so I’ll never walk away from a cost-effective networking power solution. But networking power consumption is not even close to our biggest problem, so we should not get distracted.

What if we built a modern fat tree network using commodity high-radix networking gear along the lines alluded to in Data Center Networks are in my Way and covered in detail in the VL2 and PortLand papers? Using 48-port network devices, we would need 1,875 switches in the first tier, 79 in the next, then 4, and 1 at the root. Let’s use 4 at the root to get some additional redundancy, which puts the switch count at 1,962 devices. Each network device dissipates roughly 150W, and driving each of the 48 transceivers requires roughly 5W (a rapidly declining number). This totals 390W per 48-port device. Every device I’ve seen does better, but let’s use these numbers to ensure we are seeing the network in its worst likely form. Using these data we get:

· Total DC Power: 11.28MW

· IT Load: 7.52MW

o Servers & Storage: 6.75MW

o Networking gear: 0.77MW

Even assuming very high network power dissipation rates, with 10GigE to every host in the entire datacenter and a constant bisection bandwidth network topology that requires a very large number of devices, we still only have the network at 6.8% of overall data center power. If we assume the very worst case available today, where we have 0% utilization with 50% power proportional servers, we get 12.4% of power consumed in the network (0.77/((6.75*0.5+0.77)*1.5)).
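A minimal sketch reproducing the fat tree arithmetic. It assumes, as the tier counts above imply, that half of each 48-port switch’s ports face downward and that each tier above the first aggregates 24 switches from the tier below; the function names are hypothetical.

```python
import math


def tier_switch_counts(servers: int, ports: int = 48, root_switches: int = 4) -> list[int]:
    """Switch counts per tier, following the post's arithmetic.

    First tier: half of each switch's ports face servers. Each higher tier
    aggregates ports // 2 switches from the tier below until a single switch
    remains; that root is then replaced with `root_switches` for redundancy.
    """
    half = ports // 2
    counts = [math.ceil(servers / half)]
    while counts[-1] > 1:
        counts.append(math.ceil(counts[-1] / half))
    counts[-1] = root_switches
    return counts


def device_watts(ports: int = 48, base_w: float = 150.0, per_port_w: float = 5.0) -> float:
    """Per-switch power: fixed draw plus per-port transceiver draw."""
    return base_w + ports * per_port_w


if __name__ == "__main__":
    counts = tier_switch_counts(45_000)
    total = sum(counts)
    print(counts, total)  # [1875, 79, 4, 4] 1962
    net_mw = total * device_watts() / 1e6
    servers_mw, pue = 6.75, 1.5
    print(f"network: {net_mw:.2f} MW")  # 0.77 MW
    print(f"full load share:   {net_mw / ((servers_mw + net_mw) * pue):.1%}")
    print(f"0% utilized share: {net_mw / ((servers_mw * 0.5 + net_mw) * pue):.1%}")
```

The full-load share comes out at 6.8%. Carrying the unrounded 0.765 MW through gives about 12.3% for the idle case; the 12.4% above comes from rounding to 0.77 MW first.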

12% is clearly enough to worry about but, in order for the network to be that high, we had to be running at 0% utilization, which is to say that all the resources in the entire data center are being wasted. 0% utilization means we are wasting 100% of the servers, all the power distribution, all the mechanical systems, the entire networking system, etc. At 0% utilization, it’s not the network that is the problem. It’s the server utilization that needs attention.

Note that in the above model more than 60% of the power consumed by the networking devices goes to the per-port transceivers. We used 5W/port for these, but transceiver power is expected to drop to 3W or less over the next couple of years, so we should expect a substantial drop in network power consumption in the very near future: roughly 25% at 3W/port, and more than 30% once transceivers reach 2.5W/port.
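The transceiver figures above can be checked with the same per-device model (150W fixed plus 48 ports at a given per-port draw). The future per-port values are illustrative points within the "3W or less" range, and the function name is hypothetical.

```python
def switch_watts(ports: int, base_w: float, per_port_w: float) -> float:
    """Switch power: fixed chassis/ASIC draw plus per-port transceiver draw."""
    return base_w + ports * per_port_w


if __name__ == "__main__":
    today = switch_watts(48, 150.0, 5.0)  # 390 W
    print(f"transceiver share today: {48 * 5.0 / today:.0%}")  # 62%
    for per_port in (3.0, 2.5, 2.0):
        future = switch_watts(48, 150.0, per_port)
        print(f"{per_port} W/port: {1 - future / today:.0%} less network power")
    # 3.0 W/port: 25%, 2.5 W/port: 31%, 2.0 W/port: 37%
```

Because the 150W fixed draw does not shrink with transceiver power, the network-level saving is smaller than the per-port saving.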

Summarizing the findings: my take from this data is that it’s a rare datacenter where more than 10% of power is going to networking devices, and most datacenters will be under 5%. Power proportionality in the network is of some value, but improving server utilization is a far more powerful lever. In fact, a good argument can be made to spend more on networking gear and networking power if you can use that investment to drive up server utilization by reducing constraints on workload placement.

--jrh

James Hamilton

e: jrh@mvdirona.com

w: http://www.mvdirona.com

b: http://blog.mvdirona.com / http://perspectives.mvdirona.com

August 1, 2010 9:44:07 (Pacific Standard Time, UTC-08:00)

Hardware

August 2, 2010 4:03:24 (Pacific Standard Time, UTC-08:00)

Good point regarding the percentage of datacenter power used by networking equipment. To take your point a bit further, if you were to modify your "conventional, over-subscribed, hierarchical" example to use the Arista Networks 7508 at the aggregation layer, you could reduce the number of aggregation switches from 22 to 8 (128 vs 384 10G ports per switch) and power consumption down to ~32 kW. That would reduce the networking gear portion of the calculation from 0.41 MW to 0.22 MW, making networking gear use only 4.1% of overall facility power.

Disclosure: I previously worked for Arista Networks.

Nathan

Nathan Schrenk

August 2, 2010 4:07:02 (Pacific Standard Time, UTC-08:00)

Exactly. Getting networking power up to 10% isn't that easy, and closer to 5% is much more probable.

--jrh

jrh@mvdirona.com
James Hamilton

August 8, 2010 22:24:28 (Pacific Standard Time, UTC-08:00)

Great post. One thing I don't understand in your calculations, however: you don't charge the networking equipment for its PUE. In your first calculation, the networking equipment seems to be responsible for 1.5 * 0.41 MW. For example, if the networking equipment magically required no power at all, wouldn't the total power requirement drop by 1.5*0.41 MW, not 0.41 MW? This would raise your numbers in the various scenarios from (3.8%, 6.8%, 12.4%) to (5.7%, 10.2%, 18.6%), respectively. Your high-level point still stands, but maybe there is room for some worthwhile improvement in the network.

Brighten Godfrey

August 16, 2010 14:25:42 (Pacific Standard Time, UTC-08:00)

While 50% power proportionality is the state of the art for single servers today, virtualized cloud data centers introduce operational degrees of freedom that easily allow breaking the 50% "sound barrier": virtualized cloud provider environments allow treating groups of servers as homogeneous resource pools. During workload valleys it's possible to dynamically consolidate workloads onto fewer servers in a pool and "park" the vacated servers. We used ACPI S5 in some proof-of-concept experiments. S5 reduces power consumption to less than 10% of peak power. Hence the asymptotic value for power proportionality is about that number, certainly much better than what is achievable with one server.

Here is the Barroso analysis, generalized to encompass virtualized server pools:

http://communities.intel.com/community/openportit/server/blog/authors/egcastroleon

This subject gets a full chapter in the upcoming Intel Press book http://www.intel.com/intelpress/sum_virtn.htm.
