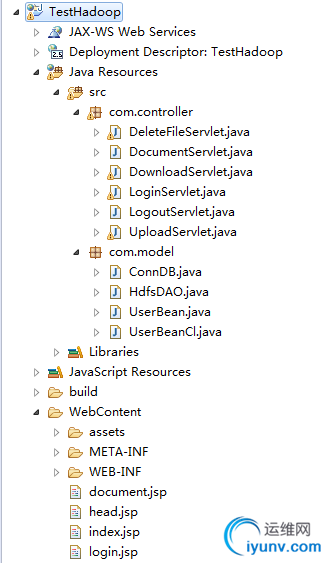

File structure

(1) Implementing the index.jsp home page

index.jsp

<%@ include file="head.jsp"%>

<%@ page language="java" contentType="text/html; charset=UTF-8"

pageEncoding="UTF-8"%>

<%@page import="org.apache.hadoop.fs.FileStatus"%>

<body style="text-align:center;margin-bottom:100px;">

<div class="navbar" >

<div class="navbar-inner">

<a class="brand" href="#" style="margin-left:200px;">网盘</a>

<ul class="nav">

<li><a href="LogoutServlet">退出</a></li>

</ul>

</div>

</div>

<div style="margin:0px auto; text-align:left;width:1200px; height:50px;">

<form class="form-inline" method="POST" enctype="MULTIPART/FORM-DATA" action="UploadServlet" >

<div style="line-height:50px;float:left;">

<input type="submit" name="submit" value="上传文件" />

</div>

<div style="line-height:50px;float:left;">

<input type="file" name="file1" size="30"/>

</div>

</form>

</div>

<div style="margin:0px auto; width:1200px;height:500px; background:#fff">

<table class="table table-hover" style="width:1000px;margin-left:100px;">

<tr style=" border-bottom:2px solid #ddd">

<td >文件名</td><td style="width:100px">类型</td><td style="width:100px;">大小</td><td style="width:100px;">操作</td><td style="width:100px;">操作</td>

</tr>

<%

FileStatus[] list = (FileStatus[])request.getAttribute("list");

if(list != null)

for (int i=0; i<list.length; i++) {

%>

<tr style="border-bottom:1px solid #eee">

<%

if(list[i].isDir())

{

out.print("<td> <a href=\"DocumentServlet?filePath="+list[i].getPath()+"\">"+list[i].getPath().getName()+"</a></td>");

}else{

out.print("<td>"+list[i].getPath().getName()+"</td>");

}

%>

<td><%= (list[i].isDir()?"目录":"文件") %></td>

<td><%= list[i].getLen()/1024%></td>

<td><a href="DeleteFileServlet?filePath=<%=java.net.URLEncoder.encode(list[i].getPath().toString(),"GB2312") %>">x</a></td>

<td><a href="DownloadServlet?filePath=<%=java.net.URLEncoder.encode(list[i].getPath().toString(),"GB2312") %>">下载</a></td>

</tr>

<%

}

%>

</table>

</div>

</body>

(2) The document.jsp file

<%@ include file="head.jsp"%>

<%@ page language="java" contentType="text/html; charset=UTF-8"

pageEncoding="UTF-8"%>

<%@page import="org.apache.hadoop.fs.FileStatus"%>

<body style="text-align:center;margin-bottom:100px;">

<div class="navbar" >

<div class="navbar-inner">

<a class="brand" href="#" style="margin-left:200px;">网盘</a>

<ul class="nav">

<li class="active"><a href="#">首页</a></li>

<li><a href="#">Link</a></li>

<li><a href="#">Link</a></li>

</ul>

</div>

</div>

<div style="margin:0px auto; text-align:left;width:1200px; height:50px;">

<form class="form-inline" method="POST" enctype="MULTIPART/FORM-DATA" action="UploadServlet" >

<div style="line-height:50px;float:left;">

<input type="submit" name="submit" value="上传文件" />

</div>

<div style="line-height:50px;float:left;">

<input type="file" name="file1" size="30"/>

</div>

</form>

</div>

<div style="margin:0px auto; width:1200px;height:500px; background:#fff">

<table class="table table-hover" style="width:1000px;margin-left:100px;">

<tr><td>文件名</td><td>属性</td><td>大小(KB)</td><td>操作</td><td>操作</td></tr>

<%

FileStatus[] list = (FileStatus[])request.getAttribute("documentList");

if(list != null)

for (int i=0; i<list.length; i++) {

%>

<tr style=" border-bottom:2px solid #ddd">

<%

if(list[i].isDir())

{

out.print("<td><a href=\"DocumentServlet?filePath="+list[i].getPath()+"\">"+list[i].getPath().getName()+"</a></td>");

}else{

out.print("<td>"+list[i].getPath().getName()+"</td>");

}

%>

<td><%= (list[i].isDir()?"目录":"文件") %></td>

<td><%= list[i].getLen()/1024%></td>

<td><a href="DeleteFileServlet?filePath=<%=java.net.URLEncoder.encode(list[i].getPath().toString(),"GB2312") %>">x</a></td>

<td><a href="DownloadServlet?filePath=<%=java.net.URLEncoder.encode(list[i].getPath().toString(),"GB2312") %>">下载</a></td>

</tr>

<%

}

%>

</table>

</div>

</body>

</html>
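
Both pages print the size as list[i].getLen()/1024, which is integer division, so any file smaller than 1 KB shows up as 0. The following is a minimal sketch of a formatting helper that could be called from the JSP instead. The class name FileSizes and the method format are hypothetical and are not part of the original project.

```java
import java.util.Locale;

// Hypothetical helper, not part of the original source code.
class FileSizes {

    // Turns a byte count into a short human-readable string.
    static String format(long bytes) {
        if (bytes < 1024) {
            return bytes + " B";
        }
        String[] units = {"KB", "MB", "GB", "TB"};
        double value = bytes;
        int unit = -1;
        // Divide by 1024 until the value fits the current unit
        // or the largest unit has been reached.
        while (value >= 1024 && unit < units.length - 1) {
            value /= 1024;
            unit++;
        }
        return String.format(Locale.ROOT, "%.1f %s", value, units[unit]);
    }

    public static void main(String[] args) {
        System.out.println(format(512));
        System.out.println(format(1536));
        System.out.println(format(50L * 1024 * 1024));
    }
}
```

In the table cell this would read <%= FileSizes.format(list[i].getLen()) %>, assuming the class is placed in a package and imported by the page.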

(3) The DeleteFileServlet file

package com.controller;

import java.io.IOException;

import javax.servlet.ServletException;

import javax.servlet.http.HttpServlet;

import javax.servlet.http.HttpServletRequest;

import javax.servlet.http.HttpServletResponse;

import org.apache.hadoop.fs.FileStatus;

import org.apache.hadoop.mapred.JobConf;

import com.model.HdfsDAO;

/**

* Servlet implementation class DeleteFileServlet

*/

public class DeleteFileServlet extends HttpServlet {

/**

* @see HttpServlet#doGet(HttpServletRequest request, HttpServletResponse response)

*/

protected void doGet(HttpServletRequest request, HttpServletResponse response) throws ServletException, IOException {

String filePath = new String(request.getParameter("filePath").getBytes("ISO-8859-1"),"GB2312");

JobConf conf = HdfsDAO.config();

HdfsDAO hdfs = new HdfsDAO(conf);

hdfs.rmr(filePath);

System.out.println("===="+filePath+"====");

FileStatus[] list = hdfs.ls("/user/root/");

request.setAttribute("list",list);

request.getRequestDispatcher("index.jsp").forward(request,response);

}

/**

* @see HttpServlet#doPost(HttpServletRequest request, HttpServletResponse response)

*/

protected void doPost(HttpServletRequest request, HttpServletResponse response) throws ServletException, IOException {

this.doGet(request, response);

}

}
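
The JSP pages encode filePath with URLEncoder and "GB2312", and the servlets recover it with new String(param.getBytes("ISO-8859-1"), "GB2312"). That pairing only makes sense if the servlet container decodes query strings as ISO-8859-1, which was the default in older Tomcat versions; that is an assumption here, since the original does not say which container settings were used. The sketch below simulates the round trip with the standard library only. The class name, the method names, and the sample path are hypothetical, and the Charset overloads of URLEncoder and URLDecoder require Java 10 or later.

```java
import java.net.URLDecoder;
import java.net.URLEncoder;
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

// Hypothetical demo class, not part of the original source code.
class FilePathEncodingDemo {

    static final Charset GB2312 = Charset.forName("GB2312");

    // What the JSP does when it builds the link.
    static String encodeForLink(String hdfsPath) {
        return URLEncoder.encode(hdfsPath, GB2312);
    }

    // Simulates a container that decodes query strings as ISO-8859-1
    // (assumption: this matches the container used by the original project).
    static String containerDecode(String queryValue) {
        return URLDecoder.decode(queryValue, StandardCharsets.ISO_8859_1);
    }

    // What the servlets do with request.getParameter("filePath").
    static String recover(String parameter) {
        return new String(parameter.getBytes(StandardCharsets.ISO_8859_1), GB2312);
    }

    public static void main(String[] args) {
        // Sample path with two Chinese characters, written as Unicode escapes.
        String original = "/user/root/\u6587\u4ef6.txt";
        String mangled = containerDecode(encodeForLink(original));
        System.out.println(mangled.equals(original));          // mangled by the container
        System.out.println(recover(mangled).equals(original)); // restored by the servlet
    }
}
```

If the container is configured to decode query strings with the same charset that the page used for encoding, the extra getBytes conversion is unnecessary and would corrupt the value instead.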

(4) The UploadServlet file

package com.controller;

import java.io.File;

import java.io.IOException;

import java.util.Iterator;

import java.util.List;

import javax.servlet.ServletContext;

import javax.servlet.ServletException;

import javax.servlet.http.HttpServlet;

import javax.servlet.http.HttpServletRequest;

import javax.servlet.http.HttpServletResponse;

import javax.servlet.jsp.PageContext;

import org.apache.commons.fileupload.DiskFileUpload;

import org.apache.commons.fileupload.FileItem;

import org.apache.commons.fileupload.disk.DiskFileItemFactory;

import org.apache.commons.fileupload.servlet.ServletFileUpload;

import org.apache.hadoop.fs.FileStatus;

import org.apache.hadoop.mapred.JobConf;

import com.model.HdfsDAO;

/**

* Servlet implementation class UploadServlet

*/

public class UploadServlet extends HttpServlet {

/**

* @see HttpServlet#doGet(HttpServletRequest request, HttpServletResponse response)

*/

protected void doGet(HttpServletRequest request, HttpServletResponse response) throws ServletException, IOException {

this.doPost(request, response);

}

/**

* @see HttpServlet#doPost(HttpServletRequest request, HttpServletResponse response)

*/

protected void doPost(HttpServletRequest request, HttpServletResponse response) throws ServletException, IOException {

request.setCharacterEncoding("UTF-8");

File file ;

int maxFileSize = 50 * 1024 *1024; //50M

int maxMemSize = 50 * 1024 *1024; //50M

ServletContext context = getServletContext();

String filePath = context.getInitParameter("file-upload");

System.out.println("source file path:"+filePath+"");

// Check the content type of the upload

String contentType = request.getContentType();

if (contentType != null && contentType.indexOf("multipart/form-data") >= 0) {

DiskFileItemFactory factory = new DiskFileItemFactory();

// Set the maximum size that is kept in memory

factory.setSizeThreshold(maxMemSize);

// Data larger than maxMemSize is stored on the local disk

factory.setRepository(new File("c:\\temp"));

// Create a new file upload handler

ServletFileUpload upload = new ServletFileUpload(factory);

// Set the maximum size of an upload

upload.setSizeMax( maxFileSize );

try{

// Parse the request to get the uploaded items

List fileItems = upload.parseRequest(request);

// Process the uploaded items

Iterator i = fileItems.iterator();

System.out.println("begin to upload file to tomcat server");

while ( i.hasNext () )

{

FileItem fi = (FileItem)i.next();

if ( !fi.isFormField () )

{

// Get the parameters of the uploaded file

String fieldName = fi.getFieldName();

String fileName = fi.getName();

String fn = fileName.substring( fileName.lastIndexOf("\\")+1);

System.out.println(fn);

boolean isInMemory = fi.isInMemory();

long sizeInBytes = fi.getSize();

// Write the file under its bare name (fn), without any client-side directory prefix

file = new File( filePath , fn ) ;

fi.write( file ) ;

System.out.println("upload file to tomcat server success!");

System.out.println("begin to upload file to hadoop hdfs");

// Upload the file from the Tomcat server to Hadoop HDFS

String username = (String) request.getSession().getAttribute("username");

JobConf conf = HdfsDAO.config();

HdfsDAO hdfs = new HdfsDAO(conf);

hdfs.copyFile(filePath+"\\"+fn, "/"+username+"/"+fn);

System.out.println("upload file to hadoop hdfs success!");

System.out.println("username-----"+username);

FileStatus[] list = hdfs.ls("/"+username);

request.setAttribute("list",list);

request.getRequestDispatcher("index.jsp").forward(request, response);

}

}

}catch(Exception ex) {

System.out.println(ex);

}

}else{

System.out.println("No file uploaded");

}

}

}
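
The servlet strips the client-side directory from the uploaded file name by looking for a backslash only, which covers browsers that send a full Windows path. A slightly more defensive sketch that also handles forward slashes and a missing name is shown below. The class UploadFileNames and its method baseName are hypothetical names introduced for illustration.

```java
// Hypothetical helper, not part of the original source code.
class UploadFileNames {

    // Returns the part of the name after the last '\' or '/',
    // or an empty string when no name was supplied.
    static String baseName(String clientFileName) {
        if (clientFileName == null) {
            return "";
        }
        int cut = Math.max(clientFileName.lastIndexOf('\\'),
                           clientFileName.lastIndexOf('/'));
        return clientFileName.substring(cut + 1);
    }

    public static void main(String[] args) {
        System.out.println(baseName("C:\\Users\\me\\report.txt"));
        System.out.println(baseName("/home/me/report.txt"));
        System.out.println(baseName("report.txt"));
    }
}
```

In the servlet this could replace the substring call, for example String fn = UploadFileNames.baseName(fi.getName());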

(5) The DownloadServlet file

package com.controller;

import java.io.BufferedInputStream;

import java.io.BufferedOutputStream;

import java.io.File;

import java.io.FileInputStream;

import java.io.IOException;

import java.io.InputStream;

import javax.servlet.ServletException;

import javax.servlet.ServletOutputStream;

import javax.servlet.http.HttpServlet;

import javax.servlet.http.HttpServletRequest;

import javax.servlet.http.HttpServletResponse;

import org.apache.hadoop.fs.FileStatus;

import org.apache.hadoop.mapred.JobConf;

import com.model.HdfsDAO;

/**

* Servlet implementation class DownloadServlet

*/

public class DownloadServlet extends HttpServlet {

private static final long serialVersionUID = 1L;

/**

* @see HttpServlet#doGet(HttpServletRequest request, HttpServletResponse response)

*/

protected void doGet(HttpServletRequest request, HttpServletResponse response) throws ServletException, IOException {

String local = "C:/";

String filePath = new String(request.getParameter("filePath").getBytes("ISO-8859-1"),"GB2312");

System.out.println(filePath);

JobConf conf = HdfsDAO.config();

HdfsDAO hdfs = new HdfsDAO(conf);

hdfs.download(filePath, local);

FileStatus[] list = hdfs.ls("/user/root/");

request.setAttribute("list",list);

request.getRequestDispatcher("index.jsp").forward(request,response);

}

/**

* @see HttpServlet#doPost(HttpServletRequest request, HttpServletResponse response)

*/

protected void doPost(HttpServletRequest request, HttpServletResponse response) throws ServletException, IOException {

this.doGet(request, response);

}

}
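
As written, the servlet passes the local target "C:/" to hdfs.download, so the file appears to land on the machine running Tomcat rather than in the user's browser. The unused stream imports suggest that streaming to the response was intended. Below is a minimal sketch of the copy loop such a version would need, using only the standard library. The class StreamCopy is hypothetical; in a servlet, the output stream would typically be response.getOutputStream(), and the input stream would have to come from HDFS (for example via Hadoop's FileSystem.open), which HdfsDAO as shown here does not expose.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;

// Hypothetical helper, not part of the original source code.
class StreamCopy {

    // Copies everything from in to out through a fixed-size buffer and
    // returns the number of bytes copied. The caller closes both streams.
    static long copy(InputStream in, OutputStream out) throws IOException {
        byte[] buffer = new byte[8192];
        long total = 0;
        int read;
        while ((read = in.read(buffer)) != -1) {
            out.write(buffer, 0, read);
            total += read;
        }
        out.flush();
        return total;
    }

    public static void main(String[] args) throws IOException {
        // In-memory streams stand in for the HDFS input and the HTTP response.
        byte[] data = "hello hdfs".getBytes(StandardCharsets.UTF_8);
        ByteArrayOutputStream sink = new ByteArrayOutputStream();
        long copied = copy(new ByteArrayInputStream(data), sink);
        System.out.println(copied + " bytes copied");
    }
}
```

A browser download would also need a Content-Disposition response header with the file name, set before any bytes are written.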

(6) The DocumentServlet file

package com.controller;

import java.io.IOException;

import javax.servlet.ServletException;

import javax.servlet.http.HttpServlet;

import javax.servlet.http.HttpServletRequest;

import javax.servlet.http.HttpServletResponse;

import org.apache.hadoop.fs.FileStatus;

import org.apache.hadoop.mapred.JobConf;

import com.model.HdfsDAO;

/**

* Servlet implementation class DocumentServlet

*/

public class DocumentServlet extends HttpServlet {

private static final long serialVersionUID = 1L;

/**

* @see HttpServlet#doGet(HttpServletRequest request, HttpServletResponse response)

*/

protected void doGet(HttpServletRequest request, HttpServletResponse response) throws ServletException, IOException {

String filePath = new String(request.getParameter("filePath").getBytes("ISO-8859-1"),"GB2312");

JobConf conf = HdfsDAO.config();

HdfsDAO hdfs = new HdfsDAO(conf);

FileStatus[] documentList = hdfs.ls(filePath);

request.setAttribute("documentList",documentList);

request.getRequestDispatcher("document.jsp").forward(request,response);

}

/**

* @see HttpServlet#doPost(HttpServletRequest request, HttpServletResponse response)

*/

protected void doPost(HttpServletRequest request, HttpServletResponse response) throws ServletException, IOException {

this.doGet(request, response);

}

}

(7) The LogoutServlet file

package com.controller;

import java.io.IOException;

import javax.servlet.ServletException;

import javax.servlet.http.HttpServlet;

import javax.servlet.http.HttpServletRequest;

import javax.servlet.http.HttpServletResponse;

import javax.servlet.http.HttpSession;

/**

* Servlet implementation class LogoutServlet

*/

public class LogoutServlet extends HttpServlet {

private static final long serialVersionUID = 1L;

/**

* @see HttpServlet#doGet(HttpServletRequest request, HttpServletResponse response)

*/

protected void doGet(HttpServletRequest request, HttpServletResponse response) throws ServletException, IOException {

HttpSession session = request.getSession();

session.removeAttribute("username");

request.getRequestDispatcher("login.jsp").forward(request, response);

}

/**

* @see HttpServlet#doPost(HttpServletRequest request, HttpServletResponse response)

*/

protected void doPost(HttpServletRequest request, HttpServletResponse response) throws ServletException, IOException {

this.doGet(request, response);

}

}

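That completes a simple Hadoop-based network disk application. To make it behave more like a real network disk, you can spend more time implementing the remaining features.

Source code download: http://download.csdn.net/detail/wen294299195/7779949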
