(1).

When running the following command:

% echo "Text" | hadoop StreamCompressor org.apache.hadoop.io.compress.GzipCodec | gunzip -

Text

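Because this file was compiled in Eclipse, the class sits in a custom package and its path is ch04/StreamCompressor. If you change into the ch04 directory and run the command directly, it fails with the following error: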

Exception in thread "main" java.lang.NoClassDefFoundError: StreamCompressor (wrong name: ch04/StreamCompressor)

You need to change to the parent directory of ch04 and run the following command instead:

% echo "Text" | hadoop ch04.StreamCompressor org.apache.hadoop.io.compress.GzipCodec | gunzip -

Also remember to re-export HADOOP_CLASSPATH so that it points to the parent directory of ch04.
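The rule behind this fix is that the class name passed to hadoop must mirror the class file's path relative to the classpath root. Below is a minimal sketch of that mapping. CLASS_ROOT and the helper fqcn_from_path are hypothetical names used only for illustration, and the default path is an assumption about where Eclipse wrote the compiled classes.

```shell
# Hypothetical path: the directory that CONTAINS ch04/, not ch04 itself.
CLASS_ROOT="${CLASS_ROOT:-$HOME/workspace/hadoop-notes/bin}"
export HADOOP_CLASSPATH="$CLASS_ROOT"

# Turn a class file path (relative to CLASS_ROOT) into the fully
# qualified class name that the hadoop launcher expects.
fqcn_from_path() {
  printf '%s\n' "${1%.class}" | tr '/' '.'
}

fqcn_from_path ch04/StreamCompressor.class   # prints ch04.StreamCompressor

# With hadoop installed, the command from the text then becomes:
# echo "Text" | hadoop "$(fqcn_from_path ch04/StreamCompressor.class)" \
#   org.apache.hadoop.io.compress.GzipCodec | gunzip -
```

Because HADOOP_CLASSPATH now points at the package root, the JVM can resolve ch04.StreamCompressor to ch04/StreamCompressor.class under it.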

(2).

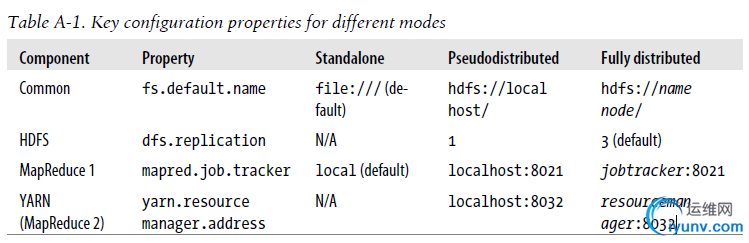

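Whether Hadoop runs in standalone mode or pseudo-distributed mode is decided by three configuration files in the conf directory: core-site.xml, hdfs-site.xml, and mapred-site.xml. To switch modes, edit these three files and then run hadoop namenode -format. In pseudo-distributed mode you also have to run start-dfs.sh and start-mapred.sh, or start-all.sh. Standalone mode needs none of these steps; if you run them anyway, logs/*.log will report an error like "does not contain a valid host port authority:localhost".

When learning Hadoop or developing an application, it is best to run in standalone mode first and move to a full cluster only once the application works there. Otherwise you have to copy files to and from HDFS every time, which is tedious.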

(3).

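I just noticed that after running start-all.sh, no datanode had started on the slaves. The logs/***.log files on the slaves showed this error: java.io.IOException: Incompatible namespaceIDs in /home/admin/joe.wangh/hadoop/data/dfs.data.dir: namenode namespaceID = 898136669; datanode namespaceID = 2127444065.

The cause: every namenode format generates a new namespaceID, while tmp/dfs/data (or the directory named by the dfs.data.dir property, if you set it in hdfs-site.xml) still holds the ID from the previous format. Formatting clears the namenode's data but not the datanodes' data, so the datanodes fail at startup. To avoid this, clear all directories under tmp before each format (or first stop the distributed file system with the stop-all.sh script). If the mismatch has already happened, the following workaround can rescue the datanodes: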

Updating namespaceID of problematic datanodes

Big thanks to Jared Stehler for the following suggestion. I have not tested it myself yet, but feel free to try it out and send me your feedback. This workaround is "minimally invasive" as you only have to edit one file on the problematic datanodes:

1. stop the datanode

2. edit the value of namespaceID in /current/VERSION to match the value of the current namenode

3. restart the datanode

If you followed the instructions in my tutorials, the full path of the relevant file is /usr/local/hadoop-datastore/hadoop-hadoop/dfs/data/current/VERSION (background: dfs.data.dir is by default set to ${hadoop.tmp.dir}/dfs/data, and we set hadoop.tmp.dir to /usr/local/hadoop-datastore/hadoop-hadoop).

If you wonder what the contents of VERSION look like, here's one of mine:

#contents of /current/VERSION

namespaceID=393514426

storageID=DS-1706792599-10.10.10.1-50010-1204306713481

cTime=1215607609074

storageType=DATA_NODE

layoutVersion=-13
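Step 2 of the workaround above can be sketched as a small shell helper. This is a minimal sketch, not a tested recovery tool: the function name set_namespace_id is hypothetical, and the path of the VERSION file has to be supplied by you, since it depends on your dfs.data.dir setting. The helper keeps a backup copy before rewriting the file.

```shell
# set_namespace_id VERSION_FILE NEW_ID
# Rewrites the namespaceID line of a datanode VERSION file.
# Hypothetical helper; stop the datanode before calling it.
set_namespace_id() {
  version_file="$1"
  new_id="$2"
  # Keep the original so the change can be undone.
  cp "$version_file" "$version_file.bak" || return 1
  # Replace only the namespaceID line; every other line is copied as-is.
  sed "s/^namespaceID=.*/namespaceID=$new_id/" "$version_file.bak" > "$version_file"
}

# Usage on a problematic datanode, with the namenode ID from the error above:
#   set_namespace_id /path/to/dfs/data/current/VERSION 898136669
```

Stopping the datanode first and restarting it afterwards (steps 1 and 3) are left out of the helper on purpose, so that it only ever touches the one file.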

(4).

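In Eclipse's Map/Reduce Location settings, the default ports for DFS Master and Map/Reduce Master are 50040 and 50020. These differ from the values used in Hadoop's configuration files, core-site.xml (fs.default.name) and mapred-site.xml (mapred.job.tracker), which are 8020 and 8021 respectively, so remember to change them.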

(5).

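When attaching the Hadoop source code in Eclipse, if you have no source JAR you can add the source folder through "Add External Folder" instead. Make sure the folder you add is the one that contains the org directory.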

(6).

The local job runner uses a single JVM to run a job, so as long as all the classes that your job needs are on its classpath, then things will just work.

In a distributed setting, things are a little more complex. For a start, a job’s classes must be packaged into a job JAR file to send to the cluster. Hadoop will find the job JAR automatically by searching for the JAR on the driver’s classpath that contains the class set in the setJarByClass() method (on JobConf or Job). Alternatively, if you want to set an explicit JAR file by its file path, you can use the setJar() method.

(7). Classpath

The client classpath

The user’s client-side classpath set by hadoop jar is made up of:

· The job JAR file

· Any JAR files in the lib directory of the job JAR file, and the classes directory (if present)

· The classpath defined by HADOOP_CLASSPATH, if set

Incidentally, this explains why you have to set HADOOP_CLASSPATH to point to dependent classes and libraries if you are running using the local job runner without a job JAR (hadoop CLASSNAME).

The task classpath

On a cluster (and this includes pseudodistributed mode), map and reduce tasks run in separate JVMs, and their classpaths are not controlled by HADOOP_CLASSPATH.

HADOOP_CLASSPATH is a client-side setting and only sets the classpath for the driver JVM, which submits the job.

Instead, the user’s task classpath is comprised of the following:

· The job JAR file

· Any JAR files contained in the lib directory of the job JAR file, and the classes directory (if present)

· Any files added to the distributed cache, using the -libjars option, or the addFileToClassPath() method on DistributedCache (old API), or Job (new API)

Packaging dependencies

Given these different ways of controlling what is on the client and task classpaths, there are corresponding options for including library dependencies for a job.

· Unpack the libraries and repackage them in the job JAR.

· Package the libraries in the lib directory of the job JAR.

· Keep the libraries separate from the job JAR, and add them to the client classpath via HADOOP_CLASSPATH and to the task classpath via -libjars.
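The third option needs the same list of libraries in two different formats: HADOOP_CLASSPATH is a colon-separated classpath, while -libjars takes a comma-separated list. The sketch below builds both from one list. The helper join_paths, the JAR names, and the driver name in the final comment are all hypothetical, and -libjars is only honoured when the driver parses generic options (for example through ToolRunner).

```shell
# join_paths SEP PATH...
# Joins the given paths with the separator SEP (hypothetical helper).
join_paths() {
  sep="$1"
  shift
  out=""
  for p in "$@"; do
    if [ -z "$out" ]; then out="$p"; else out="$out$sep$p"; fi
  done
  printf '%s\n' "$out"
}

# Hypothetical dependency JARs; substitute your own.
LIB_A="lib/dep-a.jar"
LIB_B="lib/dep-b.jar"

# Client classpath (driver JVM): colon-separated.
export HADOOP_CLASSPATH="$(join_paths : "$LIB_A" "$LIB_B")"
# Task classpath (map and reduce JVMs): comma-separated for -libjars.
LIBJARS="$(join_paths , "$LIB_A" "$LIB_B")"

# hadoop jar my-job.jar MyDriver -libjars "$LIBJARS" input output
```

Keeping one source list avoids the common mistake of adding a library to the client classpath but forgetting it on the task side, or the reverse.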

(8).

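In Hadoop, each task occupies one slot on a tasktracker. The total numbers of mapper slots and reducer slots in the system are calculated as follows:

total mapper slots = number of cluster nodes × mapred.tasktracker.map.tasks.maximum

total reducer slots = number of cluster nodes × mapred.tasktracker.reduce.tasks.maximum

Setting the number of reducers slightly below the total number of reducer slots in the cluster lets all reduce tasks run at the same time and leaves spare slots to absorb a few failed reduce tasks.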
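As a worked example of the slot formulas, the sketch below multiplies the node count by the per-node maximum. The function name total_slots and the figures (10 nodes, 2 reduce slots per node, 2 slots kept spare) are illustrative assumptions, not recommended settings.

```shell
# total_slots NODES PER_NODE_MAX
# NODES        = number of tasktracker nodes in the cluster
# PER_NODE_MAX = mapred.tasktracker.map.tasks.maximum or
#                mapred.tasktracker.reduce.tasks.maximum
total_slots() {
  echo $(( $1 * $2 ))
}

# Hypothetical cluster: 10 nodes with 2 reduce slots each.
REDUCER_SLOTS="$(total_slots 10 2)"

# Leave a couple of slots spare so a failed reduce task can be rerun
# without waiting for a second wave.
REDUCERS=$(( REDUCER_SLOTS - 2 ))

echo "reducer slots: $REDUCER_SLOTS, reducers to request: $REDUCERS"
```

How many slots to hold back is a judgment call; the point is only that the requested reducer count stays below the computed total.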

(9).

If the namenode fails to start, you can stop Hadoop first (stop-all.sh), then format HDFS with hadoop namenode -format (note: you will lose all data on HDFS), and then start Hadoop again (start-all.sh).

(10).

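When formatting the namenode in a cluster (hadoop namenode -format), the command has no effect if Hadoop is already running. If Hadoop is not running (and the command is run only on the master), the namespaceID on the master will differ from the one on the slaves, because only the master's has changed, and the cluster will fail to start properly the next time.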
