|

|

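The internet is full of articles and blog posts explaining how to configure multi-NIC bonding on Linux, but reading about it is no substitute for doing it. On top of that, when I reinstalled an HP DL585 a few days ago and set up bonding on it, the configuration looked fine to me yet the bond simply would not come up. Our department happened to have an idle machine with three NICs, so yesterday afternoon I used some spare time to test and verify bonding mode 4 and mode 1 hands-on. For the other modes and the differences between them, please search and read up on your own.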

The hands-on notes are split into two parts: an Ubuntu environment and a RHEL environment.

Test environment (1): multi-NIC bonding on Ubuntu

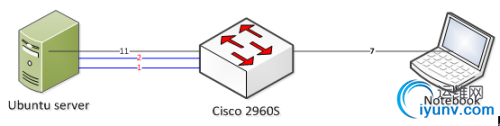

1、 网络拓扑结构图如下:

Note: the numbers on the links correspond to switch ports Gi1/0/1, Gi1/0/2, Gi1/0/7, and Gi1/0/11. By default all ports are in VLAN 1.

IP addressing plan:

C2960S VLAN1 management IP: 192.168.4.1/255.255.255.0

Notebook IP: 192.168.4.87/255.255.255.0

ubuntu-desktop eth0 IP: 192.168.4.100/255.255.255.0 (management IP, used for remote SSH access)

bond0 (eth1, eth2) IP: 192.168.4.200/255.255.255.0

2. Ubuntu system information:

root@ubuntu-desktop:~# cat /etc/issue

Ubuntu 12.04.4 LTS \n \l

root@ubuntu-desktop:~# uname -r

3.2.0-35-generic

root@ubuntu-desktop:~# uname -a

Linux ubuntu-desktop 3.2.0-35-generic #55-Ubuntu SMP Wed Dec 5 17:42:16 UTC 2012 x86_64 x86_64 x86_64 GNU/Linux

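3. Configuring multi-NIC bonding on Ubuntu

With the machine connected to the internet, install ifenslave (skip this step if it is already installed):

root@ubuntu-desktop:~# apt-get install ifenslave

Edit the interface configuration as follows: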

root@ubuntu-desktop:~# cat /etc/network/interfaces

auto lo eth0 eth1 eth2

iface lo inet loopback

iface eth1 inet manual

iface eth2 inet manual

auto bond0

iface bond0 inet static

address 192.168.4.200

netmask 255.255.255.0

gateway 192.168.4.1

up ifenslave bond0 eth1 eth2

down ifenslave -d bond0 eth1 eth2

Load the bonding module:

root@ubuntu-desktop:~# modprobe bonding

Set the bonding mode and the link-monitoring interval as follows:

root@ubuntu-desktop:~# vi /etc/modules

Add:

bonding mode=4 miimon=100
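Once the module is loaded with these options, the values the driver actually uses can be read back from sysfs. The following is a minimal sketch, assuming the bond is named bond0; the helper name `bond_param` and its optional third argument (an alternate sysfs root, useful only for dry runs) are hypothetical.

```shell
# Read one bonding parameter from sysfs.
# Usage: bond_param <bond> <param> [sysfs_root]
# The third argument defaults to /sys; it is a hypothetical convenience
# so the helper can be pointed at a copy of the tree.
bond_param() {
    cat "${3:-/sys}/class/net/$1/bonding/$2"
}

# Example, on a host where bond0 already exists:
#   bond_param bond0 mode
#   bond_param bond0 miimon
```

If the reported mode is not the one set in /etc/modules, the module was most likely loaded before the options were added and needs to be reloaded.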

4. Switch configuration:

test_sw#conf t

interface Port-channel1

description link_to_ubuntu_server_bind

switchport mode access

interface GigabitEthernet1/0/1

switchport mode access

channel-group 1 mode active

interface GigabitEthernet1/0/2

switchport mode access

channel-group 1 mode active

interface GigabitEthernet1/0/7

description link_to_notebook

switchport mode access

interface GigabitEthernet1/0/11

description link_to_ubuntu_server_mgmt

switchport mode access

interface Vlan1

ip address 192.168.4.1 255.255.255.0

test_sw#sh vlan

VLAN Name Status Ports

---- -------------------------------- --------- -------------------------------

1 default active Gi1/0/3, Gi1/0/4, Gi1/0/5

Gi1/0/6, Gi1/0/7, Gi1/0/8

Gi1/0/9, Gi1/0/10, Gi1/0/11

(other ports omitted...)

Gi1/0/51, Gi1/0/52, Po1

After configuring the switch, restart networking on the server:

root@ubuntu-desktop:~# /etc/init.d/networking restart

5. Verifying bonding on the Ubuntu interfaces and the switch ports

root@ubuntu-desktop:~# cat /proc/net/bonding/bond0

Ethernet Channel Bonding Driver: v3.7.1 (April 27, 2011)

Bonding Mode: IEEE 802.3ad Dynamic link aggregation

Transmit Hash Policy: layer2 (0)

MII Status: up

MII Polling Interval (ms): 100

Up Delay (ms): 0

Down Delay (ms): 0

802.3ad info

LACP rate: slow

Min links: 0

Aggregator selection policy (ad_select): stable

Active Aggregator Info:

Aggregator ID: 1

Number of ports: 2

Actor Key: 17

Partner Key: 1

Partner Mac Address: f0:9e:63:f2:ec:80

Slave Interface: eth1

MII Status: up

Speed: 1000 Mbps

Duplex: full

Link Failure Count: 0

Permanent HW addr: 00:11:0a:57:5f:4e

Aggregator ID: 1

Slave queue ID: 0

Slave Interface: eth2

MII Status: up

Speed: 1000 Mbps

Duplex: full

Link Failure Count: 0

Permanent HW addr: 00:11:0a:57:5f:4f

Aggregator ID: 1

Slave queue ID: 0

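Note: the text shown in green in the original output indicates that bonding mode 4 succeeded. Both slaves share Aggregator ID 1, and the active aggregator reports two ports.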
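The manual check on the status output above (every slave in the same aggregator as the active one) can be scripted. This is a rough sketch only: the function name `same_aggregator` is made up for illustration, and it assumes the text layout shown above, where the first "Aggregator ID" line belongs to the "Active Aggregator Info" section.

```shell
# Succeeds if every slave's "Aggregator ID" equals the active aggregator's ID.
# Reads /proc/net/bonding/<bond> style text on stdin.
same_aggregator() {
    awk '
        /^Slave Interface:/ { in_slave = 1; slaves++ }
        /Aggregator ID:/ {
            id = $NF
            if (!in_slave) active = id      # line from "Active Aggregator Info"
            else if (id != active) bad++    # slave sits in a different aggregator
        }
        END {
            if (slaves > 0 && active != "" && bad == 0) exit 0
            exit 1
        }
    '
}

# Example:
#   same_aggregator < /proc/net/bonding/bond0 && echo "all slaves aggregated"
```

A slave that reports a different aggregator ID usually means LACP did not negotiate on that link, so the switch side is the first place to look.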

test_sw#sh int status    (output for other ports omitted)

Port Name Status Vlan Duplex Speed Type

Gi1/0/1 connected 1 a-full a-1000 10/100/1000BaseTX

Gi1/0/2 connected 1 a-full a-1000 10/100/1000BaseTX

Gi1/0/7 link_to_notebook connected 1 a-full a-1000 10/100/1000BaseTX

Gi1/0/11 link_to_ubuntu_ser connected 1 a-full a-1000 10/100/1000BaseTX

Po1 link_to_ubuntu_ser connected 1 a-full a-1000

test_sw#sh int port-channel 1

Port-channel1 is up, line protocol is up (connected)

Hardware is EtherChannel, address is f09e.63f2.ec82 (bia f09e.63f2.ec82)

Description: link_to_ubuntu_server_bind

MTU 1500 bytes, BW 2000000 Kbit, DLY 10 usec,

reliability 255/255, txload 1/255, rxload 1/255

Encapsulation ARPA, loopback not set

Keepalive set (10 sec)

Full-duplex, 1000Mb/s, link type is auto, media type is unknown

input flow-control is off, output flow-control is unsupported

Members in this channel: Gi1/0/1 Gi1/0/2

ARP type: ARPA, ARP Timeout 04:00:00

Last input never, output 00:00:00, output hang never

Last clearing of "show interface" counters never

Input queue: 0/75/0/0 (size/max/drops/flushes); Total output drops: 0

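Note: the port aggregation succeeded. The port-channel bandwidth is now 2 Gbit/s (BW 2000000 Kbit), so the bond provides more aggregate bandwidth as well as load sharing.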
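How the load is shared depends on the transmit hash policy, which the bond reports as layer2. The sketch below is a simplified model of that policy, assuming the formula from the kernel bonding documentation (source MAC XOR destination MAC, modulo the slave count) evaluated on the last octet only; the function name and the peer MAC addresses in the example are hypothetical.

```shell
# Simplified model of the layer2 transmit hash: XOR the last octet of the
# source and destination MAC addresses, then take the result modulo the
# number of slaves. Prints the index of the slave that would transmit.
# Usage: layer2_slave <src_mac> <dst_mac> <slave_count>
layer2_slave() {
    src_last=${1##*:}    # last octet of the source MAC, e.g. 4e
    dst_last=${2##*:}    # last octet of the destination MAC
    echo $(( (0x$src_last ^ 0x$dst_last) % $3 ))
}

# Example with the bond's MAC and two made-up peers:
#   layer2_slave 00:11:0a:57:5f:4e 00:00:00:00:00:80 2
#   layer2_slave 00:11:0a:57:5f:4e 00:00:00:00:00:81 2
```

Under this model every frame between the same two MAC addresses leaves on the same slave, so a single peer is unlikely to see more than 1 Gbit/s; the 2 Gbit/s figure is aggregate capacity across several peers.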

6. Verifying and testing bonding mode 1 (mode 1 needs no switch support)

root@ubuntu-desktop:~# vi /etc/modules

Change the line to:

bonding mode=1 miimon=100

test_sw#conf t

test_sw(config)#interface range gi 1/0/1 - 2

test_sw(config-if-range)#no channel-group 1 mode active

test_sw(config-if-range)#end

test_sw#sh run int gi 1/0/1

interface GigabitEthernet1/0/1

 switchport mode access

test_sw#sh run int gi 1/0/2

interface GigabitEthernet1/0/2

 switchport mode access

test_sw#sh ip int bri

Interface IP-Address OK? Method Status Protocol

Vlan1 192.168.4.1 YES manual up up

GigabitEthernet1/0/1 unassigned YES unset up up

GigabitEthernet1/0/2 unassigned YES unset up up

- Reboot the Ubuntu machine. After the reboot, bond0 comes back up in bonding mode 1.

root@ubuntu-desktop:~# cat /proc/net/bonding/bond0

Ethernet Channel Bonding Driver: v3.7.1 (April 27, 2011)

Bonding Mode: fault-tolerance (active-backup)

Primary Slave: None

Currently Active Slave: eth1

MII Status: up

MII Polling Interval (ms): 100

Up Delay (ms): 0

Down Delay (ms): 0

Slave Interface: eth1

MII Status: up

Speed: 1000 Mbps

Duplex: full

Link Failure Count: 0

Permanent HW addr: 00:11:0a:57:5f:4e

Slave queue ID: 0

Slave Interface: eth2

MII Status: up

Speed: 1000 Mbps

Duplex: full

Link Failure Count: 0

Permanent HW addr: 00:11:0a:57:5f:4f

Slave queue ID: 0

root@ubuntu-desktop:~# ifconfig

bond0 Link encap:Ethernet HWaddr 00:11:0a:57:5f:4e

inet addr:192.168.4.200 Bcast:192.168.4.255 Mask:255.255.255.0

inet6 addr: fe80::211:aff:fe57:5f4e/64 Scope:Link

UP BROADCAST RUNNING MASTER MULTICAST MTU:1500 Metric:1

RX packets:78 errors:0 dropped:2 overruns:0 frame:0

TX packets:125 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:7645 (7.6 KB) TX bytes:18267 (18.2 KB)

eth1 Link encap:Ethernet HWaddr 00:11:0a:57:5f:4e

UP BROADCAST RUNNING SLAVE MULTICAST MTU:1500 Metric:1

RX packets:76 errors:0 dropped:0 overruns:0 frame:0

TX packets:125 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:7525 (7.5 KB) TX bytes:18267 (18.2 KB)

eth2 Link encap:Ethernet HWaddr 00:11:0a:57:5f:4e

UP BROADCAST RUNNING SLAVE MULTICAST MTU:1500 Metric:1

RX packets:2 errors:0 dropped:2 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:120 (120.0 B) TX bytes:0 (0.0 B)

test_sw#conf t

test_sw(config)#int gi 1/0/1

test_sw(config-if)#shut

test_sw(config-if)#

*Mar 1 19:35:25.124: %LINK-5-CHANGED: Interface GigabitEthernet1/0/1, changed state to administratively down

*Mar 1 19:35:26.136: %LINEPROTO-5-UPDOWN: Line protocol on Interface GigabitEthernet1/0/1, changed state to down

root@ubuntu-desktop:~# cat /proc/net/bonding/bond0

Ethernet Channel Bonding Driver: v3.7.1 (April 27, 2011)

Bonding Mode: fault-tolerance (active-backup)

Primary Slave: None

Currently Active Slave: eth1

MII Status: up

MII Polling Interval (ms): 100

Up Delay (ms): 0

Down Delay (ms): 0

Slave Interface: eth1

MII Status: up

Speed: 1000 Mbps

Duplex: full

Link Failure Count: 0

Permanent HW addr: 00:11:0a:57:5f:4e

Slave queue ID: 0

Slave Interface: eth2

MII Status: down

Speed: Unknown

Duplex: Unknown

Link Failure Count: 1

Permanent HW addr: 00:11:0a:57:5f:4f

Slave queue ID: 0

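A continuous ping to 192.168.4.87 showed no packet loss. Note that the link taken down here was the backup slave eth2; eth1 stayed the active slave throughout.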

test_sw(config-if)#no shut

*Mar 1 19:36:26.287: %LINK-3-UPDOWN: Interface GigabitEthernet1/0/1, changed state to up

*Mar 1 19:36:27.289: %LINEPROTO-5-UPDOWN: Line protocol on Interface GigabitEthernet1/0/1, changed state to up

root@ubuntu-desktop:~# cat /proc/net/bonding/bond0

Ethernet Channel Bonding Driver: v3.7.1 (April 27, 2011)

Bonding Mode: fault-tolerance (active-backup)

Primary Slave: None

Currently Active Slave: eth1

MII Status: up

MII Polling Interval (ms): 100

Up Delay (ms): 0

Down Delay (ms): 0

Slave Interface: eth1

MII Status: up

Speed: 1000 Mbps

Duplex: full

Link Failure Count: 0

Permanent HW addr: 00:11:0a:57:5f:4e

Slave queue ID: 0

Slave Interface: eth2

MII Status: up

Speed: 1000 Mbps

Duplex: full

Link Failure Count: 1

Permanent HW addr: 00:11:0a:57:5f:4f

Slave queue ID: 0

Note: bonding mode 1 works. It provides redundancy only; it does not increase bandwidth or provide load sharing.
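During a failover test like the one above, the two things worth watching are which slave is active and how many link failures each slave has logged. Here is a small sketch that pulls both out of the status text; the function names `active_slave` and `link_failures` are hypothetical, and the parsing assumes the field labels shown in the output above.

```shell
# Print the currently active slave from bonding status text on stdin.
active_slave() {
    awk -F': ' '/^Currently Active Slave:/ { print $2 }'
}

# Print "<slave> <link failure count>" for every slave, one per line.
link_failures() {
    awk -F': ' '
        /^Slave Interface:/    { slave = $2 }
        /^Link Failure Count:/ { print slave, $2 }
    '
}

# Example:
#   active_slave  < /proc/net/bonding/bond0
#   link_failures < /proc/net/bonding/bond0
```

Running both before and after shutting a switch port makes it easy to tell whether the active link or only the backup link was affected.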

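Test environment (2): verifying multi-port bonding on RHEL 5.7

On RHEL 5.7 x64, bonding modes 4 and 1 are set up much as on Ubuntu, and testing confirmed that they provide the same functionality.

The bonding configuration on RHEL is as follows: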

[root@RHEL5 ~]# cat /etc/redhat-release

Red Hat Enterprise Linux Server release 5.7 (Tikanga)

[root@RHEL5 ~]# uname -a

Linux RHEL5.7-bond 2.6.18-274.el5 #1 SMP Fri Jul 8 17:36:59 EDT 2011 x86_64 x86_64 x86_64 GNU/Linux

[root@RHEL5 ~]# uname -r

2.6.18-274.el5

[root@RHEL5 ~]# ifconfig

eth0 Link encap:Ethernet HWaddr 00:11:0A:57:5F:4E

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:11 errors:0 dropped:0 overruns:0 frame:0

TX packets:6 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:3297 (3.2 KiB) TX bytes:2052 (2.0 KiB)

eth1 Link encap:Ethernet HWaddr 00:11:0A:57:5F:4F

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:4 errors:0 dropped:0 overruns:0 frame:0

TX packets:6 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:987 (987.0 b) TX bytes:2052 (2.0 KiB)

eth2 Link encap:Ethernet HWaddr C8:60:00:2A:75:99

inet addr:192.168.4.100 Bcast:192.168.4.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:127 errors:0 dropped:0 overruns:0 frame:0

TX packets:123 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:12244 (11.9 KiB) TX bytes:16505 (16.1 KiB)

Interrupt:74 Memory:f7c00000-f7c20000

[root@RHEL5 ~]# cd /etc/sysconfig/network-scripts/

[root@RHEL5 network-scripts]# cat ifcfg-bond0

DEVICE=bond0

TYPE=Ethernet

BOOTPROTO=static

ONBOOT=yes

IPADDR=192.168.4.200

NETMASK=255.255.255.0

NETWORK=192.168.4.0

GATEWAY=192.168.4.1

USERCTL=no

[root@RHEL5 network-scripts]# cat ifcfg-eth0

# Intel Corporation 82546EB Gigabit Ethernet Controller (Copper)

DEVICE=eth0

BOOTPROTO=static

ONBOOT=yes

MASTER=bond0

SLAVE=yes

USERCTL=no

[root@RHEL5 network-scripts]# cat ifcfg-eth1

# Intel Corporation 82546EB Gigabit Ethernet Controller (Copper)

DEVICE=eth1

BOOTPROTO=static

ONBOOT=yes

MASTER=bond0

SLAVE=yes

USERCTL=no
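The two slave files above differ only in their DEVICE line, so they can be generated rather than typed by hand. This is a sketch under assumptions: the helper name `write_slave_cfg` and its optional target-directory argument are invented for illustration, and the keys simply mirror the files shown above.

```shell
# Write an ifcfg-<dev> file for a bonding slave, mirroring the files above.
# Usage: write_slave_cfg <dev> <master> [target_dir]
# target_dir defaults to the RHEL network-scripts directory; passing another
# directory allows a dry run without touching the live configuration.
write_slave_cfg() {
    dir=${3:-/etc/sysconfig/network-scripts}
    cat > "$dir/ifcfg-$1" <<EOF
DEVICE=$1
BOOTPROTO=static
ONBOOT=yes
MASTER=$2
SLAVE=yes
USERCTL=no
EOF
}

# Example dry run into a scratch directory:
#   write_slave_cfg eth0 bond0 /tmp && cat /tmp/ifcfg-eth0
```

Generating the files from one template keeps the slaves identical, which matters because a mismatch between slave settings is one of the more common reasons a bond fails to form.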

[root@RHEL5 network-scripts]# vi /etc/modprobe.conf

Add:

alias bond0 bonding

options bond0 mode=4 miimon=100

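The switch uses the same port-channel configuration as for Ubuntu bonding mode 4, so it is omitted here.

Restart networking on the RHEL 5.7 server:

[root@RHEL5 network-scripts]# service network restart

- After the restart, verify bonding on the RHEL 5.7 interfaces: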

[root@RHEL5 ~]# ifconfig

bond0 Link encap:Ethernet HWaddr 00:11:0A:57:5F:4E

inet addr:192.168.4.200 Bcast:192.168.4.255 Mask:255.255.255.0

UP BROADCAST RUNNING MASTER MULTICAST MTU:1500 Metric:1

RX packets:236 errors:0 dropped:0 overruns:0 frame:0

TX packets:100 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:25211 (24.6 KiB) TX bytes:17630 (17.2 KiB)

eth0 Link encap:Ethernet HWaddr 00:11:0A:57:5F:4E

UP BROADCAST RUNNING SLAVE MULTICAST MTU:1500 Metric:1

RX packets:22 errors:0 dropped:0 overruns:0 frame:0

TX packets:14 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:4786 (4.6 KiB) TX bytes:2962 (2.8 KiB)

eth1 Link encap:Ethernet HWaddr 00:11:0A:57:5F:4E

UP BROADCAST RUNNING SLAVE MULTICAST MTU:1500 Metric:1

RX packets:215 errors:0 dropped:0 overruns:0 frame:0

TX packets:90 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:20485 (20.0 KiB) TX bytes:15556 (15.1 KiB)

eth2 Link encap:Ethernet HWaddr C8:60:00:2A:75:99

inet addr:192.168.4.100 Bcast:192.168.4.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:1795 errors:0 dropped:0 overruns:0 frame:0

TX packets:1379 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:159687 (155.9 KiB) TX bytes:168819 (164.8 KiB)

Interrupt:74 Memory:f7c00000-f7c20000

[root@RHEL5 ~]# cat /proc/net/bonding/bond0

Ethernet Channel Bonding Driver: v3.4.0-1 (October 7, 2008)

Bonding Mode: IEEE 802.3ad Dynamic link aggregation

Transmit Hash Policy: layer2 (0)

MII Status: up

MII Polling Interval (ms): 100

Up Delay (ms): 0

Down Delay (ms): 0

802.3ad info

LACP rate: slow

Active Aggregator Info:

Aggregator ID: 1

Number of ports: 2

Actor Key: 17

Partner Key: 1

Partner Mac Address: f0:9e:63:f2:ec:80

Slave Interface: eth0

MII Status: up

Speed: 1000 Mbps

Duplex: full

Link Failure Count: 0

Permanent HW addr: 00:11:0a:57:5f:4e

Aggregator ID: 1

Slave Interface: eth1

MII Status: up

Speed: 1000 Mbps

Duplex: full

Link Failure Count: 0

Permanent HW addr: 00:11:0a:57:5f:4f

Aggregator ID: 1
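Since a mode 4 bond depends on all member links being up at the same speed and duplex, that condition can be checked directly from the status text above. The sketch below is illustrative only; the function name `slaves_consistent` is hypothetical, and it relies on the "MII Status", "Speed", and "Duplex" labels appearing as in the output above.

```shell
# Succeeds if every slave is up, runs full duplex, and reports the same speed.
# Reads /proc/net/bonding/<bond> style text on stdin.
slaves_consistent() {
    awk -F': ' '
        /^Slave Interface:/ { in_slave = 1; n++ }
        in_slave && /^MII Status:/ && $2 != "up"   { bad++ }
        in_slave && /^Duplex:/     && $2 != "full" { bad++ }
        in_slave && /^Speed:/ {
            if (speed == "") speed = $2     # remember the first slave speed
            else if ($2 != speed) bad++     # later slaves must match it
        }
        END {
            if (n > 0 && bad == 0) exit 0
            exit 1
        }
    '
}

# Example:
#   slaves_consistent < /proc/net/bonding/bond0 || echo "check slave links"
```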

- Confirm the switch port status and the port-channel information:

test_sw#sh int status

Port Name Status Vlan Duplex Speed Type

Gi1/0/1 connected 1 a-full a-1000 10/100/1000BaseTX

Gi1/0/2 connected 1 a-full a-1000 10/100/1000BaseTX

Gi1/0/7 link_to_notebook connected 1 a-full a-1000 10/100/1000BaseTX

Gi1/0/11 link_to_ubuntu_ser connected 1 a-full a-1000 10/100/1000BaseTX

Po1 link_to_rhel5.7 connected 1 a-full a-1000

test_sw#sh int port-channel 1

Port-channel1 is up, line protocol is up (connected)

Hardware is EtherChannel, address is f09e.63f2.ec82 (bia f09e.63f2.ec82)

Description: link_to_rhel5.7

MTU 1500 bytes, BW 2000000 Kbit, DLY 10 usec,

reliability 255/255, txload 1/255, rxload 1/255

Encapsulation ARPA, loopback not set

Keepalive set (10 sec)

Full-duplex, 1000Mb/s, link type is auto, media type is unknown

input flow-control is off, output flow-control is unsupported

Members in this channel: Gi1/0/1 Gi1/0/2

ARP type: ARPA, ARP Timeout 04:00:00

Last input never, output 00:00:01, output hang never

Last clearing of "show interface" counters never

Input queue: 0/75/0/0 (size/max/drops/flushes); Total output drops: 0

Queueing strategy: fifo

Output queue: 0/40 (size/max)

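Summary and lessons learned:

When configuring bonding mode 4, first make sure the interfaces to be bonded are activated at boot on the Linux side and are cabled to the switch ports. After finishing the bonding configuration on Linux, configure the port-channel on the switch and enable the ports. Finally, restart the Linux server; the bond0 aggregate then forms more readily. (You must also make sure that the Linux interface and switch port configurations are correct, that the interface speeds match, and that the patch cables are reliable.) |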

|