
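This took real effort to write; please credit the source when reposting (http://shihlei.iyunv.com/blog/2084711)!

Notes

This article sets up a distributed Hadoop CDH5.0.1 cluster with NameNode HA and ResourceManager HA. The Web Application Proxy and the Job History Server are not deployed.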

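I Overview

(A) HDFS

1) Basic architecture

(1) NameNode (master)

- Namespace management: the namespace supports basic file-system operations on HDFS directories, files and blocks, such as creating, modifying, deleting and listing files and directories.

- Block storage management

(2) DataNode (slave)

Stores and retrieves blocks as instructed by the NameNode and clients, and periodically reports to the NameNode which blocks it holds.

2) HA architecture

Two nodes, an Active NameNode and a Standby NameNode, remove the single point of failure. They share state through the JournalNodes, while ZKFC elects the Active node, monitors its health and performs automatic failover.

(1) Active NameNode:

Accepts and handles client RPC requests, writes both its own edit log and the edit log on shared storage, and receives block reports, block location updates and heartbeats from the DataNodes.

(2) Standby NameNode:

Also receives block reports, block location updates and heartbeats from the DataNodes, and reads and replays the edit log from shared storage, so that its metadata (namespace information + block locations map) stays in sync with the Active NameNode. The Standby NameNode is therefore a hot standby: as soon as it is switched to Active, it can serve requests.

(3) JournalNode:

Synchronizes data between the Active and Standby NameNodes. It runs as a group of JournalNodes that should contain an odd number of nodes; writes follow a Paxos-style majority-quorum protocol, which keeps the group highly available. In CDH5 it is the only supported shared-storage method (CDH4 also offered NFS-based shared storage).

(4) ZKFC:

Monitors the NameNode process and performs automatic failover.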
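Why an odd number of JournalNodes? The QuorumJournalManager commits an edit only after a majority of JournalNodes acknowledge it, so N nodes survive N minus (floor(N/2) + 1) failures. A minimal shell sketch of that arithmetic; the function names are hypothetical helpers, not Hadoop commands:

```shell
# Majority-quorum arithmetic for a QJM JournalNode group (sketch, hypothetical helpers).

# JournalNodes that must acknowledge an edit before it is committed: floor(N/2) + 1.
jn_majority() {
  echo $(( $1 / 2 + 1 ))
}

# JournalNode failures the group survives while still reaching a majority.
jn_tolerated_failures() {
  echo $(( $1 - ($1 / 2 + 1) ))
}
```

With the three JournalNodes planned here, `jn_tolerated_failures 3` prints 1. A fourth node (`jn_tolerated_failures 4`) still prints 1, which is why an even count adds hardware without adding fault tolerance.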

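(B) YARN

1) Basic architecture

(1) ResourceManager (RM)

Accepts job requests from clients, receives and monitors resource reports from the NodeManagers (NM), allocates and schedules resources, and launches and monitors the ApplicationMasters (AM).

(2) NodeManager

Manages the resources on its node, launches Containers that run tasks, reports resource and container status to the RM, and reports task progress to the AM.

(3) ApplicationMaster

Manages and schedules the tasks of a single application (job): requests resources from the RM, tells NMs to launch Containers, and receives task status from the NMs.

(4) Web Application Proxy

Protects YARN against web-based attacks. It is part of the ResourceManager but can be configured to run as a separate process. Access to the ResourceManager web UI assumes trusted users; when an ApplicationMaster runs as an untrusted user, the links it provides to the ResourceManager may be untrusted, and the Web Application Proxy keeps such links from being served through the RM.

(5) Job History Server

When a NodeManager starts, it initializes the LogAggregationService, which collects the logs of the containers run on that host (when each container finishes) and stores them in a specified HDFS directory. The ApplicationMaster writes job history information to a temporary jobhistory directory in HDFS and moves it to the final directory when the job ends, which also enables job recovery. The History Server starts web and RPC services, through which users can retrieve job information in a browser or over RPC.

2) HA architecture

ResourceManager HA consists of an Active/Standby pair that keeps its internal data, key application data and tokens in an RMStateStore. The available RMStateStore implementations are the in-memory MemoryRMStateStore, the file-system-based FileSystemRMStateStore, and the ZooKeeper-based ZKRMStateStore.

ResourceManager HA follows essentially the same pattern as NameNode HA: shared state lives in the RMStateStore, and the ZKFC role runs as a service embedded in the ResourceManager process rather than as a separate daemon.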

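II Planning

(A) Versions

| Component | Version | Notes |
| --- | --- | --- |
| JRE | java version "1.7.0_60"; Java(TM) SE Runtime Environment (build 1.7.0_60-b19); Java HotSpot(TM) Client VM (build 24.60-b09, mixed mode) | |
| Hadoop | hadoop-2.3.0-cdh5.0.1.tar.gz | Main package |
| Zookeeper | zookeeper-3.4.5-cdh5.0.1.tar.gz | Coordination service for hot failover and for YARN state storage |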

(B) Host plan

| IP | Host | Deployed modules | Processes |
| --- | --- | --- | --- |
| 8.8.8.11 | Hadoop-NN-01 | NameNode, ResourceManager | NameNode, DFSZKFailoverController, ResourceManager |
| 8.8.8.12 | Hadoop-NN-02 | NameNode, ResourceManager | NameNode, DFSZKFailoverController, ResourceManager |
| 8.8.8.13 | Hadoop-DN-01, Zookeeper-01 | DataNode, NodeManager, Zookeeper | DataNode, NodeManager, JournalNode, QuorumPeerMain |
| 8.8.8.14 | Hadoop-DN-02, Zookeeper-02 | DataNode, NodeManager, Zookeeper | DataNode, NodeManager, JournalNode, QuorumPeerMain |
| 8.8.8.15 | Hadoop-DN-03, Zookeeper-03 | DataNode, NodeManager, Zookeeper | DataNode, NodeManager, JournalNode, QuorumPeerMain |

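Process descriptions:

- NameNode

- ResourceManager

- DFSZKFC: DFS ZooKeeper Failover Controller; activates the Standby NameNode on failover

- DataNode

- NodeManager

- JournalNode: service through which the NameNodes share the edit log (if NFS shared storage is used instead, this process and all related startup configuration can be omitted).

- QuorumPeerMain: the ZooKeeper main process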

(C) Directory layout

| Name | Path |
| --- | --- |
| $HADOOP_HOME | /home/zero/hadoop/hadoop-2.3.0-cdh5.0.1 |
| Data | $HADOOP_HOME/data |
| Log | $HADOOP_HOME/logs |

III Environment preparation

1) Disable the firewall

As root:

[root@CentOS-Cluster-01 hadoop-2.3.0-cdh5.0.1]# service iptables stop

iptables: Flushing firewall rules: [ OK ]

iptables: Setting chains to policy ACCEPT: filter [ OK ]

iptables: Unloading modules: [ OK ]

Verify:

[root@CentOS-Cluster-01 hadoop-2.3.0-cdh5.0.1]# service iptables status

iptables: Firewall is not running.

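2) Install the JRE: omitted.

3) Install ZooKeeper: see "Zookeeper-3.4.5-cdh5.0.1 standalone mode, replicated ..."

4) Configure SSH trust (passwordless login):

(1) Create a key pair on Hadoop-NN-01: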

[zero@CentOS-Cluster-01 ~]$ ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/home/zero/.ssh/id_rsa):

Created directory '/home/zero/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/zero/.ssh/id_rsa.

Your public key has been saved in /home/zero/.ssh/id_rsa.pub.

The key fingerprint is:

28:0a:29:1d:98:56:55:db:ec:83:93:56:8a:0f:6c:ea zero@CentOS-Cluster-01

The key's randomart image is:

+--[ RSA 2048]----+

| ..... |

| o. + |

|o.. . + |

|.o .. ..* |

|+ . .=.*So |

|.. .o.+ . . |

| .. . |

| . |

| E |

+-----------------+

(2) Distribute the public key:

[zero@CentOS-Cluster-01 ~]$ ssh-copy-id -i ~/.ssh/id_rsa.pub zero@Hadoop-NN-01

The authenticity of host 'hadoop-nn-01 (8.8.8.11)' can't be established.

RSA key fingerprint is a6:11:09:49:8c:fe:b2:fb:49:d5:01:fa:13:1b:32:24.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'hadoop-nn-01,8.8.8.11' (RSA) to the list of known hosts.

puppet@hadoop-nn-01's password:

Permission denied, please try again.

puppet@hadoop-nn-01's password:

[zero@CentOS-Cluster-01 ~]$ ssh-copy-id -i ~/.ssh/id_rsa.pub zero@Hadoop-NN-01

zero@hadoop-nn-01's password:

Now try logging into the machine, with "ssh 'zero@Hadoop-NN-01'", and check in:

.ssh/authorized_keys

to make sure we haven't added extra keys that you weren't expecting.

[zero@CentOS-Cluster-01 ~]$ ssh-copy-id -i ~/.ssh/id_rsa.pub zero@Hadoop-NN-02

(… omitted …)

[zero@CentOS-Cluster-01 ~]$ ssh-copy-id -i ~/.ssh/id_rsa.pub zero@Hadoop-DN-01

(… omitted …)

[zero@CentOS-Cluster-01 ~]$ ssh-copy-id -i ~/.ssh/id_rsa.pub zero@Hadoop-DN-02

(… omitted …)

[zero@CentOS-Cluster-01 ~]$ ssh-copy-id -i ~/.ssh/id_rsa.pub zero@Hadoop-DN-03

(… omitted …)
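The repeated ssh-copy-id calls can be wrapped in a loop. A sketch, assuming the zero user and the host aliases used throughout this article; `distribute_key`, `CLUSTER_HOSTS` and the `RUN=echo` dry-run switch are illustrative names, not part of any tool:

```shell
# Copy the local public key to every cluster host (sketch).
# Set RUN=echo to print the commands instead of running them (dry run).
CLUSTER_HOSTS="Hadoop-NN-01 Hadoop-NN-02 Hadoop-DN-01 Hadoop-DN-02 Hadoop-DN-03"

distribute_key() {
  local user="$1" host
  shift
  for host in "$@"; do
    # ssh-copy-id asks for the remote password once per host.
    ${RUN:-} ssh-copy-id -i "$HOME/.ssh/id_rsa.pub" "${user}@${host}"
  done
}
```

`distribute_key zero $CLUSTER_HOSTS` performs the copies (the variable is left unquoted on purpose so it splits into one argument per host); `RUN=echo distribute_key zero $CLUSTER_HOSTS` only prints them.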

(3) Verify:

[zero@CentOS-Cluster-01 ~]$ ssh Hadoop-NN-01

Last login: Sun Jun 22 19:56:23 2014 from 8.8.8.1

[zero@CentOS-Cluster-01 ~]$ exit

logout

Connection to Hadoop-NN-01 closed.

[zero@CentOS-Cluster-01 ~]$ ssh Hadoop-NN-02

Last login: Sun Jun 22 20:03:31 2014 from 8.8.8.1

[zero@CentOS-Cluster-03 ~]$ exit

logout

Connection to Hadoop-NN-02 closed.

[zero@CentOS-Cluster-01 ~]$ ssh Hadoop-DN-01

Last login: Mon Jun 23 02:00:07 2014 from centos_cluster_01

[zero@CentOS-Cluster-03 ~]$ exit

logout

Connection to Hadoop-DN-01 closed.

[zero@CentOS-Cluster-01 ~]$ ssh Hadoop-DN-02

Last login: Sun Jun 22 20:07:03 2014 from 8.8.8.1

[zero@CentOS-Cluster-04 ~]$ exit

logout

Connection to Hadoop-DN-02 closed.

[zero@CentOS-Cluster-01 ~]$ ssh Hadoop-DN-03

Last login: Sun Jun 22 20:07:05 2014 from 8.8.8.1

[zero@CentOS-Cluster-05 ~]$ exit

logout

Connection to Hadoop-DN-03 closed.

5) Configure /etc/hosts and distribute it to every node:

[root@CentOS-Cluster-01 zero]# vi /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

8.8.8.10 CentOS-StandAlone

8.8.8.11 CentOS-Cluster-01 Hadoop-NN-01

8.8.8.12 CentOS-Cluster-02 Hadoop-NN-02

8.8.8.13 CentOS-Cluster-03 Hadoop-DN-01 Zookeeper-01

8.8.8.14 CentOS-Cluster-04 Hadoop-DN-02 Zookeeper-02

8.8.8.15 CentOS-Cluster-05 Hadoop-DN-03 Zookeeper-03
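Every configuration file below refers to these aliases, so it is worth checking each node's hosts file after copying it. A minimal sketch; `missing_hosts` is a hypothetical helper, and it does an exact, case-sensitive match even though resolver lookups are case-insensitive:

```shell
# Print each NAME that does not appear as a host name in FILE (sketch).
# Usage: missing_hosts FILE NAME...
missing_hosts() {
  local file="$1" name
  shift
  for name in "$@"; do
    # Strip comments, then look for NAME in any field after the IP address.
    if ! sed 's/#.*//' "$file" |
        awk -v n="$name" '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }'; then
      echo "$name"
    fi
  done
}
```

On each node, `missing_hosts /etc/hosts Hadoop-NN-01 Hadoop-NN-02 Hadoop-DN-01 Hadoop-DN-02 Hadoop-DN-03 Zookeeper-01 Zookeeper-02 Zookeeper-03` should print nothing.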

6) Configure environment variables: edit ~/.bashrc with vi, then run source ~/.bashrc

[zero@CentOS-Cluster-01 ~]$ vi ~/.bashrc

……

# hadoop cdh5

export HADOOP_HOME=/home/zero/hadoop/hadoop-2.3.0-cdh5.0.1

export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin

[zero@CentOS-Cluster-01 ~]$ source ~/.bashrc
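To confirm the new variables are in effect in the current shell, a small check like the following can help; `check_hadoop_env` is a hypothetical helper that only inspects HADOOP_HOME and PATH:

```shell
# Check that the Hadoop variables from ~/.bashrc are active (sketch).
# Prints one line per problem and returns non-zero if anything is missing.
check_hadoop_env() {
  local rc=0 dir
  if [ -z "${HADOOP_HOME:-}" ]; then
    echo "HADOOP_HOME is not set"
    return 1
  fi
  for dir in "$HADOOP_HOME/bin" "$HADOOP_HOME/sbin"; do
    # Wrap PATH in colons so the first and last entries match as well.
    case ":$PATH:" in
      *":$dir:"*) ;;
      *) echo "$dir is not on PATH"; rc=1 ;;
    esac
  done
  return $rc
}
```

After `source ~/.bashrc`, `check_hadoop_env && echo ok` should print only `ok`.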

IV Installation

1) Extract

[zero@CentOS-Cluster-01 hadoop]$ tar -xvf hadoop-2.3.0-cdh5.0.1.tar.gz

2) Edit the configuration files

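Overview:

| File | Type | Description |
| --- | --- | --- |
| hadoop-env.sh | Bash script | Environment variables for running Hadoop |
| core-site.xml | XML | Hadoop Core settings, e.g. I/O |
| hdfs-site.xml | XML | HDFS daemon settings: NN, JN, DN |
| yarn-env.sh | Bash script | Environment variables for running YARN |
| yarn-site.xml | XML | YARN framework settings |
| mapred-site.xml | XML | MapReduce properties |
| capacity-scheduler.xml | XML | YARN scheduler properties |
| container-executor.cfg | Cfg | YARN Container settings |
| mapred-queues.xml | XML | MapReduce queue settings |
| hadoop-metrics.properties | Java properties | Hadoop Metrics settings |
| hadoop-metrics2.properties | Java properties | Hadoop Metrics settings |
| slaves | Plain text | DataNode host list |
| exclude | Plain text | DataNodes to be removed (decommissioned) |
| log4j.properties | Java properties | System logging settings |
| configuration.xsl | XSL | Stylesheet referenced by the *-site.xml files |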

(1) Edit $HADOOP_HOME/etc/hadoop/hadoop-env.sh:

Shell code:

- #--------------------Java Env------------------------------

- export JAVA_HOME="/usr/runtime/java/jdk1.7.0_60"

-

- #--------------------Hadoop Env------------------------------

- #export HADOOP_PID_DIR=

- export HADOOP_PREFIX="/home/zero/hadoop/hadoop-2.3.0-cdh5.0.1"

-

- #--------------------Hadoop Daemon Options-----------------

- #export HADOOP_NAMENODE_OPTS="-XX:+UseParallelGC ${HADOOP_NAMENODE_OPTS}"

- #export HADOOP_DATANODE_OPTS=

-

- #--------------------Hadoop Logs---------------------------

- #export HADOOP_LOG_DIR=

(2) Edit $HADOOP_HOME/etc/hadoop/core-site.xml

XML code:

- <?xml version="1.0" encoding="UTF-8"?>

- <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

- <configuration>

- <!-- fs.defaultFS gives the NameNode URI, which YARN also needs; with HA it is the logical nameservice ID -->

- <property>

- <name>fs.defaultFS</name>

- <value>hdfs://mycluster</value>

- </property>

- <!-- HDFS superuser group -->

- <property>

- <name>dfs.permissions.superusergroup</name>

- <value>zero</value>

- </property>

- <!--============================== Trash ======================================= -->

- <property>

- <!-- How often (in minutes) the checkpointer running on the NameNode creates a checkpoint from the Current directory; default 0 means the value of fs.trash.interval is used -->

- <name>fs.trash.checkpoint.interval</name>

- <value>0</value>

- </property>

- <property>

- <!-- Minutes after which a checkpoint under .Trash is deleted; the server-side value takes precedence over the client's. Default 0 disables the trash feature -->

- <name>fs.trash.interval</name>

- <value>1440</value>

- </property>

- </configuration>
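How the two trash settings interact: per the Hadoop documentation, fs.trash.checkpoint.interval should not exceed fs.trash.interval, and 0 means "use fs.trash.interval". A sketch of that rule, assuming (from my reading of the trash emptier, not verified against CDH 5.0.1) that a too-large checkpoint interval is also replaced by fs.trash.interval; the function name is illustrative:

```shell
# Effective trash checkpoint interval in minutes (sketch).
# Documented rule: fs.trash.checkpoint.interval = 0 means "use fs.trash.interval".
# Assumption: a checkpoint interval larger than fs.trash.interval is also replaced
# by fs.trash.interval (my reading of the trash emptier; not verified on CDH 5.0.1).
effective_checkpoint_interval() {
  local trash_interval="$1" checkpoint_interval="$2"
  if [ "$trash_interval" -eq 0 ]; then
    echo 0    # fs.trash.interval = 0 disables trash, so there are no checkpoints
  elif [ "$checkpoint_interval" -eq 0 ] || [ "$checkpoint_interval" -gt "$trash_interval" ]; then
    echo "$trash_interval"
  else
    echo "$checkpoint_interval"
  fi
}
```

With the values above, `effective_checkpoint_interval 1440 0` prints 1440, i.e. one checkpoint every 24 hours.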

(3) Edit $HADOOP_HOME/etc/hadoop/hdfs-site.xml

XML code:

- <?xml version="1.0" encoding="UTF-8"?>

- <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

- <configuration>

- <!-- Enable WebHDFS -->

- <property>

- <name>dfs.webhdfs.enabled</name>

- <value>true</value>

- </property>

- <property>

- <name>dfs.namenode.name.dir</name>

- <value>/home/zero/hadoop/hadoop-2.3.0-cdh5.0.1/data/dfs/name</value>

- <description>Local directory where the NameNode stores the name table (fsimage) (adjust to your layout)</description>

- </property>

- <property>

- <name>dfs.namenode.edits.dir</name>

- <value>${dfs.namenode.name.dir}</value>

- <description>Local directory where the NameNode stores the transaction file (edits) (adjust to your layout)</description>

- </property>

- <property>

- <name>dfs.datanode.data.dir</name>

- <value>/home/zero/hadoop/hadoop-2.3.0-cdh5.0.1/data/dfs/data</value>

- <description>Local directory where the DataNode stores blocks (adjust to your layout)</description>

- </property>

- <property>

- <name>dfs.replication</name>

- <value>1</value>

- </property>

- <!-- Block size: 268435456 bytes = 256 MB (the Hadoop 2 default is 128 MB) -->

- <property>

- <name>dfs.blocksize</name>

- <value>268435456</value>

- </property>

- <!--======================================================================= -->

- <!-- HDFS high availability -->

- <!-- Logical name of the nameservice -->

- <property>

- <name>dfs.nameservices</name>

- <value>mycluster</value>

- </property>

- <property>

- <!-- NameNode IDs; this version supports at most two NameNodes -->

- <name>dfs.ha.namenodes.mycluster</name>

- <value>nn1,nn2</value>

- </property>

- <!-- HDFS HA: dfs.namenode.rpc-address.[nameservice ID].[NameNode ID] is the RPC address -->

- <property>

- <name>dfs.namenode.rpc-address.mycluster.nn1</name>

- <value>Hadoop-NN-01:8020</value>

- </property>

- <property>

- <name>dfs.namenode.rpc-address.mycluster.nn2</name>

- <value>Hadoop-NN-02:8020</value>

- </property>

- <!-- HDFS HA: dfs.namenode.http-address.[nameservice ID].[NameNode ID] is the HTTP address -->

- <property>

- <name>dfs.namenode.http-address.mycluster.nn1</name>

- <value>Hadoop-NN-01:50070</value>

- </property>

- <property>

- <name>dfs.namenode.http-address.mycluster.nn2</name>

- <value>Hadoop-NN-02:50070</value>

- </property>

-

- <!--================== NameNode edit log synchronization ============================================ -->

- <!-- Ensures the edits can be recovered -->

- <property>

- <name>dfs.journalnode.http-address</name>

- <value>0.0.0.0:8480</value>

- </property>

- <property>

- <name>dfs.journalnode.rpc-address</name>

- <value>0.0.0.0:8485</value>

- </property>

- <property>

- <!-- JournalNode addresses; the QuorumJournalManager stores the edit log on them -->

- <!-- Format: qjournal://<host1:port1>;<host2:port2>;<host3:port3>/<journalId>; the port is the one in dfs.journalnode.rpc-address -->

- <name>dfs.namenode.shared.edits.dir</name>

- <value>qjournal://Hadoop-DN-01:8485;Hadoop-DN-02:8485;Hadoop-DN-03:8485/mycluster</value>

- </property>

- <property>

- <!-- Local directory where each JournalNode stores its data -->

- <name>dfs.journalnode.edits.dir</name>

- <value>/home/zero/hadoop/hadoop-2.3.0-cdh5.0.1/data/dfs/jn</value>

- </property>

- <!--================== Client failover ============================================ -->

- <property>

- <!-- Class HDFS clients use to find and connect to the Active NameNode -->

- <name>dfs.client.failover.proxy.provider.mycluster</name>

- <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

- </property>

- <!--================== NameNode fencing =============================================== -->

- <!-- After a failover, prevents the previous Active NameNode from continuing to serve, which would leave two active NameNodes -->

- <property>

- <name>dfs.ha.fencing.methods</name>

- <value>sshfence</value>

- </property>

- <property>

- <name>dfs.ha.fencing.ssh.private-key-files</name>

- <value>/home/zero/.ssh/id_rsa</value>

- </property>

- <property>

- <!-- SSH connection timeout in milliseconds; when it expires the fencing attempt is considered failed -->

- <name>dfs.ha.fencing.ssh.connect-timeout</name>

- <value>30000</value>

- </property>

-

- <!--================== NameNode automatic failover based on ZKFC and ZooKeeper ====================== -->

- <!-- Enable automatic failover through ZooKeeper and the ZKFC process, which watches whether the NameNode has died -->

- <property>

- <name>dfs.ha.automatic-failover.enabled</name>

- <value>true</value>

- </property>

- <property>

- <name>ha.zookeeper.quorum</name>

- <value>Zookeeper-01:2181,Zookeeper-02:2181,Zookeeper-03:2181</value>

- </property>

- <property>

- <!-- ZooKeeper session timeout, in milliseconds -->

- <name>ha.zookeeper.session-timeout.ms</name>

- <value>2000</value>

- </property>

- </configuration>
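A typo in dfs.namenode.shared.edits.dir is easy to miss. This sketch, a hypothetical helper rather than a Hadoop tool, splits a qjournal URI into its JournalNode host/port pairs and journal ID so they can be compared with dfs.journalnode.rpc-address and dfs.nameservices:

```shell
# Split a dfs.namenode.shared.edits.dir value of the form
#   qjournal://host1:port1;host2:port2;host3:port3/journalId
# into one "host port" line per JournalNode, then a "journal <id>" line (sketch).
parse_qjournal() {
  local uri="$1" rest hosts jid
  case $uri in
    qjournal://*/*) ;;
    *) echo "not a qjournal URI: $uri" >&2; return 1 ;;
  esac
  rest=${uri#qjournal://}
  hosts=${rest%%/*}   # "host1:port1;host2:port2;..." (before the first '/')
  jid=${rest#*/}      # journal ID (after the first '/')
  printf '%s\n' "$hosts" | tr ';' '\n' | while IFS=: read -r host port; do
    echo "$host $port"
  done
  echo "journal $jid"
}
```

For the value above it prints the three `Hadoop-DN-0x 8485` pairs followed by `journal mycluster`; each port should match dfs.journalnode.rpc-address (8485 here).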

(4) Edit $HADOOP_HOME/etc/hadoop/yarn-env.sh

Shell code:

- #Yarn Daemon Options

- #export YARN_RESOURCEMANAGER_OPTS

- #export YARN_NODEMANAGER_OPTS

- #export YARN_PROXYSERVER_OPTS

- #export HADOOP_JOB_HISTORYSERVER_OPTS

-

- #Yarn Logs

- export YARN_LOG_DIR="/home/zero/hadoop/hadoop-2.3.0-cdh5.0.1/logs"

(5) Edit $HADOOP_HOME/etc/hadoop/mapred-site.xml

XML code:

- <configuration>

- <!-- Run MapReduce applications on YARN -->

- <property>

- <name>mapreduce.framework.name</name>

- <value>yarn</value>

- </property>

- <!-- JobHistory Server ============================================================== -->

- <!-- MapReduce JobHistory Server address, default port 10020 -->

- <property>

- <name>mapreduce.jobhistory.address</name>

- <value>0.0.0.0:10020</value>

- </property>

- <!-- MapReduce JobHistory Server web UI address, default port 19888 -->

- <property>

- <name>mapreduce.jobhistory.webapp.address</name>

- <value>0.0.0.0:19888</value>

- </property>

- </configuration>

(6) Edit $HADOOP_HOME/etc/hadoop/yarn-site.xml

XML code:

- <configuration>

- <!-- NodeManager settings ================================================= -->

- <property>

- <name>yarn.nodemanager.aux-services</name>

- <value>mapreduce_shuffle</value>

- </property>

- <property>

- <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

- <value>org.apache.hadoop.mapred.ShuffleHandler</value>

- </property>

- <property>

- <description>Address where the localizer IPC is.</description>

- <name>yarn.nodemanager.localizer.address</name>

- <value>0.0.0.0:23344</value>

- </property>

- <property>

- <description>NM Webapp address.</description>

- <name>yarn.nodemanager.webapp.address</name>

- <value>0.0.0.0:23999</value>

- </property>

-

- <!-- HA settings =============================================================== -->

- <!-- Resource Manager Configs -->

- <property>

- <name>yarn.resourcemanager.connect.retry-interval.ms</name>

- <value>2000</value>

- </property>

- <property>

- <name>yarn.resourcemanager.ha.enabled</name>

- <value>true</value>

- </property>

- <property>

- <name>yarn.resourcemanager.ha.automatic-failover.enabled</name>

- <value>true</value>

- </property>

- <!-- Enable embedded automatic failover (the leader elector runs inside the RM); in HA mode it works together with ZKRMStateStore to handle fencing -->

- <property>

- <name>yarn.resourcemanager.ha.automatic-failover.embedded</name>

- <value>true</value>

- </property>

- <!-- Cluster ID, so that leader election is scoped to the right cluster -->

- <property>

- <name>yarn.resourcemanager.cluster-id</name>

- <value>yarn-cluster</value>

- </property>

- <property>

- <name>yarn.resourcemanager.ha.rm-ids</name>

- <value>rm1,rm2</value>

- </property>

- <!-- Optional: set the RM ID explicitly on each Active/Standby node

- <property>

- <name>yarn.resourcemanager.ha.id</name>

- <value>rm2</value>

- </property>

- -->

- <property>

- <name>yarn.resourcemanager.scheduler.class</name>

- <value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler</value>

- </property>

- <property>

- <name>yarn.resourcemanager.recovery.enabled</name>

- <value>true</value>

- </property>

- <property>

- <name>yarn.app.mapreduce.am.scheduler.connection.wait.interval-ms</name>

- <value>5000</value>

- </property>

- <!-- ZKRMStateStore settings -->

- <property>

- <name>yarn.resourcemanager.store.class</name>

- <value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>

- </property>

- <property>

- <name>yarn.resourcemanager.zk-address</name>

- <value>Zookeeper-01:2181,Zookeeper-02:2181,Zookeeper-03:2181</value>

- </property>

- <property>

- <name>yarn.resourcemanager.zk.state-store.address</name>

- <value>Zookeeper-01:2181,Zookeeper-02:2181,Zookeeper-03:2181</value>

- </property>

- <!-- RPC address clients use to reach the RM (applications manager interface) -->

- <property>

- <name>yarn.resourcemanager.address.rm1</name>

- <value>Hadoop-NN-01:23140</value>

- </property>

- <property>

- <name>yarn.resourcemanager.address.rm2</name>

- <value>Hadoop-NN-02:23140</value>

- </property>

- <!-- RPC address ApplicationMasters use to reach the RM (scheduler interface) -->

- <property>

- <name>yarn.resourcemanager.scheduler.address.rm1</name>

- <value>Hadoop-NN-01:23130</value>

- </property>

- <property>

- <name>yarn.resourcemanager.scheduler.address.rm2</name>

- <value>Hadoop-NN-02:23130</value>

- </property>

- <!-- RM admin interface -->

- <property>

- <name>yarn.resourcemanager.admin.address.rm1</name>

- <value>Hadoop-NN-01:23141</value>

- </property>

- <property>

- <name>yarn.resourcemanager.admin.address.rm2</name>

- <value>Hadoop-NN-02:23141</value>

- </property>

- <!-- RPC address NodeManagers use to reach the RM (resource tracker) -->

- <property>

- <name>yarn.resourcemanager.resource-tracker.address.rm1</name>

- <value>Hadoop-NN-01:23125</value>

- </property>

- <property>

- <name>yarn.resourcemanager.resource-tracker.address.rm2</name>

- <value>Hadoop-NN-02:23125</value>

- </property>

- <!-- RM web application address -->

- <property>

- <name>yarn.resourcemanager.webapp.address.rm1</name>

- <value>Hadoop-NN-01:23188</value>

- </property>

- <property>

- <name>yarn.resourcemanager.webapp.address.rm2</name>

- <value>Hadoop-NN-02:23188</value>

- </property>

- <property>

- <name>yarn.resourcemanager.webapp.https.address.rm1</name>

- <value>Hadoop-NN-01:23189</value>

- </property>

- <property>

- <name>yarn.resourcemanager.webapp.https.address.rm2</name>

- <value>Hadoop-NN-02:23189</value>

- </property>

- </configuration>
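The six per-RM address properties differ only in RM ID, host and port, so they can be generated instead of typed. A sketch that prints them for one RM using the ports chosen above; `rm_address_props` is a hypothetical helper, and its output still has to be pasted inside `<configuration>`:

```shell
# Print the per-RM address properties for yarn-site.xml (sketch).
# Usage: rm_address_props RM_ID HOST   (ports are the ones used in this article)
rm_address_props() {
  local rm_id="$1" host="$2" entry key port
  for entry in address:23140 scheduler.address:23130 admin.address:23141 \
      resource-tracker.address:23125 webapp.address:23188 webapp.https.address:23189; do
    key=${entry%%:*}    # property suffix, e.g. "scheduler.address"
    port=${entry##*:}   # port number
    printf '<property>\n  <name>yarn.resourcemanager.%s.%s</name>\n  <value>%s:%s</value>\n</property>\n' \
      "$key" "$rm_id" "$host" "$port"
  done
}
```

Running `rm_address_props rm1 Hadoop-NN-01; rm_address_props rm2 Hadoop-NN-02` reproduces the rm1/rm2 address entries above.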

(7) Edit slaves

[zero@CentOS-Cluster-01 hadoop]$ vi slaves

Hadoop-DN-01

Hadoop-DN-02

Hadoop-DN-03

3) Distribute the installation

[zero@CentOS-Cluster-01 ~]$ scp -r /home/zero/hadoop/hadoop-2.3.0-cdh5.0.1 zero@Hadoop-NN-02:/home/zero/hadoop/

…….

[zero@CentOS-Cluster-01 ~]$ scp -r /home/zero/hadoop/hadoop-2.3.0-cdh5.0.1 zero@Hadoop-DN-01:/home/zero/hadoop/

…….

[zero@CentOS-Cluster-01 ~]$ scp -r /home/zero/hadoop/hadoop-2.3.0-cdh5.0.1 zero@Hadoop-DN-02:/home/zero/hadoop/

…….

[zero@CentOS-Cluster-01 ~]$ scp -r /home/zero/hadoop/hadoop-2.3.0-cdh5.0.1 zero@Hadoop-DN-03:/home/zero/hadoop/

…….
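The four copies can also be done in a loop. Note that the remote path must follow the colon directly: with `host: /path`, scp treats `host:` as a second source (the remote home directory) and `/path` as the local destination. A sketch; `distribute_hadoop` and the `RUN=echo` dry-run switch are illustrative names:

```shell
# Copy the configured Hadoop directory to other nodes (sketch).
# Set RUN=echo to print the scp commands instead of running them.
HADOOP_DIR=/home/zero/hadoop/hadoop-2.3.0-cdh5.0.1

distribute_hadoop() {
  local host
  for host in "$@"; do
    # No space after "host:"; the remote directory is part of the same argument.
    ${RUN:-} scp -r "$HADOOP_DIR" "zero@${host}:/home/zero/hadoop/"
  done
}
```

Usage: `distribute_hadoop Hadoop-NN-02 Hadoop-DN-01 Hadoop-DN-02 Hadoop-DN-03`.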

4) Start HDFS

(1) Start the JournalNodes

Before formatting, start the JournalNode on each JournalNode host:

[zero@CentOS-Cluster-03 hadoop-2.3.0-cdh5.0.1]$ hadoop-daemon.sh start journalnode

starting journalnode, logging to /home/puppet/hadoop/cdh4.4/hadoop-2.0.0-cdh4.4.0/logs/hadoop-puppet-journalnode-BigData-03.out

Verify the JournalNode: [zero@CentOS-Cluster-03 hadoop-2.3.0-cdh5.0.1]$ jps

25918 QuorumPeerMain

16728 JournalNode

16772 Jps

(2) Format the NameNode:

On Hadoop-NN-01, run hdfs namenode -format:

[zero@CentOS-Cluster-01 hadoop-2.3.0-cdh5.0.1]$ hdfs namenode -format

14/06/23 21:02:49 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = CentOS-Cluster-01/8.8.8.11

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 2.3.0-cdh5.0.1

STARTUP_MSG: classpath =…

STARTUP_MSG: build =…

STARTUP_MSG: java = 1.7.0_60

************************************************************/

(… omitted …)

14/06/23 21:02:57 INFO common.Storage: Storage directory /home/zero/hadoop/hadoop-2.3.0-cdh5.0.1/data/dfs/name has been successfully formatted. (… omitted …)

14/06/23 21:02:59 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at CentOS-Cluster-01/8.8.8.11

************************************************************/

(3) Synchronize the NameNode metadata:

Copy the metadata of Hadoop-NN-01 to Hadoop-NN-02.

This mainly covers dfs.namenode.name.dir and dfs.namenode.edits.dir; also make sure the shared storage directory (dfs.namenode.shared.edits.dir) contains all of the NameNode's metadata.

[zero@CentOS-Cluster-01 hadoop-2.3.0-cdh5.0.1]$ scp -r data/ zero@Hadoop-NN-02:/home/zero/hadoop/hadoop-2.3.0-cdh5.0.1

seen_txid 100% 2 0.0KB/s 00:00

VERSION 100% 201 0.2KB/s 00:00

seen_txid 100% 2 0.0KB/s 00:00

fsimage_0000000000000000000.md5 100% 62 0.1KB/s 00:00

fsimage_0000000000000000000 100% 121 0.1KB/s 00:00

VERSION 100% 201 0.2KB/s 00:00
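To check that the copy on Hadoop-NN-02 belongs to the same formatted namespace, compare the VERSION files in the name directory's `current/` subdirectory on both NameNodes. A sketch of that comparison; the helper names are hypothetical, and it assumes the usual key=value layout of VERSION files:

```shell
# Print the value of KEY from a Hadoop storage VERSION file (key=value lines).
version_prop() {
  sed -n "s/^$1=//p" "$2"
}

# Succeed if two VERSION files have the same non-empty clusterID and namespaceID,
# i.e. both name directories come from the same "hdfs namenode -format".
same_namespace() {
  local k a b
  for k in clusterID namespaceID; do
    a=$(version_prop "$k" "$1")
    b=$(version_prop "$k" "$2")
    [ -n "$a" ] && [ "$a" = "$b" ] || return 1
  done
}
```

For example, after fetching Hadoop-NN-02's file with `scp zero@Hadoop-NN-02:/home/zero/hadoop/hadoop-2.3.0-cdh5.0.1/data/dfs/name/current/VERSION /tmp/VERSION.nn2`, run `same_namespace data/dfs/name/current/VERSION /tmp/VERSION.nn2 && echo same` from $HADOOP_HOME on Hadoop-NN-01.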

(4) Initialize ZKFC:

This creates the ZNode in which the failover state is recorded.

On Hadoop-NN-01, run hdfs zkfc -formatZK:

[zero@CentOS-Cluster-01 hadoop-2.3.0-cdh5.0.1]$ hdfs zkfc -formatZK

14/06/23 23:22:28 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

14/06/23 23:22:28 INFO tools.DFSZKFailoverController: Failover controller configured for NameNode NameNode at Hadoop-NN-01/8.8.8.11:8020

14/06/23 23:22:28 INFO zookeeper.ZooKeeper: Client environment:zookeeper.version=3.4.5-cdh5.0.1--1, built on 05/06/2014 18:50 GMT

14/06/23 23:22:28 INFO zookeeper.ZooKeeper: Client environment:host.name=CentOS-Cluster-01

14/06/23 23:22:28 INFO zookeeper.ZooKeeper: Client environment:java.version=1.7.0_60

14/06/23 23:22:28 INFO zookeeper.ZooKeeper: Client environment:java.vendor=Oracle Corporation

14/06/23 23:22:28 INFO zookeeper.ZooKeeper: Client environment:java.home=/usr/runtime/java/jdk1.7.0_60/jre

14/06/23 23:22:28 INFO zookeeper.ZooKeeper: Client environment:java.class.path=...

14/06/23 23:22:29 INFO zookeeper.ZooKeeper: Client environment:java.library.path=/home/zero/hadoop/hadoop-2.3.0-cdh5.0.1/lib/native

14/06/23 23:22:29 INFO zookeeper.ZooKeeper: Client environment:java.io.tmpdir=/tmp

14/06/23 23:22:29 INFO zookeeper.ZooKeeper: Client environment:java.compiler=<NA>

14/06/23 23:22:29 INFO zookeeper.ZooKeeper: Client environment:os.name=Linux

14/06/23 23:22:29 INFO zookeeper.ZooKeeper: Client environment:os.arch=i386

14/06/23 23:22:29 INFO zookeeper.ZooKeeper: Client environment:os.version=2.6.32-431.el6.i686

14/06/23 23:22:29 INFO zookeeper.ZooKeeper: Client environment:user.name=zero

14/06/23 23:22:29 INFO zookeeper.ZooKeeper: Client environment:user.home=/home/zero

14/06/23 23:22:29 INFO zookeeper.ZooKeeper: Client environment:user.dir=/home/zero/hadoop/hadoop-2.3.0-cdh5.0.1

14/06/23 23:22:29 INFO zookeeper.ZooKeeper: Initiating client connection, connectString=Zookeeper-01:2181,Zookeeper-02:2181,Zookeeper-03:2181 sessionTimeout=2000 watcher=org.apache.hadoop.ha.ActiveStandbyElector$WatcherWithClientRef@150c2b0

14/06/23 23:22:29 INFO zookeeper.ClientCnxn: Opening socket connection to server CentOS-Cluster-03/8.8.8.13:2181. Will not attempt to authenticate using SASL (unknown error)

14/06/23 23:22:29 INFO zookeeper.ClientCnxn: Socket connection established to CentOS-Cluster-03/8.8.8.13:2181, initiating session

14/06/23 23:22:29 INFO zookeeper.ClientCnxn: Session establishment complete on server CentOS-Cluster-03/8.8.8.13:2181, sessionid = 0x146cc0517b30002, negotiated timeout = 4000

14/06/23 23:22:29 INFO ha.ActiveStandbyElector: Session connected.

14/06/23 23:22:30 INFO ha.ActiveStandbyElector: Successfully created /hadoop-ha/mycluster in ZK.

14/06/23 23:22:30 INFO zookeeper.ZooKeeper: Session: 0x146cc0517b30002 closed
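In the log above, the client asked for sessionTimeout=2000 (ha.zookeeper.session-timeout.ms) but the server granted negotiated timeout = 4000. That fits ZooKeeper's rule of clamping a requested session timeout into [minSessionTimeout, maxSessionTimeout], which default to 2 * tickTime and 20 * tickTime. A sketch of the rule, assuming those defaults and a tickTime of 2000 ms (the ZooKeeper configuration is not shown in this article); the helper name is hypothetical:

```shell
# Session timeout a ZooKeeper server grants for a requested value (sketch).
# Assumes minSessionTimeout/maxSessionTimeout are not set in zoo.cfg, so they
# default to 2*tickTime and 20*tickTime.
negotiated_timeout() {
  local requested="$1" tick="$2"
  local min="$(( 2 * tick ))" max="$(( 20 * tick ))"
  if [ "$requested" -lt "$min" ]; then
    echo "$min"
  elif [ "$requested" -gt "$max" ]; then
    echo "$max"
  else
    echo "$requested"
  fi
}
```

`negotiated_timeout 2000 2000` prints 4000, matching the log; on such an ensemble, values of ha.zookeeper.session-timeout.ms below 4000 have no effect unless minSessionTimeout is lowered in zoo.cfg.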

(5) Start

Starting the whole cluster (on Hadoop-NN-01):

[zero@CentOS-Cluster-01 hadoop-2.3.0-cdh5.0.1]$ start-dfs.sh

14/04/23 01:54:58 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Starting namenodes on [Hadoop-NN-01 Hadoop-NN-02]

Hadoop-NN-01: starting namenode, logging to /home/puppet/hadoop/cdh4.4/hadoop-2.0.0-cdh4.4.0/logs/hadoop-puppet-namenode-BigData-01.out

Hadoop-NN-02: starting namenode, logging to /home/puppet/hadoop/cdh4.4/hadoop-2.0.0-cdh4.4.0/logs/hadoop-puppet-namenode-BigData-02.out

Hadoop-DN-01: starting datanode, logging to /home/puppet/hadoop/cdh4.4/hadoop-2.0.0-cdh4.4.0/logs/hadoop-puppet-datanode-BigData-03.out

Hadoop-DN-02: starting datanode, logging to /home/puppet/hadoop/cdh4.4/hadoop-2.0.0-cdh4.4.0/logs/hadoop-puppet-datanode-BigData-04.out

Hadoop-DN-03: starting datanode, logging to /home/puppet/hadoop/cdh4.4/hadoop-2.0.0-cdh4.4.0/logs/hadoop-puppet-datanode-BigData-05.out

Starting journal nodes [Hadoop-DN-01 Hadoop-DN-02 Hadoop-DN-03]

Hadoop-DN-01: starting journalnode, logging to /home/puppet/hadoop/cdh4.4/hadoop-2.0.0-cdh4.4.0/logs/hadoop-puppet-journalnode-BigData-03.out

Hadoop-DN-03: starting journalnode, logging to /home/puppet/hadoop/cdh4.4/hadoop-2.0.0-cdh4.4.0/logs/hadoop-puppet-journalnode-BigData-05.out

Hadoop-DN-02: starting journalnode, logging to /home/puppet/hadoop/cdh4.4/hadoop-2.0.0-cdh4.4.0/logs/hadoop-puppet-journalnode-BigData-04.out

14/04/23 01:55:29 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Starting ZK Failover Controllers on NN hosts [Hadoop-NN-01 Hadoop-NN-02]

Hadoop-NN-01: starting zkfc, logging to /home/puppet/hadoop/cdh4.4/hadoop-2.0.0-cdh4.4.0/logs/hadoop-puppet-zkfc-BigData-01.out

Hadoop-NN-02: starting zkfc, logging to /home/puppet/hadoop/cdh4.4/hadoop-2.0.0-cdh4.4.0/logs/hadoop-puppet-zkfc-BigData-02.out

Starting each process individually:

<1> NameNode (Hadoop-NN-01, Hadoop-NN-02):

[zero@CentOS-Cluster-01 hadoop-2.3.0-cdh5.0.1]$ hadoop-daemon.sh start namenode

<2> DataNode (Hadoop-DN-01, Hadoop-DN-02, Hadoop-DN-03):

[zero@CentOS-Cluster-03 hadoop-2.3.0-cdh5.0.1]$ hadoop-daemon.sh start datanode

<3> JournalNode (Hadoop-DN-01, Hadoop-DN-02, Hadoop-DN-03):

[zero@CentOS-Cluster-03 hadoop-2.3.0-cdh5.0.1]$ hadoop-daemon.sh start journalnode

<4> ZKFC (Hadoop-NN-01, Hadoop-NN-02):

[zero@CentOS-Cluster-01 hadoop-2.3.0-cdh5.0.1]$ hadoop-daemon.sh start zkfc

(6) Verify

<1> Processes

NameNode: [zero@CentOS-Cluster-01 hadoop-2.3.0-cdh5.0.1]$ jps

4001 NameNode

4290 DFSZKFailoverController

4415 Jps

DataNode: [zero@CentOS-Cluster-03 hadoop-2.3.0-cdh5.0.1]$ jps

25918 QuorumPeerMain

19217 JournalNode

19143 DataNode

19351 Jps
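Instead of reading the jps output by eye, it can be compared with the process plan. A sketch; `missing_processes` and its role names are hypothetical, and the expected lists are the HDFS-stage processes from the host plan (NodeManager and ResourceManager are not started yet at this point):

```shell
# List planned processes that are missing from `jps` output read on stdin (sketch).
# Roles and process lists follow the host plan for the HDFS stage.
missing_processes() {
  local expected running p
  case $1 in
    namenode) expected="NameNode DFSZKFailoverController" ;;
    datanode) expected="DataNode JournalNode QuorumPeerMain" ;;
    *) echo "unknown role: $1" >&2; return 2 ;;
  esac
  # jps prints "<pid> <main class>"; keep just the class names.
  running=$(awk '{ print $2 }')
  for p in $expected; do
    printf '%s\n' "$running" | grep -qx "$p" || echo "$p"
  done
}
```

On a DataNode host, `jps | missing_processes datanode` prints nothing when all three planned processes are up.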

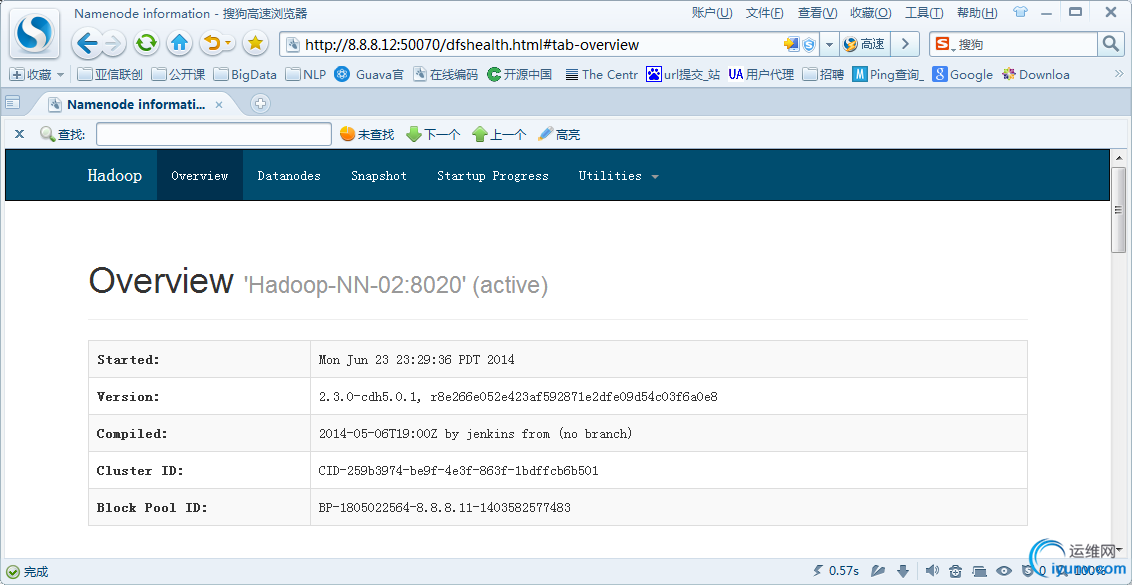

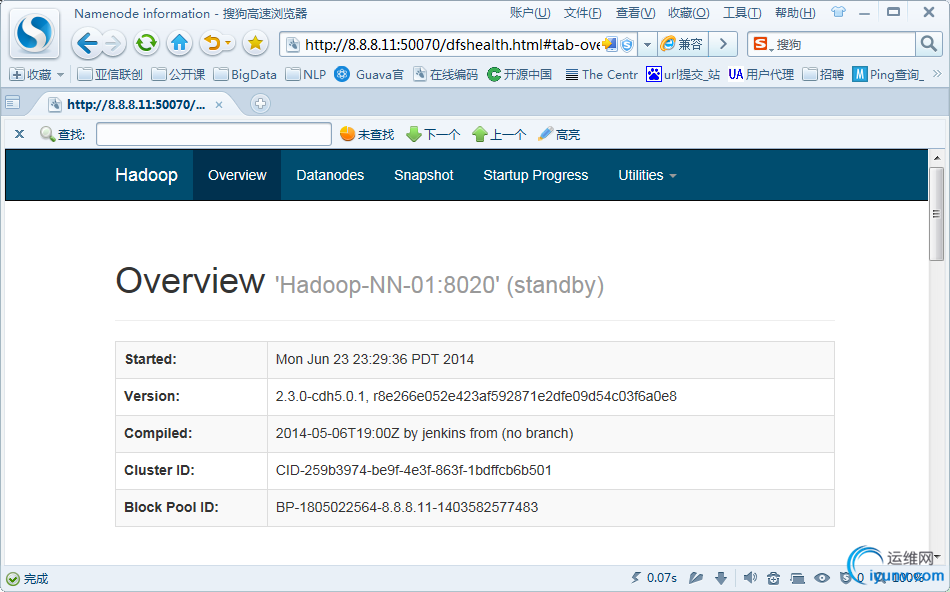

<2> Web UI:

Active node: http://8.8.8.11:50070

JSP:

Standby node: http://8.8.8.12:50070

JSP:

(7) Stop: stop-dfs.sh

5) Start YARN

(1) Start

<1> Cluster start

On Hadoop-NN-01, start YARN (the scripts are in $HADOOP_HOME/sbin):

[zero@CentOS-Cluster-01 hadoop]$ start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /home/zero/hadoop/hadoop-2.3.0-cdh5.0.1/logs/yarn-zero-resourcemanager-CentOS-Cluster-01.out

Hadoop-DN-02: starting nodemanager, logging to /home/zero/hadoop/hadoop-2.3.0-cdh5.0.1/logs/yarn-zero-nodemanager-CentOS-Cluster-04.out

Hadoop-DN-03: starting nodemanager, logging to /home/zero/hadoop/hadoop-2.3.0-cdh5.0.1/logs/yarn-zero-nodemanager-CentOS-Cluster-05.out

Hadoop-DN-01: starting nodemanager, logging to /home/zero/hadoop/hadoop-2.3.0-cdh5.0.1/logs/yarn-zero-nodemanager-CentOS-Cluster-03.out

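On Hadoop-NN-02, start the standby RM separately (start-yarn.sh only starts a ResourceManager on the host where it is run):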

[zero@CentOS-Cluster-02 hadoop]$ yarn-daemon.sh start resourcemanager

starting resourcemanager, logging to /home/zero/hadoop/hadoop-2.3.0-cdh5.0.1/logs/yarn-zero-resourcemanager-CentOS-Cluster-02.o

<2> Starting each process individually

ResourceManager (Hadoop-NN-01, Hadoop-NN-02):

[zero@CentOS-Cluster-01 hadoop-2.3.0-cdh5.0.1]$ yarn-daemon.sh start resourcemanager

NodeManager (Hadoop-DN-01, Hadoop-DN-02, Hadoop-DN-03):

[zero@CentOS-Cluster-03 hadoop-2.3.0-cdh5.0.1]$ yarn-daemon.sh start nodemanager

(2) Verify

<1> Processes:

ResourceManager nodes: Hadoop-NN-01, Hadoop-NN-02

[zero@CentOS-Cluster-01 hadoop]$ jps

7454 NameNode

14684 Jps

14617 ResourceManager

7729 DFSZKFailoverController

NodeManager nodes: Hadoop-DN-01, Hadoop-DN-02, Hadoop-DN-03

[zero@CentOS-Cluster-03 ~]$ jps

8965 Jps

5228 JournalNode

5137 DataNode

2514 QuorumPeerMain

8935 NodeManager

<2> Web UI

ResourceManager (Active): 8.8.8.11:23188

ResourceManager (Standby): 8.8.8.12:23188

(3) Stop

Hadoop-NN-01: stop-yarn.sh

Hadoop-NN-02: yarn-daemon.sh stop resourcemanager
