|

1.工作准备 1.1 mysql相关 配置准备 #打开查询日志 Set global general_log = 1; Set global general_log_file =’/data/mysqldata/data/localhost1.log’ 注意:log_output 这个参数需要设置为file,如果为table就不会记录到文件了 #打开满查询日志(默认是开的) Set gloabl log_slow_queries = 1; #修改满查询阀值(默认1s改成0.5s) Set global long_query_time = 0.5; #打开未使用索引查询记录 Set global log_queries_not_using_indexes = 1;

以上配置是,打开相关日志记录的参数,慢查询,和不适用索引查询的sql

2.1 QPS= select + update+ insert+delete 这里我们只统计数据库每秒执行的select,update,insert和delete数量(增量数据)。 因为数据库里面这些参数对应的值都是当前值, 而不是每秒的增量,所以,我们需要自己写脚本去搜集

Select ------>Com_select Update ------>Com_update Insert ------>Com_insert Delete ------>Com_delete

2.2 去qps增量值保存到文件 这里我们采用mysqladmin -u7roaddba -p -r -i 1 exttended-status来动态增量获取上面对应参数的值。 这里-r 是去增量值,-i 是刷新取值频率,这里是每秒

在mysql服务器,系统层面执行 mysqladmin -u7roaddba -p -r -i 1 ext |awk -F"|" "BEGIN{ count=0; }"'{ if($2 ~ /Variable_name/ && ++count == 1){\ print "---------- MySQL Command Status -- --";\ print "---Time---|select insert update delete";\ }\

else if ($2 ~ /Com_select /){com_select=$3;}\ else if ($2 ~ /Com_insert /){com_insert=$3;}\ else if ($2 ~ /Com_update /){com_update=$3;}\ else if ($2 ~ /Com_delete /){com_delete=$3;}\ else if ($2 ~ /Uptime / && count = 2){\ printf(" %s ",strftime("%Y-%m-%d %H:%M:%S"));\ printf(",%6d ,%6d ,%6d ,%6d\n",com_select,com_insert,com_update,com_delete);\ }}' |tee -a a.csv

这里会吧上面的几个指标值,按照‘,’ 分割来写入文件a.csv中保存下来 看下a.csv中的内容

3,收集慢查询和general log日志文件 3.1 将服务器的general log日志和慢查询日志下载到本地保存 如: 这里的慢查询日志名是 slow-query.log General log 日志名是 localhost.log

差不多5个小时,可能general log的日志文件就会填充到1G 大小以上

4,处理和分析阶段

4.1 将qps 抓取的数据,导入到本地空闲的mysql服务器中 这里我们导入到test库中 创建表 Create table qps(ctime datetime, svalue int(11), uvalue int(11), ivalue int(11), dvalue int(11)); 将搜集qps的文件a.csv 复制到mysql的数据目录下的test目录中 Mysql> load data infile ‘a.csv’ into qps fields terminated by ‘,’;

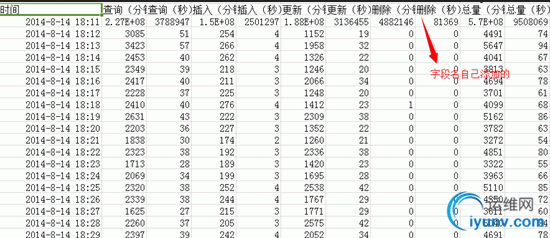

4.2 处理qps 表数据 这里我们通过select查询,获取分钟的数据量和分钟内平均每秒的数据量,然后将取得的数据导出到外部csv格式文件: select ctime as ‘统计时间’, sum(svalue) as ‘select/分钟’, sum(svalue) div 60 as ‘select/秒’, sum(ivalue) as ‘insrt/分钟’, sum(ivalue) div 60 as ‘insert/秒’, sum(uvalue) as ‘update/分钟’, sum(uvalue) div 60 as ‘update/秒’, sum(dvalue) as ‘delete/分钟’, sum(dvalue) div 60 as ‘delete/秒’, (sum(svalue)+sum(ivalue)+sum(uvalue)+sum(dvalue)) as ‘总量/分钟’, (sum(svalue)+sum(ivalue)+sum(uvalue)+sum(dvalue)) div 60 as ‘总量/秒’ from fengcelog group by date_format(ctime,'%Y-%m-%d %H:%i:00') into outfile 'db_log_hgtest_0001_20140815.csv' fields terminated by ',' 最后到处csv文件,在windows中 excel打开,如下

这个就很形象的展示了,统计时间,各种指标的值了 4.3 general log 日志处理分析 这里使用mysqlsla 去分析genernal 日志文件 a.安装mysqlsla .下载 mysqlsla [iyunv@localhost tmp]# wget http://hackmysql.com/scripts/mysqlsla-2.03.tar.gz --19:45:45-- http://hackmysql.com/scripts/mysqlsla-2.03.tar.gz Resolving hackmysql.com... 64.13.232.157 Connecting to hackmysql.com|64.13.232.157|:80... connected. HTTP request sent, awaiting response... 200 OK Length: 33674 (33K) [application/x-tar] Saving to: `mysqlsla-2.03.tar.gz.2'

100%[===========================================================================================>] 33,674 50.2K/s in 0.7s

19:45:47 (50.2 KB/s) - `mysqlsla-2.03.tar.gz.2' saved [33674/33674] b..解压 [iyunv@localhost tmp]# tar -zxvf mysqlsla-2.03.tar.gz mysqlsla-2.03/ mysqlsla-2.03/Changes mysqlsla-2.03/INSTALL mysqlsla-2.03/README mysqlsla-2.03/Makefile.PL mysqlsla-2.03/bin/ mysqlsla-2.03/bin/mysqlsla mysqlsla-2.03/META.yml mysqlsla-2.03/lib/ mysqlsla-2.03/lib/mysqlsla.pm mysqlsla-2.03/MANIFEST [iyunv@localhost tmp]# cd mysqlsla-2.03 [iyunv@localhost mysqlsla-2.03]# ls bin Changes INSTALL lib Makefile.PL MANIFEST META.yml README c.执行perl脚本检查包依赖关系 [iyunv@localhost mysqlsla-2.03]# perl Makefile.PL Checking if your kit is complete... Looks good Writing Makefile for mysqlsla d.安装 [iyunv@localhost mysqlsla-2.03]# make && make install; cp lib/mysqlsla.pm blib/lib/mysqlsla.pm cp bin/mysqlsla blib/script/mysqlsla /usr/bin/perl "-MExtUtils::MY" -e "MY->fixin(shift)" blib/script/mysqlsla Manifying blib/man3/mysqlsla.3pm Installing /usr/lib/perl5/site_perl/5.8.8/mysqlsla.pm Installing /usr/share/man/man3/mysqlsla.3pm Installing /usr/bin/mysqlsla Writing /usr/lib/perl5/site_perl/5.8.8/i386-linux-thread-multi/auto/mysqlsla/.packlist Appending installation info to /usr/lib/perl5/5.8.8/i386-linux-thread-multi/perllocal.pod [iyunv@localhost mysqlsla-2.03]# 使用mysqlsla 分析general log mysqlsla -lt general --top 200 general_log.txt > top_200.txt 这里-lt 是指定后面要分析的日志类型,这里我们使用-lt general

展示下分析后的top_200.txt文件内容 Report for general logs: game_gerernal.log 1.56M queries total, 4.17k unique Sorted by 'c_sum' ____________________________001 ___ Count : 735.58k (47.22%) Connection ID : 2528823 Database : Users : g_hgtest_0001@10.107.167.116 : 87.93% (646784) of query, 86.83% (1352745) of all users @ : 12.07% (88798) of query, 13.07% (203592) of all users Query abstract: SET autocommit=N Query sample: SET autocommit=1 ___________________________________________________ 002 ___ Count : 49.78k (3.20%)#这里统计sql执行了4.978万次 Connection ID : 2528823 #这里是connect id,从show processlist中可以找到 g_hgtest_0001@10.107.167.116 : 84.11% (41868) of query, 86.83% (1352745) of all users @ : 15.89% (7910) of query, 13.07% (203592) of all users Query abstract: SELECT * FROM t_u_newbie WHERE username='S' AND site='S' #执行的语句(通用写法) Query sample: select * from t_u_newbie where `username`='449' and `site`='hgtest_0001'#具体sql示例 _____________________________________________ 003 ___ Count : 43.84k (2.81%) Connection ID : 2528826 g_hgtest_0001@10.107.167.116 : 99.46% (43602) of query, 86.83% (1352745) of all users @ : 0.54% (238) of query, 13.07% (203592) of all users Query abstract: SELECT * FROM t_u_item WHERE userid = N AND (bagtype = N OR bagtype = N) AND isexist = N AND place != -N Query sample: select * from t_u_item where userId = 1002011 and (bagType = 2 or bagType = 18) and IsExist = 1 and place != -1 上面 简单的展示了general log中记录执行次数最多的3个sql

4.4 ,慢查询日志分析 同样适用mysqlsla可以分析慢查询中出现频率最高的sql, 当然也要注意关注那些没有适应索引查询的sql,(有些很明显字段是有加索引的,但是还出现在慢查询日志中) root]#mysqlsla -lt slow -top 200 query_slow.log > slow.log 1.04k queries total, 7 unique Sorted by 't_sum' Grand Totals: Time 0 s, Lock 0 s, Rows sent 18, Rows Examined 38.52 _____________________________________ 001 ___ Count : 865 (82.93%) Time : 213.588 ms total, 247 avg, 188 to 388 max (57.17%) 95% of Time : 197.697 ms total, 241 avg, 188 to 351 max Lock Time (s) : 79.913 ms total, 92 avg, 59 to 162 max (57.97%) 95% of Lock : 73.55 ms total, 90 avg, 59 to 134 max Rows sent : 0 avg, 0 to 0 max (0.00%) Rows examined : 33 avg, 33 to 33 max (74.10%) Database : db_game_hgtest_0002 Users : g_hgtest_0002@ 10.34.148.18 : 100.00% (865) of query, 99.81% (1041) of all users Query abstract: SET timestamp=N; UPDATE t_p_macrodrop SET currentcount = maxcount, resetdate = now() WHERE timestampdiff(hour,resetdate,now()) >= time; Query sample: SET timestamp=1408031756; update t_p_macrodrop set `currentCount` = `maxCount`, `resetDate` = now() where TIMESTAMPDIFF(HOUR,resetDate,now()) >= time; _______________________________ 002 ___ Count : 173 (16.59%) Time : 153.938 ms total, 890 avg, 826 to 989 max (41.20%) 95% of Time : 145.608 ms total, 888 avg, 826 to 906 max #执行消耗的总时间,平均时间,和最慢的一条执行时间 Lock Time (s) : 57.457 ms total, 332 avg, 310 to 365 max (41.68%) #锁时间 95% of Lock : 54.246 ms total, 331 avg, 310 to 349 max Rows sent : 0 avg, 0 to 0 max (0.00%) Rows examined : 41 avg, 41 to 41 max (18.41%) Database : Users : g_hgtest_0002@ 10.34.148.18 : 100.00% (173) of query, 99.81% (1041) of all users

Query abstract: SET timestamp=N; SELECT *, t_mv.membercount membercount_ FROM t_u_guild g, t_u_combine_load_guild c, (SELECT COUNT( mv.guildid) membercount,mv.guildid FROM v_guild_member_list mv GROUP BY mv.guildid ) t_mv WHERE g.guildid = t_mv.guildid AND g.guildid=c.guildid AND g.state !=N AND c.states = N LIMIT N; Query sample: SET timestamp=1408031999; SELECT *, t_mv.memberCount memberCount_ FROM t_u_guild g, t_u_combine_load_guild c, (SELECT COUNT( mv.guildID) memberCount,mv.guildID FROM v_guild_member_list mv GROUP BY mv.guildID ) t_mv WHERE g.guildID = t_mv.guildID AND g.guildID=c.guildID AND g.state !=3 AND c.states = 1 limit 100;

4,查找数据库中最大的表 4.1 根据最多数据的表,查看刚刚分析的general log中的某个insert 次数是否相对应(insert 次数多导致表大,这里insert次数并不是跟表数据量相等) 扫描大表: Select TABLE_SCHEMA,TABLE_NAME,TABLE_ROWS from information_schema.tables where TABLE_SCHEMA=’TABLENAME’ order by TABLE_ROWS desc limit 10;

Qps分析: 1,通过mysqladmin 每秒获取数据库的com_select com_update等值 2,打开genenral log, 保存下来,使用mysqlsla统计 3,打开慢查询日志,设定满查询时间为1秒, 分析慢查询 4,统计数据库中每个表的行数 5,跟踪一个玩家的所有sql 6,压测期间,让开发记住当天的操作

|