
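A socket describes an IP address and a port and acts as a handle to a communication link. Applications typically send requests to the network, or answer network requests, through a socket. Sockets originated in Unix, and one of the basic philosophies of Unix/Linux is "everything is a file": files are handled with an [open] → [read/write] → [close] pattern. A socket is one implementation of that pattern. It is a special kind of file, and the socket functions are the operations on it (read/write I/O, open, close). For a detailed introduction to sockets, see: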

http://blog.csdn.net/sight_/article/details/8138802

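Differences between socket and file:

- file objects perform [open] [read/write] [close] on one specific file

- the socket module performs [open] [read/write] [close] on server-side and client-side sockets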
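The following sketch makes the "everything is a file" idea concrete. It is written in Python 3 and needs `socket.socketpair()`, which is available on Unix and, from Python 3.5, on Windows. It wraps one end of a connected socket pair in a file object with `makefile()` and reads from it using an ordinary file method. `socketpair()` is used only so that the example does not need a server.

```python
import socket

def read_line_via_file():
    # socketpair() returns two sockets that are already connected to each other
    a, b = socket.socketpair()
    try:
        # like an open file, each socket is backed by an OS file descriptor
        assert a.fileno() >= 0 and b.fileno() >= 0
        a.sendall(b"hello socket\n")          # "write" on one end
        with b.makefile("rb") as f:           # wrap the other end in a file object
            return f.readline()               # "read" with an ordinary file method
    finally:
        a.close()
        b.close()

print(read_line_via_file())
```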

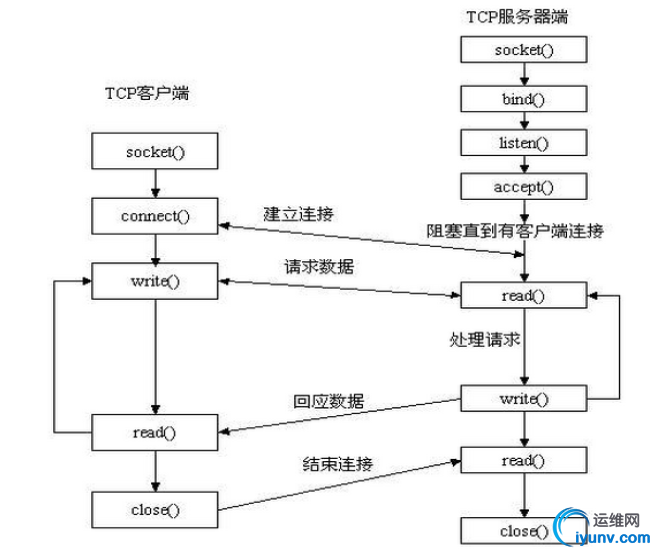

Socket communication works as shown below:

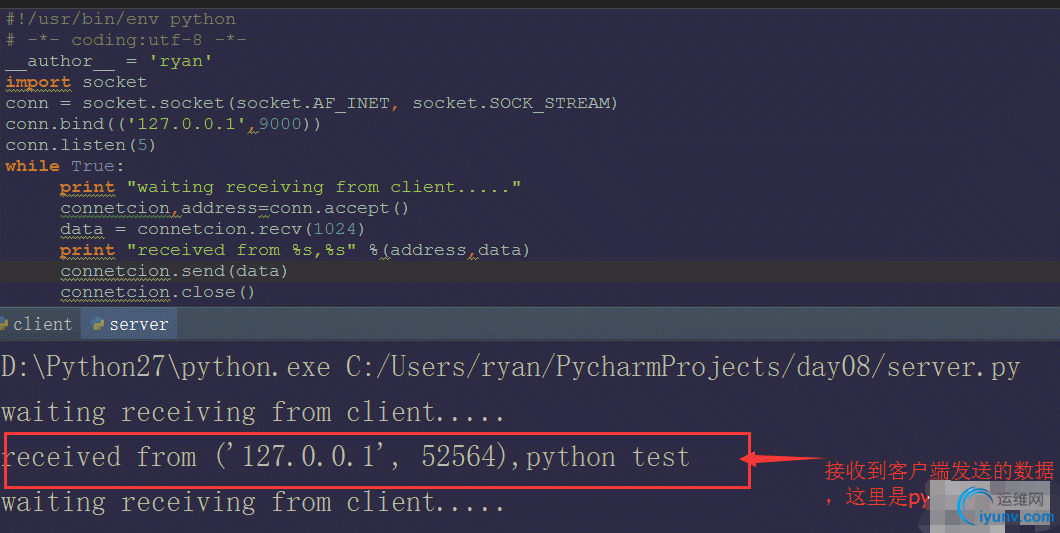

The following code implements the flow in the diagram above:

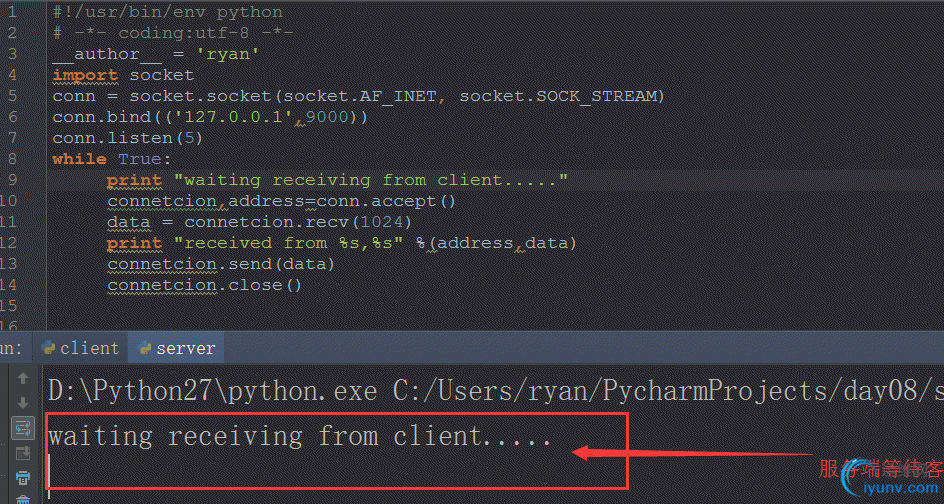

socket server

```python
#!/usr/bin/env python
# -*- coding:utf-8 -*-
__author__ = 'ryan'

import socket

conn = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
conn.bind(('127.0.0.1', 9000))
conn.listen(5)

while True:
    print "waiting receiving from client....."
    connection, address = conn.accept()
    data = connection.recv(1024)
    print "received from %s,%s" % (address, data)
    connection.send(data)
    connection.close()
```

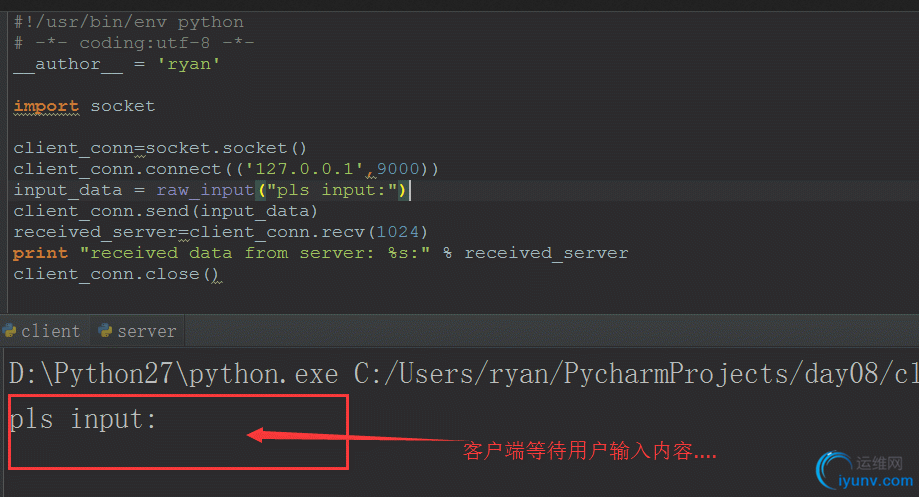

socket client

```python
#!/usr/bin/env python
# -*- coding:utf-8 -*-
__author__ = 'ryan'

import socket

client_conn = socket.socket()
client_conn.connect(('127.0.0.1', 9000))
input_data = raw_input("pls input:")
client_conn.send(input_data)
received_server = client_conn.recv(1024)
print "received data from server: %s" % received_server
client_conn.close()
```
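The two scripts above have to run in separate terminals. For experimenting, you can reproduce the same echo exchange in a single Python 3 process by running the server steps in a background thread. This is a sketch under two assumptions. First, it binds to port 0 so the OS picks a free port, which avoids clashing with port 9000. Second, it serves exactly one client.

```python
import socket
import threading

def serve_one_echo(listener):
    # same steps as the server script: accept, recv, send back, close
    connection, address = listener.accept()
    with connection:
        data = connection.recv(1024)
        connection.sendall(data)

def echo_roundtrip(message):
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))   # port 0: the OS picks a free port
    listener.listen(5)
    listener.settimeout(5)            # so a failed client cannot hang the thread forever
    port = listener.getsockname()[1]
    worker = threading.Thread(target=serve_one_echo, args=(listener,))
    worker.start()
    try:
        # same steps as the client script: connect, send, recv, close
        client = socket.socket()
        client.connect(("127.0.0.1", port))
        with client:
            client.sendall(message)
            reply = client.recv(1024)
    finally:
        worker.join()
        listener.close()
    return reply

print(echo_roundtrip(b"python test"))
```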

分别启动服务端和客户端程序:

server运行:

client运行

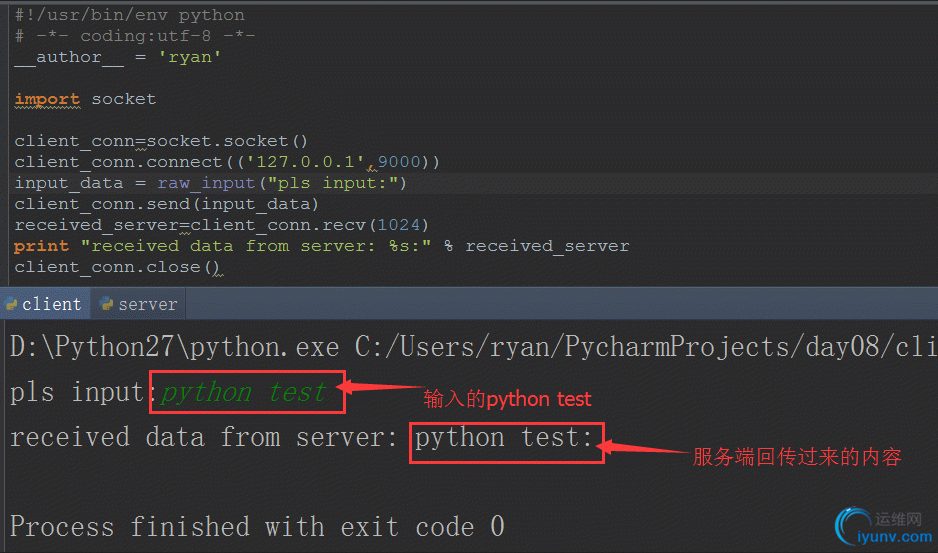

此时输入内容(这里我们输入python test)

此时server 端状态:

Next, let's walk through the code:

Web service application (server):

```python
#!/usr/bin/env python
# -*- coding:utf-8 -*-
__author__ = 'ryan'

# import the socket module
import socket

# define the handle_request function
def handle_request(client):
    # buf holds what recv() received on the client connection (at most 1024 bytes)
    buf = client.recv(1024)
    # send the response: a status line, then the body
    client.sendall("HTTP/1.1 200 OK\r\n\r\n")
    client.sendall("Hello, World")

# define the main function
def main():
    # instantiate the socket class as the object sock, i.e. create a TCP/IPv4 socket
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    # call bind with ('localhost', 8080): the address and port to listen on
    sock.bind(('localhost', 8080))
    # call listen with 5: at most 5 connections that have arrived but have not yet
    # been accept()ed can wait in the queue; further attempts may be refused
    sock.listen(5)

    while True:
        # accept() waits for a client request and blocks here until a client connects.
        # connection is a new socket object for this client, address is the client's
        # (IP, port). connection is like the string in a tin-can telephone
        # (two cans joined by a thread).
        connection, address = sock.accept()

        # receive at most 1024 bytes of the client's request through connection
        # (the string/pipe); this also blocks until the client sends data
        buf = connection.recv(1024)
        # handle_request(connection)

        # send() copies the data into the kernel's send buffer, which transmits it to
        # the client according to its own algorithm; it returns how many bytes were
        # accepted, which may be fewer than requested
        connection.send("HTTP/1.1 200 OK\r\n\r\n")
        # sendall() also writes into the send buffer, but keeps sending until all of
        # the data has been handed over (or an error is raised)
        connection.sendall("Hello, World")

        # close the connection to this client
        connection.close()

if __name__ == '__main__':
    main()
```

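Note: the server blocks in two places:

① connection, address = sock.accept()  # waits for a client to connect; blocks until one does

② connection.recv(1024)  # waits for the client to send data; blocks until it arrives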
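You can bound both blocking points with a timeout instead of waiting forever. The following Python 3 sketch shows `accept()` and `recv()` each giving up with `socket.timeout` when no client connects and no data arrives. The function names and the 0.2 s timeout are illustrative choices.

```python
import socket

def accept_or_timeout(seconds):
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))
    listener.listen(5)
    listener.settimeout(seconds)                  # bound the wait in accept()
    try:
        connection, address = listener.accept()   # blocking point 1
        connection.close()
        return "connected"
    except socket.timeout:
        return "accept() timed out"
    finally:
        listener.close()

def recv_or_timeout(seconds):
    a, b = socket.socketpair()    # a connected pair; nothing is ever written to a
    b.settimeout(seconds)         # bound the wait in recv()
    try:
        return b.recv(1024)       # blocking point 2
    except socket.timeout:
        return "recv() timed out"
    finally:
        a.close()
        b.close()

print(accept_or_timeout(0.2))
print(recv_or_timeout(0.2))
```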

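Note the difference between sock.close() and connection.close():

sock.close() closes the server's listening socket itself. After that the server can no longer accept new connections, so the server program effectively stops serving.

connection.close() closes only the connection between the server and one client. The server socket keeps running and can accept further clients.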
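The following Python 3 sketch illustrates that difference. It closes each per-client `connection` and then serves a second client with the same listening socket. Only after that does it close the listener. `create_connection()` is used for brevity, and the function name is illustrative.

```python
import socket

def serve_two_clients():
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))
    listener.listen(5)
    port = listener.getsockname()[1]
    replies = []
    for text in (b"first", b"second"):
        client = socket.create_connection(("127.0.0.1", port))
        connection, address = listener.accept()
        connection.sendall(text)
        connection.close()                 # ends this client's connection only
        replies.append(client.recv(1024))  # the listener is still open for the next client
        client.close()
    listener.close()                       # now the listening socket itself is gone
    return replies, listener.fileno()      # fileno() is -1 after close() in Python 3

print(serve_two_clients())
```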

Web service application (client):

```python
#!/usr/bin/env python
# -*- coding:utf-8 -*-
__author__ = 'ryan'

import socket

# instantiate a socket object obj
obj = socket.socket()

# call connect with ('localhost', 8080), i.e. establish the connection
obj.connect(('localhost', 8080))

# call send with "this is a client", i.e. send data to the server
obj.send("this is a client")

# call recv with 1024, i.e. receive at most 1024 bytes
recv_server_data = obj.recv(1024)
print recv_server_data

# call close, i.e. close the connection
obj.close()
```

Start the server and then the client. The client prints:

```
D:\Python27\python.exe C:/Users/ryan/PycharmProjects/day08/clientdemo.py
HTTP/1.1 200 OK
```
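The client most likely prints only the status line because a single `recv(1024)` returns whatever has arrived so far, and the server's later send may not have arrived yet. Below is a Python 3 sketch of a helper that keeps calling `recv()` until the peer closes the connection, which `recv()` signals by returning an empty bytes object. The name `recv_until_closed` is hypothetical, and a socket pair stands in for the real server and client.

```python
import socket

def recv_until_closed(sock, bufsize=1024):
    # recv() returns b"" only once the peer has closed its side of the connection
    chunks = []
    while True:
        chunk = sock.recv(bufsize)
        if not chunk:
            break
        chunks.append(chunk)
    return b"".join(chunks)

# a connected pair standing in for the web server and the client
server_side, client_side = socket.socketpair()
server_side.sendall(b"HTTP/1.1 200 OK\r\n\r\n")
server_side.sendall(b"Hello, World")
server_side.close()
response = recv_until_closed(client_side)
client_side.close()
print(response)
```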

Common functions in detail:

sock = socket.socket(socket.AF_INET,socket.SOCK_STREAM,0)

Parameter 1: address family

socket.AF_INET IPv4 (default)

socket.AF_INET6 IPv6

socket.AF_UNIX only for inter-process communication on a single Unix system

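Parameter 2: type

socket.SOCK_STREAM stream socket, for TCP (default)

socket.SOCK_DGRAM datagram socket, for UDP

socket.SOCK_RAW raw socket. Ordinary sockets cannot handle network messages such as ICMP and IGMP, but SOCK_RAW can. SOCK_RAW can also handle special IPv4 packets, and with the IP_HDRINCL socket option the user can build the IP header. SOCK_RAW gives low-level access to the underlying protocols for special operations such as sending ICMP messages. Its use is usually restricted to programs run by advanced users or administrators.

socket.SOCK_RDM a reliable form of UDP: delivery of datagrams is guaranteed, but their order is not.

socket.SOCK_SEQPACKET reliable sequenced packet service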

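Parameter 3: protocol

0 (default): the protocol associated with the given address family. With 0, the system automatically chooses a suitable protocol based on the address family and socket type.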
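The following short Python 3 sketch creates sockets with these parameters and reads the family and type back. It also checks that `socket.socket()` with no arguments uses the defaults listed above (AF_INET, SOCK_STREAM). In Python 3 these attributes are reported as enum members.

```python
import socket

tcp = socket.socket(socket.AF_INET, socket.SOCK_STREAM, 0)
udp = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, 0)
default = socket.socket()          # no arguments: the defaults are used

tcp_info = (tcp.family, tcp.type)
udp_info = (udp.family, udp.type)
default_info = (default.family, default.type)

for s in (tcp, udp, default):
    s.close()

print(tcp_info, udp_info, default_info)
```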

UDP example

Server:

```python
import socket

ip_port = ('127.0.0.1', 9999)
sk = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, 0)
sk.bind(ip_port)

while True:
    data = sk.recv(1024)
    print data
```

Client:

```python
import socket

ip_port = ('127.0.0.1', 9999)
sk = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, 0)

while True:
    inp = raw_input('data:').strip()
    if inp == 'exit':
        break
    sk.sendto(inp, ip_port)

sk.close()
```
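Unlike TCP, the UDP side needs no `listen()` or `accept()`, and the sender needs no `connect()`. The following Python 3 sketch runs both ends in one process. It assumes an OS-chosen port instead of 9999, and it uses `recvfrom()` to also learn the sender's address.

```python
import socket

def udp_roundtrip(message):
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    receiver.bind(("127.0.0.1", 0))      # UDP server: bind only, no listen()/accept()
    receiver.settimeout(2)               # don't wait forever if the datagram is lost
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        # no connect(): the destination address travels with each datagram
        sender.sendto(message, receiver.getsockname())
        data, peer = receiver.recvfrom(1024)    # also returns the sender's address
        same_port = peer[1] == sender.getsockname()[1]
        return data, same_port
    finally:
        sender.close()
        receiver.close()

print(udp_roundtrip(b"hello udp"))
```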

sock.bind(address)

Binds the socket to an address. The format of address depends on the address family. Under AF_INET, the address is a (host, port) tuple.

sock.listen(backlog)

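Starts listening for incoming connections. backlog specifies the maximum number of pending connections before new ones are refused.

A backlog of 5 means that at most 5 connections can wait in the queue: connections the kernel has already received but the server has not yet picked up with accept().

This value cannot be arbitrarily large, because the kernel has to maintain the connection queue.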

sock.setblocking(bool)

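Sets whether the socket blocks (default True). If set to False, accept() and recv() raise an error immediately when no connection or data is available.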
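The following Python 3 sketch shows non-blocking mode on one end of a socket pair. With `setblocking(False)`, `recv()` raises `BlockingIOError`, which is Python 3's subclass of `socket.error`/`OSError` for "would block", instead of waiting. Switching back with `setblocking(True)` restores the waiting behaviour.

```python
import socket

a, b = socket.socketpair()
b.setblocking(False)          # recv() must not wait
try:
    b.recv(1024)
    first_try = "data"
except BlockingIOError:       # raised instead of waiting when nothing has arrived
    first_try = "no data yet"

a.sendall(b"ping")
b.setblocking(True)           # back to the default: recv() waits for data
second_try = b.recv(1024)
a.close()
b.close()
print(first_try, second_try)
```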

sock.accept()

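Accepts a connection and returns (conn, address). conn is a new socket object that can be used to send and receive data on this connection, and address is the address of the connecting client. accept() waits (blocks) until a TCP client connects.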

sock.connect(address)

Connects to the socket at address. The address is generally a (hostname, port) tuple. If the connection fails, a socket.error exception is raised.

sock.connect_ex(address)

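Same as connect(), but returns an error indicator instead of raising an exception: 0 on success, or an error code on failure, e.g. 10061 (connection refused, on Windows).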
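Because `connect_ex()` returns a code instead of raising, it is handy for checking whether something is listening on a port. The following Python 3 sketch uses a hypothetical helper, `connect_status`, and compares a port with a listener against the same port after the listener is closed. The exact non-zero code is platform-specific (ECONNREFUSED on Linux, 10061 on Windows).

```python
import errno
import socket

def connect_status(host, port, timeout=1.0):
    s = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    s.settimeout(timeout)
    try:
        return s.connect_ex((host, port))   # 0 on success, otherwise an error code
    finally:
        s.close()

listener = socket.socket()
listener.bind(("127.0.0.1", 0))
listener.listen(1)
port = listener.getsockname()[1]
open_status = connect_status("127.0.0.1", port)    # someone is listening
listener.close()
closed_status = connect_status("127.0.0.1", port)  # nobody is listening any more
print(open_status, closed_status, errno.errorcode.get(closed_status))
```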

sock.close()

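Closes the socket.

sock.recv(bufsize[,flag])

Receives data from the socket. The data is returned as a string, and bufsize specifies the maximum amount to receive. flag provides additional information about the message and can usually be ignored.

sock.recvfrom(bufsize[,flag])

Similar to recv(), but returns (data, address), where data is a string containing the received data and address is the address of the socket that sent it.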

sock.send(string[,flag])

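Sends the data in string to the connected socket. Returns the number of bytes sent, which may be smaller than the size of string; that is, not all of the data is necessarily sent.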

sock.sendall(string[,flag])

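Sends the data in string to the connected socket, but tries to send all of it before returning. Returns None on success and raises an exception on failure. Internally it keeps sending in a loop until all of the data has been sent.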
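The following Python 3 sketch illustrates the difference. The hypothetical `send_everything` helper does conceptually what `sendall()` does: it calls `send()` repeatedly, advancing by however many bytes each call accepted. CPython's real `sendall()` is implemented in C and is not this code. A reader thread drains the other end so the 1 MiB payload, which is usually larger than a socket buffer, can flow.

```python
import socket
import threading

def send_everything(sock, data):
    # conceptually what sendall() does: call send() until every byte is accepted
    view = memoryview(data)
    total = 0
    while total < len(data):
        sent = sock.send(view[total:])   # may accept only part of what is offered
        total += sent
    return total

def receive_exactly(sock, size, out):
    chunks, received = [], 0
    while received < size:
        chunk = sock.recv(65536)
        if not chunk:
            break
        chunks.append(chunk)
        received += len(chunk)
    out.append(b"".join(chunks))

payload = b"x" * (1024 * 1024)
a, b = socket.socketpair()
result = []
reader = threading.Thread(target=receive_exactly, args=(b, len(payload), result))
reader.start()
sent_total = send_everything(a, payload)
reader.join()
a.close()
b.close()
print(sent_total, len(result[0]))
```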

sock.sendto(string[,flag],address)

Sends data to the socket. address is an (ipaddr, port) tuple specifying the remote address. Returns the number of bytes sent. This function is mainly used with UDP.

sock.settimeout(timeout)

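Sets a timeout on blocking socket operations. timeout is a float in seconds, and None means no timeout. The timeout should generally be set right after the socket is created, because connection operations may need it (e.g. a client that waits at most 5 s while connecting).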
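`settimeout()` and `setblocking()` control the same setting. The following Python 3 sketch reads it back with `gettimeout()` after each change. It assumes `socket.setdefaulttimeout()` has not been called, so a new socket starts with no timeout.

```python
import socket

s = socket.socket()
values = [s.gettimeout()]        # None: blocking, no timeout
s.settimeout(5)
values.append(s.gettimeout())    # 5.0: blocking operations give up after 5 s
s.settimeout(0)
values.append(s.gettimeout())    # 0.0: non-blocking, same as setblocking(False)
s.setblocking(True)
values.append(s.gettimeout())    # None again: same as settimeout(None)
s.close()
print(values)
```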

sock.getpeername()

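Returns the remote address of the connected socket, usually an (ipaddr, port) tuple.

sock.getsockname()

Returns the socket's own address, usually an (ipaddr, port) tuple.

sock.fileno()

Returns the socket's file descriptor.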
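For a connected pair, each side's own address is the other side's remote address. The following Python 3 sketch checks this with `getsockname()`/`getpeername()`, compares the address returned by `accept()`, and confirms that the three socket objects have distinct file descriptors.

```python
import socket

listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
listener.bind(("127.0.0.1", 0))
listener.listen(1)
client = socket.create_connection(listener.getsockname())
connection, address = listener.accept()

checks = {
    # the client's local address is the server connection's remote address
    "client local == server remote": client.getsockname() == connection.getpeername(),
    "client remote == server local": client.getpeername() == connection.getsockname(),
    # accept() returns that same client address
    "accept() address == client local": address == client.getsockname(),
    # every socket object has its own descriptor
    "distinct descriptors": len({listener.fileno(), client.fileno(), connection.fileno()}) == 3,
}
for s in (client, connection, listener):
    s.close()
print(checks)
```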

Example: a smart customer-service robot

Server:

```python
#!/usr/bin/env python
# -*- coding:utf-8 -*-
import socket

ip_port = ('127.0.0.1', 8888)
sk = socket.socket()
sk.bind(ip_port)
sk.listen(5)

while True:
    conn, address = sk.accept()
    conn.sendall('Welcome to 10086. Enter 1xxx, or 0 for a human operator.')
    while True:
        data = conn.recv(1024)
        # an empty string means the client has disconnected
        if not data or data == 'exit':
            break
        elif data == '0':
            conn.sendall('Your call may be recorded. blah blah blah...')
        else:
            conn.sendall('Please try again.')
    conn.close()
```

Client:

```python
#!/usr/bin/env python
# -*- coding:utf-8 -*-
import socket

# must match the port the server listens on
ip_port = ('127.0.0.1', 8888)
sk = socket.socket()
sk.connect(ip_port)
# each blocking operation (here recv) now waits at most 5 seconds
sk.settimeout(5)

while True:
    data = sk.recv(1024)
    print 'receive:', data
    inp = raw_input('please input:')
    sk.sendall(inp)
    if inp == 'exit':
        break

sk.close()
```
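To test the robot's dialogue without two terminals, you can move the per-connection loop into a function and drive it from a scripted client. The following is a Python 3 sketch. `robot_session` is a hypothetical refactoring of the server loop above, and a socket pair replaces the real TCP connection. The client waits for each reply before sending the next message, so the messages do not run together in the stream.

```python
import socket
import threading

def robot_session(conn):
    # hypothetical helper: the server's per-connection loop from above
    conn.sendall(b"Welcome to 10086. Enter 1xxx, or 0 for a human operator.")
    while True:
        data = conn.recv(1024)
        if not data or data == b"exit":
            break
        if data == b"0":
            conn.sendall(b"Your call may be recorded.")
        else:
            conn.sendall(b"Please try again.")
    conn.close()

server_side, client_side = socket.socketpair()
worker = threading.Thread(target=robot_session, args=(server_side,))
worker.start()
transcript = [client_side.recv(1024)]
for message in (b"0", b"123"):
    client_side.sendall(message)
    transcript.append(client_side.recv(1024))
client_side.sendall(b"exit")
worker.join()
client_side.close()
print(transcript)
```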

Source code of the socket module:

```python
# Wrapper module for _socket, providing some additional facilities
# implemented in Python.

"""\
This module provides socket operations and some related functions.
On Unix, it supports IP (Internet Protocol) and Unix domain sockets.
On other systems, it only supports IP. Functions specific for a
socket are available as methods of the socket object.

Functions:

socket() -- create a new socket object
socketpair() -- create a pair of new socket objects
fromfd() -- create a socket object from an open file descriptor
gethostname() -- return the current hostname
gethostbyname() -- map a hostname to its IP number
gethostbyaddr() -- map an IP number or hostname to DNS info
getservbyname() -- map a service name and a protocol name to a port number
getprotobyname() -- map a protocol name (e.g. 'tcp') to a number
ntohs(), ntohl() -- convert 16, 32 bit int from network to host byte order
htons(), htonl() -- convert 16, 32 bit int from host to network byte order
inet_aton() -- convert IP addr string (123.45.67.89) to 32-bit packed format
inet_ntoa() -- convert 32-bit packed format IP to string (123.45.67.89)
ssl() -- secure socket layer support (only available if configured)
socket.getdefaulttimeout() -- get the default timeout value
socket.setdefaulttimeout() -- set the default timeout value
create_connection() -- connects to an address, with an optional timeout and optional source address.

not available on all platforms!

Special objects:

SocketType -- type object for socket objects
error -- exception raised for I/O errors
has_ipv6 -- boolean value indicating if IPv6 is supported

Integer constants:

AF_INET, AF_UNIX -- socket domains (first argument to socket() call)
SOCK_STREAM, SOCK_DGRAM, SOCK_RAW -- socket types (second argument)

Many other constants may be defined; these may be used in calls to
the setsockopt() and getsockopt() methods.
"""

################################### code starts here ######################################

import _socket
from _socket import *
from functools import partial
from types import MethodType

try:
    import _ssl
except ImportError:
    # no SSL support
    pass
else:
    def ssl(sock, keyfile=None, certfile=None):
        # we do an internal import here because the ssl
        # module imports the socket module
        import ssl as _realssl
        warnings.warn("socket.ssl() is deprecated. Use ssl.wrap_socket() instead.",
                      DeprecationWarning, stacklevel=2)
        return _realssl.sslwrap_simple(sock, keyfile, certfile)

    # we need to import the same constants we used to...
    from _ssl import SSLError as sslerror
    from _ssl import \
         RAND_add, \
         RAND_status, \
         SSL_ERROR_ZERO_RETURN, \
         SSL_ERROR_WANT_READ, \
         SSL_ERROR_WANT_WRITE, \
         SSL_ERROR_WANT_X509_LOOKUP, \
         SSL_ERROR_SYSCALL, \
         SSL_ERROR_SSL, \
         SSL_ERROR_WANT_CONNECT, \
         SSL_ERROR_EOF, \
         SSL_ERROR_INVALID_ERROR_CODE
    try:
        from _ssl import RAND_egd
    except ImportError:
        # LibreSSL does not provide RAND_egd
        pass

import os, sys, warnings

try:
    from cStringIO import StringIO
except ImportError:
    from StringIO import StringIO

try:
    import errno
except ImportError:
    errno = None
EBADF = getattr(errno, 'EBADF', 9)
EINTR = getattr(errno, 'EINTR', 4)

__all__ = ["getfqdn", "create_connection"]
__all__.extend(os._get_exports_list(_socket))

_realsocket = socket

# WSA error codes
if sys.platform.lower().startswith("win"):
    errorTab = {}
    errorTab[10004] = "The operation was interrupted."
    errorTab[10009] = "A bad file handle was passed."
    errorTab[10013] = "Permission denied."
    errorTab[10014] = "A fault occurred on the network??" # WSAEFAULT
    errorTab[10022] = "An invalid operation was attempted."
    errorTab[10035] = "The socket operation would block"
    errorTab[10036] = "A blocking operation is already in progress."
    errorTab[10048] = "The network address is in use."
    errorTab[10054] = "The connection has been reset."
    errorTab[10058] = "The network has been shut down."
    errorTab[10060] = "The operation timed out."
    errorTab[10061] = "Connection refused."
    errorTab[10063] = "The name is too long."
    errorTab[10064] = "The host is down."
    errorTab[10065] = "The host is unreachable."
    __all__.append("errorTab")


def getfqdn(name=''):
    """Get fully qualified domain name from name.

    An empty argument is interpreted as meaning the local host.

    First the hostname returned by gethostbyaddr() is checked, then
    possibly existing aliases. In case no FQDN is available, hostname
    from gethostname() is returned.
    """
    name = name.strip()
    if not name or name == '0.0.0.0':
        name = gethostname()
    try:
        hostname, aliases, ipaddrs = gethostbyaddr(name)
    except error:
        pass
    else:
        aliases.insert(0, hostname)
        for name in aliases:
            if '.' in name:
                break
        else:
            name = hostname
    return name


_socketmethods = (
    'bind', 'connect', 'connect_ex', 'fileno', 'listen',
    'getpeername', 'getsockname', 'getsockopt', 'setsockopt',
    'sendall', 'setblocking',
    'settimeout', 'gettimeout', 'shutdown')

if os.name == "nt":
    _socketmethods = _socketmethods + ('ioctl',)

if sys.platform == "riscos":
    _socketmethods = _socketmethods + ('sleeptaskw',)

# All the method names that must be delegated to either the real socket
# object or the _closedsocket object.
_delegate_methods = ("recv", "recvfrom", "recv_into", "recvfrom_into",
                     "send", "sendto")

class _closedsocket(object):
    __slots__ = []
    def _dummy(*args):
        raise error(EBADF, 'Bad file descriptor')
    # All _delegate_methods must also be initialized here.
    send = recv = recv_into = sendto = recvfrom = recvfrom_into = _dummy
    __getattr__ = _dummy

# Wrapper around platform socket objects. This implements
# a platform-independent dup() functionality. The
# implementation currently relies on reference counting
# to close the underlying socket object.
class _socketobject(object):

    __doc__ = _realsocket.__doc__

    __slots__ = ["_sock", "__weakref__"] + list(_delegate_methods)

    def __init__(self, family=AF_INET, type=SOCK_STREAM, proto=0, _sock=None):
        if _sock is None:
            _sock = _realsocket(family, type, proto)
        self._sock = _sock
        for method in _delegate_methods:
            setattr(self, method, getattr(_sock, method))

    def close(self, _closedsocket=_closedsocket,
              _delegate_methods=_delegate_methods, setattr=setattr):
        # This function should not reference any globals. See issue #808164.
        self._sock = _closedsocket()
        dummy = self._sock._dummy
        for method in _delegate_methods:
            setattr(self, method, dummy)
    close.__doc__ = _realsocket.close.__doc__

    def accept(self):
        sock, addr = self._sock.accept()
        return _socketobject(_sock=sock), addr
    accept.__doc__ = _realsocket.accept.__doc__

    def dup(self):
        """dup() -> socket object

        Return a new socket object connected to the same system resource."""
        return _socketobject(_sock=self._sock)

    def makefile(self, mode='r', bufsize=-1):
        """makefile([mode[, bufsize]]) -> file object

        Return a regular file object corresponding to the socket. The mode
        and bufsize arguments are as for the built-in open() function."""
        return _fileobject(self._sock, mode, bufsize)

    family = property(lambda self: self._sock.family, doc="the socket family")
    type = property(lambda self: self._sock.type, doc="the socket type")
    proto = property(lambda self: self._sock.proto, doc="the socket protocol")

def meth(name,self,*args):
    return getattr(self._sock,name)(*args)

for _m in _socketmethods:
    p = partial(meth,_m)
    p.__name__ = _m
    p.__doc__ = getattr(_realsocket,_m).__doc__
    m = MethodType(p,None,_socketobject)
    setattr(_socketobject,_m,m)

socket = SocketType = _socketobject

class _fileobject(object):
    """Faux file object attached to a socket object."""

    default_bufsize = 8192
    name = "<socket>"

    __slots__ = ["mode", "bufsize", "softspace",
                 # "closed" is a property, see below
                 "_sock", "_rbufsize", "_wbufsize", "_rbuf", "_wbuf", "_wbuf_len",
                 "_close"]

    def __init__(self, sock, mode='rb', bufsize=-1, close=False):
        self._sock = sock
        self.mode = mode # Not actually used in this version
        if bufsize < 0:
            bufsize = self.default_bufsize
        self.bufsize = bufsize
        self.softspace = False
        # _rbufsize is the suggested recv buffer size. It is *strictly*
        # obeyed within readline() for recv calls. If it is larger than
        # default_bufsize it will be used for recv calls within read().
        if bufsize == 0:
            self._rbufsize = 1
        elif bufsize == 1:
            self._rbufsize = self.default_bufsize
        else:
            self._rbufsize = bufsize
        self._wbufsize = bufsize
        # We use StringIO for the read buffer to avoid holding a list
        # of variously sized string objects which have been known to
        # fragment the heap due to how they are malloc()ed and often
        # realloc()ed down much smaller than their original allocation.
        self._rbuf = StringIO()
        self._wbuf = [] # A list of strings
        self._wbuf_len = 0
        self._close = close

    def _getclosed(self):
        return self._sock is None
    closed = property(_getclosed, doc="True if the file is closed")

    def close(self):
        try:
            if self._sock:
                self.flush()
        finally:
            if self._close:
                self._sock.close()
            self._sock = None

    def __del__(self):
        try:
            self.close()
        except:
            # close() may fail if __init__ didn't complete
            pass

    def flush(self):
        if self._wbuf:
            data = "".join(self._wbuf)
            self._wbuf = []
            self._wbuf_len = 0
            buffer_size = max(self._rbufsize, self.default_bufsize)
            data_size = len(data)
            write_offset = 0
            view = memoryview(data)
            try:
                while write_offset < data_size:
                    self._sock.sendall(view[write_offset:write_offset+buffer_size])
                    write_offset += buffer_size
            finally:
                if write_offset < data_size:
                    remainder = data[write_offset:]
                    del view, data # explicit free
                    self._wbuf.append(remainder)
                    self._wbuf_len = len(remainder)

    def fileno(self):
        return self._sock.fileno()

    def write(self, data):
        data = str(data) # XXX Should really reject non-string non-buffers
        if not data:
            return
        self._wbuf.append(data)
        self._wbuf_len += len(data)
        if (self._wbufsize == 0 or
            (self._wbufsize == 1 and '\n' in data) or
            (self._wbufsize > 1 and self._wbuf_len >= self._wbufsize)):
            self.flush()

    def writelines(self, list):
        # XXX We could do better here for very long lists
        # XXX Should really reject non-string non-buffers
        lines = filter(None, map(str, list))
        self._wbuf_len += sum(map(len, lines))
        self._wbuf.extend(lines)
        if (self._wbufsize <= 1 or
            self._wbuf_len >= self._wbufsize):
            self.flush()

    def read(self, size=-1):
        # Use max, disallow tiny reads in a loop as they are very inefficient.
        # We never leave read() with any leftover data from a new recv() call
        # in our internal buffer.
        rbufsize = max(self._rbufsize, self.default_bufsize)
        # Our use of StringIO rather than lists of string objects returned by
        # recv() minimizes memory usage and fragmentation that occurs when
        # rbufsize is large compared to the typical return value of recv().
        buf = self._rbuf
        buf.seek(0, 2) # seek end
        if size < 0:
            # Read until EOF
            self._rbuf = StringIO() # reset _rbuf. we consume it via buf.
            while True:
                try:
                    data = self._sock.recv(rbufsize)
                except error, e:
                    if e.args[0] == EINTR:
                        continue
                    raise
                if not data:
                    break
                buf.write(data)
            return buf.getvalue()
        else:
            # Read until size bytes or EOF seen, whichever comes first
            buf_len = buf.tell()
            if buf_len >= size:
                # Already have size bytes in our buffer? Extract and return.
                buf.seek(0)
                rv = buf.read(size)
                self._rbuf = StringIO()
                self._rbuf.write(buf.read())
                return rv

            self._rbuf = StringIO() # reset _rbuf. we consume it via buf.
            while True:
                left = size - buf_len
                # recv() will malloc the amount of memory given as its
                # parameter even though it often returns much less data
                # than that. The returned data string is short lived
                # as we copy it into a StringIO and free it. This avoids
                # fragmentation issues on many platforms.
                try:
                    data = self._sock.recv(left)
                except error, e:
                    if e.args[0] == EINTR:
                        continue
                    raise
                if not data:
                    break
                n = len(data)
                if n == size and not buf_len:
                    # Shortcut. Avoid buffer data copies when:
                    # - We have no data in our buffer.
                    # AND
                    # - Our call to recv returned exactly the
                    #   number of bytes we were asked to read.
                    return data
                if n == left:
                    buf.write(data)
                    del data # explicit free
                    break
                assert n <= left, "recv(%d) returned %d bytes" % (left, n)
                buf.write(data)
                buf_len += n
                del data # explicit free
                #assert buf_len == buf.tell()
            return buf.getvalue()

    def readline(self, size=-1):
        buf = self._rbuf
        buf.seek(0, 2) # seek end
        if buf.tell() > 0:
            # check if we already have it in our buffer
            buf.seek(0)
            bline = buf.readline(size)
            if bline.endswith('\n') or len(bline) == size:
                self._rbuf = StringIO()
                self._rbuf.write(buf.read())
                return bline
            del bline
        if size < 0:
            # Read until \n or EOF, whichever comes first
            if self._rbufsize <= 1:
                # Speed up unbuffered case
                buf.seek(0)
                buffers = [buf.read()]
                self._rbuf = StringIO() # reset _rbuf. we consume it via buf.
                data = None
                recv = self._sock.recv
                while True:
                    try:
                        while data != "\n":
                            data = recv(1)
                            if not data:
                                break
                            buffers.append(data)
                    except error, e:
                        # The try..except to catch EINTR was moved outside the
                        # recv loop to avoid the per byte overhead.
                        if e.args[0] == EINTR:
                            continue
                        raise
                    break
                return "".join(buffers)

            buf.seek(0, 2) # seek end
            self._rbuf = StringIO() # reset _rbuf. we consume it via buf.
            while True:
                try:
                    data = self._sock.recv(self._rbufsize)
                except error, e:
                    if e.args[0] == EINTR:
                        continue
                    raise
                if not data:
                    break
                nl = data.find('\n')
                if nl >= 0:
                    nl += 1
                    buf.write(data[:nl])
                    self._rbuf.write(data[nl:])
                    del data
                    break
                buf.write(data)
            return buf.getvalue()
        else:
            # Read until size bytes or \n or EOF seen, whichever comes first
            buf.seek(0, 2) # seek end
            buf_len = buf.tell()
            if buf_len >= size:
                buf.seek(0)
                rv = buf.read(size)
                self._rbuf = StringIO()
                self._rbuf.write(buf.read())
                return rv
            self._rbuf = StringIO() # reset _rbuf. we consume it via buf.
            while True:
                try:
                    data = self._sock.recv(self._rbufsize)
                except error, e:
                    if e.args[0] == EINTR:
                        continue
                    raise
                if not data:
                    break
                left = size - buf_len
                # did we just receive a newline?
                nl = data.find('\n', 0, left)
                if nl >= 0:
                    nl += 1
                    # save the excess data to _rbuf
                    self._rbuf.write(data[nl:])
                    if buf_len:
                        buf.write(data[:nl])
                        break
                    else:
                        # Shortcut. Avoid data copy through buf when returning
                        # a substring of our first recv().
                        return data[:nl]
                n = len(data)
                if n == size and not buf_len:
                    # Shortcut. Avoid data copy through buf when
                    # returning exactly all of our first recv().
                    return data
                if n >= left:
                    buf.write(data[:left])
                    self._rbuf.write(data[left:])
                    break
                buf.write(data)
                buf_len += n
                #assert buf_len == buf.tell()
            return buf.getvalue()

    def readlines(self, sizehint=0):
        total = 0
        list = []
        while True:
            line = self.readline()
            if not line:
                break
            list.append(line)
            total += len(line)
            if sizehint and total >= sizehint:
                break
        return list

    # Iterator protocols

    def __iter__(self):
        return self

    def next(self):
        line = self.readline()
        if not line:
            raise StopIteration
        return line

_GLOBAL_DEFAULT_TIMEOUT = object()

def create_connection(address, timeout=_GLOBAL_DEFAULT_TIMEOUT,
                      source_address=None):
    """Connect to *address* and return the socket object.

    Convenience function. Connect to *address* (a 2-tuple ``(host,
    port)``) and return the socket object. Passing the optional
    *timeout* parameter will set the timeout on the socket instance
    before attempting to connect. If no *timeout* is supplied, the
    global default timeout setting returned by :func:`getdefaulttimeout`
    is used. If *source_address* is set it must be a tuple of (host, port)
    for the socket to bind as a source address before making the connection.
    An host of '' or port 0 tells the OS to use the default.
    """

    host, port = address
    err = None
    for res in getaddrinfo(host, port, 0, SOCK_STREAM):
        af, socktype, proto, canonname, sa = res
        sock = None
        try:
            sock = socket(af, socktype, proto)
            if timeout is not _GLOBAL_DEFAULT_TIMEOUT:
                sock.settimeout(timeout)
            if source_address:
                sock.bind(source_address)
            sock.connect(sa)
            return sock

        except error as _:
            err = _
            if sock is not None:
                sock.close()

    if err is not None:
        raise err
    else:
        raise error("getaddrinfo returns an empty list")
```
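`create_connection()` at the end of the listing resolves the address with `getaddrinfo()` and tries each result in turn. It applies the timeout before connecting and returns the first socket that connects. The function is still available in Python 3 as `socket.create_connection()`. Below is a short Python 3 usage sketch against a local listener. A numeric host is used so that the example does not depend on name resolution.

```python
import socket

listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
listener.bind(("127.0.0.1", 0))
listener.listen(1)
port = listener.getsockname()[1]

# the timeout is set on the new socket before connect() is attempted
client = socket.create_connection(("127.0.0.1", port), timeout=5)
connection, address = listener.accept()
timeout_set = client.gettimeout()
peer = client.getpeername()
for s in (client, connection, listener):
    s.close()
print(timeout_set, peer)
```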

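SocketServer module: SocketServer uses I/O multiplexing together with "multithreading" and "multiprocessing" to build socket servers that handle requests from many clients concurrently. That is, when the threading or forking variants are used, each time a client connects, the socket server creates a "thread" or "process" on the server that is dedicated to handling all requests from that client.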
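The following is a minimal Python 3 sketch of the thread-per-connection model. In Python 3 the module is named `socketserver`. `EchoHandler` is a hypothetical handler, and the server binds to an OS-chosen port. `serve_forever()` runs in a background thread so the same script can act as the client, and `shutdown()` stops it afterwards.

```python
import socket
import socketserver
import threading

class EchoHandler(socketserver.StreamRequestHandler):
    # one handler instance per connection; ThreadingTCPServer runs it in its own thread
    def handle(self):
        line = self.rfile.readline()
        self.wfile.write(b"echo: " + line)

server = socketserver.ThreadingTCPServer(("127.0.0.1", 0), EchoHandler)
port = server.server_address[1]
serving = threading.Thread(target=server.serve_forever, daemon=True)
serving.start()

replies = []
for text in (b"first\n", b"second\n"):
    with socket.create_connection(("127.0.0.1", port)) as client:
        client.sendall(text)
        with client.makefile("rb") as f:
            replies.append(f.readline())

server.shutdown()        # stop the serve_forever() loop
server.server_close()    # close the listening socket
print(replies)
```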

SocketServer模块源码如下:"""Generic socket server classes.

This module tries to capture the various aspects of defining a server:

For socket-based servers:

- address family:

- AF_INET{,6}: IP (Internet Protocol) sockets (default)

- AF_UNIX: Unix domain sockets

- others, e.g. AF_DECNET are conceivable (see <socket.h>

- socket type:

- SOCK_STREAM (reliable stream, e.g. TCP)

- SOCK_DGRAM (datagrams, e.g. UDP)

For request-based servers (including socket-based):

- client address verification before further looking at the request

(This is actually a hook for any processing that needs to look

at the request before anything else, e.g. logging)

- how to handle multiple requests:

- synchronous (one request is handled at a time)

- forking (each request is handled by a new process)

- threading (each request is handled by a new thread)

The classes in this module favor the server type that is simplest to

write: a synchronous TCP/IP server. This is bad class design, but

save some typing. (There's also the issue that a deep class hierarchy

slows down method lookups.)

There are five classes in an inheritance diagram, four of which represent

synchronous servers of four types:

+------------+

| BaseServer |

+------------+

|

v

+-----------+ +------------------+

| TCPServer |------->| UnixStreamServer |

+-----------+ +------------------+

|

v

+-----------+ +--------------------+

| UDPServer |------->| UnixDatagramServer |

+-----------+ +--------------------+

Note that UnixDatagramServer derives from UDPServer, not from

UnixStreamServer -- the only difference between an IP and a Unix

stream server is the address family, which is simply repeated in both

unix server classes.

Forking and threading versions of each type of server can be created

using the ForkingMixIn and ThreadingMixIn mix-in classes. For

instance, a threading UDP server class is created as follows:

class ThreadingUDPServer(ThreadingMixIn, UDPServer): pass

The Mix-in class must come first, since it overrides a method defined

in UDPServer! Setting the various member variables also changes

the behavior of the underlying server mechanism.

To implement a service, you must derive a class from

BaseRequestHandler and redefine its handle() method. You can then run

various versions of the service by combining one of the server classes

with your request handler class.

The request handler class must be different for datagram or stream

services. This can be hidden by using the request handler

subclasses StreamRequestHandler or DatagramRequestHandler.

Of course, you still have to use your head!

For instance, it makes no sense to use a forking server if the service

contains state in memory that can be modified by requests (since the

modifications in the child process would never reach the initial state

kept in the parent process and passed to each child). In this case,

you can use a threading server, but you will probably have to use

locks to avoid two requests that come in nearly simultaneous to apply

conflicting changes to the server state.

On the other hand, if you are building e.g. an HTTP server, where all

data is stored externally (e.g. in the file system), a synchronous

class will essentially render the service "deaf" while one request is

being handled -- which may be for a very long time if a client is slow

to read all the data it has requested. Here a threading or forking

server is appropriate.

In some cases, it may be appropriate to process part of a request

synchronously, but to finish processing in a forked child depending on

the request data. This can be implemented by using a synchronous

server and doing an explicit fork in the request handler class

handle() method.

Another approach to handling multiple simultaneous requests in an

environment that supports neither threads nor fork (or where these are

too expensive or inappropriate for the service) is to maintain an

explicit table of partially finished requests and to use select() to

decide which request to work on next (or whether to handle a new

incoming request). This is particularly important for stream services

where each client can potentially be connected for a long time (if

threads or subprocesses cannot be used).

Future work:

- Standard classes for Sun RPC (which uses either UDP or TCP)

- Standard mix-in classes to implement various authentication

and encryption schemes

- Standard framework for select-based multiplexing

XXX Open problems:

- What to do with out-of-band data?

BaseServer:

- split generic "request" functionality out into BaseServer class.

Copyright (C) 2000 Luke Kenneth Casson Leighton <lkcl@samba.org>

example: read entries from a SQL database (requires overriding

get_request() to return a table entry from the database).

entry is processed by a RequestHandlerClass.

"""

# Author of the BaseServer patch: Luke Kenneth Casson Leighton

# XXX Warning!

# There is a test suite for this module, but it cannot be run by the

# standard regression test.

# To run it manually, run Lib/test/test_socketserver.py.

__version__ = "0.4"

import socket

import select

import sys

import os

import errno

try:

import threading

except ImportError:

import dummy_threading as threading

__all__ = ["TCPServer","UDPServer","ForkingUDPServer","ForkingTCPServer",

"ThreadingUDPServer","ThreadingTCPServer","BaseRequestHandler",

"StreamRequestHandler","DatagramRequestHandler",

"ThreadingMixIn", "ForkingMixIn"]

if hasattr(socket, "AF_UNIX"):

__all__.extend(["UnixStreamServer","UnixDatagramServer",

"ThreadingUnixStreamServer",

"ThreadingUnixDatagramServer"])

def _eintr_retry(func, *args):

"""restart a system call interrupted by EINTR"""

while True:

try:

return func(*args)

except (OSError, select.error) as e:

if e.args[0] != errno.EINTR:

raise

class BaseServer:

"""Base class for server classes.

Methods for the caller:

- __init__(server_address, RequestHandlerClass)

- serve_forever(poll_interval=0.5)

- shutdown()

- handle_request() # if you do not use serve_forever()

- fileno() -> int # for select()

Methods that may be overridden:

- server_bind()

- server_activate()

- get_request() -> request, client_address

- handle_timeout()

- verify_request(request, client_address)

- server_close()

- process_request(request, client_address)

- shutdown_request(request)

- close_request(request)

- handle_error()

Methods for derived classes:

- finish_request(request, client_address)

Class variables that may be overridden by derived classes or

instances:

- timeout

    - address_family
    - socket_type
    - allow_reuse_address

    Instance variables:

    - RequestHandlerClass
    - socket

    """

    timeout = None

    def __init__(self, server_address, RequestHandlerClass):
        """Constructor. May be extended, do not override."""
        self.server_address = server_address
        self.RequestHandlerClass = RequestHandlerClass
        self.__is_shut_down = threading.Event()
        self.__shutdown_request = False

    def server_activate(self):
        """Called by constructor to activate the server.

        May be overridden.

        """
        pass

    def serve_forever(self, poll_interval=0.5):
        """Handle one request at a time until shutdown.

        Polls for shutdown every poll_interval seconds. Ignores
        self.timeout. If you need to do periodic tasks, do them in
        another thread.
        """
        self.__is_shut_down.clear()
        try:
            while not self.__shutdown_request:
                # XXX: Consider using another file descriptor or
                # connecting to the socket to wake this up instead of
                # polling. Polling reduces our responsiveness to a
                # shutdown request and wastes cpu at all other times.
                r, w, e = _eintr_retry(select.select, [self], [], [],
                                       poll_interval)
                if self in r:
                    self._handle_request_noblock()
        finally:
            self.__shutdown_request = False
            self.__is_shut_down.set()

    def shutdown(self):
        """Stops the serve_forever loop.

        Blocks until the loop has finished. This must be called while
        serve_forever() is running in another thread, or it will
        deadlock.
        """
        self.__shutdown_request = True
        self.__is_shut_down.wait()

    # The distinction between handling, getting, processing and
    # finishing a request is fairly arbitrary. Remember:
    #
    # - handle_request() is the top-level call. It calls
    #   select, get_request(), verify_request() and process_request()
    # - get_request() is different for stream or datagram sockets
    # - process_request() is the place that may fork a new process
    #   or create a new thread to finish the request
    # - finish_request() instantiates the request handler class;
    #   this constructor will handle the request all by itself

    def handle_request(self):
        """Handle one request, possibly blocking.

        Respects self.timeout.
        """
        # Support people who used socket.settimeout() to escape
        # handle_request before self.timeout was available.
        timeout = self.socket.gettimeout()
        if timeout is None:
            timeout = self.timeout
        elif self.timeout is not None:
            timeout = min(timeout, self.timeout)
        fd_sets = _eintr_retry(select.select, [self], [], [], timeout)
        if not fd_sets[0]:
            self.handle_timeout()
            return
        self._handle_request_noblock()

    def _handle_request_noblock(self):
        """Handle one request, without blocking.

        I assume that select.select has returned that the socket is
        readable before this function was called, so there should be
        no risk of blocking in get_request().
        """
        try:
            request, client_address = self.get_request()
        except socket.error:
            return
        if self.verify_request(request, client_address):
            try:
                self.process_request(request, client_address)
            except:
                self.handle_error(request, client_address)
                self.shutdown_request(request)

    def handle_timeout(self):
        """Called if no new request arrives within self.timeout.

        Overridden by ForkingMixIn.
        """
        pass

    def verify_request(self, request, client_address):
        """Verify the request. May be overridden.

        Return True if we should proceed with this request.

        """
        return True

    def process_request(self, request, client_address):
        """Call finish_request.

        Overridden by ForkingMixIn and ThreadingMixIn.

        """
        self.finish_request(request, client_address)
        self.shutdown_request(request)

    def server_close(self):
        """Called to clean-up the server.

        May be overridden.

        """
        pass

    def finish_request(self, request, client_address):
        """Finish one request by instantiating RequestHandlerClass."""
        self.RequestHandlerClass(request, client_address, self)

    def shutdown_request(self, request):
        """Called to shutdown and close an individual request."""
        self.close_request(request)

    def close_request(self, request):
        """Called to clean up an individual request."""
        pass

    def handle_error(self, request, client_address):
        """Handle an error gracefully. May be overridden.

        The default is to print a traceback and continue.

        """
        print '-'*40
        print 'Exception happened during processing of request from',
        print client_address
        import traceback
        traceback.print_exc() # XXX But this goes to stderr!
        print '-'*40


class TCPServer(BaseServer):

    """Base class for various socket-based server classes.

    Defaults to synchronous IP stream (i.e., TCP).

    Methods for the caller:

    - __init__(server_address, RequestHandlerClass, bind_and_activate=True)
    - serve_forever(poll_interval=0.5)
    - shutdown()
    - handle_request()  # if you don't use serve_forever()
    - fileno() -> int   # for select()

    Methods that may be overridden:

    - server_bind()
    - server_activate()
    - get_request() -> request, client_address
    - handle_timeout()
    - verify_request(request, client_address)
    - process_request(request, client_address)
    - shutdown_request(request)
    - close_request(request)
    - handle_error()

    Methods for derived classes:

    - finish_request(request, client_address)

    Class variables that may be overridden by derived classes or
    instances:

    - timeout
    - address_family
    - socket_type
    - request_queue_size (only for stream sockets)
    - allow_reuse_address

    Instance variables:

    - server_address
    - RequestHandlerClass
    - socket

    """

    address_family = socket.AF_INET

    socket_type = socket.SOCK_STREAM

    request_queue_size = 5

    allow_reuse_address = False

    def __init__(self, server_address, RequestHandlerClass, bind_and_activate=True):
        """Constructor. May be extended, do not override."""
        BaseServer.__init__(self, server_address, RequestHandlerClass)
        self.socket = socket.socket(self.address_family,
                                    self.socket_type)
        if bind_and_activate:
            try:
                self.server_bind()
                self.server_activate()
            except:
                self.server_close()
                raise

    def server_bind(self):
        """Called by constructor to bind the socket.

        May be overridden.

        """
        if self.allow_reuse_address:
            self.socket.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
        self.socket.bind(self.server_address)
        self.server_address = self.socket.getsockname()

    def server_activate(self):
        """Called by constructor to activate the server.

        May be overridden.

        """
        self.socket.listen(self.request_queue_size)

    def server_close(self):
        """Called to clean-up the server.

        May be overridden.

        """
        self.socket.close()

    def fileno(self):
        """Return socket file number.

        Interface required by select().

        """
        return self.socket.fileno()

    def get_request(self):
        """Get the request and client address from the socket.

        May be overridden.

        """
        return self.socket.accept()

    def shutdown_request(self, request):
        """Called to shutdown and close an individual request."""
        try:
            #explicitly shutdown. socket.close() merely releases
            #the socket and waits for GC to perform the actual close.
            request.shutdown(socket.SHUT_WR)
        except socket.error:
            pass #some platforms may raise ENOTCONN here
        self.close_request(request)

    def close_request(self, request):
        """Called to clean up an individual request."""
        request.close()


class UDPServer(TCPServer):

    """UDP server class."""

    allow_reuse_address = False

    socket_type = socket.SOCK_DGRAM

    max_packet_size = 8192

    def get_request(self):
        data, client_addr = self.socket.recvfrom(self.max_packet_size)
        return (data, self.socket), client_addr

    def server_activate(self):
        # No need to call listen() for UDP.
        pass

    def shutdown_request(self, request):
        # No need to shutdown anything.
        self.close_request(request)

    def close_request(self, request):
        # No need to close anything.
        pass


class ForkingMixIn:

    """Mix-in class to handle each request in a new process."""

    timeout = 300
    active_children = None
    max_children = 40

    def collect_children(self):
        """Internal routine to wait for children that have exited."""
        if self.active_children is None:
            return

        # If we're above the max number of children, wait and reap them until
        # we go back below threshold. Note that we use waitpid(-1) below to be
        # able to collect children in size(<defunct children>) syscalls instead
        # of size(<children>): the downside is that this might reap children
        # which we didn't spawn, which is why we only resort to this when we're
        # above max_children.
        while len(self.active_children) >= self.max_children:
            try:
                pid, _ = os.waitpid(-1, 0)
                self.active_children.discard(pid)
            except OSError as e:
                if e.errno == errno.ECHILD:
                    # we don't have any children, we're done
                    self.active_children.clear()
                elif e.errno != errno.EINTR:
                    break

        # Now reap all defunct children.
        for pid in self.active_children.copy():
            try:
                pid, _ = os.waitpid(pid, os.WNOHANG)
                # if the child hasn't exited yet, pid will be 0 and ignored by
                # discard() below
                self.active_children.discard(pid)
            except OSError as e:
                if e.errno == errno.ECHILD:
                    # someone else reaped it
                    self.active_children.discard(pid)

    def handle_timeout(self):
        """Wait for zombies after self.timeout seconds of inactivity.

        May be extended, do not override.
        """
        self.collect_children()

    def process_request(self, request, client_address):
        """Fork a new subprocess to process the request."""
        self.collect_children()
        pid = os.fork()
        if pid:
            # Parent process
            if self.active_children is None:
                self.active_children = set()
            self.active_children.add(pid)
            self.close_request(request) #close handle in parent process
            return
        else:
            # Child process.
            # This must never return, hence os._exit()!
            try:
                self.finish_request(request, client_address)
                self.shutdown_request(request)
                os._exit(0)
            except:
                try:
                    self.handle_error(request, client_address)
                    self.shutdown_request(request)
                finally:
                    os._exit(1)


class ThreadingMixIn:
    """Mix-in class to handle each request in a new thread."""

    # Decides how threads will act upon termination of the
    # main process
    daemon_threads = False

    def process_request_thread(self, request, client_address):
        """Same as in BaseServer but as a thread.

        In addition, exception handling is done here.

        """
        try:
            self.finish_request(request, client_address)
            self.shutdown_request(request)
        except:
            self.handle_error(request, client_address)
            self.shutdown_request(request)

    def process_request(self, request, client_address):
        """Start a new thread to process the request."""
        t = threading.Thread(target = self.process_request_thread,
                             args = (request, client_address))
        t.daemon = self.daemon_threads
        t.start()


class ForkingUDPServer(ForkingMixIn, UDPServer): pass
class ForkingTCPServer(ForkingMixIn, TCPServer): pass

class ThreadingUDPServer(ThreadingMixIn, UDPServer): pass
class ThreadingTCPServer(ThreadingMixIn, TCPServer): pass

if hasattr(socket, 'AF_UNIX'):

    class UnixStreamServer(TCPServer):
        address_family = socket.AF_UNIX

    class UnixDatagramServer(UDPServer):
        address_family = socket.AF_UNIX

    class ThreadingUnixStreamServer(ThreadingMixIn, UnixStreamServer): pass

    class ThreadingUnixDatagramServer(ThreadingMixIn, UnixDatagramServer): pass


class BaseRequestHandler:

    """Base class for request handler classes.

    This class is instantiated for each request to be handled. The
    constructor sets the instance variables request, client_address
    and server, and then calls the handle() method. To implement a
    specific service, all you need to do is to derive a class which
    defines a handle() method.

    The handle() method can find the request as self.request, the
    client address as self.client_address, and the server (in case it
    needs access to per-server information) as self.server. Since a
    separate instance is created for each request, the handle() method
    can define arbitrary other instance variariables.

    """

    def __init__(self, request, client_address, server):
        self.request = request
        self.client_address = client_address
        self.server = server
        self.setup()
        try:
            self.handle()
        finally:
            self.finish()

    def setup(self):
        pass

    def handle(self):
        pass

    def finish(self):
        pass


# The following two classes make it possible to use the same service
# class for stream or datagram servers.
# Each class sets up these instance variables:
# - rfile: a file object from which receives the request is read
# - wfile: a file object to which the reply is written
# When the handle() method returns, wfile is flushed properly


class StreamRequestHandler(BaseRequestHandler):

    """Define self.rfile and self.wfile for stream sockets."""

    # Default buffer sizes for rfile, wfile.
    # We default rfile to buffered because otherwise it could be
    # really slow for large data (a getc() call per byte); we make
    # wfile unbuffered because (a) often after a write() we want to
    # read and we need to flush the line; (b) big writes to unbuffered
    # files are typically optimized by stdio even when big reads
    # aren't.
    rbufsize = -1
    wbufsize = 0

    # A timeout to apply to the request socket, if not None.
    timeout = None

    # Disable nagle algorithm for this socket, if True.
    # Use only when wbufsize != 0, to avoid small packets.
    disable_nagle_algorithm = False

    def setup(self):
        self.connection = self.request
        if self.timeout is not None:
            self.connection.settimeout(self.timeout)
        if self.disable_nagle_algorithm:
            self.connection.setsockopt(socket.IPPROTO_TCP,
                                       socket.TCP_NODELAY, True)
        self.rfile = self.connection.makefile('rb', self.rbufsize)
        self.wfile = self.connection.makefile('wb', self.wbufsize)

    def finish(self):
        if not self.wfile.closed:
            try:
                self.wfile.flush()
            except socket.error:
                # An final socket error may have occurred here, such as
                # the local error ECONNABORTED.
                pass
        self.wfile.close()
        self.rfile.close()


class DatagramRequestHandler(BaseRequestHandler):

    # XXX Regrettably, I cannot get this working on Linux;
    # s.recvfrom() doesn't return a meaningful client address.

    """Define self.rfile and self.wfile for datagram sockets."""

    def setup(self):
        try:
            from cStringIO import StringIO
        except ImportError:
            from StringIO import StringIO
        self.packet, self.socket = self.request
        self.rfile = StringIO(self.packet)
        self.wfile = StringIO()

    def finish(self):
        self.socket.sendto(self.wfile.getvalue(), self.client_address)
|

ThreadingTCPServer

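A socket server built on ThreadingTCPServer creates a "thread" for each client. That thread handles all interaction with its client.

1. ThreadingTCPServer basics

To use ThreadingTCPServer:

① Create a class that inherits from SocketServer.BaseRequestHandler.

② Define a method named handle in that class.

③ Start ThreadingTCPServer.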

Server implemented with SocketServer

```python
#!/usr/bin/env python
# -*- coding:utf-8 -*-
import SocketServer

class MyServer(SocketServer.BaseRequestHandler):

    def handle(self):
        # print self.request,self.client_address,self.server
        conn = self.request
        conn.sendall('Welcome to 10086. Enter 1xxx, or 0 for a human operator.')
        Flag = True
        while Flag:
            data = conn.recv(1024)
            # recv() returns an empty string once the client has closed the connection
            if not data or data == 'exit':
                Flag = False
            elif data == '0':
                conn.sendall('Your call may be recorded. Blah blah blah...')
            else:
                conn.sendall('Please try again.')

if __name__ == '__main__':
    server = SocketServer.ThreadingTCPServer(('127.0.0.1',8009),MyServer)
    server.serve_forever()
```

Client

```python
#!/usr/bin/env python
# -*- coding:utf-8 -*-
import socket

ip_port = ('127.0.0.1',8009)
sk = socket.socket()
sk.connect(ip_port)
sk.settimeout(5)

while True:
    data = sk.recv(1024)
    if not data:  # the server has closed the connection
        break
    print 'receive:',data
    inp = raw_input('please input:')
    sk.sendall(inp)
    if inp == 'exit':
        break

sk.close()
```

2. ThreadingTCPServer source code analysis

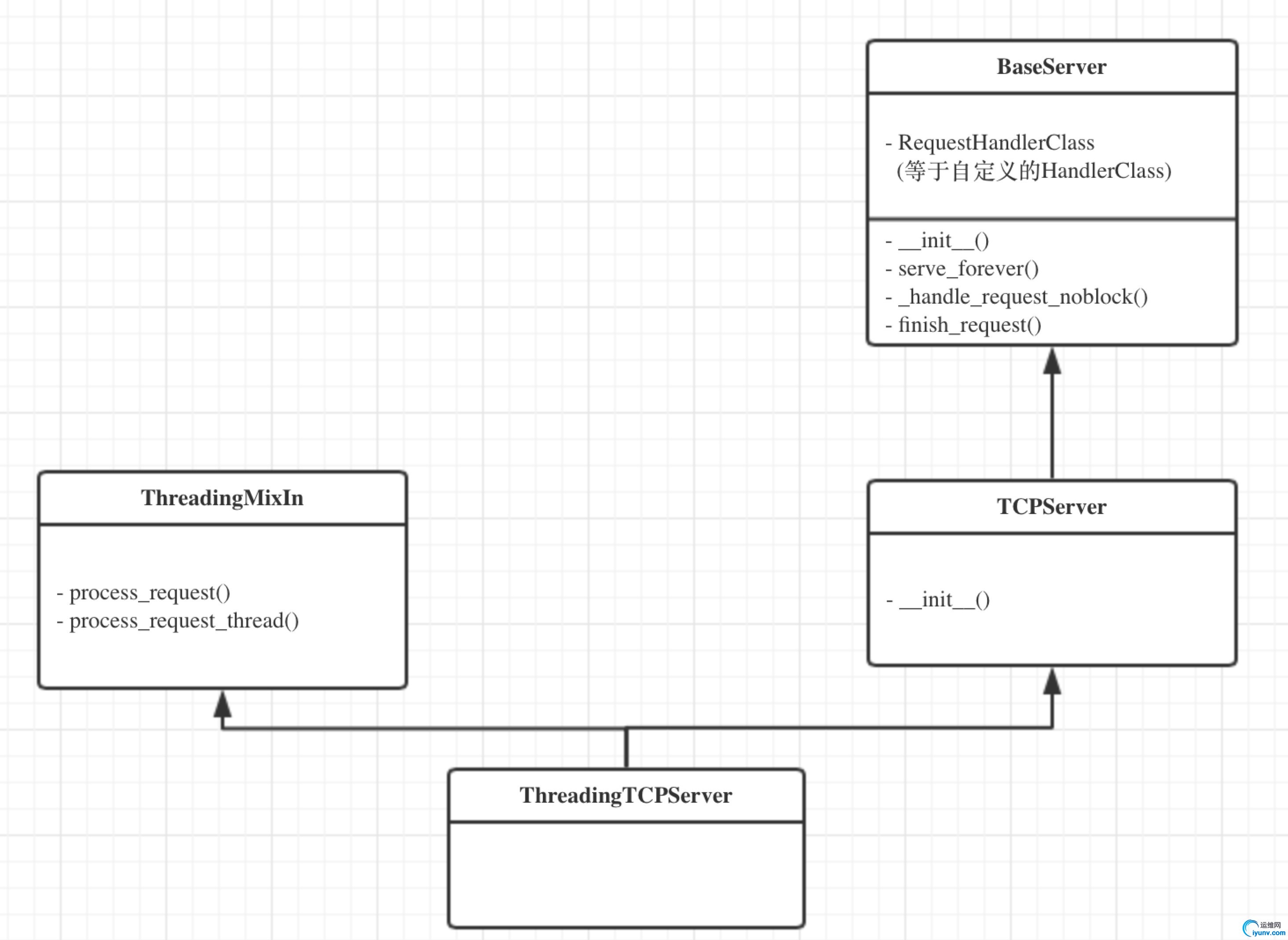

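The class diagram of ThreadingTCPServer is shown above.

The internal call flow is:

- Start the server program.

- TCPServer.__init__ runs. It creates the server socket object and binds it to the IP address and port.

- BaseServer.__init__ runs, called at the start of TCPServer.__init__. It assigns the custom class MyRequestHandler, derived from SocketServer.BaseRequestHandler, to self.RequestHandlerClass.

- BaseServer.serve_forever runs. Its while loop keeps checking, via select, whether a client request has arrived.

- A client connection reaches the server.

- BaseServer._handle_request_noblock accepts the connection (get_request). If verify_request approves it, the method calls process_request.

- ThreadingMixIn.process_request runs and creates a "thread" to handle the request.

- ThreadingMixIn.process_request_thread runs in that new thread.

- BaseServer.finish_request runs and calls self.RequestHandlerClass(). This invokes the constructor that the custom MyRequestHandler inherits from BaseRequestHandler, and that constructor in turn calls MyRequestHandler's handle method.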
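The call chain listed above can be observed directly by overriding two of the hooks and recording when they fire. This is a minimal sketch that assumes Python 3, where the module is named `socketserver`. `TracingServer`, `TracingHandler` and `calls` are hypothetical names used only for illustration. Port 0 lets the OS pick a free port.

```python
import socket
import socketserver
import threading

calls = []  # records the order in which the hooks fire

class TracingHandler(socketserver.BaseRequestHandler):
    def handle(self):
        calls.append("handle")
        data = self.request.recv(1024)
        self.request.sendall(data.upper())

class TracingServer(socketserver.ThreadingTCPServer):
    def process_request(self, request, client_address):
        # ThreadingMixIn hook: still running in the serve_forever() thread
        calls.append("process_request")
        super().process_request(request, client_address)

    def finish_request(self, request, client_address):
        # BaseServer hook: runs inside the per-request thread
        calls.append("finish_request")
        super().finish_request(request, client_address)

def demo():
    server = TracingServer(("127.0.0.1", 0), TracingHandler)
    t = threading.Thread(target=server.serve_forever, daemon=True)
    t.start()
    with socket.create_connection(server.server_address, timeout=5) as c:
        c.sendall(b"hello")
        reply = c.recv(1024)
    server.shutdown()      # called from another thread, as the docstring requires
    server.server_close()
    return reply

print(demo(), calls)
```

Each hook appends to `calls` before delegating to the base class with `super()`, so the recorded order matches the sequence in the list.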

ThreadingTCPServer-related source code:

BaseServer

```python
class BaseServer:

    """Base class for server classes.

    Methods for the caller:

    - __init__(server_address, RequestHandlerClass)
    - serve_forever(poll_interval=0.5)
    - shutdown()
    - handle_request()  # if you do not use serve_forever()
    - fileno() -> int   # for select()

    Methods that may be overridden:

    - server_bind()
    - server_activate()
    - get_request() -> request, client_address
    - handle_timeout()
    - verify_request(request, client_address)
    - server_close()
    - process_request(request, client_address)
    - shutdown_request(request)
    - close_request(request)
    - handle_error()

    Methods for derived classes:

    - finish_request(request, client_address)

    Class variables that may be overridden by derived classes or
    instances:

    - timeout
    - address_family
    - socket_type
    - allow_reuse_address

    Instance variables:

    - RequestHandlerClass
    - socket

    """

    timeout = None

    def __init__(self, server_address, RequestHandlerClass):
        """Constructor. May be extended, do not override."""
        self.server_address = server_address
        self.RequestHandlerClass = RequestHandlerClass
        self.__is_shut_down = threading.Event()
        self.__shutdown_request = False

    def server_activate(self):
        """Called by constructor to activate the server.

        May be overridden.

        """
        pass

    def serve_forever(self, poll_interval=0.5):
        """Handle one request at a time until shutdown.

        Polls for shutdown every poll_interval seconds. Ignores
        self.timeout. If you need to do periodic tasks, do them in
        another thread.
        """
        self.__is_shut_down.clear()
        try:
            while not self.__shutdown_request:
                # XXX: Consider using another file descriptor or
                # connecting to the socket to wake this up instead of
                # polling. Polling reduces our responsiveness to a
                # shutdown request and wastes cpu at all other times.
                r, w, e = _eintr_retry(select.select, [self], [], [],
                                       poll_interval)
                if self in r:
                    self._handle_request_noblock()
        finally:
            self.__shutdown_request = False
            self.__is_shut_down.set()

    def shutdown(self):
        """Stops the serve_forever loop.

        Blocks until the loop has finished. This must be called while
        serve_forever() is running in another thread, or it will
        deadlock.
        """
        self.__shutdown_request = True
        self.__is_shut_down.wait()

    # The distinction between handling, getting, processing and
    # finishing a request is fairly arbitrary. Remember:
    #
    # - handle_request() is the top-level call. It calls
    #   select, get_request(), verify_request() and process_request()
    # - get_request() is different for stream or datagram sockets
    # - process_request() is the place that may fork a new process
    #   or create a new thread to finish the request
    # - finish_request() instantiates the request handler class;
    #   this constructor will handle the request all by itself

    def handle_request(self):
        """Handle one request, possibly blocking.

        Respects self.timeout.
        """
        # Support people who used socket.settimeout() to escape
        # handle_request before self.timeout was available.
        timeout = self.socket.gettimeout()
        if timeout is None:
            timeout = self.timeout
        elif self.timeout is not None:
            timeout = min(timeout, self.timeout)
        fd_sets = _eintr_retry(select.select, [self], [], [], timeout)
        if not fd_sets[0]:
            self.handle_timeout()
            return
        self._handle_request_noblock()

    def _handle_request_noblock(self):
        """Handle one request, without blocking.

        I assume that select.select has returned that the socket is
        readable before this function was called, so there should be
        no risk of blocking in get_request().
        """
        try:
            request, client_address = self.get_request()
        except socket.error:
            return
        if self.verify_request(request, client_address):
            try:
                self.process_request(request, client_address)
            except:
                self.handle_error(request, client_address)
                self.shutdown_request(request)

    def handle_timeout(self):
        """Called if no new request arrives within self.timeout.

        Overridden by ForkingMixIn.
        """
        pass

    def verify_request(self, request, client_address):
        """Verify the request. May be overridden.

        Return True if we should proceed with this request.

        """
        return True

    def process_request(self, request, client_address):
        """Call finish_request.

        Overridden by ForkingMixIn and ThreadingMixIn.

        """
        self.finish_request(request, client_address)
        self.shutdown_request(request)

    def server_close(self):
        """Called to clean-up the server.

        May be overridden.

        """
        pass

    def finish_request(self, request, client_address):
        """Finish one request by instantiating RequestHandlerClass."""
        self.RequestHandlerClass(request, client_address, self)

    def shutdown_request(self, request):
        """Called to shutdown and close an individual request."""
        self.close_request(request)

    def close_request(self, request):
        """Called to clean up an individual request."""
        pass

    def handle_error(self, request, client_address):
        """Handle an error gracefully. May be overridden.

        The default is to print a traceback and continue.

        """
        print '-'*40
        print 'Exception happened during processing of request from',
        print client_address
        import traceback
        traceback.print_exc() # XXX But this goes to stderr!
        print '-'*40
```
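`handle_request()` above differs from `serve_forever()` in one respect: it respects `self.timeout`, and it calls `handle_timeout()` when no client shows up in time. Here is a minimal Python 3 sketch of that path. `OneShotServer` and its `timed_out` flag are hypothetical names, and nobody connects, so the timeout branch is taken.

```python
import socketserver

class OneShotServer(socketserver.TCPServer):
    timeout = 0.1        # seconds handle_request() waits for a connection
    timed_out = False

    def handle_timeout(self):
        # BaseServer calls this when select() reports no pending connection
        self.timed_out = True

def wait_once():
    with OneShotServer(("127.0.0.1", 0), socketserver.BaseRequestHandler) as server:
        server.handle_request()  # nobody connects, so this returns after about 0.1 s
        return server.timed_out

print(wait_once())
```

This also explains why ForkingMixIn overrides `handle_timeout()`: an idle server gets a periodic chance to reap finished child processes.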

TCPServer

```python
class TCPServer(BaseServer):

    """Base class for various socket-based server classes.

    Defaults to synchronous IP stream (i.e., TCP).

    Methods for the caller:

    - __init__(server_address, RequestHandlerClass, bind_and_activate=True)
    - serve_forever(poll_interval=0.5)
    - shutdown()
    - handle_request()  # if you don't use serve_forever()
    - fileno() -> int   # for select()

    Methods that may be overridden:

    - server_bind()
    - server_activate()
    - get_request() -> request, client_address
    - handle_timeout()
    - verify_request(request, client_address)
    - process_request(request, client_address)
    - shutdown_request(request)
    - close_request(request)
    - handle_error()

    Methods for derived classes:

    - finish_request(request, client_address)

    Class variables that may be overridden by derived classes or
    instances:

    - timeout
    - address_family
    - socket_type
    - request_queue_size (only for stream sockets)
    - allow_reuse_address

    Instance variables:

    - server_address
    - RequestHandlerClass
    - socket

    """

    address_family = socket.AF_INET

    socket_type = socket.SOCK_STREAM

    request_queue_size = 5

    allow_reuse_address = False

    def __init__(self, server_address, RequestHandlerClass, bind_and_activate=True):
        """Constructor. May be extended, do not override."""
        BaseServer.__init__(self, server_address, RequestHandlerClass)
        self.socket = socket.socket(self.address_family,
                                    self.socket_type)
        if bind_and_activate:
            try:
                self.server_bind()
                self.server_activate()
            except:
                self.server_close()
                raise

    def server_bind(self):
        """Called by constructor to bind the socket.

        May be overridden.

        """
        if self.allow_reuse_address:
            self.socket.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
        self.socket.bind(self.server_address)
        self.server_address = self.socket.getsockname()

    def server_activate(self):
        """Called by constructor to activate the server.

        May be overridden.

        """
        self.socket.listen(self.request_queue_size)

    def server_close(self):
        """Called to clean-up the server.

        May be overridden.

        """
        self.socket.close()

    def fileno(self):
        """Return socket file number.

        Interface required by select().

        """
        return self.socket.fileno()

    def get_request(self):
        """Get the request and client address from the socket.

        May be overridden.

        """
        return self.socket.accept()

    def shutdown_request(self, request):
        """Called to shutdown and close an individual request."""
        try:
            #explicitly shutdown. socket.close() merely releases
            #the socket and waits for GC to perform the actual close.
            request.shutdown(socket.SHUT_WR)
        except socket.error:
            pass #some platforms may raise ENOTCONN here
        self.close_request(request)

    def close_request(self, request):
        """Called to clean up an individual request."""
        request.close()
```
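`server_bind()` above only sets `SO_REUSEADDR` when the class attribute `allow_reuse_address` is true. Without that option, a restarted server can fail with "Address already in use" while old connections linger in TIME_WAIT. A minimal Python 3 sketch of flipping the attribute in a subclass follows. `ReusableTCPServer` and `reuse_flag` are hypothetical names.

```python
import socket
import socketserver

class ReusableTCPServer(socketserver.ThreadingTCPServer):
    # Read by server_bind() just before bind(): sets SO_REUSEADDR on the socket
    allow_reuse_address = True

def reuse_flag(server_cls):
    """Return the SO_REUSEADDR value on a freshly bound server socket."""
    with server_cls(("127.0.0.1", 0), socketserver.BaseRequestHandler) as server:
        return server.socket.getsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR)

print(reuse_flag(ReusableTCPServer), reuse_flag(socketserver.TCPServer))
```

Overriding a class attribute instead of `server_bind()` itself is the extension point the docstring advertises ("Class variables that may be overridden").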

ThreadingMixIn

```python
class ThreadingMixIn:
    """Mix-in class to handle each request in a new thread."""

    # Decides how threads will act upon termination of the
    # main process
    daemon_threads = False

    def process_request_thread(self, request, client_address):
        """Same as in BaseServer but as a thread.

        In addition, exception handling is done here.

        """
        try:
            self.finish_request(request, client_address)
            self.shutdown_request(request)
        except:
            self.handle_error(request, client_address)
            self.shutdown_request(request)

    def process_request(self, request, client_address):
        """Start a new thread to process the request."""
        t = threading.Thread(target = self.process_request_thread,
                             args = (request, client_address))
        t.daemon = self.daemon_threads
        t.start()
```

ThreadingTCPServer

```python
class ThreadingTCPServer(ThreadingMixIn, TCPServer): pass
```
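ThreadingTCPServer has no body of its own. It works because ThreadingMixIn comes first in the base-class list, so its `process_request()` is found before BaseServer's in the method resolution order (MRO). Below is a small Python 3 sketch that checks which class supplies the method. `owner_of` and `WrongOrder` are hypothetical names, and `WrongOrder` deliberately lists the bases the other way round.

```python
import socketserver

def owner_of(cls, name):
    """Return the first class in cls.__mro__ that defines `name` itself."""
    return next(c for c in cls.__mro__ if name in vars(c))

# Bases in the wrong order: BaseServer.process_request now wins, so requests
# would be handled serially even though ThreadingMixIn is present.
class WrongOrder(socketserver.TCPServer, socketserver.ThreadingMixIn):
    pass

mro_names = [c.__name__ for c in socketserver.ThreadingTCPServer.__mro__]
print(mro_names)
print(owner_of(socketserver.ThreadingTCPServer, "process_request").__name__)
print(owner_of(WrongOrder, "process_request").__name__)
```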

RequestHandler-related source code:

SocketServer.BaseRequestHandler

```python
class BaseRequestHandler:

    """Base class for request handler classes.

    This class is instantiated for each request to be handled. The
    constructor sets the instance variables request, client_address
    and server, and then calls the handle() method. To implement a
    specific service, all you need to do is to derive a class which
    defines a handle() method.

    The handle() method can find the request as self.request, the
    client address as self.client_address, and the server (in case it
    needs access to per-server information) as self.server. Since a
    separate instance is created for each request, the handle() method
    can define arbitrary other instance variariables.

    """

    def __init__(self, request, client_address, server):
        self.request = request
        self.client_address = client_address
        self.server = server
        self.setup()
        try:
            self.handle()
        finally:
            self.finish()

    def setup(self):
        pass

    def handle(self):
        pass

    def finish(self):
        pass
```
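The constructor above runs `setup()`, then `handle()`, and calls `finish()` in a `finally` block, so `finish()` runs even if `handle()` raises. Because the base class never touches the socket itself, the lifecycle can be checked without any network at all. Below is a Python 3 sketch with a hypothetical `RecordingHandler`. The string passed as `request` is a stand-in for a real socket.

```python
import socketserver

events = []

class RecordingHandler(socketserver.BaseRequestHandler):
    def setup(self):
        events.append("setup")

    def handle(self):
        events.append("handle")
        if self.request == "fail":   # stand-in request value used to simulate an error
            raise RuntimeError("handler failed")

    def finish(self):
        events.append("finish")

RecordingHandler("ok", ("127.0.0.1", 0), None)
ok_events = list(events)

events.clear()
try:
    RecordingHandler("fail", ("127.0.0.1", 0), None)
except RuntimeError:
    pass  # the exception still propagates, but only after finish() ran
fail_events = list(events)
print(ok_events, fail_events)
```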

Example:

Server

```python
#!/usr/bin/env python
# -*- coding:utf-8 -*-
__author__ = 'ryan'
import SocketServer
import os

class MyServer(SocketServer.BaseRequestHandler):

    def handle(self):
        #print self.request,self.client_address,self.server
        print "got connection from", self.client_address
        while True:
            data = self.request.recv(1024)
            if not data:  # empty string: the client closed the connection
                break
            print "Recv from cmd:%s" %(data)
            # runs the received text as a shell command: only for a trusted local demo
            cmd_res = os.popen(data).read()
            print 'cmd_res:',len(cmd_res)
            self.request.sendall(str(len(cmd_res)))
            # Receive once more (the client's acknowledgement) to separate the size
            # message from the command output, so the client does not get both
            # merged into a single recv() ("sticky packets")
            self.request.recv(1024)
            self.request.sendall(cmd_res)

if __name__ == '__main__':
    server = SocketServer.ThreadingTCPServer(('127.0.0.1',8009),MyServer)
    server.serve_forever()
```

Client

```python
#!/usr/bin/env python
# -*- coding:utf-8 -*-
__author__ = 'ryan'
import socket

ip_port = ('127.0.0.1',8009)
sk = socket.socket()
sk.connect(ip_port)
sk.settimeout(5)

while True:
    inp = raw_input('please input:')
    if inp == 'exit':
        break
    if not inp:  # sending an empty string would leave both sides waiting
        continue
    sk.sendall(inp)
    res_size=sk.recv(1024)
    print 'going to recv data size:',res_size,type(res_size)
    total_size = int(res_size)
    sk.send(inp)#----> send once more as an acknowledgement to avoid sticky packets
    received_size=0
    print '----data----'
    # loop on the byte count, so an empty command output (size 0) does not block
    while received_size < total_size:
        data=sk.recv(1024)
        if not data:  # the server closed the connection
            break
        received_size += len(data)
        print data
    print '----not data---'

sk.close()
```
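The extra acknowledgement round trip above works, but it costs one network round trip per command. A common alternative (not the author's method) is length-prefix framing. Every message starts with a fixed-size header that holds the payload length, and the receiver reads exactly that many bytes. This is a Python 3 sketch in which `send_msg`, `recv_msg` and `recv_exactly` are hypothetical helper names. `socket.socketpair()` stands in for a real client/server connection.

```python
import socket
import struct

HEADER = struct.Struct("!I")  # 4-byte unsigned length, network byte order

def send_msg(sock, payload):
    """Send one message: a 4-byte length header followed by the payload."""
    sock.sendall(HEADER.pack(len(payload)) + payload)

def recv_exactly(sock, n):
    """Read exactly n bytes, looping because recv() may return fewer."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("peer closed the connection mid-message")
        buf += chunk
    return bytes(buf)

def recv_msg(sock):
    """Receive one message framed by send_msg()."""
    (length,) = HEADER.unpack(recv_exactly(sock, HEADER.size))
    return recv_exactly(sock, length)

a, b = socket.socketpair()
send_msg(a, b"first")
send_msg(a, b"second")   # sent back to back: the framing keeps them apart
print(recv_msg(b), recv_msg(b))
```

Because the receiver always knows how many bytes belong to the current message, back-to-back sends can no longer run together. An empty command output is also handled, since it is sent as a header whose length is 0.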

Simplified version of the source:

```python
import socket
import threading
import select

def process(request, client_address):
    print request,client_address
    conn = request
    conn.sendall('Welcome to 10086. Enter 1xxx, or 0 for a human operator.')
    flag = True
    while flag:
        data = conn.recv(1024)
        if not data or data == 'exit':  # empty string: the client disconnected
            flag = False
        elif data == '0':
            conn.sendall('Your call may be recorded. Blah blah blah...')
        else:
            conn.sendall('Please try again.')
    conn.close()

sk = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
sk.bind(('127.0.0.1',8002))
sk.listen(5)

while True:
    # wait up to 1 second for the listening socket to become readable (a new connection)
    r, w, e = select.select([sk,],[],[],1)
    print 'looping'
    if sk in r:
        print 'get request'
        request, client_address = sk.accept()
        # one thread per client, as ThreadingMixIn.process_request does
        t = threading.Thread(target=process, args=(request, client_address))
        t.daemon = False
        t.start()

sk.close()
```

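As the simplified code shows, SocketServer's ThreadingTCPServer can handle requests concurrently thanks to two things: select and threading. select watches the listening socket for new connections, and the server creates one thread per client to handle that client's requests. As a result, n clients can stay connected at the same time (long-lived connections).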
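The claim that several long-lived connections are served at once can be checked with two clients. The first one stays connected while the second one is served. This is a Python 3 sketch with hypothetical names (`EchoUntilClosed`, `two_clients_at_once`). With plain `socketserver.TCPServer`, the second `recv()` should instead time out, because the single serving loop is stuck inside the first client's `handle()`.

```python
import socket
import socketserver
import threading

class EchoUntilClosed(socketserver.BaseRequestHandler):
    """Keeps the connection open and echoes until the client closes it."""
    def handle(self):
        while True:
            data = self.request.recv(1024)
            if not data:
                break
            self.request.sendall(data)

def two_clients_at_once(server_cls=socketserver.ThreadingTCPServer):
    server = server_cls(("127.0.0.1", 0), EchoUntilClosed)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    first = socket.create_connection(server.server_address, timeout=2)
    second = socket.create_connection(server.server_address, timeout=2)
    try:
        first.sendall(b"one")
        first.recv(1024)            # the first client is being served and stays connected
        second.sendall(b"two")
        return second.recv(1024)    # answered by a second handler thread
    finally:
        first.close()
        second.close()
        server.shutdown()
        server.server_close()

print(two_clients_at_once())
```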

ForkingTCPServer

ForkingTCPServer is used in the same way as ThreadingTCPServer and follows essentially the same execution flow. The only difference is that, internally, it creates a "process" for each client instead of a "thread".

Basic usage:

Server

```python
#!/usr/bin/env python
# -*- coding:utf-8 -*-
import SocketServer

class MyServer(SocketServer.BaseRequestHandler):

    def handle(self):
        # print self.request,self.client_address,self.server
        conn = self.request
        conn.sendall('Welcome to 10086. Enter 1xxx, or 0 for a human operator.')
        Flag = True
        while Flag:
            data = conn.recv(1024)
            # recv() returns an empty string once the client has closed the connection
            if not data or data == 'exit':
                Flag = False
            elif data == '0':
                conn.sendall('Your call may be recorded. Blah blah blah...')
            else:
                conn.sendall('Please try again.')

if __name__ == '__main__':
    server = SocketServer.ForkingTCPServer(('127.0.0.1',8009),MyServer)
    server.serve_forever()
```

Client

```python
#!/usr/bin/env python
# -*- coding:utf-8 -*-
import socket

ip_port = ('127.0.0.1',8009)
sk = socket.socket()
sk.connect(ip_port)
sk.settimeout(5)

while True:
    data = sk.recv(1024)
    if not data:  # the server has closed the connection
        break
    print 'receive:',data
    inp = raw_input('please input:')
    sk.sendall(inp)
    if inp == 'exit':
        break

sk.close()
```

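Compared with the ThreadingTCPServer example, the ForkingTCPServer example above changes only this line:

```python
server = SocketServer.ThreadingTCPServer(('127.0.0.1',8009),MyServer)
```

to:

```python
server = SocketServer.ForkingTCPServer(('127.0.0.1',8009),MyServer)
```

SocketServer's ForkingTCPServer can handle requests concurrently thanks to two things: select and os.fork. In essence, the server creates one new process per client, and that process handles the client's requests. As a result, n clients can stay connected at the same time (long-lived connections). Because it relies on os.fork, ForkingTCPServer is only available on Unix-like systems.

For the source analysis, refer to ThreadingTCPServer above. ForkingMixIn.process_request plays the role of ThreadingMixIn.process_request.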
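Here is a quick way to see that ForkingTCPServer really hands each request to a child process: have the handler report `os.getpid()` and compare it with the server's own PID. This is a Python 3, POSIX-only sketch (it needs `os.fork`). `PidHandler` and `pid_of_handler` are hypothetical names. The client connects before `handle_request()` is called, so everything runs in one thread.

```python
import os
import socket
import socketserver

class PidHandler(socketserver.BaseRequestHandler):
    """Replies with the PID of the process that runs handle()."""
    def handle(self):
        self.request.recv(1024)
        self.request.sendall(str(os.getpid()).encode())

def pid_of_handler():
    with socketserver.ForkingTCPServer(("127.0.0.1", 0), PidHandler) as server:
        client = socket.create_connection(server.server_address, timeout=5)
        client.sendall(b"ping")
        server.handle_request()   # accept, then fork: the child runs PidHandler
        reply = b""
        while True:               # read until the child shuts down its end
            chunk = client.recv(1024)
            if not chunk:
                break
            reply += chunk
        client.close()
    return int(reply)

print(pid_of_handler(), os.getpid())   # two different PIDs
```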

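Twisted

Twisted is an event-driven networking framework with many features, such as network protocols, threads, database management, network operations and e-mail.

Event-driven programming

In short, event-driven programming has two parts: first, registering events; second, triggering them.

A custom event-driven framework, named "Kingslayer":

The most awesome event-driven framework

```python
#!/usr/bin/env python
# -*- coding:utf-8 -*-
# event_drive.py

event_list = []

def run():
    for event in event_list:
        obj = event()
        obj.execute()

class BaseHandler(object):
    """
    Users must subclass this class; it standardizes the methods of all
    handler classes (similar to an interface).
    """
    def execute(self):
        raise Exception('you must override execute')
```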

A programmer using the "Kingslayer" framework:

```python
#!/usr/bin/env python
# -*- coding:utf-8 -*-
# assumes event_drive.py is saved inside a package directory named source
from source import event_drive

class MyHandler(event_drive.BaseHandler):
    def execute(self):
        print 'event-drive execute MyHandler'

event_drive.event_list.append(MyHandler)
event_drive.run()
```

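As the code above shows, event-driven programming simply means that the framework defines the order of execution. When using the framework, the programmer registers "events" into that order, and the framework triggers the registered "events" when it runs.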
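The "Kingslayer" framework registers handler classes and runs all of them. The two parts described earlier, registering and triggering, are often keyed by an event name instead. Here is a minimal Python 3 sketch of that variant. `EventBus`, `on` and `emit` are hypothetical names, not part of the framework above.

```python
from collections import defaultdict

class EventBus:
    """Minimal event registry: register callbacks, then trigger them by name."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def on(self, event_name, callback):
        """Part 1: register a callback for an event name."""
        self._handlers[event_name].append(callback)

    def emit(self, event_name, *args):
        """Part 2: trigger an event; callbacks run in registration order."""
        return [callback(*args) for callback in self._handlers[event_name]]

bus = EventBus()
bus.on("connect", lambda addr: "hello " + addr)
bus.on("connect", lambda addr: "log " + addr)
results = bus.emit("connect", "127.0.0.1")
print(results)
```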

Event-driven socket

```python
#!/usr/bin/env python
# -*- coding:utf-8 -*-
from twisted.internet import protocol
from twisted.internet import reactor

class Echo(protocol.Protocol):
    def dataReceived(self, data):
        # called by the reactor whenever data arrives; echo it back
        self.transport.write(data)

def main():
    factory = protocol.ServerFactory()
    factory.protocol = Echo
    reactor.listenTCP(8000,factory)
    reactor.run()

if __name__ == '__main__':
    main()
```

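Execution flow:

- Run the server program.

- Create Echo, a subclass of Protocol.

- Create a ServerFactory object and store the Echo class in its protocol attribute.

- Call the reactor's listenTCP method. Internally it uses tcp.Port to create the socket server object and adds that object to the reactor's set-type field _read.

- Call the reactor's run method. It runs a while loop and uses select to watch the file descriptors in _read for changes, looping until one changes.

- A client request arrives.

- The reactor's _doReadOrWrite method runs. Through reflection it calls the doRead method of tcp.Port. doRead accepts the client connection, then creates a Server object (which wraps the client socket) and an Echo object (which handles the request). Finally it calls the Echo instance's makeConnection method to set up the connection.

- The doRead method of tcp.Server runs and reads the data.

- The _dataReceived method of tcp.Server runs. If the data read is empty, the connection is closed; otherwise, Echo's dataReceived method is triggered.

- Echo's dataReceived method runs.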

As the source shows, the example above handles sockets with an event-driven approach combined with I/O multiplexing.
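The pattern in the flow above combines select-style multiplexing with callbacks registered per socket. It can be reproduced in a few lines with the standard `selectors` module. This is a toy Python 3 sketch, not Twisted's implementation. `MiniReactor`, `add_reader` and `listen_echo` are hypothetical names, and `on_data` plays the role of `Echo.dataReceived`.

```python
import selectors
import socket

class MiniReactor:
    """Toy reactor: waits for ready sockets and dispatches their callbacks."""

    def __init__(self):
        self.sel = selectors.DefaultSelector()

    def add_reader(self, sock, callback):
        self.sel.register(sock, selectors.EVENT_READ, callback)

    def remove_reader(self, sock):
        self.sel.unregister(sock)

    def run_once(self, timeout=None):
        for key, _ in self.sel.select(timeout):
            key.data(key.fileobj)   # call the callback registered for this socket

def listen_echo(reactor, host="127.0.0.1", port=0):
    server = socket.socket()
    server.bind((host, port))
    server.listen()
    server.setblocking(False)

    def on_data(conn):              # like Echo.dataReceived
        data = conn.recv(1024)
        if data:
            conn.sendall(data)      # small replies only: conn is non-blocking
        else:                       # empty read: the client closed the connection
            reactor.remove_reader(conn)
            conn.close()

    def on_accept(srv):             # like tcp.Port.doRead
        conn, _ = srv.accept()
        conn.setblocking(False)
        reactor.add_reader(conn, on_data)

    reactor.add_reader(server, on_accept)
    return server
```

Driving it by hand, one step at a time:

```python
reactor = MiniReactor()
server = listen_echo(reactor)
client = socket.create_connection(server.getsockname(), timeout=2)
reactor.run_once(timeout=1)   # accept the connection
client.sendall(b"hi")
reactor.run_once(timeout=1)   # read the data and echo it back
print(client.recv(1024))
```

A real reactor simply calls `run_once()` in an endless loop, and it also watches sockets for writability.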

Asynchronous I/O code:

```python
#!/usr/bin/env python
# -*- coding:utf-8 -*-
from twisted.internet import reactor, protocol
# getPage is deprecated in newer Twisted releases
from twisted.web.client import getPage
import time

class Echo(protocol.Protocol):

    def dataReceived(self, data):
        # getPage() returns a Deferred immediately; the reactor downloads the page later
        deferred1 = getPage('http://cnblogs.com')
        deferred1.addCallback(self.printContents)
        deferred2 = getPage('http://baidu.com')
        deferred2.addCallback(self.printContents)
        # time.sleep() blocks the single-threaded reactor, so printContents
        # cannot run until this loop has finished
        for i in range(2):
            time.sleep(1)
            print 'execute ',i

    def execute(self,data):
        self.transport.write(data)

    def printContents(self,content):
        print len(content),content[0:100],time.time()

def main():
    factory = protocol.ServerFactory()
    factory.protocol = Echo
    reactor.listenTCP(8000,factory)
    reactor.run()

if __name__ == '__main__':
    main()
```
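In the code above, `addCallback` attaches functions that run once the result arrives, and each callback receives the previous callback's return value. Below is a toy Python 3 imitation of that chaining, to show the mechanism. `MiniDeferred` is a hypothetical class, not Twisted's `Deferred`; errbacks and cancellation are left out. As with Twisted, `addCallback` returns the object itself so calls can be chained.

```python
class MiniDeferred:
    """Toy callback chain: holds callbacks until a result is supplied."""

    def __init__(self):
        self.callbacks = []
        self.fired = False
        self.result = None

    def addCallback(self, fn):
        self.callbacks.append(fn)
        if self.fired:              # already has a result: run the new callback now
            self._run_callbacks()
        return self

    def callback(self, result):
        if self.fired:
            raise RuntimeError("MiniDeferred already fired")
        self.fired = True
        self.result = result
        self._run_callbacks()

    def _run_callbacks(self):
        while self.callbacks:
            fn = self.callbacks.pop(0)
            self.result = fn(self.result)   # each callback gets the previous return value

d = MiniDeferred()
d.addCallback(len).addCallback(lambda n: n * 2)
d.callback("abc")    # simulates the page content arriving later
print(d.result)      # len("abc") * 2
```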