
Preface:

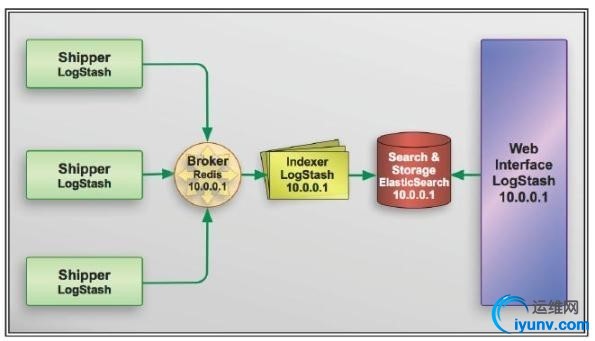

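ELK is a combination of three pieces of software: Elasticsearch, a search engine; Logstash, which collects logs; and Kibana, which analyzes and visualizes them in real time.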

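[Software for collecting logs includes scribe, flume, heka, logstash, chukwa and fluentd. rsyslog and syslog-ng can also collect logs.

Software for storing the collected logs includes HDFS, Cassandra, MongoDB, Redis and Elasticsearch.

For analysis: if you store logs in HDFS, you can write MapReduce jobs. If you need real-time analysis, use Kibana for visualization.]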

112.74.76.115 #runs the Logstash agent and nginx

115.29.150.217 #runs the Logstash indexer, Elasticsearch, Redis and nginx

I. Installing and configuring Redis (115.29.150.217)

1. Download and build:

#wget https://github.com/antirez/redis/archive/2.8.20.tar.gz

# tar xf 2.8.20.tar.gz

# cd redis-2.8.20/ && make

After make finishes, the executables are in /usr/local/redis-2.8.20/src. This path assumes the source was unpacked under /usr/local.

2. Next, create the directories for Redis's configuration, runtime files and data, then copy the files into place.

# mkdir -pv /usr/local/redis/{conf,run,db}

# cp redis.conf /usr/local/redis/conf/

# cd src

# cp redis-benchmark redis-check-aof redis-check-dump redis-cli redis-server mkreleasehdr.sh /usr/local/redis

3. Start Redis

# /usr/local/redis/redis-server /usr/local/redis/conf/redis.conf &    starts Redis in the background on the default port 6379

II. Installing and configuring Elasticsearch (115.29.150.217)

1. Download and install

#wget https://download.elastic.co/elas ... search-2.3.2.tar.gz

# tar xf elasticsearch-2.3.2.tar.gz

# mkdir -p /usr/local/elk

# mv elasticsearch-2.3.2 /usr/local/elk/

# ln -s /usr/local/elk/elasticsearch-2.3.2/bin/elasticsearch /usr/bin/

2. Start it in the background

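# elasticsearch -d    -d runs Elasticsearch as a daemon. Elasticsearch 2.x refuses to start as root, so run it as an ordinary user.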

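3. Check that it is running. The sample response below was captured on a 1.4.1 node, so your version number will differ: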

# curl 115.29.150.217:9200


```json
{
  "status" : 200,
  "name" : "Gorgeous George",
  "cluster_name" : "elasticsearch",
  "version" : {
    "number" : "1.4.1",
    "build_hash" : "89d3241d670db65f994242c8e8383b169779e2d4",
    "build_timestamp" : "2014-11-26T15:49:29Z",
    "build_snapshot" : false,
    "lucene_version" : "4.10.2"
  },
  "tagline" : "You Know, for Search"
}
```
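The same check can be written in Python using only the standard library. fetch_banner and summarize_banner are hypothetical helper names. The useful part is comparing version.number with the release you actually installed: the sample above came from a 1.4.1 node, while this guide unpacks 2.3.2.

```python
import json
import urllib.request


def fetch_banner(base_url: str, timeout: float = 5.0) -> dict:
    """GET the root endpoint of an Elasticsearch node and decode the JSON body."""
    with urllib.request.urlopen(base_url.rstrip("/") + "/", timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def summarize_banner(banner: dict) -> dict:
    """Keep only the fields worth checking after an install."""
    version = banner.get("version", {})
    number = version.get("number")
    return {
        "cluster": banner.get("cluster_name"),
        "node": banner.get("name"),
        "version": number,
        # Major version, e.g. 1 for "1.4.1" or 2 for "2.3.2".
        "major": int(number.split(".")[0]) if number else None,
        "lucene": version.get("lucene_version"),
    }
```

For example, summarize_banner(fetch_banner("http://115.29.150.217:9200"))["major"] should be 2 for the tarball installed here.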

4. Installing with yum [extra]

#rpm --import https://packages.elastic.co/GPG-KEY-elasticsearch    import the signing key

# vim /etc/yum.repos.d/CentOS-Base.repo    add the repository below


```ini
[elasticsearch-2.x]
name=Elasticsearch repository for 2.x packages
baseurl=http://packages.elastic.co/elasticsearch/2.x/centos
gpgcheck=1
gpgkey=http://packages.elastic.co/GPG-KEY-elasticsearch
enabled=1
```

# yum makecache    refresh the yum cache

# yum install elasticsearch -y    install

#chkconfig --add elasticsearch    register it as a system service

#service elasticsearch start    start it

5. Installing plugins [extra]

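# cd /usr/share/elasticsearch/

# ./bin/plugin -install mobz/elasticsearch-head

# ./bin/plugin -install lukas-vlcek/bigdesk/2.5.0

These commands use the 1.x plugin syntax. On Elasticsearch 2.x the command is ./bin/plugin install <name>, without the dash.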

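For more details on these two plugins, see https://github.com/mobz/elasticsearch-head and https://github.com/lukas-vlcek/bigdesk

http://115.29.150.217:9200/_plugin/bigdesk/#nodes

http://115.29.150.217:9200/_plugin/head/    shows Elasticsearch cluster information and monitoring data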

[For installing Kibana, see the guide at http://kibana.logstash.es/content/kibana/v4/setup.html ]

III. Installing Logstash (112.74.76.115)

1. Download and unpack

#wget https://download.elastic.co/logs ... gstash-1.5.3.tar.gz

#tar xf logstash-1.5.3.tar.gz -C /usr/local/

#mkdir /usr/local/logstash-1.5.3/etc

IV. Installing Logstash with yum (115.29.150.217)

# rpm --import https://packages.elasticsearch.org/GPG-KEY-elasticsearch

# vi /etc/yum.repos.d/CentOS-Base.repo


```ini
[logstash-1.5]
name=Logstash repository for 1.5.x packages
baseurl=http://packages.elasticsearch.org/logstash/1.5/centos
gpgcheck=1
gpgkey=http://packages.elasticsearch.org/GPG-KEY-elasticsearch
enabled=1
```

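# yum install logstash -y    install Logstash from the new repository (the package installs under /opt/logstash)

Testing Logstash:

Run a quick test on 115.29.150.217: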

#cd /opt/logstash/bin

#./logstash -e 'input{stdin{}}output{stdout{codec=>rubydebug}}'


```
hello
Logstash startup completed
{
    "message" => "hello",
    "@version" => "1",
    "@timestamp" => "2016-05-26T11:01:44.039Z",
    "host" => "iZ947d960cbZ"
}
```
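Each line typed on stdin becomes an event with the four fields shown above. Below is a rough Python illustration of that shape, which can help if you want to hand-craft test events. make_event is a hypothetical helper and not part of Logstash. @timestamp is UTC with millisecond precision, as in the sample output.

```python
import socket
from datetime import datetime, timezone


def make_event(line: str, host: str = None) -> dict:
    """Build a dict shaped like the rubydebug output for one stdin line (illustration only)."""
    now = datetime.now(timezone.utc)
    # e.g. "2016-05-26T11:01:44.039Z": UTC, three fractional digits, trailing "Z"
    ts = now.strftime("%Y-%m-%dT%H:%M:%S.") + f"{now.microsecond // 1000:03d}Z"
    return {
        "message": line.rstrip("\n"),
        "@version": "1",
        "@timestamp": ts,
        "host": host or socket.gethostname(),
    }
```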

You can also check Elasticsearch with curl. Nothing has been indexed yet, so the result is empty:

# curl 'http://115.29.150.217:9200/_search?pretty'


```json
{
  "took" : 1,
  "timed_out" : false,
  "_shards" : {
    "total" : 0,
    "successful" : 0,
    "failed" : 0
  },
  "hits" : {
    "total" : 0,
    "max_score" : 0.0,
    "hits" : [ ]
  }
}
```
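Once the indexer is running, the same _search request should start returning documents. The small Python helper below pulls the hit count and the stored documents out of a _search response. summarize_hits is a hypothetical name. The body can be the text curl printed or JSON you fetched some other way.

```python
import json


def summarize_hits(body) -> tuple:
    """Return (total_hits, [_source, ...]) from an Elasticsearch _search response.

    body may be raw JSON text (for example, what curl printed) or an already-decoded dict.
    """
    data = json.loads(body) if isinstance(body, str) else body
    hits = data.get("hits", {})
    total = hits.get("total", 0)
    if isinstance(total, dict):  # Elasticsearch 7+ reports {"value": n, "relation": ...}
        total = total.get("value", 0)
    return total, [hit.get("_source", {}) for hit in hits.get("hits", [])]
```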

V. Configuring Logstash

1. Set the nginx log format on both servers. The log_format directive goes in the http block of nginx.conf:

log_format logstash '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

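access_log logs/test.access.log logstash;    writes the access log to this file in that format. A line is appended on every request.

#/usr/local/nginx/sbin/nginx -s reload    reload nginx. Running /usr/local/nginx/sbin/nginx -t first checks the configuration for errors.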
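To see what the agent will be shipping, here is a minimal Python sketch that splits one line in the log format above into named fields. The regular expression is an illustrative translation of that log_format and is not provided by nginx or Logstash. The sample line in the test is made up.

```python
import re

# One line of: $remote_addr - $remote_user [$time_local] "$request" $status
# $body_bytes_sent "$http_referer" "$http_user_agent" "$http_x_forwarded_for"
LOG_LINE = re.compile(
    r'(?P<remote_addr>\S+) - (?P<remote_user>\S+) '
    r'\[(?P<time_local>[^\]]+)\] '
    r'"(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) (?P<body_bytes_sent>\d+) '
    r'"(?P<http_referer>[^"]*)" '
    r'"(?P<http_user_agent>[^"]*)" '
    r'"(?P<http_x_forwarded_for>[^"]*)"$'
)


def parse_line(line: str):
    """Return a dict of fields, or None if the line does not fit the format."""
    m = LOG_LINE.match(line.rstrip("\n"))
    if m is None:
        return None
    fields = m.groupdict()
    fields["status"] = int(fields["status"])
    fields["body_bytes_sent"] = int(fields["body_bytes_sent"])
    return fields
```

parse_line returns None for lines that do not fit, which is a quick way to spot entries written before the format change.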

2. Start the Logstash agent

The Logstash agent collects log entries and ships them to the Redis queue:

[root@112.74.76.115 logstash-1.5.3]#vim etc/logstash_agent.conf


```
input {
  file {
    type => "nginx access log"
    path => ["/usr/local/nginx/logs/test.access.log"]
  }
}

output {
  redis {
    host => "115.29.150.217"   # Redis server
    data_type => "list"
    key => "logstash:redis"
  }
}
```
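With data_type => "list", Redis acts as a simple FIFO buffer between the agents and the indexer. As far as I know, the Logstash redis output appends JSON-encoded events with RPUSH, and the redis input takes them from the head of the list (BLPOP by default). The sketch below models that hand-off in memory with a deque, with no Redis server involved. ListQueue, ship and consume are hypothetical names.

```python
import json
from collections import deque

KEY = "logstash:redis"  # the key both the agent and the indexer configs use


class ListQueue:
    """In-memory stand-in for a Redis list (hypothetical helper, for illustration only)."""

    def __init__(self):
        self._lists = {}

    def rpush(self, key, value):
        """Append to the tail, like Redis RPUSH; returns the new length."""
        items = self._lists.setdefault(key, deque())
        items.append(value)
        return len(items)

    def lpop(self, key):
        """Remove from the head, like Redis LPOP; None when the list is empty."""
        items = self._lists.get(key)
        return items.popleft() if items else None

    def llen(self, key):
        return len(self._lists.get(key, ()))


def ship(queue, event):
    """Agent side: serialize the event as JSON and append it to the list."""
    return queue.rpush(KEY, json.dumps(event))


def consume(queue):
    """Indexer side: take the oldest event off the list, or None if nothing is queued."""
    raw = queue.lpop(KEY)
    return None if raw is None else json.loads(raw)
```

Because events stay in the list until the indexer takes them, Redis also buffers logs while the indexer or Elasticsearch is down.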

#nohup /usr/local/logstash-1.5.3/bin/logstash -f /usr/local/logstash-1.5.3/etc/logstash_agent.conf &    ships the logs to Redis on the 217 server.

The agent configuration on 115.29.150.217 is identical:

[root@115.29.150.217]# vim /etc/logstash/logstash_agent.conf


```
input {
  file {
    type => "nginx access log"
    path => ["/usr/local/nginx/logs/test.access.log"]
  }
}

output {
  redis {
    host => "115.29.150.217"   # Redis server
    data_type => "list"
    key => "logstash:redis"
  }
}
```

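# /opt/logstash/bin/logstash -f /etc/logstash/logstash_agent.conf &    this also pushes 217's own logs onto the queue. To add more servers, repeat the same steps: install Logstash on each one, then have it ship the logs it collects to Redis.

#ps -ef |grep logstash    shows the background processes. Make sure Redis is running, otherwise Logstash reports: Failed to send event to Redis

Output like the following in the Redis log means events are being pushed successfully: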

[1460] 26 May 19:53:01.066 * 10 changes in 300 seconds. Saving...

[1460] 26 May 19:53:01.067 * Background saving started by pid 1577

[1577] 26 May 19:53:01.104 * DB saved on disk

[1577] 26 May 19:53:01.104 * RDB: 0 MB of memory used by copy-on-write

[1460] 26 May 19:53:01.167 * Background saving terminated with success
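The Redis log only shows that something is being written. To inspect the queue itself, /usr/local/redis/redis-cli -h 115.29.150.217 llen logstash:redis prints how many events are waiting. The same check can be scripted without a client library by speaking the Redis protocol (RESP) directly. This is a minimal sketch that handles only one-line replies such as PING and LLEN. encode_command, parse_simple_reply and redis_call are hypothetical helper names.

```python
import socket


def encode_command(*args) -> bytes:
    """Encode a Redis command as a RESP array of bulk strings."""
    parts = [b"*%d\r\n" % len(args)]
    for arg in args:
        data = arg if isinstance(arg, bytes) else str(arg).encode("utf-8")
        parts.append(b"$%d\r\n%s\r\n" % (len(data), data))
    return b"".join(parts)


def parse_simple_reply(raw: bytes):
    """Parse a one-line RESP reply: +status, :integer or -error."""
    line = raw.split(b"\r\n", 1)[0].decode("utf-8")
    kind, body = line[:1], line[1:]
    if kind == "+":
        return body
    if kind == ":":
        return int(body)
    if kind == "-":
        raise RuntimeError(body)
    raise ValueError(f"unsupported reply type: {kind!r}")


def redis_call(host: str, port: int, *args, timeout: float = 3.0):
    """Send one command and return its reply (short single-line replies only)."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(encode_command(*args))
        return parse_simple_reply(sock.recv(4096))
```

redis_call("115.29.150.217", 6379, "LLEN", "logstash:redis") should stay near 0 while the indexer keeps up, and keep growing if the indexer is stopped.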

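3. Start the Logstash indexer (115.29.150.217)

Note: the grok patterns below expect each line to begin with the host and the client IP and to end with the request time. That is not the layout produced by the log_format in step 1. Lines that do not match are stored unparsed and tagged _grokparsefailure. Either change the nginx log_format to match these patterns, or switch to grok's built-in %{COMBINEDAPACHELOG} pattern, which matches the combined-style fields of that format.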

# vim /etc/logstash/logstash_indexer.conf


```
input {
  redis {
    host => "115.29.150.217"
    data_type => "list"
    key => "logstash:redis"
    type => "redis-input"
  }
}

filter {
  # The agents already set type => "nginx access log"; an input's type setting
  # does not overwrite a type the event already carries, so match on that value.
  if [type] == "nginx access log" {
    grok {
      match => [
        "message", "%{IPORHOST:http_host} %{IPORHOST:client_ip} \[%{HTTPDATE:timestamp}\] \"(?:%{WORD:http_verb} %{NOTSPACE:http_request}(?: HTTP/%{NUMBER:http_version})?|%{DATA:raw_http_request})\" %{NUMBER:http_status_code} (?:%{NUMBER:bytes_read}|-) %{QS:referrer} %{QS:agent} %{NUMBER:time_duration:float} %{NUMBER:time_backend_response:float}",
        "message", "%{IPORHOST:http_host} %{IPORHOST:client_ip} \[%{HTTPDATE:timestamp}\] \"(?:%{WORD:http_verb} %{NOTSPACE:http_request}(?: HTTP/%{NUMBER:http_version})?|%{DATA:raw_http_request})\" %{NUMBER:http_status_code} (?:%{NUMBER:bytes_read}|-) %{QS:referrer} %{QS:agent} %{NUMBER:time_duration:float}"
      ]
    }
  }
}

output {
  elasticsearch {
    embedded => false
    protocol => "http"
    host => "localhost"
    port => "9200"
  }
}
```
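To see why the log format matters, here is a rough Python equivalent of the second grok pattern above. The grok sub-patterns (IPORHOST, HTTPDATE, QS, NUMBER and so on) are approximated with simpler regular expressions, so treat this as an illustration rather than a faithful port of grok. GROK_EQUIV and grok_like are hypothetical names. In the test, a made-up line that starts with a host name and ends with a request time matches. A combined-format line does not match, and Logstash would tag it _grokparsefailure.

```python
import re

NUM = r"[+-]?\d+(?:\.\d+)?"  # stands in for %{NUMBER}

# Rough equivalent of the second grok pattern; IPORHOST, HTTPDATE and QS are simplified.
GROK_EQUIV = re.compile(
    r"(?P<http_host>\S+) (?P<client_ip>\S+) "            # %{IPORHOST} %{IPORHOST}
    r"\[(?P<timestamp>[^\]]+)\] "                        # \[%{HTTPDATE}\]
    r'"(?:(?P<http_verb>\w+) (?P<http_request>\S+)'      # %{WORD} %{NOTSPACE}
    rf"(?: HTTP/(?P<http_version>{NUM}))?"               # optional HTTP/%{NUMBER}
    r'|(?P<raw_http_request>.*?))" '                     # |%{DATA}
    rf"(?P<http_status_code>{NUM}) (?:(?P<bytes_read>{NUM})|-) "
    r'(?P<referrer>"[^"]*") (?P<agent>"[^"]*") '         # %{QS} %{QS}
    rf"(?P<time_duration>{NUM})"                         # %{NUMBER:time_duration:float}
)


def grok_like(line: str):
    """Return the extracted fields, or None where Logstash would add _grokparsefailure."""
    match = GROK_EQUIV.search(line)  # grok patterns are not anchored, hence search()
    if match is None:
        return None
    fields = match.groupdict()
    fields["time_duration"] = float(fields["time_duration"])  # mirrors the :float suffix
    return fields
```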

# nohup /opt/logstash/bin/logstash -f /etc/logstash/logstash_indexer.conf &

VI. Installing Kibana

What's new in Kibana 4:

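1) Highlighted tabs and easier-to-use links, with a style that supports data density and a more consistent UI.

2) A consistent query and filter layout.

3) A completely new time range picker.

4) A filterable field list.

5) Dynamic dashboards, URL parameters and more.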

1. Download and unpack

# wget https://download.elastic.co/kiba ... .1-linux-x64.tar.gz

# tar xf kibana-4.1.1-linux-x64.tar.gz

# mv kibana-4.1.1-linux-x64 /usr/local/elk/kibana

2. Start Kibana

# pwd

/usr/local/elk/kibana/bin

# ./kibana &

Then open http://115.29.150.217:5601 in a browser.
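On first launch, Kibana 4 asks for an index pattern. By default, the Logstash elasticsearch output writes one index per day named logstash-%{+YYYY.MM.dd}, so the default pattern logstash-* with @timestamp as the time field fits this setup. The date comes from the event's @timestamp, which is in UTC. On a server running on China time (UTC+8), a new daily index therefore starts at 08:00 local time. The helper names below are hypothetical.

```python
from datetime import date, datetime


def daily_index(day: date, prefix: str = "logstash") -> str:
    """Index name the Logstash elasticsearch output uses by default for a given UTC day."""
    return f"{prefix}-{day:%Y.%m.%d}"


def index_for_timestamp(ts: str, prefix: str = "logstash") -> str:
    """Map an @timestamp such as '2016-05-26T11:01:44.039Z' (always UTC) to its daily index."""
    parsed = datetime.strptime(ts, "%Y-%m-%dT%H:%M:%S.%fZ")
    return daily_index(parsed.date(), prefix)
```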

[Extra]

Installing Kibana 3:

#wget https://download.elasticsearch.org/kibana/kibana/kibana-3.1.2.zip

# unzip kibana-3.1.2.zip && mv kibana-3.1.2 kibana

# mv kibana /usr/local/nginx/html/

Configure nginx to serve Kibana:


```nginx
location /kibana/ {
    alias /usr/local/nginx/html/kibana/;
    index index.php index.html index.htm;
}
```

Then browse to http://115.29.150.217/kibana/index.html

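VII. Adding login authentication to Kibana

Kibana is written in Node.js and has no access control of its own: anyone who can reach the URL can use it. If it is exposed on the public internet, put nginx in front of it as a reverse proxy with authentication, as follows.

Kibana has no restart command. To stop it, find the Node.js process with ps -ef | grep node and kill it.

1. Edit the nginx configuration to add authentication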

# vim nginx.conf


```nginx
location /kibana/ {
    #alias /usr/local/nginx/html/kibana/;
    proxy_pass http://115.29.150.217:5602/;
    index index.php index.html index.htm;
    auth_basic "secret";
    auth_basic_user_file /usr/local/nginx/db/passwd.db;
}
```

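[Security hardening]

For security, I changed Kibana's default port from 5601 to 5602. The port has to be changed both in Kibana's configuration file and in the nginx reverse-proxy configuration, otherwise Kibana will be unreachable.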

# pwd

/usr/local/elk/kibana/config

# vim kibana.yml

port: 5602    change this setting.

host: "0.0.0.0"    to reduce public exposure further, change this to 127.0.0.1 (localhost) and point the nginx proxy_pass at that address.

# mkdir -p /usr/local/nginx/db/

2. Create the login user and password

# yum install -y httpd-tools    installs the htpasswd tool

# htpasswd -c /usr/local/nginx/db/passwd.db elkuser

New password:    enter the password

Re-type new password:    enter it again

Adding password for user elkuser
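If httpd-tools is not available, nginx can also read salted SHA-1 entries in the {SSHA} scheme, which the ngx_http_auth_basic_module documentation lists for auth_basic_user_file. Check that your nginx version supports it before relying on it. The Python sketch below builds such a line as base64(SHA1(password + salt) + salt). It assumes you append the result to /usr/local/nginx/db/passwd.db yourself. ssha_entry and check_ssha are hypothetical names.

```python
import base64
import hashlib
import hmac
import os


def ssha_entry(user: str, password: str, salt: bytes = None) -> str:
    """Return a 'user:{SSHA}...' line: base64(SHA1(password + salt) + salt)."""
    if salt is None:
        salt = os.urandom(8)  # a fresh random salt per entry
    digest = hashlib.sha1(password.encode("utf-8") + salt).digest()
    return f"{user}:{{SSHA}}" + base64.b64encode(digest + salt).decode("ascii")


def check_ssha(entry: str, password: str) -> bool:
    """Verify a password against a line produced by ssha_entry()."""
    _user, _, hashed = entry.partition(":")
    raw = base64.b64decode(hashed[len("{SSHA}"):])
    digest, salt = raw[:20], raw[20:]  # a SHA-1 digest is 20 bytes; the rest is salt
    candidate = hashlib.sha1(password.encode("utf-8") + salt).digest()
    return hmac.compare_digest(candidate, digest)
```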

Restart (or reload) nginx.

Access test (this was tested with Kibana 3):
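The access test can also be scripted. The Python sketch below requests a URL with or without HTTP Basic credentials and returns the status code. basic_auth_header and status_of are hypothetical names, and the URL and credentials in the usage line are placeholders for your own.

```python
import base64
import urllib.error
import urllib.request


def basic_auth_header(user: str, password: str) -> str:
    """Value of the Authorization header used by HTTP Basic authentication."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return "Basic " + token


def status_of(url: str, user: str = None, password: str = None, timeout: float = 5.0) -> int:
    """Return the HTTP status code for url, optionally sending Basic credentials."""
    request = urllib.request.Request(url)
    if user is not None:
        request.add_header("Authorization", basic_auth_header(user, password))
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # 401 means nginx asked for credentials
```

With the configuration above, status_of("http://115.29.150.217/kibana/") should return 401. Passing the elkuser credentials should return 200.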

Starting and stopping Elasticsearch, Logstash, Kibana and Redis:

[Redis]

#/usr/local/redis/redis-server /usr/local/redis/conf/redis.conf &    start

#killall redis-server    stop

[elasticsearch]

#elasticsearch -d    start

# ps -ef | grep elasticsearch    find the PID, then kill it

[logstash]

#nohup /usr/local/logstash-1.5.3/bin/logstash -f /usr/local/logstash-1.5.3/etc/logstash_agent.conf &    start shipping logs

# ps -ef | grep logstash    find the PID, then kill it

[kibana]

Kibana 3: unpack it into the web root and it is ready to use.

Kibana 4:

#/usr/local/elk/kibana/bin/kibana &    start in the background

# ps -ef | grep node    find the node process and kill it
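The ps | grep | kill routine above can be scripted. This minimal Python sketch assumes the Linux ps -ef column layout (UID PID PPID C STIME TTY TIME CMD). It skips the grep process that a shell pipeline would also list, and it sends SIGTERM so each service can shut down cleanly. pids_matching and stop are hypothetical names. Choose patterns specific enough that they match only the process you mean.

```python
import os
import signal
import subprocess


def pids_matching(ps_output: str, pattern: str) -> list:
    """PIDs from `ps -ef` output whose command line contains pattern.

    Skips the header line and any grep process, which a `ps -ef | grep ...`
    pipeline would otherwise list as well.
    """
    pids = []
    for line in ps_output.splitlines()[1:]:  # line 0 is the UID PID PPID ... header
        cols = line.split(None, 7)           # CMD, the 8th column, may contain spaces
        if len(cols) < 8:
            continue
        pid, cmd = int(cols[1]), cols[7]
        if pattern in cmd and not cmd.startswith("grep"):
            pids.append(pid)
    return pids


def stop(pattern: str, sig: int = signal.SIGTERM) -> list:
    """Send sig (SIGTERM by default) to every matching process; return the PIDs signalled."""
    out = subprocess.run(["ps", "-ef"], capture_output=True, text=True, check=True).stdout
    targets = [pid for pid in pids_matching(out, pattern) if pid != os.getpid()]
    for pid in targets:
        os.kill(pid, sig)
    return targets
```

For example, stop("redis-server") does the same job as killall redis-server.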
