|

|

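keepalived is software written in C that aims to provide simple, robust high availability and load balancing for Linux systems and Linux-based infrastructure. It implements layer-4 load balancing on top of the Linux kernel's ipvs module, and it combines a set of health checks with the VRRP protocol to make services highly available.

I. The VRRP Protocol

VRRP (Virtual Router Redundancy Protocol) is a fault-tolerance protocol. Typically every host on a network is configured with a default route, so packets whose destination lies outside the local segment are sent via that route to a router, RouterA, which gives the hosts access to external networks. If RouterA fails, every host on the segment that uses RouterA as its default next hop loses external connectivity: a single point of failure. VRRP was designed to solve this problem.

VRRP groups a set of routers on a LAN into one virtual router. The virtual router serves clients through a virtual IP, while several physical routers cooperate behind it. At any moment only one physical router holds the virtual IP; as the master it answers ARP requests and forwards packets. The other physical routers act as backups: they provide no external service and only receive the master's VRRP advertisements.

The master is elected by priority. Each physical router has a priority between 1 and 255, and the router with the highest priority becomes the master. If priorities are equal, the router with the higher IP address wins.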
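The election rule can be sketched in a few lines of Python. This is only an illustration of "highest priority, then highest IP address", not how keepalived implements it; the `Router` class and `elect_master` function are hypothetical names, and the addresses are those of node1 and node2 used later in this article.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Router:
    name: str
    priority: int     # 1-255, higher wins
    primary_ip: str   # interface address, used only as the tie-breaker


def elect_master(routers):
    """Return the router with the highest priority; ties go to the higher IP."""
    if not routers:
        raise ValueError("no routers available")
    return max(
        routers,
        key=lambda r: (r.priority, int(ipaddress.ip_address(r.primary_ip))),
    )


group = [
    Router("node1", 100, "192.168.30.10"),
    Router("node2", 99, "192.168.30.20"),
]
print(elect_master(group).name)  # node1
```

Comparing the addresses as integers rather than as strings matters: as strings, "192.168.30.9" would sort above "192.168.30.10".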

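In VRRP all packets are sent as IP multicast. Within a virtual router only the master keeps sending VRRP advertisements; the backups just listen to them to monitor the master's state, so a backup does not preempt the master unless its own priority is higher. When the master becomes unavailable, the backups stop receiving its advertisements and conclude that it has failed. They then hold an election, and the backup with the highest priority becomes the new master, which keeps the service available.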
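How long a backup waits before it declares the master dead is not spelled out above. As a rough sketch, assuming the VRRPv2 timers defined in RFC 3768 and an advertisement interval of 1 second (this article never sets `advert_int`, so the value is an assumption), the wait can be computed as follows. The function names are illustrative.

```python
def skew_time(priority):
    """RFC 3768 Skew_Time in seconds: higher priority means a shorter skew."""
    return (256 - priority) / 256


def master_down_interval(priority, advert_int=1.0):
    """Seconds without advertisements before a backup takes over."""
    return 3 * advert_int + skew_time(priority)


print(round(master_down_interval(99), 3))   # 3.613
print(round(master_down_interval(100), 3))  # 3.609
```

Because the skew shrinks as the priority grows, the backup with the highest priority times out first and is the first to announce itself as master.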

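II. keepalived Components

The main components of keepalived:

WatchDog: monitors the checkers and VRRP processes

Checkers: checks the health of the servers and isolates failed ones

VRRP Stack: implements the VRRP protocol, i.e. failover within the HA cluster

IPVS wrapper: passes the configured ipvs rules to the kernel's ipvs module

Netlink Reflector: sets and moves the virtual IP

III. When to Use keepalived

In theory keepalived can provide high availability for services such as mysqld and httpd. Making those services highly available usually requires shared storage, which keepalived can only handle through extra commands or scripts, and in that situation it is clearly a weaker choice than heartbeat or corosync. keepalived is better suited to lightweight high-availability setups, such as nginx or haproxy acting as a reverse proxy, or ipvs, none of which need shared storage. keepalived can both make ipvs highly available and do load balancing on top of ipvs, and its health checks let it track the state of the back-end servers and maintain the server pool dynamically.

IV. High Availability for an nginx Reverse Proxy with keepalived

1. Lab topology

2. Install httpd on node3, then install nginx on node1 and node2 and configure them as reverse proxies to node3. Also make sure the clocks of the two high-availability nodes are synchronized.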

| [iyunv@node3 ~]# yum -y install httpd

...

[iyunv@node3 ~]# vim /var/www/html/test.html # create a test page

hello,keepalived

[iyunv@node3 ~]# service httpd start

...

|

| [iyunv@node1 ~]# yum -y install nginx;ssh root@node2 'yum -y install nginx'

...

[iyunv@node1 ~]# vim /etc/nginx/conf.d/default.conf

...

location ~ \.html$ {

proxy_pass http://192.168.30.13;

}

...

[iyunv@node1 ~]# scp /etc/nginx/conf.d/default.conf root@node2:/etc/nginx/conf.d/

...

[iyunv@node1 ~]# service nginx start;ssh root@node2 'service nginx start' # start nginx on both nodes

...

|

3. Install and configure keepalived on node1 and node2

yum -y install keepalived

Main configuration file: /etc/keepalived/keepalived.conf

Configuration help: man keepalived.conf

keepalived logs to /var/log/messages

| [iyunv@node1 ~]# yum -y install keepalived;ssh root@node2 'yum -y install keepalived'

...

[iyunv@node1 ~]# rpm -ql keepalived

/etc/keepalived

/etc/keepalived/keepalived.conf # configuration file

/etc/rc.d/init.d/keepalived

/etc/sysconfig/keepalived

/usr/bin/genhash

/usr/libexec/keepalived

/usr/sbin/keepalived

...

/usr/share/doc/keepalived-1.2.13/samples # sample configuration files for reference

...

[iyunv@node1 keepalived]# ls /usr/share/doc/keepalived-1.2.13/samples

keepalived.conf.fwmark keepalived.conf.SMTP_CHECK keepalived.conf.vrrp.lvs_syncd

keepalived.conf.HTTP_GET.port keepalived.conf.SSL_GET keepalived.conf.vrrp.routes

keepalived.conf.inhibit keepalived.conf.status_code keepalived.conf.vrrp.scripts

keepalived.conf.IPv6 keepalived.conf.track_interface keepalived.conf.vrrp.static_ipaddress

keepalived.conf.misc_check keepalived.conf.virtualhost keepalived.conf.vrrp.sync

keepalived.conf.misc_check_arg keepalived.conf.virtual_server_group sample.misccheck.smbcheck.sh

keepalived.conf.quorum keepalived.conf.vrrp

keepalived.conf.sample keepalived.conf.vrrp.localcheck

|

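The keepalived configuration file has four main sections:

global_defs: global section, mainly email notification and the machine identifier

vrrp_instance: vrrp instance section, configures the high-availability cluster

virtual_server: lvs section, configures the load-balancing cluster

vrrp_script: vrrp script section, used to monitor cluster resources; it takes effect only when referenced from a vrrp_instance section

Note: not all of these sections are required; configure only what you need. For a pure load-balancing cluster you can leave out vrrp_instance, and vice versa; if you do not want email notification, you do not even need global_defs.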

| [iyunv@node1 ~]# cd /etc/keepalived/

[iyunv@node1 keepalived]# mv keepalived.conf keepalived.conf.back

[iyunv@node1 keepalived]# vim keepalived.conf

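! Configuration File for keepalived # lines starting with "!" are comments

global_defs { # global section: mainly email notification and the machine identifier

notification_email { # who receives email when an event (switchover, failure) occurs; one address per line

root@localhost # recipient

magedu@126.com

}

notification_email_from kanotify@magedu.com # sender

smtp_connect_timeout 10 # timeout for connecting to the smtp server

smtp_server 127.0.0.1 # smtp server address

router_id nginx-node1 # machine identifier; usually the hostname, though that is not required; it appears in the email subject

}

vrrp_script chk_nginx { # vrrp script section; takes effect only when referenced from a vrrp instance

script "killall -0 nginx" # signal 0 only tests whether the process exists; exit status 0 means healthy, non-zero means failed

interval 1 # interval between checks

weight -2 # lower the priority by 2 once the check is considered failed

fall 3 # number of failed checks needed to consider the script failed

rise 1 # number of successful checks needed to consider the script healthy

}

vrrp_script chk_mantaince_down { # this vrrp script lets us demote the node by hand by creating a file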

script "[[ -f /etc/keepalived/down ]] && exit 1 || exit 0"

interval 1

weight -2

fall 3

rise 1

}

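vrrp_instance VI_1 { # vrrp instance section; several may be defined, each with a unique name

interface eth0 # physical interface that vrrp binds to

state MASTER # initial state of the node; not decisive, because at startup keepalived determines the role from the node priorities

priority 100 # priority, 1-255

virtual_router_id 11 # virtual router id; distinguishes the VRRP multicast of different instances

garp_master_delay 1 # delay before refreshing ARP caches after becoming master; default 5 seconds

smtp_alert # send notifications on state changes using the email settings from the global section

authentication { # authentication between nodes; the types are PASS and AH

auth_type PASS

auth_pass magedu # within a vrrp instance, master and backup must use the same password to communicate

}

track_interface { # tracked interfaces; if one fails, the node enters the fault state

eth0

}

virtual_ipaddress {

192.168.30.30/24 dev eth0 label eth0:0 # virtual IP address; added on the master

}

track_script { # reference the vrrp scripts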

chk_nginx

chk_mantaince_down

}

}

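# Other commonly used parameters in the vrrp instance section:

nopreempt: do not preempt. Lets a node with a lower priority stay master even after a node with a higher priority recovers.

Every switchover briefly interrupts the service and carries some risk and instability, so switchovers should be kept to a minimum.

To configure non-preemptive mode, put the following in the configuration file of the higher-priority node:

state BACKUP

nopreempt

preempt_delay: preemption delay. After a reboot a system sometimes needs a while before it can do useful work, and a master/backup switchover during that time is unnecessary.

notify_master/backup/fault: scripts run when the node enters the master/backup/fault state, respectively

use_vmac: whether to use the VRRP virtual MAC address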

|
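The `fall` and `rise` settings in the vrrp_script sections above act as a small debounce counter. The following Python sketch illustrates that idea under the assumption that a state change needs an unbroken run of contradicting results; `ScriptTracker` is a hypothetical name and this is not keepalived's actual code.

```python
class ScriptTracker:
    """Track a check script's health using fall/rise thresholds."""

    def __init__(self, fall=3, rise=1, healthy=True):
        self.fall = fall        # consecutive failures needed to go unhealthy
        self.rise = rise        # consecutive successes needed to go healthy
        self.healthy = healthy
        self._streak = 0        # consecutive results contradicting the current state

    def record(self, exit_code):
        """Feed one script exit code and return the resulting health state."""
        ok = exit_code == 0
        if ok == self.healthy:
            self._streak = 0    # result agrees with the current state: reset
        else:
            self._streak += 1
            needed = self.rise if ok else self.fall
            if self._streak >= needed:
                self.healthy = ok
                self._streak = 0
        return self.healthy


tracker = ScriptTracker(fall=3, rise=1)
print([tracker.record(code) for code in (1, 1, 1, 0)])
# [True, True, False, True]
```

With `interval 1` and `fall 3`, a stopped nginx is therefore reported as failed only after about three seconds, while `rise 1` lets a single successful check restore it.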

| [iyunv@node2 ~]# vim /etc/keepalived/keepalived.conf # adjust the keepalived configuration file on node2

...

router_id nginx-node2

...

state BACKUP

priority 99 # the backup node gets a lower priority

...

[iyunv@node2 ~]# service keepalived start # start the backup node first

Starting keepalived: [ OK ]

[iyunv@node2 ~]# tail -f /var/log/messages

...

May 27 21:34:15 node2 Keepalived[17797]: Starting Keepalived v1.2.13 (03/19,2015)

May 27 21:34:15 node2 Keepalived[17798]: Starting Healthcheck child process, pid=17799

May 27 21:34:15 node2 Keepalived[17798]: Starting VRRP child process, pid=17801

...

May 27 21:34:15 node2 Keepalived_vrrp[17801]: Opening file '/etc/keepalived/keepalived.conf'.

...

May 27 21:34:15 node2 Keepalived_vrrp[17801]: Configuration is using : 67460 Bytes

...

May 27 21:34:15 node2 Keepalived_healthcheckers[17799]: Opening file '/etc/keepalived/keepalived.conf'.

May 27 21:34:15 node2 Keepalived_healthcheckers[17799]: Configuration is using : 7507 Bytes

May 27 21:34:15 node2 Keepalived_vrrp[17801]: VRRP_Instance(VI_1) Entering BACKUP STATE

May 27 21:34:15 node2 Keepalived_vrrp[17801]: VRRP sockpool: [ifindex(2), proto(112), unicast(0), fd(10,11)]

May 27 21:34:15 node2 Keepalived_vrrp[17801]: Remote SMTP server [127.0.0.1]:25 connected.

May 27 21:34:15 node2 Keepalived_healthcheckers[17799]: Using LinkWatch kernel netlink reflector...

May 27 21:34:15 node2 Keepalived_vrrp[17801]: VRRP_Script(chk_mantaince_down) succeeded

May 27 21:34:15 node2 Keepalived_vrrp[17801]: VRRP_Script(chk_nginx) succeeded

May 27 21:34:16 node2 Keepalived_vrrp[17801]: SMTP alert successfully sent.

May 27 21:34:19 node2 Keepalived_vrrp[17801]: VRRP_Instance(VI_1) Transition to MASTER STATE

May 27 21:34:20 node2 Keepalived_vrrp[17801]: VRRP_Instance(VI_1) Entering MASTER STATE

May 27 21:34:20 node2 Keepalived_vrrp[17801]: VRRP_Instance(VI_1) setting protocol VIPs.

May 27 21:34:20 node2 Keepalived_vrrp[17801]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.30.30

May 27 21:34:20 node2 Keepalived_healthcheckers[17799]: Netlink reflector reports IP 192.168.30.30 added

May 27 21:34:20 node2 Keepalived_vrrp[17801]: Remote SMTP server [127.0.0.1]:25 connected.

May 27 21:34:20 node2 Keepalived_vrrp[17801]: SMTP alert successfully sent.

May 27 21:34:21 node2 Keepalived_vrrp[17801]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.30.30

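# keepalived starts two child processes, Healthcheck and VRRP.

# After keepalived starts on the backup node, its configured role is backup, so it first enters the BACKUP state and then runs the vrrp_script checks on its resources;

if nginx is healthy, the log shows succeeded.

# Because the keepalived master node has not been started yet, the backup node finds that it has the highest priority, switches to the MASTER state, and then configures the VIP.

[iyunv@node2 keepalived]# ip addr show # the VIP is now configured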

...

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:bd:68:23 brd ff:ff:ff:ff:ff:ff

inet 192.168.30.20/24 brd 192.168.30.255 scope global eth0

inet 192.168.30.30/24 scope global secondary eth0:0

inet6 fe80::20c:29ff:febd:6823/64 scope link

valid_lft forever preferred_lft forever

[iyunv@node2 keepalived]# mail

Heirloom Mail version 12.4 7/29/08. Type ? for help.

"/var/spool/mail/root": 2 messages 2 new

>N 1 kanotify@magedu.com Fri May 27 21:34 14/505 "[nginx-node2] VRRP Instance VI_1 - Entering BACKUP state"

N 2 kanotify@magedu.com Fri May 27 21:34 14/500 "[nginx-node2] VRRP Instance VI_1 - Entering MASTER state"

|

| [iyunv@node1 ~]# service keepalived start

Starting keepalived: [ OK ]

[iyunv@node1 ~]# tail -f /var/log/messages

...

May 27 21:55:06 node1 Keepalived_vrrp[17364]: VRRP_Script(chk_mantaince_down) succeeded

May 27 21:55:06 node1 Keepalived_vrrp[17364]: VRRP_Script(chk_nginx) succeeded

May 27 21:55:07 node1 Keepalived_vrrp[17364]: VRRP_Instance(VI_1) Transition to MASTER STATE

May 27 21:55:07 node1 Keepalived_vrrp[17364]: VRRP_Instance(VI_1) Received lower prio advert, forcing new election

May 27 21:55:08 node1 Keepalived_vrrp[17364]: VRRP_Instance(VI_1) Entering MASTER STATE

May 27 21:55:08 node1 Keepalived_vrrp[17364]: VRRP_Instance(VI_1) setting protocol VIPs.

May 27 21:55:08 node1 Keepalived_vrrp[17364]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.30.30

May 27 21:55:08 node1 Keepalived_healthcheckers[17363]: Netlink reflector reports IP 192.168.30.30 added

May 27 21:55:08 node1 Keepalived_vrrp[17364]: Remote SMTP server [127.0.0.1]:25 connected.

May 27 21:55:08 node1 Keepalived_vrrp[17364]: SMTP alert successfully sent.

May 27 21:55:09 node1 Keepalived_vrrp[17364]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.30.30

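# The log shows that after keepalived starts on the primary node, whose configured role is master, it first runs the vrrp_script checks and only moves towards the MASTER state once they succeed.

# When it receives an advertisement with a lower priority, it forces a new election and then settles into the MASTER state.

[iyunv@node1 ~]# ip addr show # node1 has taken over the VIP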

...

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:40:35:9d brd ff:ff:ff:ff:ff:ff

inet 192.168.30.10/24 brd 192.168.30.255 scope global eth0

inet 192.168.30.30/24 scope global secondary eth0:0

inet6 fe80::20c:29ff:fe40:359d/64 scope link

valid_lft forever preferred_lft forever

[iyunv@node1 keepalived]# mail

Heirloom Mail version 12.4 7/29/08. Type ? for help.

"/var/spool/mail/root": 1 message 1 new

>N 1 kanotify@magedu.com Fri May 27 21:55 14/500 "[nginx-node1] VRRP Instance VI_1 - Entering MASTER state"

|

| [iyunv@node2 ~]# tail -f /var/log/messages # the VIP configured on node2 has been removed

...

May 27 21:55:07 node2 Keepalived_vrrp[22205]: VRRP_Instance(VI_1) Received higher prio advert

May 27 21:55:07 node2 Keepalived_vrrp[22205]: VRRP_Instance(VI_1) Entering BACKUP STATE

May 27 21:55:07 node2 Keepalived_vrrp[22205]: VRRP_Instance(VI_1) removing protocol VIPs.

May 27 21:55:07 node2 Keepalived_healthcheckers[22204]: Netlink reflector reports IP 192.168.30.30 removed

May 27 21:55:07 node2 Keepalived_vrrp[22205]: Remote SMTP server [127.0.0.1]:25 connected.

May 27 21:55:07 node2 Keepalived_vrrp[22205]: SMTP alert successfully sent.

|

4. Testing

| [iyunv@node5 ~]# curl 192.168.30.30/test.html

hello,keepalived

|

Simulating a failover:

| [iyunv@node1 keepalived]# service nginx stop

Stopping nginx: [ OK ]

[iyunv@node1 ~]# tail -f /var/log/messages

...

May 27 22:06:17 node1 Keepalived_vrrp[17364]: VRRP_Script(chk_nginx) failed

May 27 22:06:19 node1 Keepalived_vrrp[17364]: VRRP_Instance(VI_1) Received higher prio advert

May 27 22:06:19 node1 Keepalived_vrrp[17364]: VRRP_Instance(VI_1) Entering BACKUP STATE

May 27 22:06:19 node1 Keepalived_vrrp[17364]: VRRP_Instance(VI_1) removing protocol VIPs.

May 27 22:06:19 node1 Keepalived_healthcheckers[17363]: Netlink reflector reports IP 192.168.30.30 removed

May 27 22:06:19 node1 Keepalived_vrrp[17364]: Remote SMTP server [127.0.0.1]:25 connected.

May 27 22:06:19 node1 Keepalived_vrrp[17364]: SMTP alert successfully sent.

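# Because nginx was stopped, vrrp_script (chk_nginx) fails shortly afterwards and the priority drops by 2, below that of the peer node, so this node switches to the BACKUP state.

[iyunv@node1 keepalived]# ip addr show # the VIP has been removed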

...

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:40:35:9d brd ff:ff:ff:ff:ff:ff

inet 192.168.30.10/24 brd 192.168.30.255 scope global eth0

inet6 fe80::20c:29ff:fe40:359d/64 scope link

valid_lft forever preferred_lft forever

|
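The priority arithmetic behind this failover can be written out explicitly. The sketch below assumes the usual reading of `weight`: a negative weight is added to the priority while the script is failing, and a positive weight is added while it is succeeding. Clamping to the valid priority range is left out, and `effective_priority` is a hypothetical helper rather than a keepalived function.

```python
def effective_priority(base, scripts):
    """Compute the priority a node advertises.

    scripts is an iterable of (weight, healthy) pairs, one per tracked script.
    """
    priority = base
    for weight, healthy in scripts:
        if weight < 0 and not healthy:
            priority += weight   # penalty while the check is failing
        elif weight > 0 and healthy:
            priority += weight   # bonus while the check is passing
    return priority


# node1: chk_nginx failed, chk_mantaince_down still passing
node1 = effective_priority(100, [(-2, False), (-2, True)])
# node2: both checks passing
node2 = effective_priority(99, [(-2, True), (-2, True)])
print(node1, node2)  # 98 99
```

A penalty of 2 is enough here only because the two base priorities differ by 1; the weight has to exceed the gap between the nodes for a failed check to trigger a switchover.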

| [iyunv@node2 keepalived]# ip addr show # the VIP has moved to node2

...

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:bd:68:23 brd ff:ff:ff:ff:ff:ff

inet 192.168.30.20/24 brd 192.168.30.255 scope global eth0

inet 192.168.30.30/24 scope global secondary eth0:0

inet6 fe80::20c:29ff:febd:6823/64 scope link

valid_lft forever preferred_lft forever

|

| [iyunv@node5 ~]# curl 192.168.30.30/test.html

hello,keepalived

|

5. Tuning the configuration

| [iyunv@node1 keepalived]# vim keepalived.conf # change the configuration file as follows

...

vrrp_script chk_nginx { # with no weight set, a failed check puts keepalived into the fault state

script "killall -0 nginx"

interval 1

fall 3

rise 1

}

...

vrrp_instance VI_1 {

...

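preempt_delay 300 # preemption delay

...

notify_fault "/etc/rc.d/init.d/nginx restart"

# when the state becomes fault, try to restart nginx; if the restart fails, the peer node takes over

}

# make the same changes to the configuration file on node2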

[iyunv@node1 keepalived]# service nginx status;ssh root@node2 'service nginx status'

nginx (pid 27762) is running...

nginx (pid 10962) is running...

[iyunv@node1 keepalived]# service keepalived start;ssh root@node2 'service nginx start'

...

Starting nginx: [ OK ]

[iyunv@node1 keepalived]# ifconfig

...

eth0:0 Link encap:Ethernet HWaddr 00:0C:29:40:35:9D

inet addr:192.168.30.30 Bcast:0.0.0.0 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

|
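The chk_nginx script relies on signal 0, which delivers nothing and only reports whether the target process exists. The same probe can be sketched in Python with `os.kill`. Note the difference: `killall -0 nginx` matches processes by name, while this hypothetical `process_alive` helper checks a single PID, and it assumes a POSIX system.

```python
import os


def process_alive(pid):
    """Return True if a process with this PID exists (POSIX signal 0 probe)."""
    try:
        os.kill(pid, 0)          # signal 0: existence and permission check only
    except ProcessLookupError:
        return False             # no such process
    except PermissionError:
        return True              # process exists but belongs to another user
    return True


print(process_alive(os.getpid()))  # True
```

A probe like this only proves that the process exists, not that it is answering requests, which is why the restart in `notify_fault` is a best-effort measure.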

Simulating a transient failure:

| [iyunv@node1 keepalived]# service nginx stop

Stopping nginx: [ OK ]

[iyunv@node1 ~]# tail -f /var/log/messages

...

May 27 22:46:58 node1 Keepalived_vrrp[27784]: VRRP_Script(chk_nginx) failed

May 27 22:46:59 node1 Keepalived_vrrp[27784]: VRRP_Instance(VI_1) Entering FAULT STATE

May 27 22:46:59 node1 Keepalived_vrrp[27784]: VRRP_Instance(VI_1) removing protocol VIPs.

May 27 22:46:59 node1 Keepalived_healthcheckers[27783]: Netlink reflector reports IP 192.168.30.30 removed

May 27 22:46:59 node1 Keepalived_vrrp[27784]: VRRP_Instance(VI_1) Now in FAULT state

May 27 22:46:59 node1 Keepalived_vrrp[27784]: VRRP_Script(chk_nginx) succeeded

May 27 22:47:00 node1 Keepalived_vrrp[27784]: VRRP_Instance(VI_1) prio is higher than received advert

May 27 22:47:00 node1 Keepalived_vrrp[27784]: VRRP_Instance(VI_1) Transition to MASTER STATE

May 27 22:47:00 node1 Keepalived_vrrp[27784]: VRRP_Instance(VI_1) Received lower prio advert, forcing new election

May 27 22:47:01 node1 Keepalived_vrrp[27784]: VRRP_Instance(VI_1) Entering MASTER STATE

May 27 22:47:01 node1 Keepalived_vrrp[27784]: VRRP_Instance(VI_1) setting protocol VIPs.

...

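# After nginx is stopped, vrrp_script (chk_nginx) fails shortly afterwards and the instance enters the fault state, which triggers the restart given in notify_fault; the next check then succeeds.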

[iyunv@node1 keepalived]# service nginx status

nginx (pid 29373) is running...

|

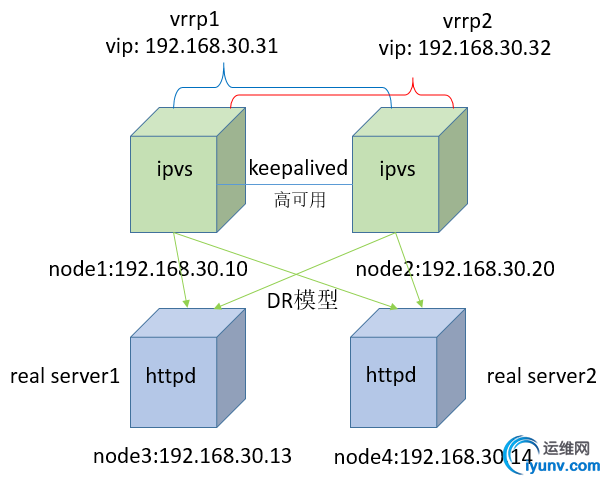

V. High Availability for ipvs with keepalived, Using a Dual-Master Model

1. Lab topology

2. Configure the two back-end real servers

| [iyunv@node3 ~]# echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore

[iyunv@node3 ~]# echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore

[iyunv@node3 ~]# echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce

[iyunv@node3 ~]# echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce

[iyunv@node3 ~]# ifconfig lo:0 192.168.30.31 netmask 255.255.255.255 broadcast 192.168.30.31 up

[iyunv@node3 ~]# ifconfig lo:1 192.168.30.32 netmask 255.255.255.255 broadcast 192.168.30.32 up

[iyunv@node3 ~]# route add -host 192.168.30.31 dev lo:0

[iyunv@node3 ~]# route add -host 192.168.30.32 dev lo:1

[iyunv@node3 ~]# cd /var/www/html

[iyunv@node3 html]# ls

[iyunv@node3 html]# vim index.html

hello

[iyunv@node3 html]# vim test.html

hello,this is node3

[iyunv@node3 html]# service httpd start

...

# run similar steps on node4

|

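3. Make ipvs highly available with a dual-master model, and use the two ipvs nodes as fallback servers for when the back-end RSs fail

A dual-master model needs two vrrp instances. The two nodes are master/backup in one instance and the reverse, backup/master, in the other.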
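To make the dual-master arrangement concrete, the sketch below works out which node holds each VIP, using the priorities and addresses configured in this section. It is a simplified model that ignores check scripts and preemption settings; `vip_owners` is a hypothetical helper.

```python
def vip_owners(instances, alive):
    """Map each VIP to the live node with the highest priority (or None).

    instances: {vip: {node_name: priority}}
    alive: set of node names that are currently up
    """
    owners = {}
    for vip, priorities in instances.items():
        candidates = {n: p for n, p in priorities.items() if n in alive}
        owners[vip] = max(candidates, key=candidates.get) if candidates else None
    return owners


instances = {
    "192.168.30.31": {"node1": 100, "node2": 99},   # VI_1: node1 is master
    "192.168.30.32": {"node1": 99, "node2": 100},   # VI_2: node2 is master
}

print(vip_owners(instances, {"node1", "node2"}))
# {'192.168.30.31': 'node1', '192.168.30.32': 'node2'}
print(vip_owners(instances, {"node2"}))
# {'192.168.30.31': 'node2', '192.168.30.32': 'node2'}
```

In normal operation each node serves one VIP, so both machines carry traffic; when one node fails, the survivor holds both VIPs.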

| [iyunv@node1 ~]# yum -y install httpd;ssh root@node2 'yum -y install httpd'

...

[iyunv@node1 ~]# vim /var/www/html/test.html

fallback1

[iyunv@node1 ~]# service httpd start

...

[iyunv@node1 ~]# cd /etc/keepalived/

[iyunv@node1 keepalived]# vim keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

root@localhost

magedu@126.com

}

notification_email_from kanotify@magedu.com

smtp_connect_timeout 10

smtp_server 127.0.0.1

router_id LVS_DEVEL

}

vrrp_script chk_schedown1 {

script "[[ -f /etc/keepalived/down1 ]] && exit 1 || exit 0"

interval 2

weight -2

}

vrrp_script chk_schedown2 {

script "[[ -f /etc/keepalived/down2 ]] && exit 1 || exit 0"

interval 2

weight -2

}

vrrp_instance VI_1 { # first vrrp instance

interface eth0

state MASTER # node1 is the master in the first instance

priority 100

virtual_router_id 51

garp_master_delay 1

smtp_alert # enable email notification

authentication {

auth_type PASS

auth_pass magedu

}

track_interface {

eth0

}

virtual_ipaddress {

192.168.30.31/24 dev eth0 label eth0:0 #vip1

}

track_script {

chk_schedown1

}

}

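virtual_server 192.168.30.31 80 { # define a cluster service

delay_loop 6 # interval between health checks

lb_algo rr # lvs scheduling algorithm

lb_kind DR # lvs forwarding model

persistence_timeout 30 # persistent connections

protocol TCP

sorry_server 127.0.0.1 80 # fallback server used when all back-end real servers are unavailable

real_server 192.168.30.13 80 { # define a back-end real server

weight 1

HTTP_GET { # how the health of the back-end RS is checked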

url {

path /

status_code 200

}

connect_timeout 3

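nb_get_retry 3 # number of retries

delay_before_retry 3 # delay between retries

}

}

# Several RS health checks are available: HTTP_GET|SSL_GET|TCP_CHECK|SMTP_CHECK|MISC_CHECK; see man keepalived.conf for details

# To check the real servers with TCP_CHECK instead, replace the HTTP_GET part above with the following: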

# TCP_CHECK {

# connect_port 80

# connect_timeout 3

# }

real_server 192.168.30.14 80 {

weight 1

HTTP_GET {

url {

path /

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}

vrrp_instance VI_2 { # second vrrp instance

interface eth0

state BACKUP

priority 99

virtual_router_id 52 # each vrrp instance must have a unique virtual router ID

garp_master_delay 1

smtp_alert

authentication {

auth_type PASS

auth_pass magedu

}

track_interface {

eth0

}

virtual_ipaddress {

192.168.30.32/24 dev eth0 label eth0:1 #vip2

}

track_script {

chk_schedown2

}

}

virtual_server 192.168.30.32 80 {

delay_loop 6

lb_algo rr

lb_kind DR

persistence_timeout 30

protocol TCP

sorry_server 127.0.0.1 80

real_server 192.168.30.13 80 {

weight 1

HTTP_GET {

url {

path /

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.168.30.14 80 {

weight 1

HTTP_GET {

url {

path /

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}

[iyunv@node1 keepalived]# scp keepalived.conf root@node2:/etc/keepalived/

keepalived.conf 100% 2694 2.6KB/s 00:00

|

| [iyunv@node2 ~]# vim /var/www/html/test.html

fallback2

[iyunv@node2 ~]# service httpd start

...

[iyunv@node2 ~]# cd /etc/keepalived/

[iyunv@node2 keepalived]# vim keepalived.conf # adjust the keepalived configuration file on node2

...

vrrp_instance VI_1 {

...

state BACKUP

priority 99

...

}

...

vrrp_instance VI_2 {

...

state MASTER

priority 100

...

}

[iyunv@node2 keepalived]# service keepalived start;ssh root@node1 'service keepalived start'

Starting keepalived: [ OK ]

Starting keepalived: [ OK ]

[iyunv@node2 keepalived]# yum -y install ipvsadm;ssh root@node1 'yum -y install ipvsadm'

...

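# ipvsadm is installed only to make the ipvs rules easy to inspect; it is optional

[iyunv@node2 keepalived]# ip addr show # node2 has the higher priority in the second vrrp instance, so vip2 is configured on node2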

...

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:bd:68:23 brd ff:ff:ff:ff:ff:ff

inet 192.168.30.20/24 brd 192.168.30.255 scope global eth0

inet 192.168.30.32/24 scope global secondary eth0:1

inet6 fe80::20c:29ff:febd:6823/64 scope link

valid_lft forever preferred_lft forever

[iyunv@node2 keepalived]# ipvsadm -L -n # the ipvs rules have been generated

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.30.31:80 rr persistent 30

-> 192.168.30.13:80 Route 1 0 0

-> 192.168.30.14:80 Route 1 0 0

TCP 192.168.30.32:80 rr persistent 30

-> 192.168.30.13:80 Route 1 0 0

-> 192.168.30.14:80 Route 1 0 0

|

| [iyunv@node1 keepalived]# ip addr show # vip1 is configured on node1

...

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:40:35:9d brd ff:ff:ff:ff:ff:ff

inet 192.168.30.10/24 brd 192.168.30.255 scope global eth0

inet 192.168.30.31/24 scope global secondary eth0:0

inet6 fe80::20c:29ff:fe40:359d/64 scope link

valid_lft forever preferred_lft forever

[iyunv@node1 keepalived]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.30.31:80 rr persistent 30

-> 192.168.30.13:80 Route 1 0 0

-> 192.168.30.14:80 Route 1 0 0

TCP 192.168.30.32:80 rr persistent 30

-> 192.168.30.13:80 Route 1 0 0

-> 192.168.30.14:80 Route 1 0 0

|

4. Testing

| [iyunv@node5 ~]# curl 192.168.30.31/test.html # persistence is enabled, so requests may keep going to the same RS for a while

hello,this is node4

[iyunv@node5 ~]# curl 192.168.30.31/test.html

hello,this is node4

[iyunv@node5 ~]# curl 192.168.30.31/test.html

hello,this is node3

[iyunv@node5 ~]# curl 192.168.30.32/test.html

hello,this is node3

[iyunv@node5 ~]# curl 192.168.30.32/test.html

hello,this is node3

|
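The interaction between `lb_algo rr` and `persistence_timeout 30` can be illustrated with a small model. This is a deliberately simplified sketch, not the kernel's ipvs implementation: it assumes one persistence entry per client, refreshed on every request, and takes the clock as an argument so that the behaviour is easy to follow. `PersistentRoundRobin` is a hypothetical name.

```python
import itertools


class PersistentRoundRobin:
    """Round-robin scheduling with per-client persistence (simplified)."""

    def __init__(self, servers, timeout=30):
        self._cycle = itertools.cycle(servers)
        self.timeout = timeout
        self._table = {}   # client -> (server, expiry time in seconds)

    def pick(self, client, now):
        """Return the server for this client at time `now`."""
        entry = self._table.get(client)
        if entry is not None and now < entry[1]:
            server = entry[0]              # still persistent: same server
        else:
            server = next(self._cycle)     # new or expired: next in rotation
        self._table[client] = (server, now + self.timeout)
        return server


lb = PersistentRoundRobin(["192.168.30.13", "192.168.30.14"], timeout=30)
print(lb.pick("client-a", now=0))    # 192.168.30.13
print(lb.pick("client-a", now=10))   # 192.168.30.13
print(lb.pick("client-b", now=11))   # 192.168.30.14
```

Repeated requests from a single client inside the timeout window land on the same real server, so round-robin only becomes visible across different clients or after the entry expires.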

| [iyunv@node4 html]# service httpd stop # stop the service on one RS

Stopping httpd: [ OK ]

|

| [iyunv@node1 keepalived]# mail # a notification email has arrived

Heirloom Mail version 12.4 7/29/08. Type ? for help.

"/var/spool/mail/root": 3 messages 1 new 3 unread

U 1 kanotify@magedu.com Fri May 27 11:53 15/508 "[LVS_DEVEL] VRRP Instance VI_2 - Entering BACKUP state"

U 2 kanotify@magedu.com Fri May 27 11:53 15/503 "[LVS_DEVEL] VRRP Instance VI_1 - Entering MASTER state"

>N 3 kanotify@magedu.com Fri May 27 12:03 14/492 "[LVS_DEVEL] Realserver [192.168.30.14]:80 - DOWN"

[iyunv@node1 keepalived]# ipvsadm -L -n # keepalived detected the failed back-end RS and removed it

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.30.31:80 rr persistent 30

-> 192.168.30.13:80 Route 1 0 0

TCP 192.168.30.32:80 rr persistent 30

-> 192.168.30.13:80 Route 1 0 1

|

| [iyunv@node5 ~]# curl 192.168.30.31/test.html

hello,this is node3

[iyunv@node5 ~]# curl 192.168.30.31/test.html

hello,this is node3

[iyunv@node5 ~]# curl 192.168.30.32/test.html

hello,this is node3

|

| [iyunv@node3 html]# service httpd stop # stop the service on the other RS as well

Stopping httpd: [ OK ]

|

| [iyunv@node1 ~]# ipvsadm -L -n # the sorry server has been added to the ipvs rules

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.30.31:80 rr persistent 30

-> 127.0.0.1:80 Local 1 0 0

TCP 192.168.30.32:80 rr persistent 30

-> 127.0.0.1:80 Local 1 0 0

|
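The pool maintenance seen in the ipvsadm listings above follows a simple rule, sketched here in Python. It models only the outcome, assuming health-check results are already known; `active_destinations` is a hypothetical helper, and the addresses are the ones used in this article.

```python
def active_destinations(real_servers, health, sorry_server):
    """Return the destinations that should appear in the ipvs rules.

    real_servers: configured real server addresses, in order
    health: {address: True/False} from the latest health checks
    sorry_server: fallback used only when no real server is healthy
    """
    healthy = [rs for rs in real_servers if health.get(rs, False)]
    return healthy if healthy else [sorry_server]


servers = ["192.168.30.13:80", "192.168.30.14:80"]
sorry = "127.0.0.1:80"

# node4 (192.168.30.14) stopped: only node3 remains
print(active_destinations(servers, {servers[0]: True, servers[1]: False}, sorry))
# ['192.168.30.13:80']

# both real servers stopped: fall back to the sorry server
print(active_destinations(servers, {servers[0]: False, servers[1]: False}, sorry))
# ['127.0.0.1:80']
```

Because the sorry server is 127.0.0.1 on whichever director holds the VIP, requests to the two VIPs are answered by different nodes, which is why the two fallback pages differ.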

| [iyunv@node5 ~]# curl 192.168.30.31/test.html

fallback1

[iyunv@node5 ~]# curl 192.168.30.32/test.html

fallback2

|

|

|