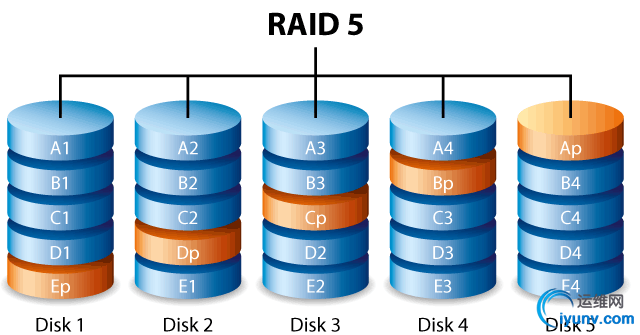

Building a Software RAID 5 Array & Recovering a RAID Device

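Requirements

- Add 4 SCSI disks to the Linux server

- Use the mdadm package to build a RAID 5 array that improves disk storage performance and reliability

- Install mdadm

- Prepare the partitions for the RAID array -- add 4 SCSI disks to the Linux server and use fdisk to create one 2 GB partition on each, in order: /dev/sde1, /dev/sdg1, /dev/sdh1, /dev/sdf1 -- change their type ID to "fd" ("Linux raid autodetect"), which marks them for use in a RAID array

- Create the RAID device

- Create a hot spare disk

- Check mdstat

- Mark a member as faulty

- Check mdstat again

- Create a filesystem on the RAID device

- Mount and use the filesystem

- Device recovery

- Read the mdadm.conf example and create mdadm.conf based on it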

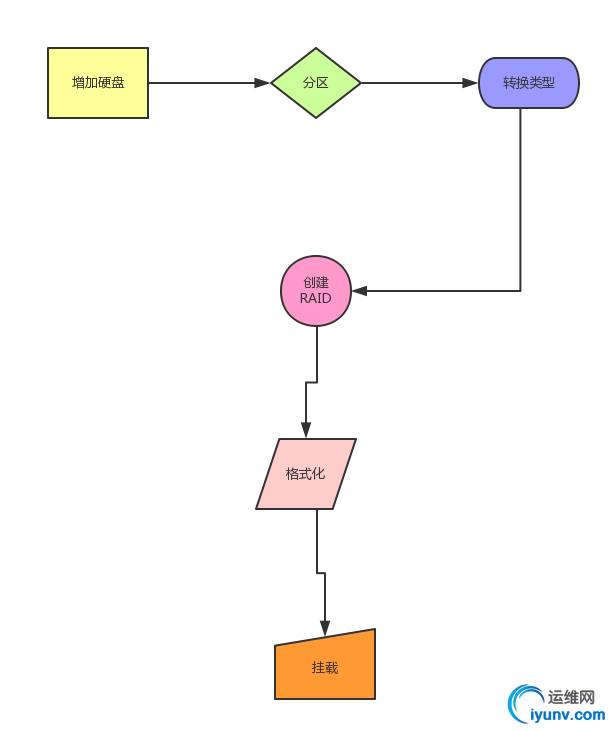

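I. Preparing the partitions for the RAID array

(i) Adding disks and partitioning (add the disks while the virtual machine is powered off)

A. Add the disks (on a virtual machine, add virtual disks; on a physical machine, install real disks)

B. Partition "/dev/sde1, /dev/sdg1, /dev/sdh1, /dev/sdf1, /dev/sd " and verify the partition information

C. Change the partition type to Linux raid autodetect (fd) and check the result

Existing disk information:

It is enough here to list the 4 disks that were added, for example with fdisk -l /dev/sd[eghf].

1. Create a 2 GB primary partition, sde2, and change its type to "fd" (Linux raid autodetect):

This breaks down into four steps: create, change the type, verify, and save.

(1) Create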

[iyunv@test2 jason]# fdisk /dev/sde

WARNING: DOS-compatible mode is deprecated. It's strongly recommended to

switch off the mode (command 'c') and change display units to

sectors (command 'u').

Command (m for help): p

Disk /dev/sde: 21.5 GB, 21474836480 bytes

255 heads, 63 sectors/track, 2610 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0xe4b1d138

Device Boot Start End Blocks Id System

/dev/sde1 1 262 2104483+ fd Linux raid autodetect

Command (m for help): n

Command action

e extended

p primary partition (1-4)

p

Partition number (1-4): 2

First cylinder (263-2610, default 263):

Using default value 263

Last cylinder, +cylinders or +size{K,M,G} (263-2610, default 2610): +2G

(2) Change the type

Command (m for help): t

Partition number (1-4): fd

Partition number (1-4): 2

Hex code (type L to list codes): fd

Changed system type of partition 2 to fd (Linux raid autodetect)

(3) Verify

Command (m for help): p

Disk /dev/sde: 21.5 GB, 21474836480 bytes

255 heads, 63 sectors/track, 2610 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0xe4b1d138

Device Boot Start End Blocks Id System

/dev/sde1 1 262 2104483+ fd Linux raid autodetect

/dev/sde2 263 524 2104515 fd Linux raid autodetect

(4) Save and exit

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

WARNING: Re-reading the partition table failed with error 16: Device or resource busy.

The kernel still uses the old table. The new table will be used at

the next reboot or after you run partprobe(8) or kpartx(8)

Syncing disks.

2. Create a 2 GB primary partition, sdg2, and change its type to "fd" (Linux raid autodetect):

(1) Create

[iyunv@test2 jason]# fdisk /dev/sdg

WARNING: DOS-compatible mode is deprecated. It's strongly recommended to

switch off the mode (command 'c') and change display units to

sectors (command 'u').

Command (m for help): n

Command action

e extended

p primary partition (1-4)

p

Partition number (1-4): 2

First cylinder (263-2610, default 263):

Using default value 263

Last cylinder, +cylinders or +size{K,M,G} (263-2610, default 2610): +2G

(2) Change the type

Command (m for help): t

Partition number (1-4): 2

Hex code (type L to list codes): fd

Changed system type of partition 2 to fd (Linux raid autodetect)

(3) Verify

Command (m for help): p

Disk /dev/sdg: 21.5 GB, 21474836480 bytes

255 heads, 63 sectors/track, 2610 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x37970afa

Device Boot Start End Blocks Id System

/dev/sdg1 1 262 2104483+ fd Linux raid autodetect

/dev/sdg2 263 524 2104515 fd Linux raid autodetect

(4) Save and exit

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

WARNING: Re-reading the partition table failed with error 16: Device or resource busy.

The kernel still uses the old table. The new table will be used at

the next reboot or after you run partprobe(8) or kpartx(8)

Syncing disks.

[iyunv@test2 jason]#

3. Create a 2 GB primary partition, sdh2, and change its type to "fd" (Linux raid autodetect):

(1) Create

[iyunv@test2 jason]# fdisk /dev/sdh

WARNING: DOS-compatible mode is deprecated. It's strongly recommended to

switch off the mode (command 'c') and change display units to

sectors (command 'u').

Command (m for help): n

Command action

e extended

p primary partition (1-4)

p

Partition number (1-4): 2

First cylinder (263-2610, default 263):

Using default value 263

Last cylinder, +cylinders or +size{K,M,G} (263-2610, default 2610): +2G

(2) Change the type

Command (m for help): t

Partition number (1-4): 2

Hex code (type L to list codes): fd

Changed system type of partition 2 to fd (Linux raid autodetect)

(3) Verify

Command (m for help): p

Disk /dev/sdh: 21.5 GB, 21474836480 bytes

255 heads, 63 sectors/track, 2610 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0xdeb9d0d0

Device Boot Start End Blocks Id System

/dev/sdh1 1 262 2104483+ fd Linux raid autodetect

/dev/sdh2 263 524 2104515 fd Linux raid autodetect

(4) Save and exit

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

WARNING: Re-reading the partition table failed with error 16: Device or resource busy.

The kernel still uses the old table. The new table will be used at

the next reboot or after you run partprobe(8) or kpartx(8)

Syncing disks.

[iyunv@test2 jason]#

4. Create a 2 GB primary partition, sdf2, and change its type to "fd" (Linux raid autodetect):

(1) Create

[iyunv@test2 jason]# fdisk /dev/sdf

WARNING: DOS-compatible mode is deprecated. It's strongly recommended to

switch off the mode (command 'c') and change display units to

sectors (command 'u').

Command (m for help): n

Command action

e extended

p primary partition (1-4)

p

Partition number (1-4): 2

First cylinder (263-2610, default 263):

Using default value 263

Last cylinder, +cylinders or +size{K,M,G} (263-2610, default 2610): +2G

(2) Change the type

Command (m for help): t

Partition number (1-4): f

Partition number (1-4): 2

Hex code (type L to list codes): fd

Changed system type of partition 2 to fd (Linux raid autodetect)

(3) Verify

Command (m for help): p

Disk /dev/sdf: 21.5 GB, 21474836480 bytes

255 heads, 63 sectors/track, 2610 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x65c9c4f1

Device Boot Start End Blocks Id System

/dev/sdf1 1 262 2104483+ fd Linux raid autodetect

/dev/sdf2 263 524 2104515 fd Linux raid autodetect

(4) Save and exit

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

WARNING: Re-reading the partition table failed with error 16: Device or resource busy.

The kernel still uses the old table. The new table will be used at

the next reboot or after you run partprobe(8) or kpartx(8)

Syncing disks.

[iyunv@test2 jason]#

5. Create a 2 GB primary partition, sdc3 (used later as the hot spare), and change its type to "fd" (Linux raid autodetect):

(1) Create

[iyunv@test2 jason]# fdisk /dev/sdc

WARNING: DOS-compatible mode is deprecated. It's strongly recommended to

switch off the mode (command 'c') and change display units to

sectors (command 'u').

Command (m for help): n

Command action

e extended

p primary partition (1-4)

3

Invalid partition number for type `3'

Command action

e extended

p primary partition (1-4)

p

Partition number (1-4): 3

First cylinder (5225-10443, default 5225):

Using default value 5225

Last cylinder, +cylinders or +size{K,M,G} (5225-10443, default 10443): +2G

(2) Change the type

Command (m for help): t

Partition number (1-4): 3

Hex code (type L to list codes): fd

Changed system type of partition 3 to fd (Linux raid autodetect)

(3) Verify

Command (m for help): p

Disk /dev/sdc: 85.9 GB, 85899345920 bytes

255 heads, 63 sectors/track, 10443 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x8967e34e

Device Boot Start End Blocks Id System

/dev/sdc1 1 2612 20980858+ 83 Linux

/dev/sdc2 2613 5224 20980890 8e Linux LVM

/dev/sdc3 5225 5486 2104515 fd Linux raid autodetect

(4) Save and exit

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

WARNING: Re-reading the partition table failed with error 16: Device or resource busy.

The kernel still uses the old table. The new table will be used at

the next reboot or after you run partprobe(8) or kpartx(8)

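6. Make the kernel recognize the new partitions (fdisk could not re-read the partition tables because the disks were in use, as the "Device or resource busy" warnings above show):

You could also run partprobe on all four disks at once: partprobe /dev/sd[eghf]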

[iyunv@test2 jason]# partprobe /dev/sde

sde sde1

[iyunv@test2 jason]# partprobe /dev/sdg

sdg sdg1

[iyunv@test2 jason]# partprobe /dev/sdh

sdh sdh1

[iyunv@test2 jason]# partprobe /dev/sdf

sdf sdf1

[iyunv@test2 jason]# partprobe /dev/sdc

sdc sdc1 sdc2

[iyunv@test2 jason]# partx -a /dev/sde

sde sde1

[iyunv@test2 jason]# partx -a /dev/sdg

sdg sdg1

[iyunv@test2 jason]# partx -a /dev/sdh

sdh sdh1

[iyunv@test2 jason]# partx -a /dev/sdf

sdf sdf1

[iyunv@test2 jason]# partx -a /dev/sdf

The first probe failed.

[iyunv@test2 jason]# partx -a /dev/sdf // pressed Enter

BLKPG: Device or resource busy

error adding partition 1

[iyunv@test2 jason]#

[iyunv@test2 jason]#

[iyunv@test2 jason]# partx -a /dev/sdf

sdf sdf1 sdf2

[iyunv@test2 jason]# partx -a /dev/sdf

sdf sdf1 sdf2

[iyunv@test2 jason]# partx -a /dev/sde // pressed Enter

BLKPG: Device or resource busy

error adding partition 1

[iyunv@test2 jason]# partx -a /dev/sde

sde sde1 sde2

[iyunv@test2 jason]# partx -a /dev/sdg // pressed Enter

BLKPG: Device or resource busy

error adding partition 1

[iyunv@test2 jason]# partx -a /dev/sdg

sdg sdg1 sdg2

[iyunv@test2 jason]# partx -a /dev/sdh

sdh sdh1

[iyunv@test2 jason]# partx -a /dev/sdh // pressed Enter

BLKPG: Device or resource busy

error adding partition 1

[iyunv@test2 jason]# partx -a /dev/sdh // pressed Enter

sdh sdh1 sdh2

[iyunv@test2 jason]# partx -a /dev/sdc

BLKPG: Device or resource busy

error adding partition 1

BLKPG: Device or resource busy

error adding partition 2

[iyunv@test2 jason]# partx -a /dev/sdc

sdc sdc1 sdc2 sdc3

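Note on the output above: lines such as "sdf sdf1" are not command output but the shell's Tab-completion list of matching device names. In the first attempt each command was only followed by Tab and never actually ran, so nothing was recognized. After pressing Enter, partx -a /dev/sdX did run and added the new partition; "error adding partition 1" only means that partition 1 was already registered with the kernel. Pressing Tab again then lists the new partition (for example sdf sdf1 sdf2).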
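Because Tab-completion output is easy to mistake for real results, a more direct check is to look for the new partition names in /proc/partitions, which lists every partition the kernel currently knows about. The helper below is a minimal sketch: the function name kernel_has_partitions is hypothetical, and it assumes the usual four-column layout of /proc/partitions (major, minor, #blocks, name).

```bash
# Hypothetical helper: check that the kernel has registered the given partitions.
# Assumes the /proc/partitions layout: major minor #blocks name
# Usage: kernel_has_partitions FILE NAME...   (FILE is normally /proc/partitions)
kernel_has_partitions() {
  local file=$1
  shift
  local name missing=0
  for name in "$@"; do
    # Column 4 holds the partition name, e.g. sde2
    if ! awk -v n="$name" '$4 == n { found = 1 } END { exit !found }' "$file"; then
      echo "missing: $name"
      missing=1
    fi
  done
  return "$missing"
}
```

For this walkthrough the call would be kernel_has_partitions /proc/partitions sde2 sdg2 sdh2 sdf2 sdc3, which prints nothing and returns 0 once all five partitions are known to the kernel.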

7. Check the partition information again:

[iyunv@test2 jason]# fdisk -l | grep "/dev/sd[eghf]2"

/dev/sde2 263 524 2104515 fd Linux raid autodetect

/dev/sdg2 263 524 2104515 fd Linux raid autodetect

/dev/sdf2 263 524 2104515 fd Linux raid autodetect

/dev/sdh2 263 524 2104515 fd Linux raid autodetect

[iyunv@test2 jason]# fdisk -l | grep "/dev/sdc3"

/dev/sdc3 5225 5486 2104515 fd Linux raid autodetect

[iyunv@test2 jason]#

This could be streamlined into a single command that shows only the five member partitions, for example fdisk -l | grep -E "/dev/sd([eghf]2|c3)".
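Another way to narrow the output is to filter fdisk -l by partition type rather than by name. This is a minimal sketch, assuming the fdisk -l layout shown above, where each partition line ends with the type Id followed by the system name; the function name list_raid_partitions is hypothetical. Unlike the name-based grep, it also lists older fd partitions such as /dev/sde1, which belong to md0.

```bash
# Hypothetical helper: print the partitions whose type is fd (Linux raid autodetect)
# from `fdisk -l` output read on stdin.
list_raid_partitions() {
  # Partition lines start with /dev/. The Boot column may hold "*", which shifts
  # the field numbers, so match the trailing "fd  Linux raid autodetect" instead.
  awk '$1 ~ /^\/dev\// && /[ \t]fd[ \t]+Linux raid autodetect$/ { print $1 }'
}
```

Typical use: fdisk -l 2>/dev/null | list_raid_partitions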

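II. Creating the RAID device

A. Create

B. Verify

ls -l /dev/md0

cat /proc/mdstat

0. Check the state before creation (most likely nothing shows up yet; this is mainly for comparison)

1. Create the array

The member list can be shortened with a shell glob, such as /dev/sd[eghf]2.

Note: to add a hot spare, include "-x 1" and list the spare partition after /dev/sd[eghf]2 (as an extra exercise, try creating two hot spares).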

[iyunv@test2 jason]# mdadm -Cv /dev/md1 -a yes -n4 -l5 -x1 /dev/sd[eghf]2 /dev/sdc3

mdadm: layout defaults to left-symmetric

mdadm: layout defaults to left-symmetric

mdadm: chunk size defaults to 512K

mdadm: size set to 2102272K

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md1 started.

[iyunv@test2 jason]#

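Explanation:

(The matching option descriptions from man mdadm could be quoted and translated here.)

* -a yes (--auto): create the device file if needed. For example, if /dev/md0 does not exist yet, the device node is created together with the array

* md0: RAID devices are conventionally named md0, md1, and so on

* -n: the number of active member devices (4 here)

* -l: the RAID level; 5 means RAID 5

* The arguments that follow are the partitions that become array members

* -C: create a new array; -v: verbose output

* -x: the number of spare devices (1 here, /dev/sdc3, which mdstat later marks with (S))

Short form: mdadm -Cv /dev/md0 -a yes -n4 -l5 /dev/sd[eghf]1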
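The member count has to add up: -n active devices plus -x spares, which is 4 + 1 = 5 partitions in this walkthrough. The function below is a hypothetical sketch that only prints the mdadm command line (it does not run it) and refuses to do so when the number of listed partitions differs from -n plus -x, so the command can be reviewed before it touches any disk.

```bash
# Hypothetical helper: build (but do not run) an mdadm create command, checking
# that the number of partitions equals active devices (-n) plus spares (-x).
# Usage: build_mdadm_create MD_DEVICE LEVEL NUM_ACTIVE NUM_SPARES DEVICE...
build_mdadm_create() {
  local md=$1 level=$2 active=$3 spares=$4
  shift 4
  local expected=$(( active + spares ))
  if [ "$#" -ne "$expected" ]; then
    echo "error: expected $expected devices, got $#" >&2
    return 1
  fi
  # Same option order as the command used above: -Cv, -a yes, -n, -l, -x
  echo "mdadm -Cv $md -a yes -n$active -l$level -x$spares $*"
}
```

build_mdadm_create /dev/md1 5 4 1 /dev/sd[eghf]2 /dev/sdc3 prints the command used above, with the glob expanded in alphabetical order (/dev/sde2 /dev/sdf2 /dev/sdg2 /dev/sdh2).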

2. Verify after creation:

[iyunv@test2 jason]# ls -l /dev/md1

brw-rw---- 1 root disk 9, 1 Jun 20 13:07 /dev/md1

Another way to check:

[iyunv@test2 jason]# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md1 : active raid5 sdh2[5] sdc3[4](S) sdg2[2] sdf2[1] sde2[0]

6306816 blocks super 1.2 level 5, 512k chunk, algorithm 2 [4/4] [UUUU]

md0 : active raid5 sde1[5] sdf1[4] sdh1[2] sdg1[1]

6306816 blocks super 1.2 level 5, 512k chunk, algorithm 2 [4/4] [UUUU]

unused devices: <none>

[iyunv@test2 jason]#

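Explanation:

[4/4]: the first number is how many member devices the array is configured with; the second is how many are currently working.

[UUUU]: each U marks a member that is up; a failed or missing member is shown as "_" (underscore).

The number in brackets after each member, such as sde2[0], is that member's device number in the array metadata, and (S) marks a spare, as in sdc3[4](S). This number does not always match the member's position in [UUUU]: sdh2[5] is an active member even though its number is 5. The RaidDevice column of mdadm -D /dev/md1 shows which slot each member actually occupies.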
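The [n/m] counters described above can also be checked by a script, for example from cron. A minimal sketch, assuming the /proc/mdstat layout shown here, where the status line carrying [n/m] [UU..] follows the mdX line; the function name mdstat_health is hypothetical.

```bash
# Hypothetical helper: read /proc/mdstat text on stdin and print, per array,
# "<name> ok <n/m>" or "<name> degraded <n/m>".
mdstat_health() {
  awk '
    # Remember the array name from lines such as "md1 : active raid5 ..."
    $1 ~ /^md[0-9]+$/ && $2 == ":" { name = $1; next }
    # The next line carrying "[total/working]" belongs to that array
    name != "" && match($0, /\[[0-9]+\/[0-9]+\]/) {
      counts = substr($0, RSTART + 1, RLENGTH - 2)
      split(counts, c, "/")
      print name, (c[1] == c[2] ? "ok" : "degraded"), counts
      name = ""
    }
  '
}
```

mdstat_health < /proc/mdstat would print md1 ok 4/4 and md0 ok 4/4 for the state shown above.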

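III. Creating a filesystem on the RAID device

A. Check the state before formatting (this step was skipped in this run)

B. Format as ext4

C. Verify the result (this was not checked in this run)

0. Check /dev/md1 before formatting

1. Format: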

[iyunv@test2 jason]# mkfs -t ext4 /dev/md1

mke2fs 1.41.12 (17-May-2010)

Filesystem label=

OS type: Linux

Block size=4096 (log=2)

Fragment size=4096 (log=2)

Stride=128 blocks, Stripe width=384 blocks

394352 inodes, 1576704 blocks

78835 blocks (5.00%) reserved for the super user

First data block=0

Maximum filesystem blocks=1614807040

49 block groups

32768 blocks per group, 32768 fragments per group

8048 inodes per group

Superblock backups stored on blocks:

32768, 98304, 163840, 229376, 294912, 819200, 884736

Writing inode tables: done

Creating journal (32768 blocks): done

Writing superblocks and filesystem accounting information: done

This filesystem will be automatically checked every 38 mounts or

180 days, whichever comes first. Use tune2fs -c or -i to override.

[iyunv@test2 jason]#
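The Stride=128 and Stripe width=384 values in the mkfs output follow from the array geometry: the 512K chunk size divided by the 4096-byte block size gives the stride, and multiplying by the three data-bearing members of a four-member RAID 5 gives the stripe width. A sketch of that arithmetic (the function name is hypothetical):

```bash
# Hypothetical helper: ext4 stride and stripe width for a RAID 5 array.
#   stride       = chunk size / filesystem block size   (in blocks)
#   stripe width = stride * (members - 1)              (one member's worth is parity)
# Usage: ext4_raid5_geometry CHUNK_KIB BLOCK_BYTES NUM_MEMBERS
ext4_raid5_geometry() {
  local chunk_kib=$1 block_bytes=$2 members=$3
  local stride=$(( chunk_kib * 1024 / block_bytes ))
  echo "stride=$stride stripe_width=$(( stride * (members - 1) ))"
}
```

ext4_raid5_geometry 512 4096 4 prints stride=128 stripe_width=384, matching what mkfs detected.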

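IV. Mounting and using the filesystem

1. Check the mount information before mounting

2. Choose the mount point (if the directory already exists you can mount onto it directly; otherwise create it first)

3. Mounting methods

One-time manual mount

Automatic mount at boot

4. Verify the result

mount

df -hT

1. Create the /mdata2 directory under / as the mount point:

[iyunv@test2 jason]# mkdir /mdata2

2. Mount /dev/md1: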

[iyunv@test2 jason]# cd /

[iyunv@test2 /]# ls -l | grep mdata2

drwxr-xr-x 2 root root 4096 Jun 20 13:12 mdata2

[iyunv@test2 /]# mount /dev/md1 /mdata2

3. Ways to check the mount information:

a. mount

Tip: filter the output with mount | grep "md1":

[iyunv@test2 /]# mount | grep "/dev/md1"

/dev/md1 on /mdata2 type ext4 (rw)

b. Another way to check the mount information:

[iyunv@test2 /]# df -hT | grep "/dev/md1"

/dev/md1 ext4 6.0G 141M 5.5G 3% /mdata2

[iyunv@test2 /]#

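Because RAID 5 uses the equivalent of one member's capacity for parity (distributed across all members rather than stored on a single disk), four 2 GB members give about 6 GB of usable space.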
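The 6 GB figure can be checked against the size mdadm reported when the array was created (size set to 2102272K per member): RAID 5 keeps the capacity of all members but one for data. A sketch of the calculation (hypothetical function name):

```bash
# Hypothetical helper: usable RAID 5 capacity in KiB.
# One member's worth of space holds parity (spread over all members),
# so usable = (members - 1) * per-member size.
# Usage: raid5_usable_kib NUM_MEMBERS MEMBER_SIZE_KIB
raid5_usable_kib() {
  local members=$1 size_kib=$2
  echo $(( (members - 1) * size_kib ))
}
```

raid5_usable_kib 4 2102272 prints 6306816, the same block count /proc/mdstat reports for md1 (about 6.0 GiB). The hot spare adds no capacity, since it only joins the array when a member fails.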

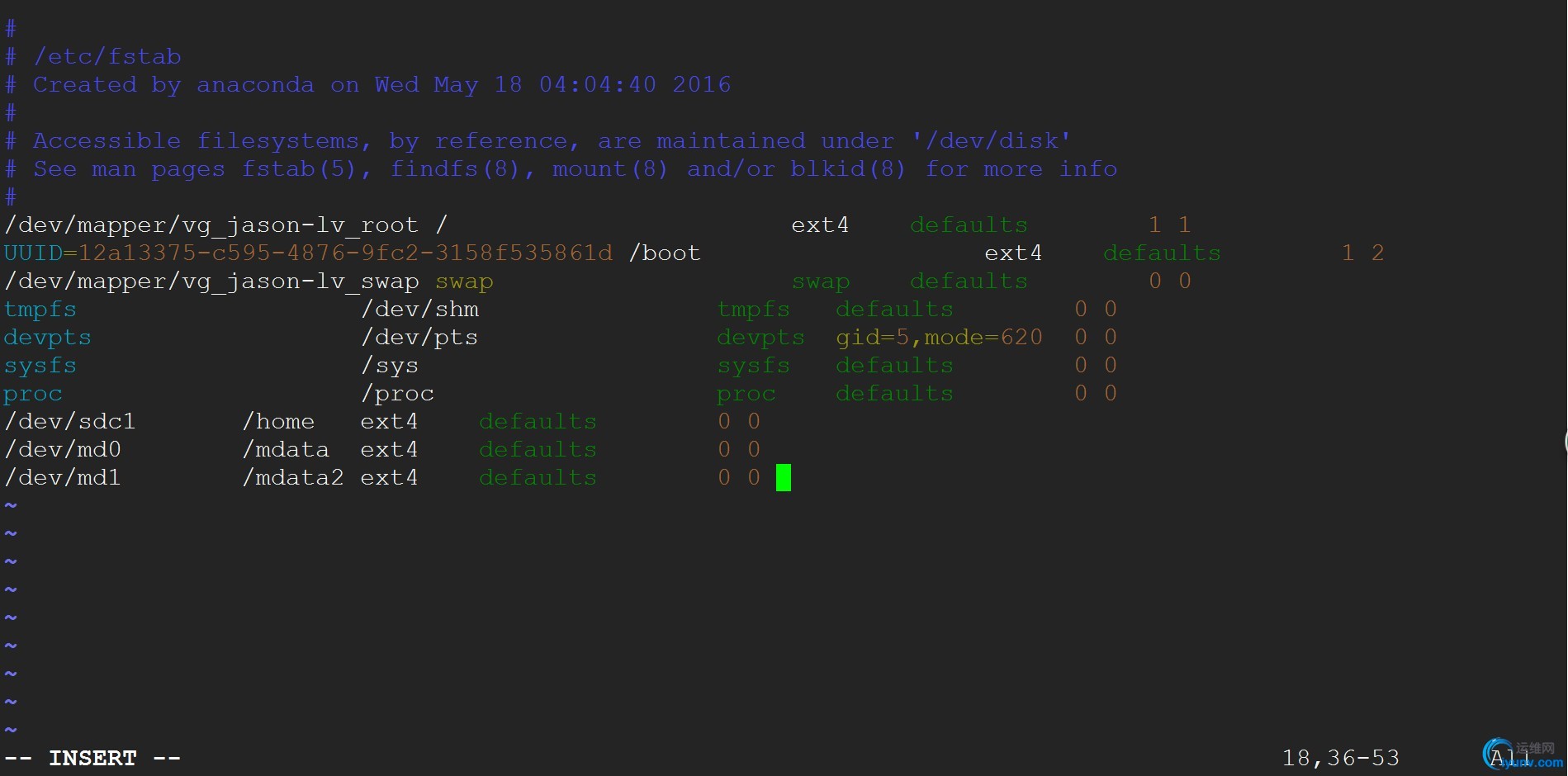

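V. Editing /etc/fstab to mount automatically at boot

Edit the fstab configuration file so that the array is mounted automatically after boot.

A. Back up fstab

B. Edit the file

C. Test the change

Unmount

Remount

Check the mount information

1. First back up the fstab file:

[iyunv@test2 etc]# cp fstab fstab.bak2

2. Edit the configuration file: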

[iyunv@test2 etc]# vim fstab

[iyunv@test2 etc]#
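The exact line added to fstab is not shown here. A typical entry for this array would mount /dev/md1 on /mdata2 as ext4 with default options; the sketch below appends such a line only if /mdata2 is not listed yet, which avoids duplicate entries when the step is repeated. The function name and the "defaults 0 0" options are assumptions, not taken from the original.

```bash
# Hypothetical helper: append an fstab entry unless the mount point is already listed.
# The options "defaults 0 0" (no dump, no boot-time fsck) are an assumption.
# Usage: add_fstab_entry FSTAB_FILE DEVICE MOUNT_POINT FSTYPE
add_fstab_entry() {
  local fstab=$1 dev=$2 mnt=$3 fstype=$4
  # Field 2 of a non-comment fstab line is the mount point
  if awk -v m="$mnt" '$1 !~ /^#/ && $2 == m { found = 1 } END { exit !found }' "$fstab"; then
    echo "already present: $mnt"
    return 0
  fi
  printf '%s\t%s\t%s\tdefaults\t0 0\n' "$dev" "$mnt" "$fstype" >> "$fstab"
}
```

Try it on a copy first (for example add_fstab_entry /tmp/fstab.test /dev/md1 /mdata2 ext4) and compare with the backup. Referring to the filesystem as UUID=... (as printed by blkid /dev/md1) instead of /dev/md1 is a common, more robust choice, since md device names can change between boots.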

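3. Test whether it works:

a. Unmount first, then check the mount information (no output means it is unmounted):

[iyunv@test2 etc]# umount /dev/md1

[iyunv@test2 etc]# mount | grep "md1"

b. Mount by mount point only, which works only if the new fstab entry is correct, then check: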

[iyunv@test2 etc]# mount /mdata2

[iyunv@test2 etc]# mount | grep "md1"

/dev/md1 on /mdata2 type ext4 (rw)

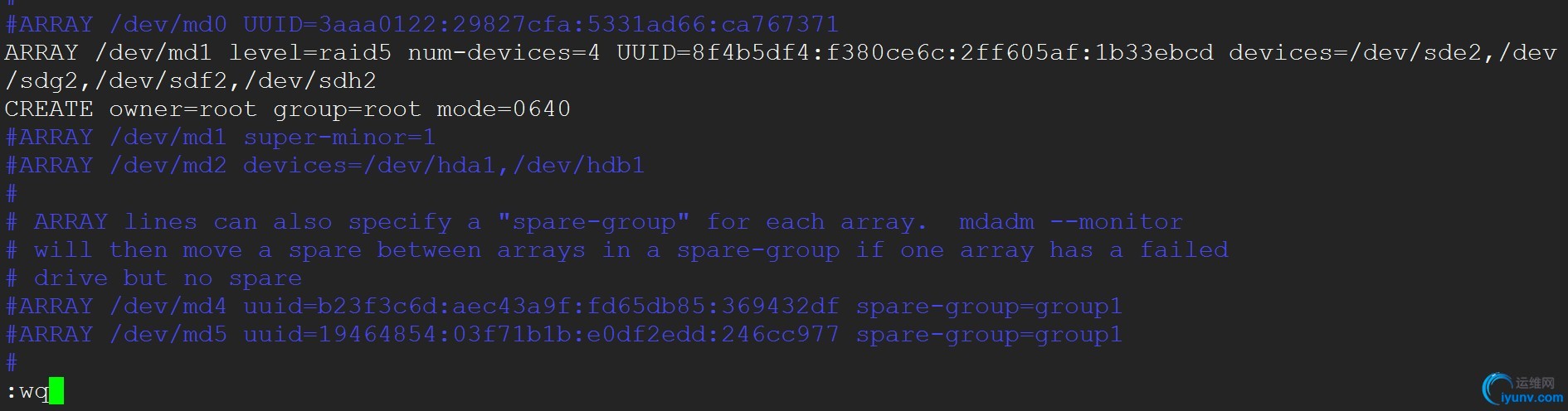

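VI. Device recovery

1. Scan or view the array information

2. Create mdadm.conf based on the example file

Locate mdadm.conf

Edit it

3. Start

4. Stop

Stop

Unmount

Stop again

Start

Mount

5. Device recovery

Check the state beforehand

Mark a member as faulty

Remove it

Check the state after removal

1. Scan or view the array information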

[iyunv@test2 etc]# mdadm -vDs

ARRAY /dev/md0 level=raid5 num-devices=4 metadata=1.2 name=test2:0 UUID=10a00bcf:d67ab596:18b5fa25:8ef335e1

devices=/dev/sde1,/dev/sdg1,/dev/sdh1,/dev/sdf1

ARRAY /dev/md1 level=raid5 num-devices=4 metadata=1.2 spares=1 name=test2:1 UUID=8f4b5df4:f380ce6c:2ff605af:1b33ebcd

devices=/dev/sde2,/dev/sdf2,/dev/sdg2,/dev/sdh2,/dev/sdc3

[iyunv@test2 etc]#

2. Create mdadm.conf based on the example file

a. Locate mdadm.conf

[iyunv@test2 /]# locate mdadm.conf

/etc/mdadm.conf

/usr/share/doc/mdadm-3.2.6/mdadm.conf-example

/usr/share/man/man5/mdadm.conf.5.gz

b. Edit

First back up the existing /etc/mdadm.conf, then copy the example over it:

[iyunv@test2 /]# cd /usr/share/doc/mdadm-3.2.6/

[iyunv@test2 mdadm-3.2.6]# vim mdadm.conf-example

[iyunv@test2 mdadm-3.2.6]# cd /etc

[iyunv@test2 etc]# ls -l | grep mdadm.conf

-rw-r--r-- 1 root root 181 Jun 16 17:05 mdadm.conf

[iyunv@test2 etc]# cp mdadm.conf mdadm.conf.bak

[iyunv@test2 etc]# cd /usr/share/doc/mdadm-3.2.6/

cp: overwrite `/etc/mdadm.conf'? y

[iyunv@test2 mdadm-3.2.6]# cd /etc

[iyunv@test2 etc]# vim mdadm.conf

[iyunv@test2 etc]#

View the example:

[iyunv@test2 etc]# vim mdadm.conf

[jason@test2 ~]$ vim /usr/share/doc/mdadm-3.2.6/mdadm.conf-example

# mdadm configuration file

#

# mdadm will function properly without the use of a configuration file,

# but this file is useful for keeping track of arrays and member disks.

# In general, a mdadm.conf file is created, and updated, after arrays

# are created. This is the opposite behavior of /etc/raidtab which is

# created prior to array construction.

#

#

# the config file takes two types of lines:

#

# DEVICE lines specify a list of devices of where to look for

# potential member disks

#

# ARRAY lines specify information about how to identify arrays so

# so that they can be activated

#

# You can have more than one device line and use wild cards. The first

# example includes SCSI the first partition of SCSI disks /dev/sdb,

# /dev/sdc, /dev/sdd, /dev/sdj, /dev/sdk, and /dev/sdl. The second

# line looks for array slices on IDE disks.

#

#DEVICE /dev/sd[bcdjkl]1

#DEVICE /dev/hda1 /dev/hdb1

#

# If you mount devfs on /dev, then a suitable way to list all devices is:

#DEVICE /dev/discs/*/*

#

#

# The AUTO line can control which arrays get assembled by auto-assembly,

# meaing either "mdadm -As" when there are no 'ARRAY' lines in this file,

# or "mdadm --incremental" when the array found is not listed in this file.

# By default, all arrays that are found are assembled.

# If you want to ignore all DDF arrays (maybe they are managed by dmraid),

# and only assemble 1.x arrays if which are marked for 'this' homehost,

# but assemble all others, then use

#AUTO -ddf homehost -1.x +all

#

# ARRAY lines specify an array to assemble and a method of identification.

# Arrays can currently be identified by using a UUID, superblock minor number,

# or a listing of devices.

#

# super-minor is usually the minor number of the metadevice

# UUID is the Universally Unique Identifier for the array

# Each can be obtained using

#

# mdadm -D <md>

#

#ARRAY /dev/md0 UUID=3aaa0122:29827cfa:5331ad66:ca767371

#ARRAY /dev/md1 super-minor=1

#ARRAY /dev/md2 devices=/dev/hda1,/dev/hdb1

#

# ARRAY lines can also specify a "spare-group" for each array. mdadm --monitor

# will then move a spare between arrays in a spare-group if one array has a failed

# drive but no spare

#ARRAY /dev/md4 uuid=b23f3c6d:aec43a9f:fd65db85:369432df spare-group=group1

#ARRAY /dev/md5 uuid=19464854:03f71b1b:e0df2edd:246cc977 spare-group=group1

#

# When used in --follow (aka --monitor) mode, mdadm needs a

# mail address and/or a program. This can be given with "mailaddr"

# and "program" lines to that monitoring can be started using

#mdadm --follow --scan & echo $! > /run/mdadm/mon.pid

# If the lines are not found, mdadm will exit quietly

#MAILADDR root@mydomain.tld

#PROGRAM /usr/sbin/handle-mdadm-events

[iyunv@test2 jason]# mdadm -S /dev/md1

mdadm: unrecognised word on ARRAY line: levle=raid5

mdadm: Unknown keyword devices=/dev/sde2,/dev/sdg2,/dev/sdf2,/dev/sdh2

mdadm: Cannot get exclusive access to /dev/md1:Perhaps a running process, mounted filesystem or active volume group?

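Analysis: there are two errors in the configuration file:

A spelling error: levle instead of level

devices= was rejected as an unknown keyword

(The third message, Cannot get exclusive access, appears because /dev/md1 is still mounted; this is handled in step 4 below.)

man mdadm.conf

devices=

The value is a comma separated list of device names or device name patterns. Only devices with names which match one entry in the list will be used to assemble the array. Note that the devices listed there must also be listed on a DEVICE line.

Explanation: devices= is only recognized as part of an ARRAY line. mdadm.conf treats a line that starts with whitespace as a continuation of the previous line, so devices= must be on the ARRAY line itself or on an indented line directly below it; a devices= line that starts in the first column is parsed as a new, unknown keyword. In addition, every device listed in devices= must also be listed on a DEVICE line.

Fix the spelling of level:

[iyunv@test2 jason]# vim /etc/mdadm.conf

Fix devices=:

[iyunv@test2 jason]# vim /etc/mdadm.conf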
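Rather than retyping the scan output by hand (which is how levle crept in), the configuration lines can be generated from mdadm -vDs. The sketch below is hypothetical and assumes the two-lines-per-array format shown in step 1: it joins each devices= list onto its ARRAY line and writes one DEVICE line covering every member, so both rules from the man page are satisfied. Note that for md1 it keeps the spare /dev/sdc3 in the list.

```bash
# Hypothetical helper: turn `mdadm -vDs` output (stdin) into mdadm.conf lines.
# Each "devices=..." line is appended to the ARRAY line before it, and a single
# DEVICE line lists every member so that devices= entries are also on a DEVICE line.
mdadm_conf_from_scan() {
  awk '
    $1 == "ARRAY" { arrays[++n] = $0; next }
    $1 ~ /^devices=/ && n > 0 {
      arrays[n] = arrays[n] " " $1
      cnt = split(substr($1, 9), devs, ",")   # 9 = length("devices=") + 1
      for (i = 1; i <= cnt; i++) all = all " " devs[i]
    }
    END {
      if (all != "") print "DEVICE" all
      for (i = 1; i <= n; i++) print arrays[i]
    }
  '
}
```

For example, mdadm -vDs | mdadm_conf_from_scan > /tmp/mdadm.conf.new, then review the result and merge it into /etc/mdadm.conf (the original file was backed up as mdadm.conf.bak).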

Check md1:

[iyunv@test2 jason]# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md1 : active raid5 sde2[0] sdh2[5] sdg2[2] sdf2[1]

6306816 blocks super 1.2 level 5, 512k chunk, algorithm 2 [4/4] [UUUU]

md0 : active raid5 sde1[5] sdf1[4] sdh1[2] sdg1[1]

6306816 blocks super 1.2 level 5, 512k chunk, algorithm 2 [4/4] [UUUU]

unused devices: <none>

Check the owner and group of md1:

[iyunv@test2 jason]# ls -lh /dev/md1

brw-rw---- 1 root disk 9, 1 Jun 20 20:34 /dev/md1

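Not achieved: changing the group and mode through the CREATE line.

4. Stop

Stop (the first attempt above failed because the filesystem was still mounted)

Unmount

[iyunv@test2 jason]# umount /dev/md1

Stop again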

[iyunv@test2 jason]# mdadm -S /dev/md1

mdadm: stopped /dev/md1

[iyunv@test2 jason]# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md0 : active raid5 sde1[5] sdf1[4] sdh1[2] sdg1[1]

6306816 blocks super 1.2 level 5, 512k chunk, algorithm 2 [4/4] [UUUU]

unused devices: <none>
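The first mdadm -S failed with Cannot get exclusive access because /dev/md1 was still mounted. A small guard can check /proc/mounts before stopping the array. This is a hypothetical sketch: is_mounted and safe_stop_md are illustrative names, and safe_stop_md simply runs umount and mdadm -S in the order used above.

```bash
# Hypothetical helper: succeed if DEVICE appears as a mounted source in a
# /proc/mounts-style file (fields: device mountpoint fstype options dump pass).
# Usage: is_mounted DEVICE [MOUNTS_FILE]   (defaults to /proc/mounts)
is_mounted() {
  local dev=$1 mounts=${2:-/proc/mounts}
  awk -v d="$dev" '$1 == d { found = 1 } END { exit !found }' "$mounts"
}

# Hypothetical wrapper: unmount the array if needed, then stop it (requires root).
safe_stop_md() {
  local dev=$1
  if is_mounted "$dev"; then
    umount "$dev" || return 1
  fi
  mdadm -S "$dev"
}
```

safe_stop_md /dev/md1 reproduces the unmount-then-stop sequence above in one step.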

Start

[iyunv@test2 jason]# mdadm -A /dev/md1

mdadm: /dev/md1 has been started with 4 drives.

[iyunv@test2 jason]# ls -l /dev/md1

brw-rw---- 1 root disk 9, 1 Jun 20 20:55 /dev/md1

Mount (no mount point is needed here because of the fstab entry):

[iyunv@test2 jason]# mount /dev/md1

[iyunv@test2 jason]# mount | grep "md1"

/dev/md1 on /mdata2 type ext4 (rw)

[iyunv@test2 jason]#

5. Device recovery

Check the state beforehand

[iyunv@test2 jason]# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md1 : active raid5 sde2[0] sdh2[5] sdg2[2] sdf2[1]

6306816 blocks super 1.2 level 5, 512k chunk, algorithm 2 [4/4] [UUUU]

md0 : active raid5 sde1[5] sdf1[4] sdh1[2] sdg1[1]

6306816 blocks super 1.2 level 5, 512k chunk, algorithm 2 [4/4] [UUUU]

unused devices: <none>

Mark /dev/sde2 as faulty (mdadm manage mode, -f/--fail):

mdadm: set /dev/sde2 faulty in /dev/md1

[iyunv@test2 jason]# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md1 : active raid5 sdh2[5] sdf2[1] sde2[0](F) sdg2[2]

6306816 blocks super 1.2 level 5, 512k chunk, algorithm 2 [4/3] [_UUU]

unused devices: <none>
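In the output above the failed member is tagged (F), while spares are tagged (S). The sketch below lists the members carrying a given tag, which gives the device to pass to mdadm /dev/md1 -r in the next step; running it with S at this point would also show that md1 has no spare left. The function name is hypothetical, and it assumes member entries of the form name[number](flag) as shown above.

```bash
# Hypothetical helper: list members of each array that carry a given flag in
# /proc/mdstat text on stdin, e.g. F (faulty) or S (spare).
# Member entries look like "sde2[0](F)"; active members have no suffix.
# Usage: mdstat_members_by_flag FLAG < /proc/mdstat
mdstat_members_by_flag() {
  awk -v f="($1)" '
    $1 ~ /^md[0-9]+$/ && $2 == ":" {
      for (i = 3; i <= NF; i++) {
        start = length($i) - length(f) + 1
        if (start > 1 && substr($i, start) == f) {
          dev = $i
          sub(/\[.*/, "", dev)   # drop "[0](F)", keeping the device name
          print $1, dev
        }
      }
    }
  '
}
```

For the state shown above, mdstat_members_by_flag F < /proc/mdstat prints md1 sde2.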

Remove it

[iyunv@test2 jason]# mdadm /dev/md1 -r /dev/sde2

mdadm: hot removed /dev/sde2 from /dev/md1

Check the state after removal

[iyunv@test2 jason]# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md1 : active raid5 sdh2[5] sdf2[1] sdg2[2]

6306816 blocks super 1.2 level 5, 512k chunk, algorithm 2 [4/3] [_UUU]

unused devices: <none>

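Not achieved: automatic rebuild onto the hot spare. By this point /proc/mdstat no longer lists sdc3 as a spare of md1, so there was no spare for md to rebuild onto. A likely cause is that the devices= list in /etc/mdadm.conf (visible in the error message above) omits /dev/sdc3, so the spare was left out when md1 was assembled again; adding it back with mdadm /dev/md1 -a /dev/sdc3 should let the rebuild start.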
