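1. RHCS: Red Hat Cluster Suite

Core RHCS packages: cman, rgmanager, system-config-cluster

2. Prerequisites for deploying an RHCS cluster:

2.1. Time is synchronized on all hosts; running an NTP service is recommended.

2.2. The jump host and every node can resolve one another's names, and each host's configured hostname matches the output of 'uname -n'.

2.3. SSH key-based authentication is set up between the jump host and each node.

2.4. Yum is configured on every node.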
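
The hostname requirement in 2.2 is easy to check mechanically. The following is a minimal sketch, not part of the original procedure: check_node_name is a hypothetical helper that compares the name a host is supposed to have with what 'uname -n' reports on the machine where it runs.

```shell
#!/bin/sh
# Compare the name a host is expected to have with what 'uname -n' reports.
# check_node_name is an illustrative helper, not an RHCS tool.
check_node_name() {
    expected="$1"
    actual="$(uname -n)"
    if [ "$expected" = "$actual" ]; then
        echo "OK: $actual"
    else
        echo "MISMATCH: expected $expected, got $actual"
        return 1
    fi
}
```

On node1, for instance, it would be called as `check_node_name node1.willow.com`; a non-zero exit status means the hostname should be fixed before going further.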

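3. This lab builds the RHCS cluster from three node hosts, with the GW host acting as a jump host. The addresses are assigned as follows:

1.1.1.18 node1.willow.com node1

1.1.1.19 node2.willow.com node2

1.1.1.20 node3.willow.com node3

1.1.1.88 GW.willow.com GW # jump host, used to manage node1, node2 and node3

4. From the GW.willow.com jump host, install cman, rgmanager and system-config-cluster on each of the three nodes: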

[iyunv@GW ~]# for I in {1..3}; do ssh node$I 'yum install -y cman rgmanager system-config-cluster'; done

[iyunv@GW ~]#
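
The same "for I in {1..3}; do ssh node$I ...; done" loop is reused throughout this walkthrough, and it does not say which node failed when something goes wrong. A small wrapper along the following lines could help. This is a sketch under assumptions: run_on_nodes, NODES and REMOTE are names invented here, and REMOTE defaults to ssh but can be set to echo for a dry run.

```shell
#!/bin/sh
# Run one command on every node and report which nodes failed.
# NODES and REMOTE are assumptions for illustration; REMOTE defaults to ssh
# and can be overridden (for example with 'echo') for a dry run.
NODES="${NODES:-node1 node2 node3}"
REMOTE="${REMOTE:-ssh}"

run_on_nodes() {
    failed=""
    for n in $NODES; do
        echo "== $n =="
        $REMOTE "$n" "$1" || failed="$failed $n"
    done
    if [ -n "$failed" ]; then
        echo "failed on:$failed" >&2
        return 1
    fi
}
```

With this in place, the install step above would read `run_on_nodes 'yum install -y cman rgmanager system-config-cluster'`.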

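5. Requirements before the RHCS cluster services can start:

5.1. Each cluster has a unique cluster name.

5.2. At least one fence device is defined.

5.3. The cluster should have at least three nodes; a two-node cluster needs a qdisk quorum disk.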

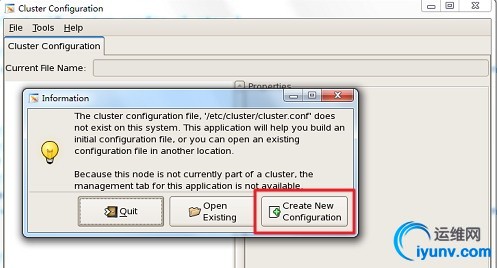

6. Create the cluster name, the nodes and a fence device

[iyunv@node1 cluster]# system-config-cluster &

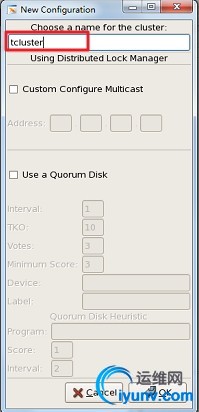

6.1. Create a new cluster name:

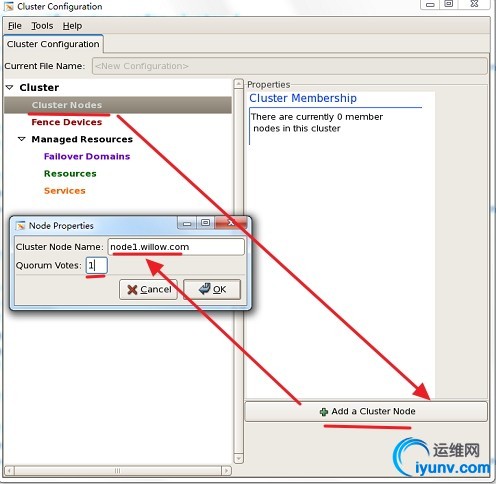

6.2. Add the three node hosts:

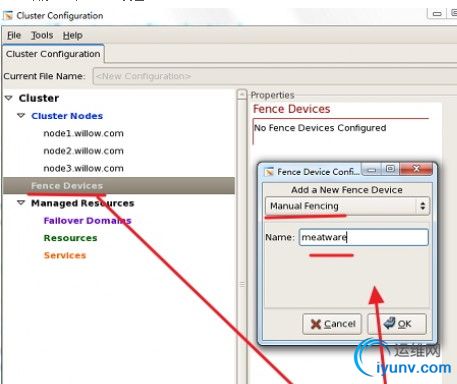

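6.3. Add a fence device:

6.4. Save the configuration with File->Save. By default the cluster configuration file is written to /etc/cluster/cluster.conf

6.5. Start the cman service on every node; otherwise the fencing step hangs and the service cannot finish starting.

Starting cman also starts the ccsd service automatically, which propagates cluster.conf to the other nodes

so that cluster.conf stays identical on all nodes.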

[iyunv@node1 cluster]# service cman start

Starting cluster:

Loading modules... done

Mounting configfs... done

Starting ccsd... done

Starting cman... done

Starting daemons... done

Starting fencing... done

[ OK ]

[iyunv@node2 cluster]# service cman start

[iyunv@node3 cluster]# service cman start

6.6. Start the rgmanager service, which manages resources and services:

[iyunv@GW ~]# for I in {1..3}; do ssh node$I 'service rgmanager start'; done

6.7. Install Apache (httpd) as a test service for the cluster:

[iyunv@GW ~]# for I in {1..3}; do ssh node$I 'yum install -y httpd'; done

[iyunv@GW ~]# ssh node1 'echo node1.willow.com > /var/www/html/index.html'

[iyunv@GW ~]# ssh node2 'echo node2.willow.com > /var/www/html/index.html'

[iyunv@GW ~]# ssh node3 'echo node3.willow.com > /var/www/html/index.html'

Disable httpd at boot so that the cluster, rather than init, starts it:

[iyunv@GW ~]# for I in {1..3}; do ssh node$I 'chkconfig httpd off'; done

6.8. View basic cluster information:

[iyunv@node1 ~]# cman_tool status

Version: 6.2.0

Config Version: 2

Cluster Name: tcluster

Cluster Id: 28212

Cluster Member: Yes

Cluster Generation: 12

Membership state: Cluster-Member

Nodes: 3

Expected votes: 3

Total votes: 3

Node votes: 1

Quorum: 2

Active subsystems: 8

Flags: Dirty

Ports Bound: 0 177

Node name: node1.willow.com

Node ID: 1

Multicast addresses: 239.192.110.162

Node addresses: 1.1.1.18
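
The "Expected votes: 3" and "Quorum: 2" values above are related by cman's default majority rule: quorum is half the expected votes, rounded down, plus one. A tiny sketch of that arithmetic (the function name quorum is chosen here for illustration):

```shell
#!/bin/sh
# Quorum under the default majority rule: floor(expected_votes / 2) + 1.
quorum() {
    echo $(( $1 / 2 + 1 ))
}

quorum 3   # three one-vote nodes, as in the output above; prints 2
```

The same rule gives a quorum of 2 for a two-node cluster, so losing either node loses quorum, which is why 5.3 calls for a qdisk in the two-node case.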

6.9. View the status of each cluster node:

[iyunv@node1 ~]# clustat

Cluster Status for tcluster @ Thu Aug 18 10:37:31 2016

Member Status: Quorate

Member Name ID Status

------ ---- ---- ------

node1.willow.com 1 Online, Local

node2.willow.com 2 Online

node3.willow.com 3 Online

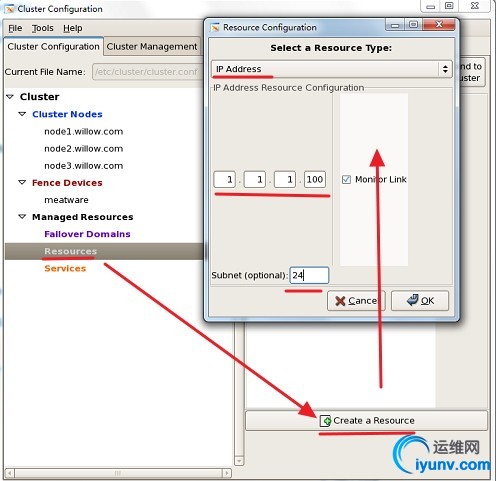

6.10. Add a VIP and an httpd cluster resource:

[iyunv@node1 cluster]# system-config-cluster &

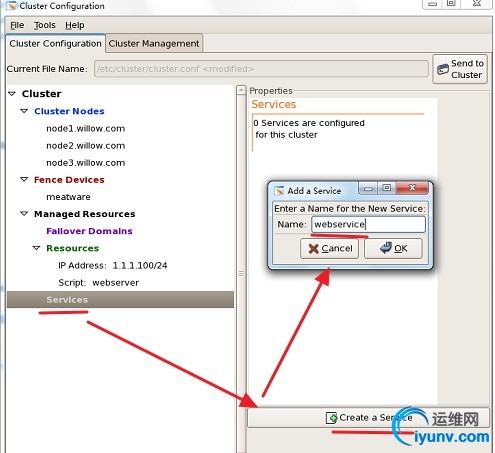

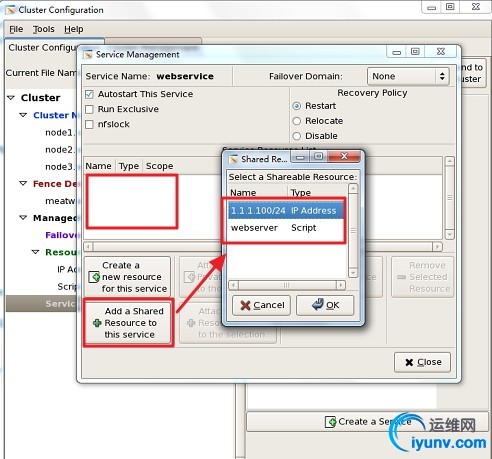

6.11. Resources cannot be started on their own; they have to be placed in a service. Create a service named webservice as follows:

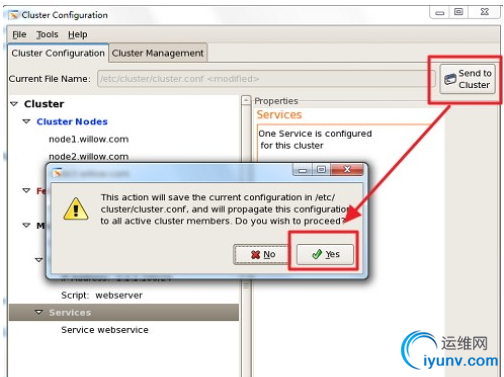

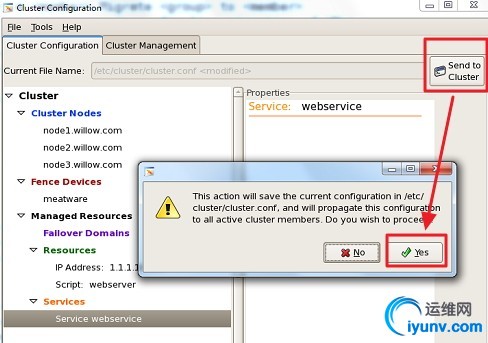

6.12. Propagate the updated cluster.conf to the other nodes again:

6.13. Check which node the webservice service is running on:

[iyunv@node1 ~]# clustat

Cluster Status for tcluster @ Thu Aug 18 10:55:17 2016

Member Status: Quorate

Member Name ID Status

------ ---- ---- ------

node1.willow.com 1 Online, Local, rgmanager

node2.willow.com 2 Online, rgmanager

node3.willow.com 3 Online, rgmanager

Service Name Owner (Last) State

------- ---- ----- ------ -----

service:webservice node1.willow.com started
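
For scripting, the owner of a service can be pulled out of the clustat output shown above. The helper below is a hypothetical sketch that reads clustat output on stdin and relies on the column order seen here (service name, owner, state).

```shell
#!/bin/sh
# Print the owner of a service, reading 'clustat' output on stdin.
# service_owner is a hypothetical helper; it relies on the column layout
# shown above (service name, owner, state).
service_owner() {
    awk -v svc="service:$1" '$1 == svc { print $2 }'
}
```

Typical use would be `clustat | service_owner webservice`. When a service is stopped, clustat shows the last owner in parentheses, so the result would need extra handling in that case.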

6.14. Check that the VIP is up. The ifconfig command does not show it, so use ip addr show:

[iyunv@node1 ~]# ip addr show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast qlen 1000

link/ether 00:0c:29:10:47:b6 brd ff:ff:ff:ff:ff:ff

inet 1.1.1.18/24 brd 1.1.1.255 scope global eth0

inet 1.1.1.100/24 scope global secondary eth0

Browsing to http://1.1.1.100 now shows the web page served by node1.
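
To find out from a script whether a node currently holds the VIP, the ip addr show output can be filtered. A minimal sketch, assuming the output format shown above; has_addr is a name made up for this example.

```shell
#!/bin/sh
# Succeed if the given address appears as an inet address in 'ip addr show'
# output read from stdin. has_addr is an illustrative helper.
has_addr() {
    awk -v ip="$1" '$1 == "inet" { split($2, a, "/"); if (a[1] == ip) found = 1 }
                    END { exit !found }'
}
```

For example: `ip addr show eth0 | has_addr 1.1.1.100 && echo "VIP is on this node"`. The address is compared exactly, so 1.1.1.10 does not match 1.1.1.100.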

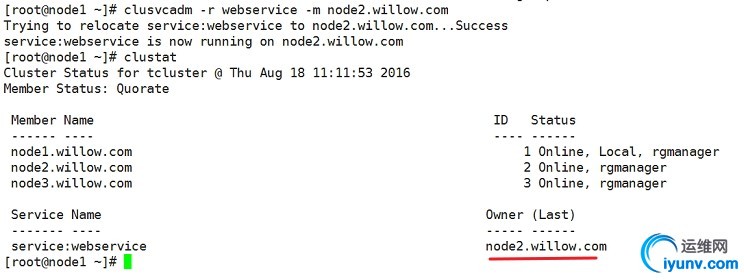

6.15. View the clusvcadm help:

[iyunv@node2 cluster]# clusvcadm -h

6.16. Relocate the webservice service group, currently running on node1.willow.com, to node2.willow.com:

[iyunv@node1 ~]# clusvcadm -r webservice -m node2.willow.com

Trying to relocate service:webservice to node2.willow.com...Success

service:webservice is now running on node2.willow.com

[iyunv@node1 ~]# clustat

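Browsing to http://1.1.1.100 now shows the web page served by node2.

7. Use NFS so that all three nodes serve the same page from shared storage

7.1. Create the NFS export and add an NFS resource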

[iyunv@GW ~]# mkdir /web/ha/

[iyunv@GW ~]# vim /etc/exports

/web/ha 1.1.1.0/24(ro)

[iyunv@GW ~]# service nfs start

[iyunv@GW ~]# chkconfig nfs on

[iyunv@node1 cluster]# system-config-cluster &

7.2. Restart the webservice service group

[iyunv@node1 ~]# clusvcadm -R webservice

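Browsing to http://1.1.1.100 now shows the shared web page.

8. Configure and start a basic cluster from the command line; resources and services, however, cannot be configured this way

8.1. Stop the cluster services and clear the cluster configuration files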

[iyunv@node1 ~]# clusvcadm -s webservice

[iyunv@GW ~]# for I in {1..3};do ssh node$I 'service rgmanager stop'; done

[iyunv@GW ~]# for I in {1..3};do ssh node$I 'service cman stop'; done

[iyunv@GW ~]# for I in {1..3};do ssh node$I 'rm -rf /etc/cluster/*'; done

8.2. Create the cluster name

[iyunv@node1 cluster]# ccs_tool create tcluster

8.3. Add a fence device

[iyunv@node1 cluster]# ccs_tool addfence meatware fence_manual

8.3. Add the nodes

[iyunv@node1 cluster]# ccs_tool addnode -h # show help

[iyunv@node1 cluster]# ccs_tool addnode -n 1 -v 1 -f meatware node1.willow.com

[iyunv@node1 cluster]# ccs_tool addnode -n 2 -v 1 -f meatware node2.willow.com

[iyunv@node1 cluster]# ccs_tool addnode -n 3 -v 1 -f meatware node3.willow.com

[iyunv@node1 cluster]# ccs_tool lsnode # list the nodes just added

Cluster name: tcluster, config_version: 5

Nodename Votes Nodeid Fencetype

node1.willow.com 1 1 meatware

node2.willow.com 1 2 meatware

node3.willow.com 1 3 meatware
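
The article does not show the resulting /etc/cluster/cluster.conf. As a rough illustration of its general shape after the ccs_tool steps above, the function below prints a skeleton with one fence device and one entry per node. It is a sketch only: print_cluster_conf is a made-up helper, and the file ccs_tool actually writes may differ in config_version, layout, and in any extra attributes the fence agent needs.

```shell
#!/bin/sh
# Print a cluster.conf skeleton roughly matching the ccs_tool steps above.
# Illustrative only: the real file is written by ccs_tool and its
# config_version and exact layout may differ.
print_cluster_conf() {
    cat <<EOF
<?xml version="1.0"?>
<cluster name="$1" config_version="1">
  <clusternodes>
EOF
    shift
    id=1
    for node in "$@"; do
        cat <<EOF
    <clusternode name="$node" nodeid="$id" votes="1">
      <fence>
        <method name="single">
          <device name="meatware"/>
        </method>
      </fence>
    </clusternode>
EOF
        id=$((id + 1))
    done
    cat <<EOF
  </clusternodes>
  <fencedevices>
    <fencedevice name="meatware" agent="fence_manual"/>
  </fencedevices>
  <rm/>
</cluster>
EOF
}
```

Calling `print_cluster_conf tcluster node1.willow.com node2.willow.com node3.willow.com` prints the skeleton to stdout, which is handy for comparing against the real file rather than for replacing it.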

8.4. Start the cman and rgmanager cluster services

[iyunv@node1 cluster]# service cman start

[iyunv@node2 cluster]# service cman start

[iyunv@node3 cluster]# service cman start

[iyunv@GW ~]# for I in {1..3}; do ssh node$I 'service rgmanager start'; done

[iyunv@node1 cluster]#

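8.5. Additional resources and services can only be configured with the graphical tool system-config-cluster

9. iSCSI installation and configuration

9.1. Commands and concepts:

Server side, iSCSI Target: the package is scsi-target-utils

Listens on TCP port 3260

Client authentication methods: IP-based, and user-based CHAP authentication

Client side, iSCSI Initiator: the package is iscsi-initiator-utils

9.2. tgtadm, a mode-based command used on the server side

Common --mode values: target, logicalunit, account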

target --op

new, delete, show, update, bind, unbind

logicalunit --op

new, delete

account --op

new, delete, bind, unbind

--lld, short option: -L

--tid, short option: -t

--lun, short option: -l

--backing-store <path>, short option: -b

--initiator-address <address>, short option: -I

--targetname <targetname>, short option: -T

9.3. targetname: target naming convention:

iqn.yyyy-mm.<reversed domain name>[:identifier]

For example: iqn.2016-08.com.willow:tstore.disk1
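
A quick way to catch typos in a target name is to test it against the pattern above before handing it to tgtadm. The check below is a simplified sketch: is_iqn is a hypothetical helper, and the regular expression covers only the basic iqn.yyyy-mm.<reversed domain>[:identifier] shape, not every rule of the iSCSI naming scheme.

```shell
#!/bin/sh
# Check a string against the iqn.yyyy-mm.<reversed domain>[:identifier] form.
# is_iqn is a hypothetical helper; the pattern is a simplification of the
# naming rules, not a full validator.
is_iqn() {
    printf '%s\n' "$1" | grep -Eq '^iqn\.[0-9]{4}-(0[1-9]|1[0-2])\.[a-z0-9]([a-z0-9.-]*[a-z0-9])?(:.+)?$'
}
```

For example, `is_iqn iqn.2016-08.com.willow:tstore.disk1` succeeds, while a name with month 13 or without the iqn. prefix is rejected.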

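9.4. iscsiadm, a mode-based command used on the client side

-m {discovery|node|session|iface}

discovery: find out whether a server exports any targets, and which ones;

node: manage the association with a given target;

session: session management

iface: interface management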

iscsiadm -m discovery [ -d debug_level ] [ -P printlevel ] [ -I iface -t type -p ip:port [ -l ] ]

-d: 0-8

-I:

-t type: SendTargets(st), SLP, and iSNS

-p: IP:port

iscsiadm -m discovery -d 2 -t st -p 172.16.100.100

iscsiadm -m node [ -d debug_level ] [ -L all,manual,automatic ] | [ -U all,manual,automatic ]

iscsiadm -m node [ -d debug_level ] [ [ -T targetname -p ip:port -I ifaceN ] [ -l | -u ] ] [ [ -o operation ] [ -n name ] [ -v value ] ]

iscsi-initiator-utils:

Discovery authentication is not supported;

To use user-based authentication, IP-based access must be granted first;
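
Discovery and login are usually done back to back: each line of SendTargets discovery output has the form "ip:port,tpgt targetname", and the portal and target name from it are passed to iscsiadm -m node with -l. The sketch below turns discovery output into the matching login commands. login_cmds is a name invented for this example, it only prints the commands, and the portal address 1.1.1.88 used in the usage note is an assumption (the GW host in this lab).

```shell
#!/bin/sh
# Turn SendTargets discovery output (lines of "ip:port,tpgt targetname")
# into the matching login commands. login_cmds is an illustrative helper;
# it only prints commands and does not run them.
login_cmds() {
    awk 'NF >= 2 { split($1, a, ","); print "iscsiadm -m node -T " $2 " -p " a[1] " -l" }'
}
```

Typical use would be `iscsiadm -m discovery -t st -p 1.1.1.88 | login_cmds`, reviewing the printed lines before running them.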

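9.5. Install the iSCSI server side: iSCSI Target, package scsi-target-utils

First add a disk, partition or RAID device for testing. Here two new partitions are used, /dev/sda5 and /dev/sda6 (creation steps omitted).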

[iyunv@GW ~]# yum install -y scsi-target-utils

[iyunv@GW ~]# service tgtd start

[iyunv@GW ~]# chkconfig tgtd on

Create a new target and name it:

[iyunv@GW ~]# tgtadm --lld iscsi --mode target --op new --targetname iqn.2016-08.com.willow:tstore.disk1 --tid 1

Show the target:

[iyunv@GW ~]# tgtadm --lld iscsi --mode target --op show

Target 1: iqn.2016-08.com.willow:tstore.disk1

System information:

Driver: iscsi

State: ready

I_T nexus information:

LUN information:

LUN: 0

Type: controller

SCSI ID: IET 00010000

SCSI SN: beaf10

Size: 0 MB, Block size: 1

Online: Yes

Removable media: No

Readonly: No

Backing store type: null

Backing store path: None

Backing store flags:

Account information:

ACL information:

Add a LUN:

[iyunv@GW ~]# tgtadm --lld iscsi --mode logicalunit --op new --tid 1 --lun 1 --backing-store /dev/sda6

Show the target:

[iyunv@GW ~]# tgtadm --lld iscsi --mode target --op show

Target 1: iqn.2016-08.com.willow:tstore.disk1

System information:

Driver: iscsi

State: ready

I_T nexus information:

LUN information:

LUN: 0

Type: controller

SCSI ID: IET 00010000

SCSI SN: beaf10

Size: 0 MB, Block size: 1

Online: Yes

Removable media: No

Readonly: No

Backing store type: null

Backing store path: None

Backing store flags:

LUN: 1

Type: disk

SCSI ID: IET 00010001

SCSI SN: beaf11

Size: 1012 MB, Block size: 512

Online: Yes

Removable media: No

Readonly: No

Backing store type: rdwr

Backing store path: /dev/sda6

Backing store flags:

Account information:

ACL information:

Grant access by IP (IP-based authentication):

[iyunv@GW ~]# tgtadm --lld iscsi --mode target --op bind --tid 1 --initiator-address 1.1.1.0/24

Show the target:

[iyunv@GW ~]# tgtadm --lld iscsi --mode target --op show

Target 1: iqn.2016-08.com.willow:tstore.disk1

System information:

Driver: iscsi

State: ready

I_T nexus information:

LUN information:

LUN: 0

Type: controller

SCSI ID: IET 00010000

SCSI SN: beaf10

Size: 0 MB, Block size: 1

Online: Yes

Removable media: No

Readonly: No

Backing store type: null

Backing store path: None

Backing store flags:

LUN: 1

Type: disk

SCSI ID: IET 00010001

SCSI SN: beaf11

Size: 1012 MB, Block size: 512

Online: Yes

Removable media: No

Readonly: No

Backing store type: rdwr

Backing store path: /dev/sda6

Backing store flags:

Account information:

ACL information:

1.1.1.0/24
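
The three tgtadm steps above (create the target, add the LUN, bind the ACL) can be collected into one place so they are easy to repeat. The following is a sketch, not the author's method: tgt_cmds and its argument order are invented here, it uses only the options shown above, and it prints the commands instead of running them.

```shell
#!/bin/sh
# Print the three tgtadm steps shown above for one target with one LUN.
# tgt_cmds and its argument order are illustrative; the commands are only
# printed, so review them before piping the output to a shell.
tgt_cmds() {
    tid="$1"; iqn="$2"; dev="$3"; acl="$4"
    echo "tgtadm --lld iscsi --mode target --op new --targetname $iqn --tid $tid"
    echo "tgtadm --lld iscsi --mode logicalunit --op new --tid $tid --lun 1 --backing-store $dev"
    echo "tgtadm --lld iscsi --mode target --op bind --tid $tid --initiator-address $acl"
}
```

For the values used in this section the call is `tgt_cmds 1 iqn.2016-08.com.willow:tstore.disk1 /dev/sda6 1.1.1.0/24`. Settings made with tgtadm are held by the running tgtd, so a script like this is one way to reapply them after a restart.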

9.6. Install the iSCSI client side: iSCSI Initiator, package iscsi-initiator-utils

[iyunv@node1 cluster]# yum install -y iscsi-initiator-utils

Name the client (generate an initiator name):

[iyunv@node1 cluster]# iscsi-iname -p iqn.2016-08.com.willow

iqn.2016-08.com.willow:b66a29864

[iyunv@node1 cluster]# echo "InitiatorName=`iscsi-iname -p iqn.2016-08.com.willow`" > /etc/iscsi/initiatorname.iscsi

[iyunv@node1 cluster]# cat /etc/iscsi/initiatorname.iscsi

InitiatorName=iqn.2016-08.com.willow:2be4ce331532

[iyunv@node1 cluster]#
