
The electronic version can be downloaded from http://caibinbupt.iyunv.com/blog/418846.

Getting Started with Hadoop Core

Applications frequently require more resources than are available on an inexpensive machine. Many organizations find themselves with business processes that no longer fit on a single cost-effective computer.

A simple but expensive solution has been to buy specialty machines that have a lot of memory and many CPUs. This solution scales as far as what is supported by the fastest machines available, and usually the only limiting factor is your budget.

An alternative solution is to build a high-availability cluster.

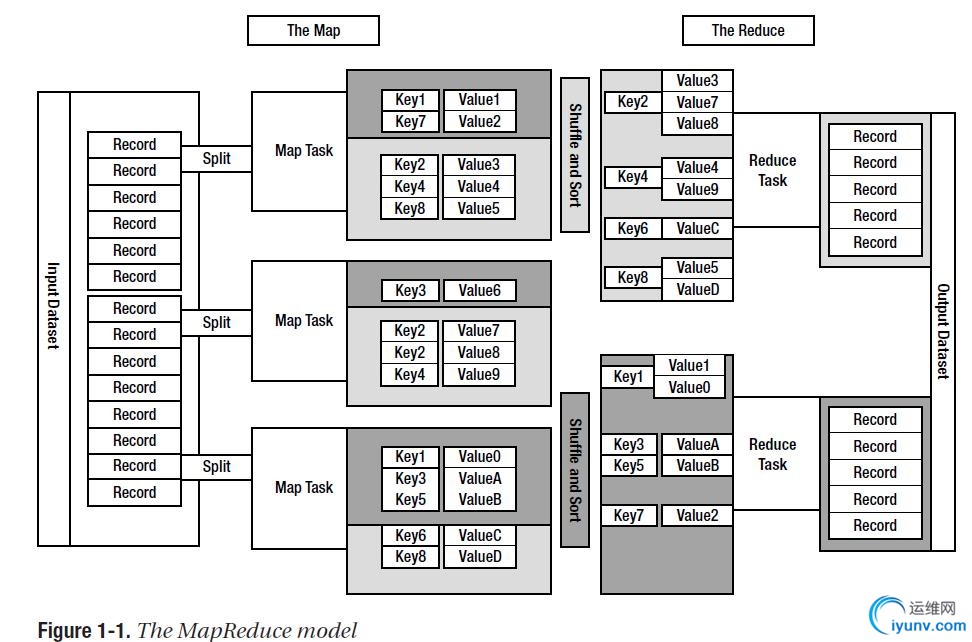

MapReduce Model:

· Map: An initial ingestion and transformation step, in which individual input records can be processed in parallel.

· Reduce: An aggregation or summarization step, in which all associated records must be processed together by a single entity.
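The two steps can be illustrated with a minimal in-memory sketch in plain Java (no Hadoop classes). Word counting is an assumed example job, and the class name WordCountModel is hypothetical. Map runs independently on each input line, the framework's grouping of records by key is imitated with a TreeMap, and reduce sums every value that shares a key.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.TreeMap;

/** In-memory sketch of the MapReduce model (not Hadoop code), using word count as the job. */
final class WordCountModel {

    /** Map step: each line is processed on its own, emitting a (word, 1) pair per word. */
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> out = new ArrayList<>();
        for (String word : line.toLowerCase(Locale.ROOT).split("\\W+")) {
            if (!word.isEmpty()) {
                out.add(Map.entry(word, 1));
            }
        }
        return out;
    }

    /** Reduce step: all values associated with one key are summarized together. */
    static int reduce(String word, List<Integer> counts) {
        int sum = 0;
        for (int c : counts) {
            sum += c;
        }
        return sum;
    }

    static Map<String, Integer> run(List<String> lines) {
        // Group map output by key; the TreeMap also sorts keys, as the shuffle would.
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (String line : lines) {
            for (Map.Entry<String, Integer> kv : map(line)) {
                grouped.computeIfAbsent(kv.getKey(), k -> new ArrayList<>()).add(kv.getValue());
            }
        }
        Map<String, Integer> result = new TreeMap<>();
        grouped.forEach((word, counts) -> result.put(word, reduce(word, counts)));
        return result;
    }

    public static void main(String[] args) {
        System.out.println(run(List.of("the cat sat", "The dog sat")));
    }
}
```

Because each call to map() sees only one line, the map step could run on many machines at once; only reduce needs all records for a key in one place.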

The example MapReduce application is a specialized web crawler that received large sets of URLs as input. The job had several steps:

1. Ingest the URLs.

2. Normalize the URLs.

3. Eliminate duplicate URLs.

4. Filter the URLs.

5. Fetch the URLs.

6. Fingerprint the content items.

7. Update the recently seen sets.

8. Prepare the work list for the next run of the application.
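Steps 1 through 4 can be sketched in plain Java as follows. The normalization rules (lowercase scheme and host, resolve "." and ".." path segments, drop the fragment), the filter predicate, and the class name UrlPrep are all assumptions for illustration; the notes do not say which rules the original job used. Fetching and fingerprinting are left out.

```java
import java.net.URI;
import java.net.URISyntaxException;
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Locale;
import java.util.Set;
import java.util.function.Predicate;

/** Hypothetical sketch of crawler steps 1-4: ingest, normalize, deduplicate, filter. */
final class UrlPrep {

    /** Assumed rules: lowercase scheme and host, resolve "." and "..", drop the #fragment. */
    static String normalize(String raw) throws URISyntaxException {
        URI u = new URI(raw.trim()).normalize();
        String scheme = u.getScheme() == null ? "http" : u.getScheme().toLowerCase(Locale.ROOT);
        String host = u.getHost() == null ? "" : u.getHost().toLowerCase(Locale.ROOT);
        String port = u.getPort() == -1 ? "" : ":" + u.getPort();
        String path = (u.getRawPath() == null || u.getRawPath().isEmpty()) ? "/" : u.getRawPath();
        String query = u.getRawQuery() == null ? "" : "?" + u.getRawQuery();
        return scheme + "://" + host + port + path + query;
    }

    static List<String> prepare(List<String> ingested, Predicate<String> keep) {
        Set<String> unique = new LinkedHashSet<>(); // removes duplicates, keeps first-seen order
        for (String raw : ingested) {
            try {
                unique.add(normalize(raw));
            } catch (URISyntaxException e) {
                // Assumption: malformed URLs are simply dropped.
            }
        }
        List<String> out = new ArrayList<>();
        for (String url : unique) {
            if (keep.test(url)) {
                out.add(url);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        List<String> urls = List.of(
                "HTTP://Example.COM/a/../b#top",
                "http://example.com/b",
                "ftp://example.com/file");
        // Assumed filter: keep only http and https URLs.
        System.out.println(prepare(urls, u -> u.startsWith("http://") || u.startsWith("https://")));
    }
}
```

Normalizing before deduplicating matters: the first two inputs only become identical after normalization.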

The Hadoop-based version of the application ran faster and performed well.

Introducing Hadoop

Hadoop is a top-level Apache project that provides and supports the development of open source software supplying a framework for building highly scalable distributed computing applications.

The two fundamental pieces of Hadoop are the MapReduce framework and the Hadoop Distributed File System (HDFS).

The MapReduce framework requires a shared file system, such as HDFS, S3, NFS, or GFS, but HDFS is the best suited.

Introducing MapReduce

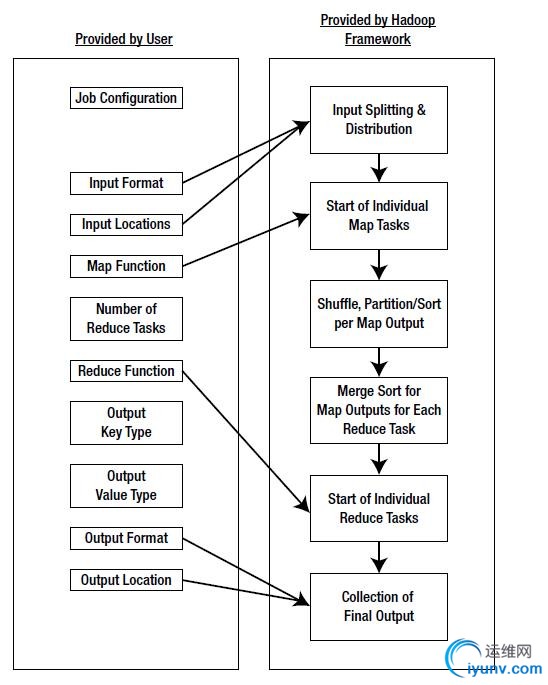

A MapReduce job requires the following:

1. The locations of the input in the distributed file system.

2. The location in the distributed file system for the output.

3. The input format.

4. The output format.

5. The class containing the map function.

6. Optionally, the class containing the reduce function.

7. The JAR files containing the above classes.

If a job does not need a reduce function, the user does not specify a reducer class and no reduce phase is run. The framework partitions the input, then schedules and executes map tasks across the cluster. If requested, it sorts the results of the map tasks and executes the reduce function with the map output. The final output is moved to the output directory, and the job status is reported to the user.
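The flow above, including the map-only case, can be modeled in a single process. This is a hypothetical sketch (JobFlowSketch is not a Hadoop class): the input list is cut into splits that would each become a map task, a null reducer stands for "no reducer class specified" so the map output is written as-is, and otherwise the map output is sorted by key and reduce runs once per key.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.function.BiFunction;
import java.util.function.Function;

/** Single-process sketch of the job flow; not Hadoop's scheduler. */
final class JobFlowSketch {

    static List<String> run(List<String> input, int numSplits,
                            Function<String, Map.Entry<String, String>> mapper,
                            BiFunction<String, List<String>, String> reducer) {
        // 1. Partition the input into splits; each split would be one map task.
        List<Map.Entry<String, String>> mapOutput = new ArrayList<>();
        int splitSize = (input.size() + numSplits - 1) / numSplits;
        for (int start = 0; start < input.size(); start += splitSize) {
            for (String record : input.subList(start, Math.min(start + splitSize, input.size()))) {
                mapOutput.add(mapper.apply(record));
            }
        }
        List<String> output = new ArrayList<>();
        if (reducer == null) {
            // 2a. Map-only job: map output goes straight to the output, unsorted.
            for (Map.Entry<String, String> kv : mapOutput) {
                output.add(kv.getKey() + "\t" + kv.getValue());
            }
            return output;
        }
        // 2b. Otherwise sort the map output by key and call reduce once per unique key.
        Map<String, List<String>> sorted = new TreeMap<>();
        for (Map.Entry<String, String> kv : mapOutput) {
            sorted.computeIfAbsent(kv.getKey(), k -> new ArrayList<>()).add(kv.getValue());
        }
        sorted.forEach((key, values) -> output.add(key + "\t" + reducer.apply(key, values)));
        return output;
    }

    public static void main(String[] args) {
        List<String> input = List.of("b x", "a y", "b z");
        Function<String, Map.Entry<String, String>> mapper = s -> {
            String[] parts = s.split(" ", 2);
            return Map.entry(parts[0], parts[1]);
        };
        System.out.println(run(input, 2, mapper, null));                             // map-only
        System.out.println(run(input, 2, mapper, (k, vs) -> String.join(",", vs))); // with reduce
    }
}
```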

Managing MapReduce:

Two processes manage jobs:

The TaskTracker manages the execution of individual map and reduce tasks on a compute node in the cluster.

The JobTracker accepts job submissions, provides job monitoring and control, and manages the distribution of tasks to the TaskTracker nodes.

Note: one nice feature is that you can add TaskTracker nodes to the cluster while a job is running and have the job spread to the new nodes.
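A toy model helps show why a TaskTracker can join mid-job. TaskTrackers send periodic heartbeats to the JobTracker, which answers with tasks to run; this sketch keeps only that pull-style handoff and ignores task slots, data locality, and failures. TrackerSketch and the tracker and task names are hypothetical.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Queue;

/** Toy JobTracker: hands one pending task to each registered tracker per heartbeat round. */
final class TrackerSketch {
    private final Queue<String> pending = new ArrayDeque<>();
    private final Map<String, List<String>> assigned = new LinkedHashMap<>();

    TrackerSketch(List<String> tasks) {
        pending.addAll(tasks);
    }

    /** A TaskTracker may register at any time, including while the job is running. */
    void addTracker(String name) {
        assigned.putIfAbsent(name, new ArrayList<>());
    }

    /** One round: each tracker heartbeats in registration order and gets at most one task. */
    void heartbeatRound() {
        for (Map.Entry<String, List<String>> tracker : assigned.entrySet()) {
            String task = pending.poll();
            if (task == null) {
                return; // no work left
            }
            tracker.getValue().add(task);
        }
    }

    Map<String, List<String>> assignments() {
        return assigned;
    }

    public static void main(String[] args) {
        TrackerSketch jt = new TrackerSketch(List.of("m0", "m1", "m2", "m3", "m4"));
        jt.addTracker("tt-a");
        jt.addTracker("tt-b");
        jt.heartbeatRound();
        jt.addTracker("tt-c"); // joins after the job has started
        jt.heartbeatRound();
        System.out.println(jt.assignments());
    }
}
```

Because work is handed out only when a tracker asks for it, a newly added node starts receiving tasks on its first heartbeat without any change to the job.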

Introducing HDFS

HDFS is designed for use by MapReduce jobs that read input in large chunks and write large chunks of output. For reliability, file data is copied to multiple storage nodes (DataNodes); this is referred to as replication in Hadoop.
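A rough model of how HDFS stores a file: the file is cut into fixed-size blocks and each block is copied to several DataNodes. The values in the example (64 MB blocks, replication factor 3) match the defaults of Hadoop from this era, but the round-robin placement is an assumption for illustration; real HDFS uses a rack-aware placement policy. ReplicationSketch and the DataNode names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

/** Toy model of HDFS block splitting and replica placement (round-robin is an assumption). */
final class ReplicationSketch {

    /** Returns, for each block of the file, the DataNodes that hold a replica of it. */
    static List<List<String>> place(long fileSize, long blockSize, int replication, List<String> dataNodes) {
        if (replication > dataNodes.size()) {
            throw new IllegalArgumentException("not enough DataNodes for the replication factor");
        }
        long blocks = (fileSize + blockSize - 1) / blockSize; // the last block may be partial
        List<List<String>> placement = new ArrayList<>();
        for (long b = 0; b < blocks; b++) {
            List<String> replicas = new ArrayList<>();
            for (int r = 0; r < replication; r++) {
                // Consecutive nodes, so replicas of one block are always on distinct nodes.
                replicas.add(dataNodes.get((int) ((b + r) % dataNodes.size())));
            }
            placement.add(replicas);
        }
        return placement;
    }

    public static void main(String[] args) {
        long mb = 1024L * 1024L;
        List<List<String>> p = place(200 * mb, 64 * mb, 3, List.of("dn1", "dn2", "dn3", "dn4"));
        System.out.println(p.size() + " blocks: " + p);
    }
}
```

With three copies of every block, losing any single DataNode still leaves two readable replicas.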

Installing Hadoop

The prerequisites:

1. Fedora 8

2. JDK 1.6

3. Hadoop 0.19 or later

Go to the Hadoop download site at http://www.apache.org/dyn/closer.cgi/hadoop/core/, find the .tar.gz file, download it, and extract it with tar. Then set up the environment: export HADOOP_HOME=[yourdirectory] and export PATH=${HADOOP_HOME}/bin:${PATH}.

Finally, check that everything is set up correctly.

Running examples and tests

This section demonstrates all of the bundled examples. :)

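Chapter 2: The Basics of a MapReduce Job

As this chapter shows, the user is responsible for handling the job setup, specifying the input locations, and specifying the other job parameters listed earlier (output location, input and output formats, and mapper and reducer classes).

Here is a simple example: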

```java
package com.apress.hadoopbook.examples.ch2;

import java.io.IOException;

import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapred.FileInputFormat;
import org.apache.hadoop.mapred.FileOutputFormat;
import org.apache.hadoop.mapred.JobClient;
import org.apache.hadoop.mapred.JobConf;
import org.apache.hadoop.mapred.KeyValueTextInputFormat;
import org.apache.hadoop.mapred.RunningJob;
import org.apache.hadoop.mapred.TextOutputFormat;
import org.apache.hadoop.mapred.lib.IdentityMapper;
import org.apache.hadoop.mapred.lib.IdentityReducer;
import org.apache.log4j.Logger;

/**
 * A very simple MapReduce example that reads textual input where
 * each record is a single line, and sorts all of the input lines into
 * a single output file.
 *
 * The records are parsed into Key and Value using the first TAB
 * character as a separator. If there is no TAB character the entire
 * line is the Key.
 *
 * @author Jason Venner
 */
public class MapReduceIntro {

    protected static Logger logger = Logger.getLogger(MapReduceIntro.class);

    /**
     * Configure and run the MapReduceIntro job.
     *
     * @param args
     *            Not used.
     */
    public static void main(final String[] args) {
        try {
            /* Construct the job conf object that will be used to submit this job
             * to the Hadoop framework. Passing MapReduceIntro.class ensures that
             * the jar or directory containing that class is made available to all
             * of the TaskTracker nodes that will run maps or reduces for this job.
             */
            final JobConf conf = new JobConf(MapReduceIntro.class);

            /* Take care of some housekeeping to ensure that this simple example
             * job will run. MapReduceIntroConfig is a helper class in the same
             * package (not shown here).
             */
            MapReduceIntroConfig.exampleHouseKeeping(conf,
                    MapReduceIntroConfig.getInputDirectory(),
                    MapReduceIntroConfig.getOutputDirectory());

            /* This section is the actual job configuration portion.
             *
             * Configure the inputDirectory and the type of input. In this case
             * we are stating that the input is text, each record is a single
             * line, and the first TAB is the separator between the key and the
             * value of the record.
             */
            conf.setInputFormat(KeyValueTextInputFormat.class);
            FileInputFormat.setInputPaths(conf,
                    MapReduceIntroConfig.getInputDirectory());

            /* Inform the framework that the mapper class will be the
             * IdentityMapper. This class simply passes the input key/value
             * pairs directly to its output, which in our case will be the
             * shuffle.
             */
            conf.setMapperClass(IdentityMapper.class);

            /* Configure the output of the job to go to the output directory.
             * Inform the framework that the output key and value classes will
             * be Text and the output file format will be TextOutputFormat (the
             * default, set explicitly here). TextOutputFormat produces one line
             * of output for each key/value pair: the key, a TAB, the value and
             * a newline.
             *
             * In addition, indicate to the framework that there will be
             * 1 reduce. This results in all input keys being placed into the
             * same, single, partition, and the final output being a single
             * sorted file.
             */
            FileOutputFormat.setOutputPath(conf,
                    MapReduceIntroConfig.getOutputDirectory());
            conf.setOutputKeyClass(Text.class);
            conf.setOutputValueClass(Text.class);
            conf.setOutputFormat(TextOutputFormat.class);
            conf.setNumReduceTasks(1);

            /* Inform the framework that the reducer class will be the
             * IdentityReducer. This class simply writes an output (key, value)
             * record for each value in the key/value set it receives as
             * input. The value ordering is arbitrary.
             */
            conf.setReducerClass(IdentityReducer.class);

            logger.info("Launching the job.");

            /* Send the job configuration to the framework and request that the
             * job be run. runJob() blocks until the job finishes.
             */
            final RunningJob job = JobClient.runJob(conf);
            logger.info("The job has completed.");

            if (!job.isSuccessful()) {
                logger.error("The job failed.");
                System.exit(1);
            }
            logger.info("The job completed successfully.");
            System.exit(0);
        } catch (final IOException e) {
            logger.error("The job has failed due to an IO Exception", e);
            e.printStackTrace();
            // Report the failure through the exit status as well.
            System.exit(1);
        }
    }
}
```

IdentityMapper:

The framework makes one call to your map function for each record in your input. IdentityMapper simply passes each key/value pair through unchanged.

IdentityReducer:

The framework calls the reduce function once for each unique key.

If you require the output of your job to be sorted, the reducer function must pass the key objects to the output.collect() method unchanged. The reduce phase is, however, free to output any number of records, including zero records, with the same key and different values. This particular constraint is also why the map tasks may be multithreaded, while the reduce tasks are explicitly only single-threaded.
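Here is a plain-Java sketch of what happens around an identity reducer, assuming everything runs in one process: values are grouped by key, keys arrive in sorted order, and reduce is called once per unique key. Because the key is passed through unchanged, the output stays sorted. IdentityReduceSketch is a hypothetical name, and values keep their arrival order here only because of the sketch; the framework makes no such promise.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Plain-Java sketch of shuffle + identity reduce (no Hadoop classes). */
final class IdentityReduceSketch {

    /** Identity reduce: one output record per value, with the key passed through unchanged. */
    static void identityReduce(String key, List<String> values, List<String> output) {
        for (String value : values) {
            output.add(key + "\t" + value);
        }
    }

    /** Groups map output by key in sorted order and logs each reduce() invocation. */
    static List<String> shuffleAndReduce(List<Map.Entry<String, String>> mapOutput, List<String> reduceCallLog) {
        TreeMap<String, List<String>> groups = new TreeMap<>();
        for (Map.Entry<String, String> kv : mapOutput) {
            groups.computeIfAbsent(kv.getKey(), k -> new ArrayList<>()).add(kv.getValue());
        }
        List<String> output = new ArrayList<>();
        groups.forEach((key, values) -> {
            reduceCallLog.add(key); // exactly one entry per unique key
            identityReduce(key, values, output);
        });
        return output;
    }

    public static void main(String[] args) {
        List<String> calls = new ArrayList<>();
        List<String> out = shuffleAndReduce(
                List.of(Map.entry("b", "2"), Map.entry("a", "1"), Map.entry("b", "3")), calls);
        System.out.println("reduce calls: " + calls);
        System.out.println("output: " + out);
    }
}
```

If identityReduce() rewrote the key (for example, reversing it), the lines would still be written in the original key order and the output would no longer be sorted by the new keys.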

Specifying the input format:

The available input formats include KeyValueTextInputFormat, TextInputFormat, NLineInputFormat, MultiFileInputFormat, and SequenceFileInputFormat.

KeyValueTextInputFormat and SequenceFileInputFormat are the most commonly used input formats.

Setting the output format:
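The line parsing that KeyValueTextInputFormat performs, as described in the MapReduceIntro comments (split at the first TAB; with no TAB, the whole line is the key), can be sketched in plain Java. This is an illustration rather than Hadoop's reader; returning an empty value for a line without a TAB matches what Hadoop's KeyValueLineRecordReader does. KeyValueLineSketch is a hypothetical name.

```java
import java.util.Map;

/** Plain-Java sketch of KeyValueTextInputFormat-style line parsing. */
final class KeyValueLineSketch {

    /** Split at the first TAB only; later TABs stay in the value. */
    static Map.Entry<String, String> parse(String line) {
        int tab = line.indexOf('\t');
        if (tab < 0) {
            return Map.entry(line, ""); // no separator: whole line is the key, value is empty
        }
        return Map.entry(line.substring(0, tab), line.substring(tab + 1));
    }

    public static void main(String[] args) {
        System.out.println(parse("k1\tv1\tmore"));
        System.out.println(parse("no tab here"));
    }
}
```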
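On the output side, the default TextOutputFormat writes one line per record: the key, a TAB, the value, and a newline. The plain-Java sketch below models that layout. It also mirrors the rule in recent Hadoop versions that a null (or NullWritable) key or value is left out together with the separator. TextOutputSketch is a hypothetical name, not Hadoop's writer.

```java
/** Plain-Java sketch of the line layout produced by TextOutputFormat. */
final class TextOutputSketch {

    static String format(Object key, Object value) {
        boolean nullKey = key == null;
        boolean nullValue = value == null;
        if (nullKey && nullValue) {
            return ""; // nothing is written for the record
        }
        StringBuilder line = new StringBuilder();
        if (!nullKey) {
            line.append(key);          // uses key.toString()
        }
        if (!nullKey && !nullValue) {
            line.append('\t');         // separator only when both parts are present
        }
        if (!nullValue) {
            line.append(value);
        }
        return line.append('\n').toString();
    }

    public static void main(String[] args) {
        System.out.print(format("apple", 3));
        System.out.print(format(null, "value only"));
    }
}
```

A file written this way can be read back with KeyValueTextInputFormat, since both use the first TAB as the key/value boundary.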

Configuring the reduce phase:

The framework needs five pieces of information:

The number of reduce tasks;

The class supplying the reduce method;

The input key and value types for the reduce task;

The output key and value types for the reduce task;

The output file type for the reduce task output.
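The number of reduce tasks decides how map output keys are divided among reducers. Hadoop's default HashPartitioner assigns a key to a partition with the formula shown in this plain-Java sketch (PartitionSketch is a hypothetical wrapper). With a single reduce task every key lands in partition 0, which is why MapReduceIntro produces one sorted file.

```java
/** Plain-Java version of the default hash-partitioning formula. */
final class PartitionSketch {

    static int partition(Object key, int numReduceTasks) {
        // Clear the sign bit so negative hash codes still give a valid partition index.
        return (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks;
    }

    public static void main(String[] args) {
        for (String key : new String[] {"apple", "banana", "cherry"}) {
            System.out.println(key + " -> reducer " + partition(key, 3));
        }
    }
}
```

Every record with the same key hashes to the same partition, so one reducer sees all values for that key.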

Creating a custom mapper and reducer

As you've seen, your first Hadoop job produced sorted output, but the sort order was not suitable: the keys were compared as text rather than as numbers. Let's work out what is required to sort numerically, using a custom mapper.

Creating a custom mapper:

You must change your configuration and provide a custom mapper class. This is done with two calls on the JobConf object:

conf.setOutputKeyClass(xxx.class): informs the framework of the new output key type;

conf.setMapperClass(TransformKeysToLongMapper.class): informs the framework of the new mapper class.

The mapper class is declared as follows:

```java
/** Transform the input Text, Text key/value
 * pairs into LongWritable, Text key/value pairs.
 */
public class TransformKeysToLongMapper
        extends MapReduceBase implements Mapper<Text, Text, LongWritable, Text> {
    ...
}
```
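The point of converting keys is the sort order. Text keys are compared as bytes, so "10" sorts before "9", while LongWritable keys compare numerically. The plain-Java sketch below contrasts the two orders; it is not TransformKeysToLongMapper itself, KeySortSketch is a hypothetical name, and skipping non-numeric keys is an assumption about how bad input might be handled.

```java
import java.util.ArrayList;
import java.util.List;

/** Plain-Java illustration of text ordering versus numeric ordering of keys. */
final class KeySortSketch {

    /** Text-style order: character by character, so "10" comes before "2". */
    static List<String> sortAsText(List<String> keys) {
        List<String> sorted = new ArrayList<>(keys);
        sorted.sort(null); // natural String order
        return sorted;
    }

    /** Parse each key as a long first, as a key-transforming mapper would; assumption: skip bad keys. */
    static List<Long> sortAsLong(List<String> keys) {
        List<Long> sorted = new ArrayList<>();
        for (String key : keys) {
            try {
                sorted.add(Long.parseLong(key.trim()));
            } catch (NumberFormatException e) {
                // not a number: dropped in this sketch
            }
        }
        sorted.sort(null); // natural Long order
        return sorted;
    }

    public static void main(String[] args) {
        List<String> keys = List.of("9", "10", "2");
        System.out.println("as text: " + sortAsText(keys)); // as text: [10, 2, 9]
        System.out.println("as long: " + sortAsLong(keys)); // as long: [2, 9, 10]
    }
}
```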

Creating a custom reducer:

After your work with the custom mapper in the preceding sections, creating a custom reducer will seem familiar.

Add the following single line to the job configuration:

```java
conf.setReducerClass(MergeValuesToCSVReducer.class);
```

The reducer class is declared as follows:

```java
public class MergeValuesToCSVReducer<K, V>
        extends MapReduceBase implements Reducer<K, V, K, Text> {
    ...
}
```
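The per-key job of a reducer like MergeValuesToCSVReducer is to turn all the values for one key into a single comma-separated Text value. The plain-Java sketch below is an assumption about that logic, not the book's implementation; the RFC 4180-style quoting of fields that contain commas, quotes, or newlines is an added assumption, and CsvMergeSketch is a hypothetical name.

```java
import java.util.List;
import java.util.StringJoiner;

/** Plain-Java sketch of the per-key merge a CSV-building reducer could perform. */
final class CsvMergeSketch {

    /** Assumed quoting: wrap fields containing a comma, quote or newline; double inner quotes. */
    static String quote(String field) {
        if (field.contains(",") || field.contains("\"") || field.contains("\n")) {
            return "\"" + field.replace("\"", "\"\"") + "\"";
        }
        return field;
    }

    /** Called once per key with all of that key's values; returns one comma-separated value. */
    static String merge(Iterable<?> values) {
        StringJoiner csv = new StringJoiner(",");
        for (Object value : values) {
            csv.add(quote(String.valueOf(value))); // V is generic, so use its string form
        }
        return csv.toString();
    }

    public static void main(String[] args) {
        System.out.println(merge(List.of("a", "b,c", "say \"hi\"")));
    }
}
```

The output key type K matches the input key type, which keeps the key unchanged and so preserves the sorted order of the job output.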

Why do the mapper and reducer extend MapReduceBase?

The class provides basic implementations of two additional methods that the framework requires of a mapper or reducer:

```java
/** Default implementation that does nothing. */
public void close() throws IOException {
}

/** Default implementation that does nothing. */
public void configure(JobConf job) {
}
```

The configure() method is the way to access the JobConf; the framework calls it before any records are processed, so it is the place to read job parameters.

The close() method is the place to release resources and do any other cleanup after the last record.
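The call order that configure() and close() fit into can be modeled without Hadoop: configure() runs once before any records, map() once per record, and close() once at the end. In this hypothetical sketch a Map<String, String> stands in for JobConf, TaskBase plays the role of MapReduceBase, and the parameter name "example.prefix" is invented for the example.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

/** Plain-Java model of the task lifecycle; all names are hypothetical stand-ins. */
final class LifecycleDemo {

    /** Plays the role of MapReduceBase: do-nothing defaults for configure() and close(). */
    abstract static class TaskBase {
        /** Default implementation that does nothing (a Map stands in for JobConf). */
        void configure(Map<String, String> conf) {
        }

        /** Default implementation that does nothing. */
        void close() {
        }

        abstract void map(String record, List<String> output);
    }

    /** A mapper that reads a job parameter in configure() before any records arrive. */
    static final class PrefixMapper extends TaskBase {
        private String prefix = "";
        private int seen = 0;

        @Override
        void configure(Map<String, String> conf) {
            prefix = conf.getOrDefault("example.prefix", ""); // hypothetical parameter
        }

        @Override
        void map(String record, List<String> output) {
            seen++;
            output.add(prefix + record);
        }

        @Override
        void close() {
            // A real task would release files, connections, etc. here.
            System.out.println("processed " + seen + " records");
        }
    }

    /** Drives the lifecycle: configure() once, map() per record, close() once. */
    static List<String> runTask(TaskBase task, Map<String, String> conf, List<String> records) {
        List<String> output = new ArrayList<>();
        task.configure(conf);
        try {
            for (String record : records) {
                task.map(record, output);
            }
        } finally {
            task.close(); // runs even if map() throws
        }
        return output;
    }

    public static void main(String[] args) {
        System.out.println(runTask(new PrefixMapper(), Map.of("example.prefix", "x-"), List.of("a", "b")));
    }
}
```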

The Makeup of a Cluster

In the context of Hadoop, a node/machine running the TaskTracker or DataNode server is considered a slave node. It is common to have nodes that run both the TaskTracker and DataNode servers. The Hadoop server processes on the slave nodes are controlled by their respective masters, the JobTracker and NameNode servers.
