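I have recently been learning Python and have read several books on getting started with Python and on natural language processing with Python, including 《Python编程实践》, 《Python基础教程》 (2nd edition), and 《Python自然语言处理》 (reprint edition). I came from Java and was used to thinking in an object-oriented way, so when I first looked at Python's syntax I found it too loose, awkward, and somewhat informal. Python itself, for that matter, has been moving toward object orientation (OO). Later, though, I saw how powerful Python's libraries are, especially the NLTK support for natural language processing, and I gradually changed my mind. I have to admit that Python is concise and clear and easy to pick up; anyone with programming experience can quickly write a program for a given application. Below are some notes from my own learning, shared here.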

Python NLP in Practice, Part 1: Environment Setup

The software and resources to download and install are:

- Python

- PyYAML

- NLTK

- NLTK-Data

- NumPy

- Matplotlib

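(1) Download locations and versions:

- Python: http://www.python.org/getit/releases/2.7.2/ Version: Python 2.7.2 (Note: the current release is 2.7.3. Python 3.3 has already been released; I chose 2.7 because 2.x is more stable and is compatible with more third-party software. The Python website advises: if you don't know which version to use, start with 2.7!)

- PyYAML: http://pypi.python.org/pypi/PyYAML/ Version: PyYAML 3.10 Purpose: YAML parser

- NLTK: http://www.nltk.org Version: nltk-2.0.1 Purpose: natural language toolkit

- NumPy: http://pypi.python.org/pypi/numpy Version: numpy 1.6.1 Purpose: multidimensional arrays and linear algebra

- Matplotlib: http://sourceforge.net/projects/matplotlib/files/matplotlib/matplotlib-1.1.0/ Version: matplotlib-1.1.0 Purpose: 2D plotting library for data visualization

All of them are simple to install. I installed them on Windows.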
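Once everything is installed, a quick import check can confirm that each package is visible to Python. The following is only a sketch: `check_packages` is a helper name made up for this post, and the module names (`yaml` for PyYAML, `nltk`, `numpy`, `matplotlib`) are the usual import names, which I am assuming match your installation.

```python
import importlib


def check_packages(module_names):
    """Try to import each module; map its name to a version string or None."""
    report = {}
    for name in module_names:
        try:
            module = importlib.import_module(name)
        except ImportError:
            report[name] = None  # not installed, or not importable
        else:
            # Not every module defines __version__, so fall back to a label.
            report[name] = getattr(module, "__version__", "unknown version")
    return report
```

Calling `check_packages(["yaml", "nltk", "numpy", "matplotlib"])` returns a dictionary in which any package that failed to import maps to `None`.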

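(2) Running Python IDLE

After installing Python, launch IDLE, Python's integrated development environment: Start -> All Programs -> Python 2.7 -> IDLE (Python GUI). A new window opens and shows the following information, which indicates that the installation succeeded.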

Python 2.7.2 (default, Jun 12 2011, 15:08:59) [MSC v.1500 32 bit (Intel)] on win32

Type "copyright", "credits" or "license()" for more information.

>>>

(3) Downloading the NLTK data packages

Next, import the NLTK package and then download the NLTK data.

>>> import nltk

>>> nltk.download()

Note: if importing NLTK produces the following message, PyYAML is not installed.

>>> import nltk

Traceback (most recent call last):

File "<pyshell#0>", line 1, in <module>

import nltk

File "C:\Python27\lib\site-packages\nltk\__init__.py", line 107, in <module>

from yamltags import *

File "C:\Python27\lib\site-packages\nltk\yamltags.py", line 10, in <module>

import yaml

ImportError: No module named yaml

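After downloading and installing PyYAML from the address listed in (1), reopen Python IDLE, import NLTK, and run nltk.download(). My session showed a text-mode prompt; the book and some people online report a graphical interface instead. Either one should work.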

Python 2.7.2 (default, Jun 12 2011, 15:08:59) [MSC v.1500 32 bit (Intel)] on win32

Type "copyright", "credits" or "license()" for more information.

>>> import nltk

>>> nltk.download()

NLTK Downloader

---------------------------------------------------------------------------

d) Download l) List u) Update c) Config h) Help q) Quit

---------------------------------------------------------------------------

Downloader>

Choose d) Download by typing d, then type l, and press Enter a few times as prompted. This lists the data packages that are available for download.

Downloader> d

Download which package (l=list; x=cancel)?

Identifier> l

Packages:

[ ] maxent_ne_chunker... ACE Named Entity Chunker (Maximum entropy)

[ ] abc................. Australian Broadcasting Commission 2006

[ ] alpino.............. Alpino Dutch Treebank

[ ] biocreative_ppi..... BioCreAtIvE (Critical Assessment of Information

Extraction Systems in Biology)

[ ] brown_tei........... Brown Corpus (TEI XML Version)

[ ] cess_esp............ CESS-ESP Treebank

[ ] chat80.............. Chat-80 Data Files

[ ] brown............... Brown Corpus

[ ] cmudict............. The Carnegie Mellon Pronouncing Dictionary (0.6)

[ ] city_database....... City Database

[ ] cess_cat............ CESS-CAT Treebank

[ ] comtrans............ ComTrans Corpus Sample

[ ] conll2002........... CONLL 2002 Named Entity Recognition Corpus

[ ] conll2007........... Dependency Treebanks from CoNLL 2007 (Catalan

and Basque Subset)

[ ] europarl_raw........ Sample European Parliament Proceedings Parallel

Corpus

[ ] dependency_treebank. Dependency Parsed Treebank

[ ] conll2000........... CONLL 2000 Chunking Corpus

Hit Enter to continue:

[ ] floresta............ Portuguese Treebank

[ ] names............... Names Corpus, Version 1.3 (1994-03-29)

[ ] gazetteers.......... Gazeteer Lists

[ ] genesis............. Genesis Corpus

[ ] gutenberg........... Project Gutenberg Selections

[ ] inaugural........... C-Span Inaugural Address Corpus

[ ] jeita............... JEITA Public Morphologically Tagged Corpus (in

ChaSen format)

[ ] movie_reviews....... Sentiment Polarity Dataset Version 2.0

[ ] ieer................ NIST IE-ER DATA SAMPLE

[ ] nombank.1.0......... NomBank Corpus 1.0

[ ] indian.............. Indian Language POS-Tagged Corpus

[ ] paradigms........... Paradigm Corpus

[ ] kimmo............... PC-KIMMO Data Files

[ ] knbc................ KNB Corpus (Annotated blog corpus)

[ ] langid.............. Language Id Corpus

[ ] mac_morpho.......... MAC-MORPHO: Brazilian Portuguese news text with

part-of-speech tags

[ ] machado............. Machado de Assis -- Obra Completa

[ ] pe08................ Cross-Framework and Cross-Domain Parser

Evaluation Shared Task

Hit Enter to continue:

[ ] pl196x.............. Polish language of the XX century sixties

[ ] pil................. The Patient Information Leaflet (PIL) Corpus

[ ] nps_chat............ NPS Chat

[ ] reuters............. The Reuters-21578 benchmark corpus, ApteMod

version

[ ] qc.................. Experimental Data for Question Classification

[ ] rte................. PASCAL RTE Challenges 1, 2, and 3

[ ] ppattach............ Prepositional Phrase Attachment Corpus

[ ] propbank............ Proposition Bank Corpus 1.0

[ ] problem_reports..... Problem Report Corpus

[ ] sinica_treebank..... Sinica Treebank Corpus Sample

[ ] verbnet............. VerbNet Lexicon, Version 2.1

[ ] state_union......... C-Span State of the Union Address Corpus

[ ] semcor.............. SemCor 3.0

[ ] senseval............ SENSEVAL 2 Corpus: Sense Tagged Text

[ ] smultron............ SMULTRON Corpus Sample

[ ] shakespeare......... Shakespeare XML Corpus Sample

[ ] stopwords........... Stopwords Corpus

[ ] swadesh............. Swadesh Wordlists

[ ] switchboard......... Switchboard Corpus Sample

[ ] toolbox............. Toolbox Sample Files

Hit Enter to continue:

[ ] unicode_samples..... Unicode Samples

[ ] webtext............. Web Text Corpus

[ ] timit............... TIMIT Corpus Sample

[ ] ycoe................ York-Toronto-Helsinki Parsed Corpus of Old

English Prose

[ ] treebank............ Penn Treebank Sample

[ ] udhr................ Universal Declaration of Human Rights Corpus

[ ] sample_grammars..... Sample Grammars

[ ] book_grammars....... Grammars from NLTK Book

[ ] spanish_grammars.... Grammars for Spanish

[ ] wordnet............. WordNet

[ ] wordnet_ic.......... WordNet-InfoContent

[ ] words............... Word Lists

[ ] tagsets............. Help on Tagsets

[ ] basque_grammars..... Grammars for Basque

[ ] large_grammars...... Large context-free and feature-based grammars

for parser comparison

[ ] maxent_treebank_pos_tagger Treebank Part of Speech Tagger (Maximum entropy)

[ ] rslp................ RSLP Stemmer (Removedor de Sufixos da Lingua

Portuguesa)

[ ] hmm_treebank_pos_tagger Treebank Part of Speech Tagger (HMM)

Hit Enter to continue:

[ ] punkt............... Punkt Tokenizer Models

Collections:

[ ] all-corpora......... All the corpora

[ ] all................. All packages

[ ] book................ Everything used in the NLTK Book

([*] marks installed packages)

You can type all-corpora, all, or book; I chose all. Keep the network connection up, because the download may take a while. The output looks like this:

Download which package (l=list; x=cancel)?

Identifier> all

Downloading collection 'all'

|

| Downloading package 'abc' to C:\Documents and

| Settings\lenovo\Application Data\nltk_data...

| Unzipping corpora\abc.zip.

| Downloading package 'alpino' to C:\Documents and

| Settings\lenovo\Application Data\nltk_data...

| Unzipping corpora\alpino.zip.

| Downloading package 'biocreative_ppi' to C:\Documents and

| Settings\lenovo\Application Data\nltk_data...

| Unzipping corpora\biocreative_ppi.zip.

| Downloading package 'brown' to C:\Documents and

| Settings\lenovo\Application Data\nltk_data...

| Unzipping corpora\brown.zip.

| Downloading package 'brown_tei' to C:\Documents and

| Settings\lenovo\Application Data\nltk_data...

| Unzipping corpora\brown_tei.zip.

| Downloading package 'cess_cat' to C:\Documents and

| Settings\lenovo\Application Data\nltk_data...

| Unzipping corpora\cess_cat.zip.

| Downloading package 'cess_esp' to C:\Documents and

| Settings\lenovo\Application Data\nltk_data...

| Unzipping corpora\cess_esp.zip.

| Downloading package 'chat80' to C:\Documents and

...

...

...

| Downloading package 'book_grammars' to C:\Documents and

| Settings\lenovo\Application Data\nltk_data...

| Unzipping grammars\book_grammars.zip.

| Downloading package 'sample_grammars' to C:\Documents and

| Settings\lenovo\Application Data\nltk_data...

| Unzipping grammars\sample_grammars.zip.

| Downloading package 'spanish_grammars' to C:\Documents and

| Settings\lenovo\Application Data\nltk_data...

| Unzipping grammars\spanish_grammars.zip.

| Downloading package 'basque_grammars' to C:\Documents and

| Settings\lenovo\Application Data\nltk_data...

| Unzipping grammars\basque_grammars.zip.

| Downloading package 'large_grammars' to C:\Documents and

| Settings\lenovo\Application Data\nltk_data...

| Unzipping grammars\large_grammars.zip.

| Downloading package 'tagsets' to C:\Documents and

| Settings\lenovo\Application Data\nltk_data...

|

Done downloading collection 'all'

---------------------------------------------------------------------------

d) Download l) List u) Update c) Config h) Help q) Quit

---------------------------------------------------------------------------

Downloader>

Type q to leave the downloader.

Downloader> q

True
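The interactive session above can also be scripted, since `nltk.download()` accepts a package or collection identifier (and an optional `download_dir`) as arguments. The sketch below is an assumption-laden illustration rather than anything from the book: `fetch_collections` is a hypothetical helper, and the `download` parameter exists only so the function can be tried without a network connection.

```python
def fetch_collections(names, download=None, target_dir=None):
    """Download each named package or collection; map name -> success flag."""
    if download is None:
        # Imported lazily so the helper can be defined without NLTK installed.
        import nltk
        download = nltk.download
    results = {}
    for name in names:
        # download_dir is only passed when a custom directory was requested.
        kwargs = {} if target_dir is None else {"download_dir": target_dir}
        results[name] = bool(download(name, **kwargs))
    return results
```

For example, `fetch_collections(["book"])` would request the same `book` collection that appears in the list above.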

Note 1: help is available at any point in this process through h) Help; type h. The output is:

Downloader> h

Commands:

d) Download a package or collection u) Update out of date packages

l) List packages & collections h) Help

c) View & Modify Configuration q) Quit

---------------------------------------------------------------------------

d) Download l) List u) Update c) Config h) Help q) Quit

---------------------------------------------------------------------------

Downloader>

Note 2: before downloading, you can choose the local download path. Choose c) Config by typing c to enter the Config menu, which shows the default download path:

Downloader> c

Data Server:

- URL: <http://nltk.googlecode.com/svn/trunk/nltk_data/index.xml>

- 3 Package Collections Available

- 74 Individual Packages Available

Local Machine:

- Data directory: C:\Documents and Settings\lenovo\Application Data\nltk_data

---------------------------------------------------------------------------

s) Show Config u) Set Server URL d) Set Data Dir m) Main Menu

---------------------------------------------------------------------------

Config>

Choose d) Set Data Dir by typing d, then enter the new download path:

Config> d

New Directory> D:\nltk_data

---------------------------------------------------------------------------

s) Show Config u) Set Server URL d) Set Data Dir m) Main Menu

---------------------------------------------------------------------------

Config>

If you do not want to change the download path, cancel out of the prompt instead:

New Directory> q

Cancelled!

---------------------------------------------------------------------------

s) Show Config u) Set Server URL d) Set Data Dir m) Main Menu

---------------------------------------------------------------------------

Config>

Return to the main menu:

Config> m

---------------------------------------------------------------------------

d) Download l) List u) Update c) Config h) Help q) Quit

---------------------------------------------------------------------------

Downloader>

Exit the downloader:

Downloader> q

True
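If the data ends up in a non-default directory such as D:\nltk_data, NLTK also has to be told where to look when loading it. As far as I know there are two standard ways: the `NLTK_DATA` environment variable, which NLTK reads when `nltk.data` is first imported, and the `nltk.data.path` list, which can be extended at run time. The helper name `use_data_dir` below is made up for illustration.

```python
import os


def use_data_dir(path, environ=os.environ):
    """Point NLTK at a custom data directory (hypothetical helper)."""
    # Only affects NLTK if it is set before nltk.data is first imported.
    environ["NLTK_DATA"] = path
    try:
        import nltk.data
    except ImportError:
        return False  # NLTK is not installed; only the variable was set
    # Covers the case where NLTK had already been imported earlier.
    if path not in nltk.data.path:
        nltk.data.path.append(path)
    return True
```

A call such as `use_data_dir(r"D:\nltk_data")` at the top of a script, before any corpus is loaded, should be enough.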

Note 3: if the steps above do not work, you can download the data packages directly from http://nltk.googlecode.com/svn/trunk/nltk_data/index.xml and place them in the download directory.
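For a manually downloaded archive, unpacking can be done with the standard `zipfile` module. This is a sketch under an assumption: judging by the log above ("Unzipping corpora\abc.zip", "Unzipping grammars\book_grammars.zip"), each package is unpacked inside a category subfolder such as `corpora` or `grammars` of the data directory. `install_package_zip` is a hypothetical helper name.

```python
import os
import zipfile


def install_package_zip(zip_path, data_dir, category="corpora"):
    """Unpack a manually downloaded package archive under data_dir/category."""
    target = os.path.join(data_dir, category)
    if not os.path.isdir(target):
        os.makedirs(target)
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(target)  # e.g. creates data_dir/corpora/abc/
    return target
```

Which category a package belongs to is something you would need to read off the downloader log or the index file; the default of `corpora` is only a guess for the common case.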

(4) Testing the NLTK data

Import everything from the nltk.book module:

>>> from nltk.book import *

The following output indicates that the NLTK data loaded successfully.

>>> from nltk.book import *

*** Introductory Examples for the NLTK Book ***

Loading text1, ..., text9 and sent1, ..., sent9

Type the name of the text or sentence to view it.

Type: 'texts()' or 'sents()' to list the materials.

text1: Moby Dick by Herman Melville 1851

text2: Sense and Sensibility by Jane Austen 1811

text3: The Book of Genesis

text4: Inaugural Address Corpus

text5: Chat Corpus

text6: Monty Python and the Holy Grail

text7: Wall Street Journal

text8: Personals Corpus

text9: The Man Who Was Thursday by G . K . Chesterton 1908

>>>

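(5) Starting NLP work

Run an example from 《Python自然语言处理》 (reprint edition): search for the sentences that contain "monstrous", with the query word centered in each line: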

>>> text1.concordance('monstrous')

Building index...

Displaying 11 of 11 matches:

ong the former , one was of a most monstrous size . ... This came towards us ,

ON OF THE PSALMS . " Touching that monstrous bulk of the whale or ork we have r

ll over with a heathenish array of monstrous clubs and spears . Some were thick

d as you gazed , and wondered what monstrous cannibal and savage could ever hav

that has survived the flood ; most monstrous and most mountainous ! That Himmal

they might scout at Moby Dick as a monstrous fable , or still worse and more de

th of Radney .'" CHAPTER 55 Of the Monstrous Pictures of Whales . I shall ere l

ing Scenes . In connexion with the monstrous pictures of whales , I am strongly

ere to enter upon those still more monstrous stories of them which are to be fo

ght have been rummaged out of this monstrous cabinet there is no telling . But

of Whale - Bones ; for Whales of a monstrous size are oftentimes cast up dead u

>>>
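To get a feel for what a concordance view does, here is a plain-Python sketch of keyword-in-context display. It is not NLTK's implementation; `concordance_lines` and its `width` parameter are names I made up, and it works on any list of tokens.

```python
def concordance_lines(tokens, keyword, width=30):
    """Return keyword-in-context lines with the keyword aligned in one column."""
    keyword = keyword.lower()
    lines = []
    for i, tok in enumerate(tokens):
        if tok.lower() != keyword:
            continue
        # `width` tokens always cover at least `width` characters of context.
        left = " ".join(tokens[max(0, i - width):i])[-width:]
        right = " ".join(tokens[i + 1:i + 1 + width])[:width]
        # Right-justify the left context so every match starts at the same column.
        lines.append("%s %s %s" % (left.rjust(width), tok, right))
    return lines
```

Since `text1` behaves like a list of tokens, `concordance_lines(list(text1), "monstrous")` should produce lines similar in spirit to the output above.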

Here are a few more examples.

To view information about a text in the corpus, just type its name:

>>> text1

<Text: Moby Dick by Herman Melville 1851>

>>> text2

<Text: Sense and Sensibility by Jane Austen 1811>

Find words that are used similarly to the query word:

>>> text1.similar('monstrous')

Building word-context index...

abundant candid careful christian contemptible curious delightfully

determined doleful domineering exasperate fearless few gamesome

horrible impalpable imperial lamentable lazy loving

>>> text2.similar('monstrous')

Building word-context index...

very exceedingly heartily so a amazingly as extremely good great

remarkably sweet vast
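The idea behind this kind of similarity is that two words are alike if they appear in the same surrounding contexts. The sketch below illustrates that idea with (previous word, next word) pairs; it is a simplification written for this post, not NLTK's own scoring, and `similar_words` is a hypothetical name.

```python
from collections import Counter, defaultdict


def similar_words(tokens, word, top_n=20):
    """Rank other words by how many (previous, next) contexts they share with `word`."""
    tokens = [t.lower() for t in tokens]
    word = word.lower()
    contexts = defaultdict(set)  # word -> set of (previous, next) pairs
    for prev, cur, nxt in zip(tokens, tokens[1:], tokens[2:]):
        contexts[cur].add((prev, nxt))
    target = contexts.get(word, set())
    scores = Counter()
    for other, ctxs in contexts.items():
        shared = len(ctxs & target)
        if other != word and shared:
            scores[other] = shared
    return [w for w, _ in scores.most_common(top_n)]
```

This also suggests why the two texts give such different answers: in text2 "monstrous" shows up in the contexts where intensifiers such as "very" appear.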

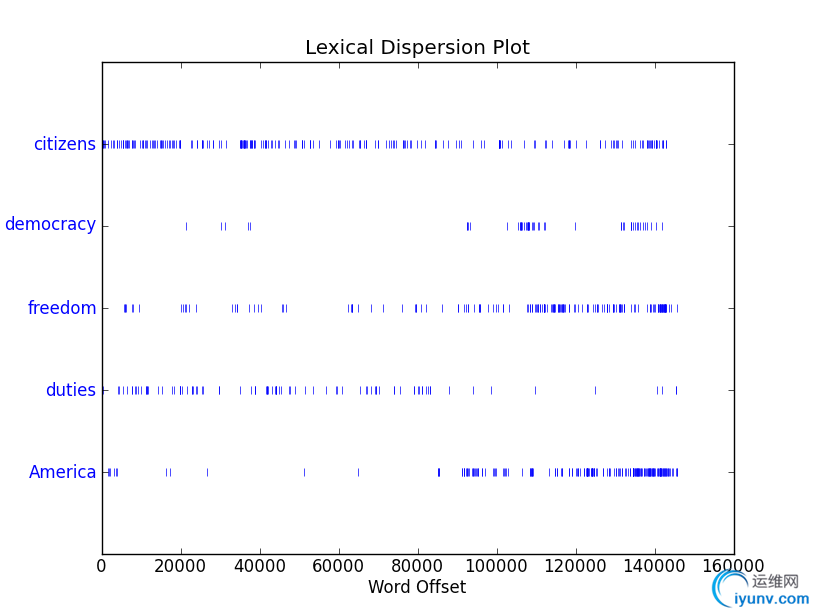

查看词语的分散度图:

>>> text4.dispersion_plot(['citizens','democracy','freedom','duties','America'])

Traceback (most recent call last):

File "<pyshell#12>", line 1, in <module>

text4.dispersion_plot(['citizens','democracy','freedom','duties','America'])

File "C:\Python27\lib\site-packages\nltk\text.py", line 454, in dispersion_plot

dispersion_plot(self, words)

File "C:\Python27\lib\site-packages\nltk\draw\dispersion.py", line 25, in

dispersion_plot

raise ValueError('The plot function requires the matplotlib package (aka pylab).'

ValueError: The plot function requires the matplotlib package (aka pylab).See

http://matplotlib.sourceforge.net/

>>>

注意到这里出错了,是因为找不到画图的工具包。按照提示,从(一)中所列的网站上下载、安装Matplotlib即可。我安装了NumPy和Matplotlib。显示如下图:
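A dispersion plot simply marks the position (token offset) of every occurrence of each word. The sketch below separates the data from the drawing so that the first part runs without Matplotlib. Both function names are made up for illustration, and the plotting part is an approximation of the figure, not NLTK's code.

```python
def dispersion_points(tokens, words):
    """Return (offset, row) pairs: where each word occurs and which row it belongs to."""
    rows = dict((word, row) for row, word in enumerate(words))
    return [(offset, rows[tok]) for offset, tok in enumerate(tokens) if tok in rows]


def plot_dispersion(tokens, words):
    """Draw the points with Matplotlib (imported lazily; needs a display)."""
    import matplotlib.pyplot as plt
    points = dispersion_points(tokens, words)
    xs = [offset for offset, _ in points]
    ys = [row for _, row in points]
    plt.plot(xs, ys, "b|")  # one blue vertical tick per occurrence
    plt.yticks(list(range(len(words))), words)
    plt.ylim(-1, len(words))
    plt.xlabel("Word offset")
    plt.title("Lexical dispersion plot")
    plt.show()
```

Note that the match here is case-sensitive, so 'America' and 'america' would be counted as different words.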

A text generation example:

>>> text3.generate()

Building ngram index...

In the six hundredth year of Noah , and Epher , and I put the stone

from the field , And Ophir , and laid him on the morrow , that thou

dost overtake them , and herb yielding seed after him that curseth

thee , of a tree yielding fruit after his kind , cattle , and spread

his tent , and Abimael , and Lot went out . The LORD God had taken

from our father is in the inn , he gathered up his hand . And say ye

moreover , Behold , I know that my
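Output like this comes from an n-gram model: each new word is chosen based on the words just before it. As a rough illustration only, here is a much simpler bigram random walk. It is not the model NLTK uses, and `generate_text` with its `length` and `seed` parameters is a hypothetical helper.

```python
import random
from collections import defaultdict


def generate_text(tokens, length=20, seed=None):
    """Random walk over bigrams: each next word followed the current one somewhere in the source."""
    if not tokens:
        return []
    rng = random.Random(seed)
    followers = defaultdict(list)  # word -> words seen right after it
    for cur, nxt in zip(tokens, tokens[1:]):
        followers[cur].append(nxt)
    word = tokens[0]
    output = [word]
    # Stop early if the current word was never followed by anything.
    while len(output) < length and followers.get(word):
        word = rng.choice(followers[word])
        output.append(word)
    return output
```

Something like `" ".join(generate_text(list(text3), length=50))` gives text that is locally plausible but globally meaningless, much like the sample above.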

Count the tokens in a text:

>>> len(text3)

44764

List the word types (distinct tokens) in a text in ascending sorted order; len(set(text3)) gives their count:

>>> sorted(set(text3))

['!', "'", '(', ')', ',', ',)', '.', '.)', ':', ';', ';)', '?', '?)', 'A', 'Abel', 'Abelmizraim', 'Abidah', 'Abide', 'Abimael', 'Abimelech', 'Abr', 'Abrah', 'Abraham', 'Abram', 'Accad', 'Achbor', 'Adah', 'Adam', 'Adbeel', 'Admah', 'Adullamite', 'After', 'Aholibamah', 'Ahuzzath', 'Ajah', 'Akan', 'All', 'Allonbachuth', 'Almighty', 'Almodad', 'Also', 'Alvah', 'Alvan', 'Am', 'Amal', 'Amalek', 'Amalekites', 'Ammon', 'Amorite',...]
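Token and type counts are often combined into a single number that says how many times each distinct word is used on average. A minimal sketch, with `vocabulary_stats` being a name I made up:

```python
def vocabulary_stats(tokens):
    """Count tokens and types, and the average number of uses per type."""
    types = set(tokens)
    return {
        "tokens": len(tokens),
        "types": len(types),
        # float() keeps the division exact under Python 2 as well.
        "tokens_per_type": float(len(tokens)) / len(types) if types else 0.0,
    }
```

Punctuation marks count as tokens here, just as they do in the sorted list above.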

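That completes the preparation. From here on we can start on all kinds of NLP work, in particular natural language processing for Chinese, to which 《Python自然语言处理》 (reprint edition) does not devote any dedicated coverage. It looks like we will have to work that part out ourselves.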
