Cloud in Action: Install and Deploy the Self-Service Networks for OpenStack

薛国锋 xueguofeng2011@gmail.com

Last week we deployed the simplest OpenStack architecture with Networking Option 1, which supports only layer-2 services and VLAN segmentation of networks: http://8493144.blog.51cto.com/8483144/1975230

This time we are going to deploy Networking Option 2, which enables self-service networking using overlay segmentation methods such as VXLAN and provides layer-3 services such as routing and NAT, as well as the foundation for advanced services such as LBaaS and FWaaS. We adopt the same physical network design as last time, but with different software installation and configuration.

Theory of Self-Service Networks

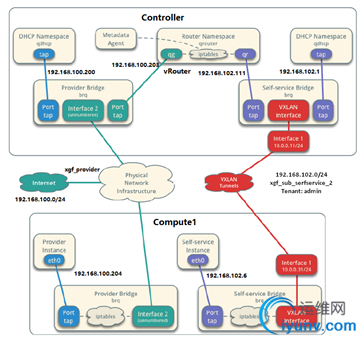

The provider network, such as “xgf_provider”, includes a DHCP server and connects to the Internet directly. When a VM is launched in the provider network, it gets an IP address such as 192.168.100.204 from the DHCP server (192.168.100.0/24) and can then reach the Internet.

The self-service network, such as “xgf_sub_selfservice_2”, is built on VXLAN and includes its own DHCP server. It connects to the provider network and the Internet through a vRouter that provides the NAT service (uplink to the provider network: 192.168.100.203; downlink to the self-service network: 192.168.102.111). Instances launched in this self-service network get an IP address such as 192.168.102.6 from the DHCP server (192.168.102.0/24) and can then reach the Internet.
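As a quick sanity check of the address plan above, the sketch below tests whether an address falls inside a given subnet. It uses only POSIX shell arithmetic and does not talk to OpenStack. The helper names `ip_to_int` and `ip_in_cidr` are invented for this illustration.

```shell
# Convert a dotted-quad IPv4 address into a single integer.
# The body runs in a subshell so that changing IFS does not leak out.
ip_to_int() (
  IFS=.
  set -- $1            # split "a.b.c.d" into $1 $2 $3 $4
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
)

# Succeed (exit status 0) if address $1 lies inside CIDR block $2,
# for example: ip_in_cidr 192.168.102.6 192.168.102.0/24
ip_in_cidr() (
  addr=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")     # part before the slash
  prefix=${2#*/}                 # part after the slash
  mask=$(( (4294967295 << (32 - $prefix)) & 4294967295 ))
  [ $(( $addr & $mask )) -eq $(( $net & $mask )) ]
)

# Example with the addresses used in this post:
#   ip_in_cidr 192.168.100.204 192.168.100.0/24 && echo "provider VM is in range"
#   ip_in_cidr 192.168.102.6   192.168.102.0/24 && echo "self-service VM is in range"
```

The same check can be applied to the router ports (192.168.100.203 and 192.168.102.111) to confirm that each one sits in the subnet it is supposed to serve.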

In this test, we use the IP addresses of the management interfaces (10.0.0.11, 10.0.0.31 and 10.0.0.32) as the VXLAN tunnel endpoints, so the overlay traffic is carried over the management network.

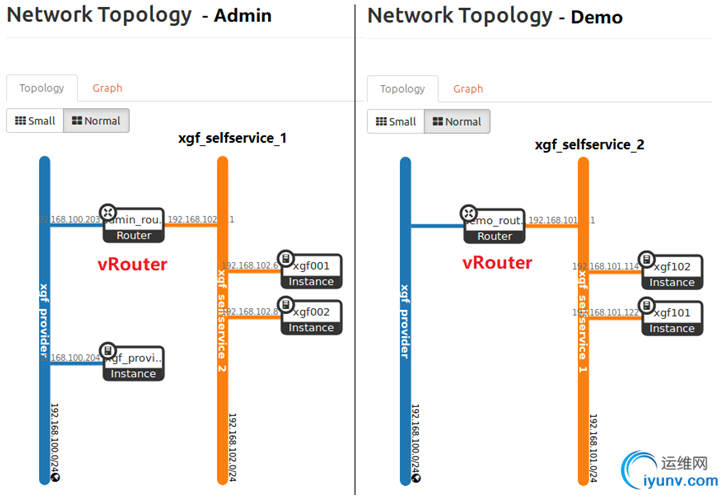

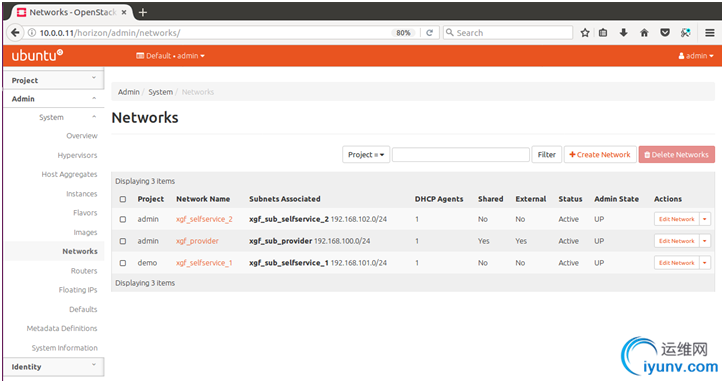

The logical design and configuration for self-service networks in this test:

The provider network: xgf_provider;

The self-service network of Demo: xgf_sub_selfservice_1;

The self-service network of Admin: xgf_sub_selfservice_2;

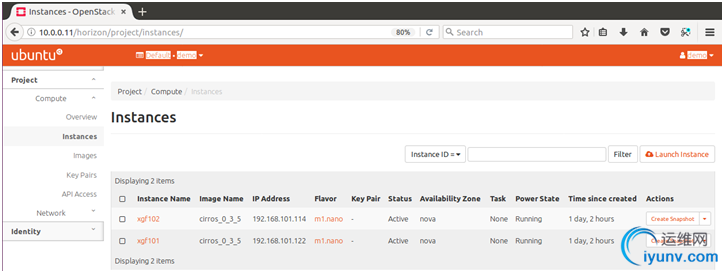

Admin launches VMs in both xgf_provider and xgf_sub_selfservice_2;

Demo launches VMs in xgf_sub_selfservice_1;

Software Installation and Configuration

Please refer to the last part of this post.

Launch instances

| controller

| compute1

| compute2

| ///////////////////// Create the provider network

. admin-openrc

openstack network create --share --external --provider-physical-network provider --provider-network-type flat xgf_provider

///////////////////// Create a subnet on the provider network

openstack subnet create --network xgf_provider --allocation-pool start=192.168.100.200,end=192.168.100.220 --dns-nameserver 10.0.1.1 --gateway 192.168.100.111 --subnet-range 192.168.100.0/24 xgf_sub_provider

///////////////////// Create m1.nano flavor

openstack flavor create --id 0 --vcpus 1 --ram 64 --disk 1 m1.nano

///////////////////// Add rules for the default security group

openstack security group rule create --proto icmp default

openstack security group rule create --proto tcp --dst-port 22 default

///////////////////// Create the self-service network for Demo

. demo-openrc

openstack network create xgf_selfservice_1

openstack subnet create --network xgf_selfservice_1 --dns-nameserver 10.0.1.1 --gateway 192.168.101.111 --subnet-range 192.168.101.0/24 xgf_sub_selfservice_1

// Create the virtual router

openstack router create demo_router

neutron router-interface-add demo_router xgf_sub_selfservice_1

neutron router-gateway-set demo_router xgf_provider

///////////////////// Create the self-service network for Admin

. admin-openrc

openstack network create xgf_selfservice_2

openstack subnet create --network xgf_selfservice_2 --dns-nameserver 10.0.1.1 --gateway 192.168.102.111 --subnet-range 192.168.102.0/24 xgf_sub_selfservice_2

// Create the virtual router

openstack router create admin_router

neutron router-interface-add admin_router xgf_sub_selfservice_2

neutron router-gateway-set admin_router xgf_provider
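The router steps are the same for Demo and Admin, so they can be wrapped in one small function. The sketch below is only an illustration: the helper names `run_or_print` and `create_nat_router` are invented here, and it uses the unified `openstack router add subnet` and `openstack router set --external-gateway` commands in place of the older `neutron` client, assuming the installed client release supports them. Set `DRY_RUN=1` to print the commands without running them.

```shell
# Run a command, or only print it when DRY_RUN=1 (useful for reviewing first).
run_or_print() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: create a router, attach one self-service subnet,
# and set the provider network as its external gateway (NAT uplink).
#   $1 = router name, $2 = self-service subnet, $3 = external network
create_nat_router() {
  router=$1
  subnet=$2
  external_net=$3
  run_or_print openstack router create "$router"
  run_or_print openstack router add subnet "$router" "$subnet"
  run_or_print openstack router set --external-gateway "$external_net" "$router"
}

# Example (after sourcing demo-openrc):
#   DRY_RUN=1 create_nat_router demo_router xgf_sub_selfservice_1 xgf_provider
```

Printing the commands first makes it easy to spot a mistyped router or subnet name before anything is created.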

| ///////////////////// Verify operation

. admin-openrc

openstack flavor list

openstack image list

openstack network list

openstack security group list

openstack server list

ip netns

. admin-openrc

neutron router-port-list admin_router

. demo-openrc

neutron router-port-list demo_router


Software Installation and Configuration

/////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////

Basic configuration


| controller

| compute1

| compute2

| ///////////////////// Create VMs

| user/pw: gset/ipcc2014

c:\xgf\OpenStack\controller

8.192 GB MEM

4 Processors with Virtualize Intel VT-x/EPT

30GB HD

Network Adapter(eth0): NAT

Network Adapter2(eth1):Host-only

| user/pw: gset/ipcc2014

c:\xgf\OpenStack\compute1

4.096 GB MEM

2 Processors with Virtualize Intel VT-x/EPT

20GB HD

Network Adapter(eth0): NAT

Network Adapter2(eth1):Host-only

| user/pw: gset/ipcc2014

c:\xgf\OpenStack\compute2

4.096 GB MEM

2 Processors with Virtualize Intel VT-x/EPT

20GB HD

Network Adapter(eth0): NAT

Network Adapter2(eth1):Host-only

| System settings/brightness&lock – uncheck ‘Lock’

CTRL+ALT+T / Terminal / Edit / Profile Preferences / Terminal Size: 80 x 40

///////////////////// Upgrade Ubuntu Software

sudo apt-get update

sudo apt-get upgrade

sudo apt-get dist-upgrade

///////////////////// Install VMware Tools

sudo mkdir /mnt/cdrom

Reinstall VMware Tools by VMware Workstation

sudo mount /dev/cdrom /mnt/cdrom

cd /mnt/cdrom

sudo cp VMwareTools-10.1.6-5214329.tar.gz /opt

cd /opt

sudo tar -xvzf VMwareTools-10.1.6-5214329.tar.gz

cd vmware-tools-distrib

sudo ./vmware-install.pl

///////////////////// Change interface names to eth0, eth1, eth2, ...

sudo gedit /etc/default/grub

GRUB_CMDLINE_LINUX_DEFAULT="quiet splash"

GRUB_CMDLINE_LINUX="net.ifnames=0 biosdevname=0"

sudo update-grub

sudo grub-mkconfig -o /boot/grub/grub.cfg

///////////////////// Configure name resolution

sudo gedit /etc/hosts

127.0.0.1 localhost

10.0.0.11 controller

10.0.0.31 compute1

10.0.0.32 compute2
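Because the same three entries must exist on every node, it can help to add them with a script that is safe to run more than once. The sketch below is one possible way to do it. The function name `ensure_host_entry` is invented for this illustration, and it takes the hosts file as an argument so it can be tried on a scratch copy first.

```shell
# Append "IP name" to a hosts file only if that exact pair is not already there.
#   $1 = hosts file, $2 = IP address, $3 = host name
ensure_host_entry() {
  hosts_file=$1
  ip=$2
  name=$3
  # awk exits 0 when a line with the same first two fields exists, 1 otherwise.
  if ! awk -v ip="$ip" -v name="$name" \
        '$1 == ip && $2 == name { found = 1 } END { exit !found }' "$hosts_file"; then
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
  fi
}

# Example on a scratch copy (writing to the real /etc/hosts needs root):
#   cp /etc/hosts /tmp/hosts.new
#   ensure_host_entry /tmp/hosts.new 10.0.0.11 controller
#   ensure_host_entry /tmp/hosts.new 10.0.0.31 compute1
#   ensure_host_entry /tmp/hosts.new 10.0.0.32 compute2
```

Running it a second time leaves the file unchanged, so the same script can be reused on all three nodes.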

| ///////////////////// Configure interfaces, IP Addr and DNS

| sudo gedit /etc/network/interfaces // on controller

auto lo

iface lo inet loopback

auto eth0

iface eth0 inet static

address 10.0.0.11

netmask 255.255.255.0

gateway 10.0.0.2

dns-nameserver 10.0.1.1

auto eth1

iface eth1 inet manual

| sudo gedit /etc/network/interfaces // on compute1

auto lo

iface lo inet loopback

auto eth0

iface eth0 inet static

address 10.0.0.31

netmask 255.255.255.0

gateway 10.0.0.2

dns-nameserver 10.0.1.1

auto eth1

iface eth1 inet manual

| sudo gedit /etc/network/interfaces // on compute2

auto lo

iface lo inet loopback

auto eth0

iface eth0 inet static

address 10.0.0.32

netmask 255.255.255.0

gateway 10.0.0.2

dns-nameserver 10.0.1.1

auto eth1

iface eth1 inet manual

| ///////////////////// Configure hostnames

sudo gedit /etc/hostname

| controller

| compute1

| compute2

| ///////////////////// Install openssh & git

sudo apt-get install openssh-server

sudo apt-get install openssh-client

sudo apt-get install sysv-rc-conf

sudo sysv-rc-conf ssh on

sudo sysv-rc-conf --list | grep ssh

netstat -ta | grep ssh

sudo apt-get install git-core

|

Network Time Protocol (NTP) – Chrony

| controller

| compute1

| compute2

|

///////////////////// Install Chrony to synchronize services among nodes

| sudo apt-get install chrony // on controller

sudo gedit /etc/chrony/chrony.conf

allow 10.0.0.0/24 // put at the first line

sudo service chrony restart

| sudo apt-get install chrony // on compute1

sudo gedit /etc/chrony/chrony.conf

server controller iburst // put at the first line

# pool 2.debian.pool.ntp.org offline iburst // comment out

sudo service chrony restart

| sudo apt-get install chrony // on compute2

sudo gedit /etc/chrony/chrony.conf

server controller iburst // put at the first line

# pool 2.debian.pool.ntp.org offline iburst // comment out

sudo service chrony restart

| ///////////////////// Verify operation

|

OpenStack packages

| controller

| compute1

| compute2

| ///////////////////// For all nodes: controller, compute and block storage….

sudo apt-get install software-properties-common

sudo add-apt-repository cloud-archive:ocata

sudo apt-get update

sudo apt-get dist-upgrade

sudo apt-get install python-openstackclient

|

SQL database – MariaDB

| controller

| compute1

| compute2

| ///////////////////// Install and Run MySQL

sudo apt-get install mariadb-server python-pymysql

sudo gedit /etc/mysql/mariadb.conf.d/99-openstack.cnf

[mysqld]

bind-address = 10.0.0.11

default-storage-engine = innodb

innodb_file_per_table = on

max_connections = 4096

collation-server = utf8_general_ci

character-set-server = utf8

sudo service mysql restart

sudo mysqladmin -u root password ipcc2014 // for database root user

sudo mysql_secure_installation

netstat -tnlp | grep 3306

///////////////////// Set password for Linux root user

sudo passwd // set ‘ipcc2014’

su root // enter the root mode

#mysql // the root user no longer uses a password for local access to MySQL server

MariaDB [(none)]>

show databases;

use mysql;

show tables;


Message queue – RabbitMQ

| Controller

| compute1

| compute2

| ///////////////////// Install RabbitMQ

sudo apt-get install rabbitmq-server

sudo rabbitmqctl add_user openstack ipcc2014

sudo rabbitmqctl set_permissions openstack ".*" ".*" ".*"


Memcached


| Controller

| compute1

| compute2

| ///////////////////// Install Memcached

sudo apt-get install memcached python-memcache

sudo gedit /etc/memcached.conf

-l 10.0.0.11

sudo service memcached restart


Identity service – Keystone

| controller

| compute1

| compute2

| ///////////////////// Create a database

su root // enter the root mode

#mysql

MariaDB [(none)]>

CREATE DATABASE keystone;

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' IDENTIFIED BY 'ipcc2014';

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY 'ipcc2014';
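The same CREATE DATABASE and GRANT statements are repeated later for Glance, Nova and Neutron, with only the database and user names changing. As a sketch, a small generator can print them. The function name `service_db_sql` is invented for this illustration, and `IF NOT EXISTS` is added here only so the statements can be replayed safely.

```shell
# Print the SQL that creates one service database and grants a service user
# full access from localhost and from any other host.
#   $1 = database name, $2 = database user, $3 = password
service_db_sql() {
  db=$1
  user=$2
  pass=$3
  cat <<EOF
CREATE DATABASE IF NOT EXISTS ${db};
GRANT ALL PRIVILEGES ON ${db}.* TO '${user}'@'localhost' IDENTIFIED BY '${pass}';
GRANT ALL PRIVILEGES ON ${db}.* TO '${user}'@'%' IDENTIFIED BY '${pass}';
EOF
}

# Example: review the statements, then pipe them to the server as root.
#   service_db_sql keystone keystone ipcc2014
#   service_db_sql keystone keystone ipcc2014 | sudo mysql
```

Reviewing the printed statements before piping them to `mysql` helps catch a missing space or quote, which is easy to introduce when typing the GRANT lines by hand.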

///////////////////// Install Keystone

sudo apt-get install keystone

sudo gedit /etc/keystone/keystone.conf

[database]

connection = mysql+pymysql://keystone:ipcc2014@controller/keystone

[token]

provider = fernet

sudo su -s /bin/sh -c "keystone-manage db_sync" keystone

sudo keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

sudo keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

sudo keystone-manage bootstrap --bootstrap-password ipcc2014 --bootstrap-admin-url http://controller:35357/v3/ --bootstrap-internal-url http://controller:5000/v3/ --bootstrap-public-url http://controller:5000/v3/ --bootstrap-region-id RegionOne

sudo gedit /etc/apache2/apache2.conf

ServerName controller // put in the first line

sudo service apache2 restart

sudo rm -f /var/lib/keystone/keystone.db

export OS_USERNAME=admin

export OS_PASSWORD=ipcc2014

export OS_PROJECT_NAME=admin

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_DOMAIN_NAME=Default

export OS_AUTH_URL=http://controller:35357/v3

export OS_IDENTITY_API_VERSION=3

openstack project create --domain default --description "Service Project" service

openstack project create --domain default --description "Demo Project" demo

openstack user create --domain default --password-prompt demo // ipcc2014

openstack role create user

openstack role add --project demo --user demo user

|

|

| ///////////////////// Verify operation

sudo gedit /etc/keystone/keystone-paste.ini

// remove ‘admin_token_auth’ from the

[pipeline:public_api], [pipeline:admin_api], and [pipeline:api_v3] sections.

unset OS_AUTH_URL OS_PASSWORD

openstack --os-auth-url http://controller:35357/v3 --os-project-domain-name default --os-user-domain-name default --os-project-name admin --os-username admin token issue

openstack --os-auth-url http://controller:5000/v3 --os-project-domain-name default --os-user-domain-name default --os-project-name demo --os-username demo token issue

gedit admin-openrc

export OS_PROJECT_DOMAIN_NAME=Default

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=ipcc2014

export OS_AUTH_URL=http://controller:35357/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

gedit demo-openrc

export OS_PROJECT_DOMAIN_NAME=Default

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_NAME=demo

export OS_USERNAME=demo

export OS_PASSWORD=ipcc2014

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

. admin-openrc

openstack token issue

. demo-openrc

openstack token issue

|

// copy ‘admin-openrc’

// copy ‘demo-openrc’

|

// copy ‘admin-openrc’

// copy ‘demo-openrc’

|

Image service – Glance

| Controller

| compute1

| compute2

| ///////////////////// Create a database

su root // enter the root mode

#mysql

MariaDB [(none)]>

CREATE DATABASE glance;

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY 'ipcc2014';

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY 'ipcc2014';

. admin-openrc

openstack user create --domain default --password-prompt glance

openstack role add --project service --user glance admin

openstack service create --name glance --description "OpenStack Image" image

openstack endpoint create --region RegionOne image public http://controller:9292

openstack endpoint create --region RegionOne image internal http://controller:9292

openstack endpoint create --region RegionOne image admin http://controller:9292

///////////////////// Install Glance

sudo apt-get install glance

sudo gedit /etc/glance/glance-api.conf

[database]

connection = mysql+pymysql://glance:ipcc2014@controller/glance

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = glance

password = ipcc2014

[paste_deploy]

flavor = keystone

[glance_store]

stores = file,http

default_store = file

filesystem_store_datadir = /var/lib/glance/images/

sudo gedit /etc/glance/glance-registry.conf

[database]

connection = mysql+pymysql://glance:ipcc2014@controller/glance

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = glance

password = ipcc2014

[paste_deploy]

flavor = keystone

sudo su -s /bin/sh -c "glance-manage db_sync" glance

sudo service glance-registry restart

sudo service glance-api restart

///////////////////// Verify operation

///////////////////// Download the source image – ‘cirros’

wget http://download.cirros-cloud.net/0.3.3/cirros-0.3.3-x86_64-disk.img

///////////////////// Upload to image service using the QCOW2 disk format

. admin-openrc

openstack image create "cirros_0_3_3" --file cirros-0.3.3-x86_64-disk.img --disk-format qcow2 --container-format bare --public

openstack image list


Compute service – Nova

| Controller

| compute1

| compute2

| ///////////////////// Create a database

su root // enter the root mode

#mysql

MariaDB [(none)]>

CREATE DATABASE nova_api;

CREATE DATABASE nova;

CREATE DATABASE nova_cell0;

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY 'ipcc2014';

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY 'ipcc2014';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY 'ipcc2014';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'ipcc2014';

GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' IDENTIFIED BY 'ipcc2014';

GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY 'ipcc2014';

. admin-openrc

openstack user create --domain default --password-prompt nova

openstack role add --project service --user nova admin

openstack service create --name nova --description "OpenStack Compute" compute

openstack endpoint create --region RegionOne compute public http://controller:8774/v2.1

openstack endpoint create --region RegionOne compute internal http://controller:8774/v2.1

openstack endpoint create --region RegionOne compute admin http://controller:8774/v2.1

openstack user create --domain default --password-prompt placement

openstack role add --project service --user placement admin

openstack service create --name placement --description "Placement API" placement

openstack endpoint create --region RegionOne placement public http://controller:8778

openstack endpoint create --region RegionOne placement internal http://controller:8778

openstack endpoint create --region RegionOne placement admin http://controller:8778

///////////////////// Install Nova

sudo apt-get install nova-api nova-conductor nova-consoleauth nova-novncproxy nova-scheduler nova-placement-api

sudo gedit /etc/nova/nova.conf

[api_database]

connection = mysql+pymysql://nova:ipcc2014@controller/nova_api

# connection=sqlite:////var/lib/nova/nova.sqlite // comment out

[database]

connection = mysql+pymysql://nova:ipcc2014@controller/nova

[DEFAULT]

transport_url = rabbit://openstack:ipcc2014@controller

my_ip = 10.0.0.11

use_neutron = True

firewall_driver = nova.virt.firewall.NoopFirewallDriver

#log_dir=/var/log/nova // comment out

[api]

auth_strategy = keystone

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = ipcc2014

[vnc]

enabled = true

vncserver_listen = $my_ip

vncserver_proxyclient_address = $my_ip

[glance]

api_servers = http://controller:9292

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

# lock_path=/var/lock/nova // comment out

[placement]

os_region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:35357/v3

username = placement

password = ipcc2014

# os_region_name = openstack // comment out

sudo su -s /bin/sh -c "nova-manage api_db sync" nova

sudo su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

sudo su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

sudo su -s /bin/sh -c "nova-manage db sync" nova

sudo nova-manage cell_v2 list_cells

sudo service nova-api restart

sudo service nova-consoleauth restart

sudo service nova-scheduler restart

sudo service nova-conductor restart

sudo service nova-novncproxy restart

sudo ufw disable // open port 5672 for MQ server

sudo ufw status

sudo su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

| ///////////////////// On compute1: check for hardware virtualization support

egrep -c '(vmx|svm)' /proc/cpuinfo

2

///////////////////// Install Nova

sudo apt-get install nova-compute

sudo gedit /etc/nova/nova.conf

[DEFAULT]

transport_url = rabbit://openstack:ipcc2014@controller

my_ip = 10.0.0.31

use_neutron = True

firewall_driver = nova.virt.firewall.NoopFirewallDriver

#log_dir=/var/log/nova // comment out

[api]

auth_strategy = keystone

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = ipcc2014

[vnc]

enabled = True

vncserver_listen = 0.0.0.0

vncserver_proxyclient_address = $my_ip

novncproxy_base_url = http://controller:6080/vnc_auto.html

[glance]

api_servers = http://controller:9292

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

# lock_path=/var/lock/nova // comment out

[placement]

os_region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:35357/v3

username = placement

password = ipcc2014

# os_region_name = openstack // comment out

[libvirt]

hw_machine_type = "x86_64=pc-i440fx-xenial,i686=pc-i440fx-xenial"

sudo service nova-compute restart

cat /var/log/nova/nova-compute.log

| ///////////////////// On compute2: check for hardware virtualization support

egrep -c '(vmx|svm)' /proc/cpuinfo

2

///////////////////// Install Nova

sudo apt-get install nova-compute

sudo gedit /etc/nova/nova.conf

[DEFAULT]

transport_url = rabbit://openstack:ipcc2014@controller

my_ip = 10.0.0.32

use_neutron = True

firewall_driver = nova.virt.firewall.NoopFirewallDriver

#log_dir=/var/log/nova // comment out

[api]

auth_strategy = keystone

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = ipcc2014

[vnc]

enabled = True

vncserver_listen = 0.0.0.0

vncserver_proxyclient_address = $my_ip

novncproxy_base_url = http://controller:6080/vnc_auto.html

[glance]

api_servers = http://controller:9292

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

# lock_path=/var/lock/nova // comment out

[placement]

os_region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:35357/v3

username = placement

password = ipcc2014

# os_region_name = openstack // comment out

[libvirt]

hw_machine_type = "x86_64=pc-i440fx-xenial,i686=pc-i440fx-xenial"

sudo service nova-compute restart

cat /var/log/nova/nova-compute.log

| ///////////////////// Verify operation

. admin-openrc

openstack hypervisor list

openstack compute service list

openstack catalog list

sudo nova-status upgrade check
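Reading the service table by eye works for three nodes, but the check can also be scripted. The sketch below filters "binary state" pairs and reports any service that is not up. The function name `report_down_services` is invented for this illustration, and it assumes the client prints the State column as `up` or `down` when asked for plain values with `-f value -c Binary -c State`.

```shell
# Read "binary state" pairs on stdin, print every binary whose state is not
# "up", and return a non-zero exit status if at least one was found.
report_down_services() {
  awk 'NF >= 2 && $2 != "up" { print $1; bad = 1 } END { exit bad + 0 }'
}

# Example (after sourcing admin-openrc):
#   openstack compute service list -f value -c Binary -c State | report_down_services \
#     && echo "all compute services are up"
```

An empty result with exit status 0 means every listed service reported `up`.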

|

Networking service – Neutron

| controller

| compute1

| compute2

| ///////////////////// Create a database

su root // enter the root mode

#mysql

MariaDB [(none)]>

CREATE DATABASE neutron;

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY 'ipcc2014';

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'ipcc2014';

. admin-openrc

openstack user create --domain default --password-prompt neutron

openstack role add --project service --user neutron admin

openstack service create --name neutron --description "OpenStack Networking" network

openstack endpoint create --region RegionOne network public http://controller:9696

openstack endpoint create --region RegionOne network internal http://controller:9696

openstack endpoint create --region RegionOne network admin http://controller:9696

///////////////////// Install Neutron

sudo apt-get install neutron-server neutron-plugin-ml2 neutron-linuxbridge-agent neutron-l3-agent neutron-dhcp-agent neutron-metadata-agent

sudo gedit /etc/neutron/neutron.conf

[database]

connection = mysql+pymysql://neutron:ipcc2014@controller/neutron

# connection = sqlite:////var/lib/neutron/neutron.sqlite // comment out

[DEFAULT]

core_plugin = ml2

service_plugins = router

allow_overlapping_ips = true

transport_url = rabbit://openstack:ipcc2014@controller

auth_strategy = keystone

notify_nova_on_port_status_changes = true

notify_nova_on_port_data_changes = true

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = ipcc2014

[nova]

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = ipcc2014

sudo gedit /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2]

type_drivers = flat,vlan,vxlan

tenant_network_types = vxlan

mechanism_drivers = linuxbridge,l2population

extension_drivers = port_security

[ml2_type_flat]

flat_networks = provider

[ml2_type_vxlan]

vni_ranges = 1:1000

[securitygroup]

enable_ipset = true

sudo gedit /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[linux_bridge]

physical_interface_mappings = provider:eth1

[vxlan]

enable_vxlan = true

local_ip = 10.0.0.11

l2_population = true

[securitygroup]

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

sudo gedit /etc/neutron/l3_agent.ini

[DEFAULT]

interface_driver = linuxbridge

sudo gedit /etc/neutron/dhcp_agent.ini

[DEFAULT]

interface_driver = linuxbridge

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = true

sudo gedit /etc/neutron/metadata_agent.ini

[DEFAULT]

nova_metadata_ip = controller

metadata_proxy_shared_secret = ipcc2014

sudo gedit /etc/nova/nova.conf

[neutron]

url = http://controller:9696

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = ipcc2014

service_metadata_proxy = true

metadata_proxy_shared_secret = ipcc2014

sudo su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf \

--config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

sudo service nova-api restart

sudo service neutron-server restart

sudo service neutron-linuxbridge-agent restart

sudo service neutron-dhcp-agent restart

sudo service neutron-metadata-agent restart

sudo service neutron-l3-agent restart

|

///////////////////// Install Neutron (compute1)

sudo apt-get install neutron-linuxbridge-agent

sudo gedit /etc/neutron/neutron.conf

[database]

# connection = sqlite:////var/lib/neutron/neutron.sqlite // comment out

[DEFAULT]

transport_url = rabbit://openstack:ipcc2014@controller

auth_strategy = keystone

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = ipcc2014

sudo gedit /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[linux_bridge]

physical_interface_mappings = provider:eth1

[vxlan]

enable_vxlan = true

local_ip = 10.0.0.31

l2_population = true

[securitygroup]

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

sudo gedit /etc/nova/nova.conf

[neutron]

url = http://controller:9696

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = ipcc2014

sudo service nova-compute restart

sudo service neutron-linuxbridge-agent restart

|

///////////////////// Install Neutron (compute2)

sudo apt-get install neutron-linuxbridge-agent

sudo gedit /etc/neutron/neutron.conf

[database]

# connection = sqlite:////var/lib/neutron/neutron.sqlite // comment out

[DEFAULT]

transport_url = rabbit://openstack:ipcc2014@controller

auth_strategy = keystone

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = ipcc2014

sudo gedit /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[linux_bridge]

physical_interface_mappings = provider:eth1

[vxlan]

enable_vxlan = true

local_ip = 10.0.0.32

l2_population = true

[securitygroup]

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

sudo gedit /etc/nova/nova.conf

[neutron]

url = http://controller:9696

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = ipcc2014

sudo service nova-compute restart

sudo service neutron-linuxbridge-agent restart

| ///////////////////// Verify operation

. admin-openrc

openstack extension list --network

openstack network agent list

|

Dashboard – Horizon

| Controller

| compute1

| compute2

| ///////////////////// Install Horizon

sudo apt-get install openstack-dashboard

sudo gedit /etc/openstack-dashboard/local_settings.py

#OPENSTACK_HOST = "127.0.0.1" // comment out

OPENSTACK_HOST = "controller"

ALLOWED_HOSTS = ['*', ] // at the beginning, not Ubuntu Settings

SESSION_ENGINE = 'django.contrib.sessions.backends.cache' // the memcached session

CACHES = {

'default': {

'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',

'LOCATION': 'controller:11211',

}

}

#OPENSTACK_KEYSTONE_URL = "http://%s:5000/v2.0" % OPENSTACK_HOST // comment out

OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST

#OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = False // comment out

OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True

OPENSTACK_API_VERSIONS = {

"identity": 3,

"image": 2,

"volume": 2,

}

#OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = 'Default' // comment out

OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "Default"

#OPENSTACK_KEYSTONE_DEFAULT_ROLE = "_member_" // comment out

OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"

OPENSTACK_NEUTRON_NETWORK = {

# 'enable_router': True,

# 'enable_quotas': True,

# 'enable_ipv6': True,

# 'enable_distributed_router': False,

# 'enable_ha_router': False,

# 'enable_lb': True,

# 'enable_firewall': True,

# 'enable_vpn': True,

# 'enable_fip_topology_check': True, // comment out

'enable_router': True,

'enable_quotas': False,

'enable_ipv6': False,

'enable_distributed_router': False,

'enable_ha_router': False,

'enable_lb': False,

'enable_firewall': False,

'enable_vpn': False,

'enable_fip_topology_check': False,

}

TIME_ZONE = "UTC"

sudo chown www-data:www-data /var/lib/openstack-dashboard/secret_key

sudo gedit /etc/apache2/conf-available/openstack-dashboard.conf

WSGIApplicationGroup %{GLOBAL}

sudo service apache2 reload

///////////////////// Verify operation

http://controller/horizon

default/admin/ipcc2014

default/demo/ipcc2014
