Based on the official installation guide: http://docs.openstack.org/juno/install-guide/install/yum/content/#

This installation uses the FlatDHCP networking mode (nova-network).

IP addresses of the three nodes:

controller:172.17.80.210

compute2:172.17.80.212

compute1:172.17.80.211

Edit the /etc/hosts file on every node so that the node names above resolve.
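The /etc/hosts edit can be scripted so that it is safe to run more than once. This is a minimal sketch that assumes the three IP addresses listed above and GNU grep. `add_host_entry` and `add_all_nodes` are hypothetical helper names, not OpenStack tools.

```shell
# Hypothetical helper: append "IP hostname" to a hosts file unless that
# hostname is already listed on a non-comment line.
add_host_entry() {
  local ip=$1 name=$2 file=${3:-/etc/hosts}
  # Match the hostname as a whole word, ignoring commented-out lines
  if ! grep -qE "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file"; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Hypothetical helper: entries for the three nodes used in this guide
add_all_nodes() {
  local file=${1:-/etc/hosts}
  add_host_entry 172.17.80.210 controller "$file"
  add_host_entry 172.17.80.211 compute1 "$file"
  add_host_entry 172.17.80.212 compute2 "$file"
}
```

Run `add_all_nodes` as root on each node. It writes to /etc/hosts by default, and a second run adds nothing.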

Test connectivity from every node to openstack.org (for example, ping -c 4 openstack.org).

1. Configure the NTP service

1.1 On the controller node

# yum install -y ntp

Edit /etc/ntp.conf (vi /etc/ntp.conf) as follows:

server NTP_SERVER iburst    # NTP_SERVER is a placeholder; this installation kept the default server entries

restrict -4 default kod notrap nomodify

restrict -6 default kod notrap nomodify

# systemctl enable ntpd.service    # start at boot

# systemctl start ntpd.service

1.2 On the other nodes

# yum install ntp

Edit /etc/ntp.conf so that the controller is the only time source (comment out or remove the other server entries):

server controller iburst

Enable the service at boot and start it:

# systemctl enable ntpd.service

# systemctl start ntpd.service
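The ntp.conf edit in 1.2 can be scripted. This is a sketch that assumes GNU sed (for `sed -i`) and the stock CentOS 7 ntp.conf. `use_controller_ntp` is a hypothetical name.

```shell
# Hypothetical helper: make the controller the only NTP server in an
# ntp.conf (defaults to /etc/ntp.conf). Run it on the non-controller nodes.
use_controller_ntp() {
  local conf=${1:-/etc/ntp.conf}
  # Comment out every active "server" line except the controller entry
  sed -i '/^server controller iburst$/!s/^server[[:space:]]/#&/' "$conf"
  # Add the controller entry once
  grep -qx 'server controller iburst' "$conf" ||
    echo 'server controller iburst' >> "$conf"
}
```

After running it, restart ntpd (systemctl restart ntpd.service) so the change takes effect.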

1.3 OpenStack packages, basic environment (on all nodes)

Install the yum-plugin-priorities package to enable assignment of relative priorities within repositories:

# yum install -y yum-plugin-priorities

Install the epel-release package to enable the EPEL repository:

# yum install http://dl.fedoraproject.org/pub/epel/7/x86_64/e/epel-release-7-5.noarch.rpm

Install the rdo-release-juno package to enable the RDO repository:

# yum install http://rdo.fedorapeople.org/openstack-juno/rdo-release-juno.rpm

Upgrade the packages on your system:

# yum upgrade

# reboot

RHEL and CentOS enable SELinux by default. Install the openstack-selinux package to automatically manage security policies for OpenStack services:

# yum install openstack-selinux

If this installation fails, install the package directly from its URL:

# yum install http://repos.fedorapeople.org/repos/openstack/openstack-juno/epel-7/openstack-selinux-0.5.19-2.el7ost.noarch.rpm

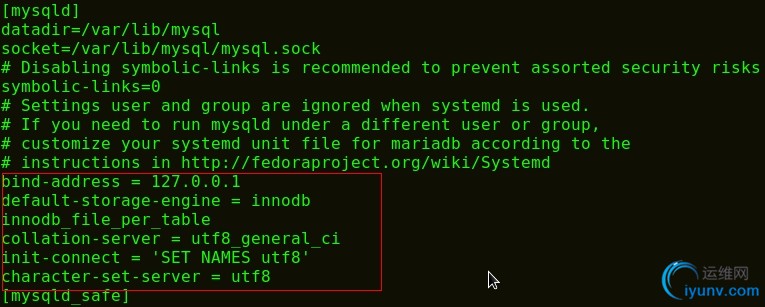

1.4 To install and configure the database server

# yum install -y mariadb mariadb-server MySQL-python

Because of licensing issues, CentOS 7 ships MariaDB instead of MySQL.

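Edit /etc/my.cnf. Set bind-address to the controller's management IP address so that the other nodes can reach the database (127.0.0.1 accepts local connections only):

[mysqld]

bind-address = 172.17.80.210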

default-storage-engine = innodb

innodb_file_per_table

collation-server = utf8_general_ci

init-connect = 'SET NAMES utf8'

character-set-server = utf8

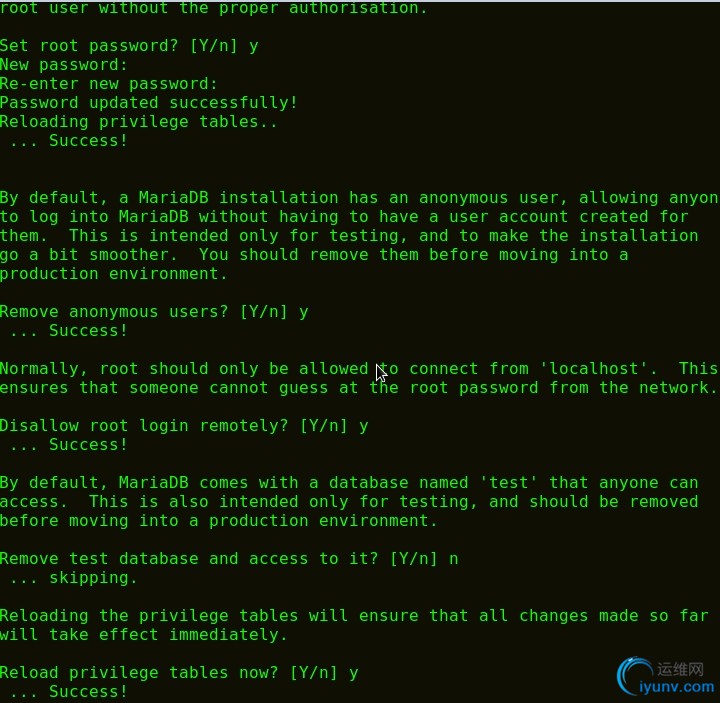

# systemctl enable mariadb.service

# systemctl start mariadb.service

# mysql_secure_installation    # security-related settings (root password, anonymous users, etc.)

1.5 To install the RabbitMQ message broker service (on the controller node)

# yum install rabbitmq-server

# systemctl enable rabbitmq-server.service

# systemctl start rabbitmq-server.service

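Starting the service produced the following error:

Change the password of the RabbitMQ guest account. Later configuration files use this password as rabbit_password = 123456:

# rabbitmqctl change_password guest 123456

If this command reports an error: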

2. Install and configure the Identity service

2.1 Install and configure

# mysql -u root -p

> create database keystone;

> grant all privileges on keystone.* to 'keystone'@'localhost' identified by 'test01';

> grant all privileges on keystone.* to 'keystone'@'%' identified by 'test01';
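These three SQL statements are repeated for keystone, glance, nova and cinder. Generating them avoids typos. This sketch assumes, as everywhere in this guide, that the database user has the same name as the database. `service_db_sql` is a hypothetical helper.

```shell
# Hypothetical helper: print the SQL that creates a service database and
# grants its user access from localhost and from any host ('%').
service_db_sql() {
  local db=$1 pass=$2
  printf "CREATE DATABASE %s;\n" "$db"
  printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'localhost' IDENTIFIED BY '%s';\n" "$db" "$db" "$pass"
  # %% prints a literal % (the MySQL wildcard host)
  printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'%%' IDENTIFIED BY '%s';\n" "$db" "$db" "$pass"
}
```

For example: service_db_sql keystone test01 | mysql -u root -p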

# openssl rand -hex 10

Note: the command above generates a random string to use as admin_token in keystone.conf. Any value works; this installation sets admin_token to 123456.

# yum install -y openstack-keystone python-keystoneclient

修改 /etc/keystone/keystone.conf

[DEFAULT]

...

admin_token= 123456

verbose = True

[database]

...

connection=mysql://keystone:test01@localhost/keystone

[token]

...

provider = keystone.token.providers.uuid.Provider

driver =keystone.token.persistence.backends.sql.Token
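Editing these INI files by hand is repetitive. The sketch below sets one option in a given section with awk. It assumes the simple `[section]` / `key = value` layout of the Juno sample files. `set_ini` is a hypothetical helper, not an OpenStack tool.

```shell
# Hypothetical helper: set "key = value" inside [section] of an INI-style
# config file. A commented default such as "#key=..." in that section is
# replaced; if the key or the section is missing, it is added.
# Note: awk -v interprets backslash escapes, so avoid "\" in values.
set_ini() {
  local file=$1 section=$2 key=$3 value=$4 tmp
  tmp=$(mktemp) || return 1
  awk -v s="[$section]" -v k="$key" -v v="$value" '
    # Emit the key before leaving the target section if it was not seen
    function flush() { if (in_s && !done) { print k " = " v; done = 1 } }
    /^\[.*\]$/ { flush(); in_s = ($0 == s); print; next }
    in_s && !done && $0 ~ ("^[# \t]*" k "[ \t]*=") { print k " = " v; done = 1; next }
    { print }
    END { flush(); if (!done) { print s; print k " = " v } }
  ' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
  # Copy back with cat so the file keeps its owner and permissions
  cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

For example: set_ini /etc/keystone/keystone.conf DEFAULT admin_token 123456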

# keystone-manage pki_setup --keystone-user keystone --keystone-group keystone

# chown -R keystone:keystone /var/log/keystone

# chown -R keystone:keystone /etc/keystone/ssl

# chmod -R o-rwx /etc/keystone/ssl

# su -s /bin/sh -c "keystone-manage db_sync" keystone    (or simply: keystone-manage db_sync)

# systemctl enable openstack-keystone

# systemctl start openstack-keystone

# (crontab -l -u keystone 2>&1 | grep -q token_flush) || echo '@hourly /usr/bin/keystone-manage token_flush >/var/log/keystone/keystone-tokenflush.log 2>&1' >> /var/spool/cron/keystone    # periodically purge expired tokens

2.2 Create tenants, users, and roles

# export OS_SERVICE_TOKEN=123456

# export OS_SERVICE_ENDPOINT=http://controller:35357/v2.0

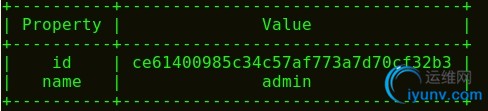

Create the admin tenant:

# keystone tenant-create --name admin --description "Admin Tenant"

Create the admin user:

# keystone user-create --name admin --pass test01 --email admin@test.com

Create the admin role:

# keystone role-create --name admin

Add the admin role to the admin tenant and user:

# keystone user-role-add --user admin --tenant admin --role admin

2.3 Create a demo tenant and user for typical operations in your environment:

Create the demo tenant:

# keystone tenant-create --name demo --description "Demo Tenant"

Create the demo user under the demo tenant:

# keystone user-create --name demo --tenant demo --pass test01 --email admin@test.com

Create the service tenant:

# keystone tenant-create --name service --description "Service Tenant"

Create the service entity for the Identity service:

# keystone service-create --name keystone --type identity --description "OpenStack Identity"

Create the Identity service API endpoints:

# keystone endpoint-create --service-id $(keystone service-list | awk '/ identity / {print $2}') --publicurl http://controller:5000/v2.0 --internalurl http://controller:5000/v2.0 --adminurl http://controller:35357/v2.0 --region regionOne

2.4 Verify operation

# unset OS_SERVICE_TOKEN OS_SERVICE_ENDPOINT

# keystone --os-tenant-name admin --os-username admin --os-password test01 --os-auth-url http://controller:35357/v2.0 token-get

# keystone --os-tenant-name admin --os-username admin --os-password test01 --os-auth-url http://controller:35357/v2.0 tenant-list

# keystone --os-tenant-name admin --os-username admin --os-password test01 --os-auth-url http://controller:35357/v2.0 user-list

# keystone --os-tenant-name admin --os-username admin --os-password test01 --os-auth-url http://controller:35357/v2.0 role-list

# keystone --os-tenant-name demo --os-username demo --os-password test01 --os-auth-url http://controller:35357/v2.0 token-get

# keystone --os-tenant-name demo --os-username demo --os-password test01 --os-auth-url http://controller:35357/v2.0 user-list

2.5 Create OpenStack client environment scripts

vi admin-openrc.sh

export OS_TENANT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=test01

export OS_AUTH_URL=http://controller:35357/v2.0

vi demo-openrc.sh

export OS_TENANT_NAME=demo

export OS_USERNAME=demo

export OS_PASSWORD=test01

export OS_AUTH_URL=http://controller:5000/v2.0
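Both scripts follow the same template, so they can be generated. This is a sketch. `write_openrc` is a hypothetical helper, and restricting the file to mode 600 is an added precaution, since the file holds a password.

```shell
# Hypothetical helper: write an OpenStack client environment script.
# Usage: write_openrc FILE TENANT USER PASSWORD AUTH_URL
write_openrc() {
  local file=$1
  cat > "$file" <<EOF
export OS_TENANT_NAME=$2
export OS_USERNAME=$3
export OS_PASSWORD=$4
export OS_AUTH_URL=$5
EOF
  # The file contains a password, so keep it private
  chmod 600 "$file"
}
```

For example: write_openrc demo-openrc.sh demo demo test01 http://controller:5000/v2.0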

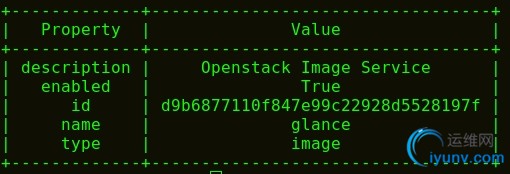

3. Add the Image Service (on the controller node)

3.1 To configure prerequisites

# mysql -u root -p

> create database glance;

> grant all privileges on glance.* to 'glance'@'localhost' identified by 'test01';

> grant all privileges on glance.* to 'glance'@'%' identified by 'test01';

>quit

# source admin-openrc.sh

# keystone user-create --name glance --pass test01 --email admin@test.com

# keystone user-role-add --user glance --tenant service --role admin

# keystone service-create --name glance --type image --description "OpenStack Image Service"

# keystone endpoint-create --service-id $(keystone service-list | awk '/ image / {print $2}') --publicurl http://controller:9292 --internalurl http://controller:9292 --adminurl http://controller:9292 --region regionOne

3.2 To install and configure the Image Service components

# yum install openstack-glance python-glanceclient

# vi /etc/glance/glance-api.conf

[DEFAULT]

verbose=True

default_store = file

notification_driver = noop

[database]

connection=mysql://glance:test01@controller/glance

[keystone_authtoken]

auth_uri = http://controller:5000/v2.0

identity_uri=http://controller:35357

admin_tenant_name=service

admin_user=glance

admin_password=test01

[paste_deploy]

flavor = keystone

[glance_store]

filesystem_store_datadir = /var/lib/glance/images/

# vi /etc/glance/glance-registry.conf

[DEFAULT]

verbose=True

notification_driver = noop

[paste_deploy]

flavor = keystone

[database]

connection=mysql://glance:test01@controller/glance

[keystone_authtoken]

auth_uri=http://controller:5000/v2.0

identity_uri=http://controller:35357

admin_tenant_name=service

admin_user=glance

admin_password=test01

# su -s /bin/sh -c "glance-manage db_sync" glance

# systemctl enable openstack-glance-api.service openstack-glance-registry.service

# systemctl start openstack-glance-api.service openstack-glance-registry.service

# mkdir /tmp/images

# cd /tmp/images

# wget http://cdn.download.cirros-cloud.net/0.3.3/cirros-0.3.3-x86_64-disk.img

# source /root/admin-openrc.sh    (a relative path only works from the directory that contains admin-openrc.sh, which is /root/ in this installation)

# cd /tmp/images

# glance image-create --name "cirros-0.3.3-x86_64" --file cirros-0.3.3-x86_64-disk.img --disk-format qcow2 --container-format bare --is-public True --progress

4. Add the Compute service

4.1 Install and configure the controller node

#mysql -u root -p

>create database nova;

>grant all privileges on nova.* to 'nova'@'localhost' identified by 'test01';

>grant all privileges on nova.* to 'nova'@'%' identified by 'test01';

>quit

# source admin-openrc.sh

# keystone user-create --name nova --pass test01

# keystone user-role-add --user nova --tenant service --role admin

# keystone service-create --name nova --type compute --description "OpenStack Compute"

# keystone endpoint-create \
  --service-id $(keystone service-list | awk '/ compute / {print $2}') \
  --publicurl http://controller:8774/v2/%\(tenant_id\)s \
  --internalurl http://controller:8774/v2/%\(tenant_id\)s \
  --adminurl http://controller:8774/v2/%\(tenant_id\)s \
  --region regionOne

# yum install openstack-nova-api openstack-nova-cert openstack-nova-conductor openstack-nova-console openstack-nova-novncproxy openstack-nova-scheduler python-novaclient

Edit /etc/nova/nova.conf:

[database]

connection=mysql://nova:test01@controller/nova

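Note: the [database] section does not exist in the Juno version of this configuration file and must be added manually. To avoid mistakes, add it at the end of the file.

Set the remaining options in the existing [DEFAULT] and [keystone_authtoken] sections:

[DEFAULT]

...

rpc_backend=rabbit

rabbit_host=controller

rabbit_password=123456

auth_strategy=keystone

my_ip=172.17.80.210

vncserver_listen=172.17.80.210

vncserver_proxyclient_address=172.17.80.210

host=controller

verbose=True

[keystone_authtoken]

...

auth_uri=http://controller:5000/v2.0

identity_uri=http://controller:35357

admin_tenant_name=service

admin_user=nova

admin_password=test01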

# su -s /bin/sh -c "nova-manage db sync" nova

Note: the database sync takes a few minutes; wait for it to finish.

# systemctl enable openstack-nova-api.service openstack-nova-cert.service openstack-nova-consoleauth.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

# systemctl start openstack-nova-api.service openstack-nova-cert.service openstack-nova-consoleauth.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

4.2 Install and configure the compute node

# yum install -y openstack-nova-compute sysfsutils

vi /etc/nova/nova.conf

[DEFAULT]

...

verbose = True

rpc_backend = rabbit

rabbit_host = controller

rabbit_password =123456

auth_strategy = keystone

my_ip=172.17.80.211

host=compute1

vnc_enabled = True

vncserver_listen = 0.0.0.0

vncserver_proxyclient_address = 172.17.80.211

novncproxy_base_url = http://controller:6080/vnc_auto.html

[keystone_authtoken]

...

auth_uri = http://controller:5000/v2.0

identity_uri = http://controller:35357

admin_tenant_name = service

admin_user = nova

admin_password =test01

[glance]

...

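host = controller

On compute2, use the same settings but set my_ip and vncserver_proxyclient_address to 172.17.80.212 and host to compute2.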

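Check whether the compute node supports hardware acceleration:

# egrep -c '(vmx|svm)' /proc/cpuinfo

1. If this command returns a value of one or greater, the compute node supports hardware acceleration, which typically requires no additional configuration.

2. If this command returns zero, the compute node does not support hardware acceleration, and you must configure libvirt to use QEMU instead of KVM.

In that case, edit /etc/nova/nova.conf: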

[libvirt]

...

virt_type = qemu
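The decision rule above can be written as a small function. This is a sketch. `pick_virt_type` is a hypothetical name, and the cpuinfo path is a parameter only so that the function can be tested against a sample file. kvm is the nova default for [libvirt] virt_type, which is why no change is needed when the flags are present.

```shell
# Hypothetical helper: choose the [libvirt] virt_type from the CPU flags
# (vmx or svm present -> kvm, otherwise qemu).
pick_virt_type() {
  local cpuinfo=${1:-/proc/cpuinfo} count
  # grep -c prints 0 when nothing matches; an unreadable file gives ""
  count=$(grep -Ec '(vmx|svm)' "$cpuinfo" 2>/dev/null)
  if [ "${count:-0}" -ge 1 ]; then
    echo kvm
  else
    echo qemu
  fi
}
```

On a virtual machine without nested virtualization, `pick_virt_type` prints `qemu`. Put that value under [libvirt] as shown above.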

# systemctl enable libvirtd.service openstack-nova-compute.service

# systemctl start libvirtd.service openstack-nova-compute.service

4.3 Verify operation on the controller node

# source admin-openrc.sh

5. Install and configure networking (nova-network)

5.1 On the controller node

vi /etc/nova/nova.conf

[DEFAULT]

...

network_api_class = nova.network.api.API

security_group_api = nova

# systemctl restart openstack-nova-api.service openstack-nova-scheduler.service \
  openstack-nova-conductor.service

5.2 On the compute node

# yum install -y openstack-nova-network openstack-nova-api

# vi /etc/nova/nova.conf

[DEFAULT]

...

network_api_class = nova.network.api.API

security_group_api = nova

firewall_driver = nova.virt.libvirt.firewall.IptablesFirewallDriver

network_manager = nova.network.manager.FlatDHCPManager

network_size = 254

allow_same_net_traffic = False

multi_host = True

send_arp_for_ha = True

share_dhcp_address = True

force_dhcp_release = True

flat_network_bridge = br100

flat_interface = eth1

public_interface = eth0

# systemctl enable openstack-nova-network.service openstack-nova-metadata-api.service

# systemctl start openstack-nova-network.service openstack-nova-metadata-api.service

6. Install and configure the dashboard

# yum install -y openstack-dashboard httpd mod_wsgi memcached python-memcached

vi /etc/openstack-dashboard/local_settings

OPENSTACK_HOST = "controller"

ALLOWED_HOSTS = ['*']

CACHES = {
    'default': {
        'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',
        'LOCATION': '127.0.0.1:11211',
    }
}

# setsebool -P httpd_can_network_connect on

# chown -R apache:apache /usr/share/openstack-dashboard/static

# systemctl enable httpd.service memcached.service

# systemctl start httpd.service memcached.service

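Testing

Log in at http://172.17.80.211/dashboard with the credentials created earlier (user admin, password test01).

The error most often encountered when launching an instance is: No valid host was found.

First, check that all services started correctly.

To find the specific cause, you usually need to read the log files under /var/log/nova/, for example:

# tail /var/log/nova/nova-conductor.log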
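Instead of tailing each log in turn, the ERROR and TRACE lines can be collected from all nova logs at once. This is a sketch that assumes the default Juno log format, in which the level appears as a separate upper-case word. `nova_log_errors` is a hypothetical helper.

```shell
# Hypothetical helper: show the last N ERROR/TRACE lines found in the nova
# log files, each prefixed with the file it came from.
nova_log_errors() {
  local dir=${1:-/var/log/nova} lines=${2:-20}
  # -H prefixes each match with its file name
  grep -H -E ' (ERROR|TRACE) ' "$dir"/*.log | tail -n "$lines"
}
```

Running `nova_log_errors` on the controller usually points to the service (scheduler, conductor or compute) that rejected the instance.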

7. Install and configure Block Storage

7.1 On the controller node

# mysql -u root -p

> create database cinder;

> grant all privileges on cinder.* to 'cinder'@'localhost' identified by 'test01';

> grant all privileges on cinder.* to 'cinder'@'%' identified by 'test01';

# keystone user-create --name cinder --pass test01

# keystone user-role-add --user cinder --tenant service --role admin

# keystone service-create --name cinder --type volume --description "OpenStack Block Storage"

# keystone service-create --name cinderv2 --type volumev2 --description "OpenStack Block Storage"

# keystone endpoint-create \
  --service-id $(keystone service-list | awk '/ volume / {print $2}') \
  --publicurl http://controller:8776/v1/%\(tenant_id\)s \
  --internalurl http://controller:8776/v1/%\(tenant_id\)s \
  --adminurl http://controller:8776/v1/%\(tenant_id\)s \
  --region regionOne

# keystone endpoint-create \
  --service-id $(keystone service-list | awk '/ volumev2 / {print $2}') \
  --publicurl http://controller:8776/v2/%\(tenant_id\)s \
  --internalurl http://controller:8776/v2/%\(tenant_id\)s \
  --adminurl http://controller:8776/v2/%\(tenant_id\)s \
  --region regionOne

# yum install -y openstack-cinder python-cinderclient python-oslo-db

vi /etc/cinder/cinder.conf

[DEFAULT]

...

my_ip =172.17.80.210

verbose = True

auth_strategy = keystone

rpc_backend = rabbit

rabbit_host = controller

rabbit_password =123456

[database]

...

connection=mysql://cinder:test01@controller/cinder

[keystone_authtoken]

...

auth_uri = http://controller:5000/v2.0

identity_uri = http://controller:35357

admin_tenant_name = service

admin_user = cinder

admin_password =test01

# su -s /bin/sh -c "cinder-manage db sync" cinder

# systemctl enable openstack-cinder-api.service openstack-cinder-scheduler.service

# systemctl start openstack-cinder-api.service openstack-cinder-scheduler.service

7.2 Install and configure the storage node

IP address: 172.17.80.214

Install the base packages as described in the basic environment section (1.3):

# yum install -y yum-plugin-priorities

# yum install http://dl.fedoraproject.org/pub/epel/7/x86_64/e/epel-release-7-5.noarch.rpm -y

# yum install http://rdo.fedorapeople.org/openstack-juno/rdo-release-juno.rpm -y

# yum upgrade -y

# yum install openstack-selinux -y

If yum reports that the package cannot be found, install it directly from its URL:

# yum install http://repos.fedorapeople.org/repos/openstack/openstack-juno/epel-7/openstack-selinux-0.5.19-2.el7ost.noarch.rpm

vi /etc/hosts

172.17.80.214 block1

# yum install lvm2 -y

# systemctl enable lvm2-lvmetad.service

# systemctl start lvm2-lvmetad.service

In this demo, the virtual machine has two disks, and one of them is dedicated to storage. Adjust the device names to your environment.

# pvcreate /dev/xvdb1

# vgcreate cinder-volumes /dev/xvdb1

vi /etc/lvm/lvm.conf

devices {

...

filter = [ "a/xvdb/", "r/.*/"]

Warning

If your storage nodes use LVM on the operating system disk, you must also add the associated device to the filter. For example, if the /dev/sda device contains the operating system:

filter = [ "a/sda/", "a/sdb/", "r/.*/"]

Similarly, if your compute nodes use LVM on the operating system disk, you must also modify the filter in the /etc/lvm/lvm.conf file on those nodes to include only the operating system disk. For example, if the /dev/sda device contains the operating system:

filter = [ "a/sda/", "r/.*/"]
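The filter lines above follow a fixed pattern: one accept entry ("a/DEVICE/") per device, followed by a final reject-everything entry ("r/.*/"). This sketch builds that line from a list of device names. `lvm_filter` is a hypothetical helper, and its output still has to be placed inside the devices { } section of /etc/lvm/lvm.conf by hand.

```shell
# Hypothetical helper: build an lvm.conf "filter" line that accepts only
# the given devices and rejects everything else.
lvm_filter() {
  local out='filter = [' dev
  for dev in "$@"; do
    out="$out \"a/$dev/\","
  done
  printf '%s "r/.*/"]\n' "$out"
}
```

`lvm_filter xvdb` reproduces the storage-node line used earlier, and `lvm_filter sda sdb` the first example in the warning.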

# yum install openstack-cinder targetcli python-oslo-db MySQL-python -y

vi /etc/cinder/cinder.conf

[DEFAULT]

...

verbose = True

rpc_backend = rabbit

rabbit_host = controller

rabbit_password =123456

iscsi_helper = lioadm

glance_host = controller

my_ip =172.17.80.214

auth_strategy = keystone

[database]

...

connection = mysql://cinder:test01@controller/cinder

[keystone_authtoken]

...

auth_uri = http://controller:5000/v2.0

identity_uri = http://controller:35357

admin_tenant_name = service

admin_user = cinder

admin_password =test01

# systemctl enable openstack-cinder-volume.service target.service

# systemctl start openstack-cinder-volume.service target.service

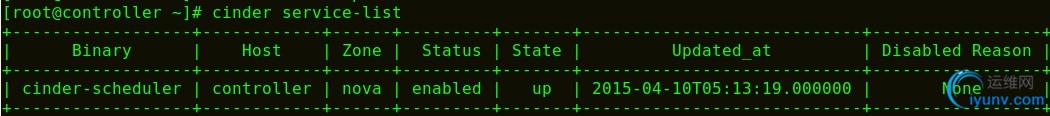

7.3、测试操作:

controller node

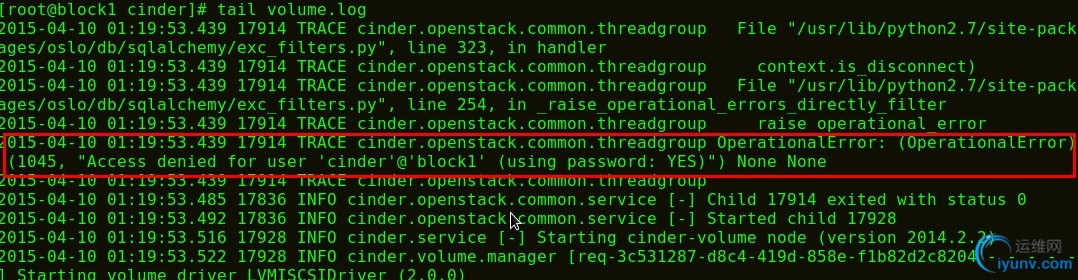

看不到block存储节点?

检查block节点日志发现mysql连接失败

这是由于mysql默认不允许远程机器连接导致的需要在controller节点允许block1节点连接
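A quick check for this cause is to read bind-address from my.cnf on the controller. This is a sketch. `check_bind_address` is a hypothetical helper, it does not distinguish option-file sections, and it ignores files included from /etc/my.cnf.d/. When bind-address is not set, MariaDB listens on all interfaces.

```shell
# Hypothetical check: print the configured bind-address and fail when the
# server only listens on loopback, which blocks remote nodes such as block1.
check_bind_address() {
  local cnf=${1:-/etc/my.cnf} addr
  # Accept both spellings, bind-address and bind_address; keep the last one
  addr=$(sed -n 's/^[[:space:]]*bind[-_]address[[:space:]]*=[[:space:]]*//p' "$cnf" |
    tail -n 1 | tr -d '[:space:]')
  echo "bind-address: ${addr:-<not set>}"
  case $addr in
    127.0.0.1|localhost)
      echo "WARNING: MySQL only accepts local connections" >&2
      return 1 ;;
  esac
}
```

If the check fails, change bind-address to the controller's IP address and restart mariadb.service before restarting the cinder volume service.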

block1节点重启服务

# systemctl restart openstack-cinder-volume.service

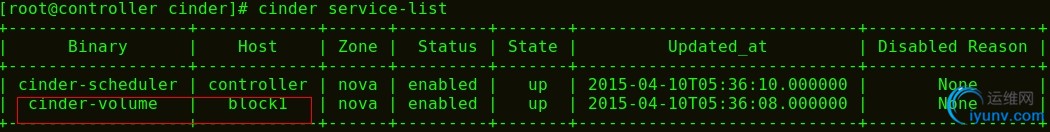

controller 节点服务显示正常

创建20G的卷

# cinder create --display-name demo-volume1 20

8. Add Object Storage

8.1 On the controller node

# keystone user-create --name swift --pass test01

# keystone user-role-add --user swift --tenant service --role admin

# keystone service-create --name swift --type object-store --description "OpenStack Object Storage"

# keystone endpoint-create --service-id $(keystone service-list | awk '/ object-store / {print $2}') --publicurl 'http://controller:8080/v1/AUTH_%(tenant_id)s' --internalurl 'http://controller:8080/v1/AUTH_%(tenant_id)s' --adminurl http://controller:8080 --region regionOne

# yum install openstack-swift-proxy python-swiftclient python-keystone-auth-token \
  python-keystonemiddleware memcached -y

Obtain the proxy service configuration file from the Object Storage source repository:

# curl -o /etc/swift/proxy-server.conf \
  https://raw.githubusercontent.com/openstack/swift/stable/juno/etc/proxy-server.conf-sample

vi /etc/swift/proxy-server.conf

[DEFAULT]

...

bind_port = 8080

user = swift

swift_dir = /etc/swift

[pipeline:main]

pipeline = authtoken cache healthcheck keystoneauth proxy-logging proxy-server

[app:proxy-server]

...

allow_account_management = true

account_autocreate = true

[filter:keystoneauth]

use = egg:swift#keystoneauth

...

operator_roles = admin,_member_

[filter:authtoken]

paste.filter_factory = keystonemiddleware.auth_token:filter_factory

...

auth_uri = http://controller:5000/v2.0

identity_uri = http://controller:35357

admin_tenant_name = service

admin_user = swift

admin_password = test01

delay_auth_decision = true

[filter:cache]

...

memcache_servers = 127.0.0.1:11211

8.2 Install and configure the storage nodes

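Installation of the base packages is omitted here (see 1.3).

The node has two disks: one for the operating system and one for storage.

IP address: 172.17.80.213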

# yum install -y xfsprogs rsync

# mkfs.xfs /dev/xvdb1    # the virtual machine's data disk

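# mkdir -p /srv/node/xvdb1

The directory name under /srv/node must match the device name (xvdb1) used when adding the disk to the rings in 8.3.

vi /etc/fstab

/dev/xvdb1 /srv/node/xvdb1 xfs noatime,nodiratime,nobarrier,logbufs=8 0 2

# mount /srv/node/xvdb1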
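The fstab entry can be added so that re-running the setup does not duplicate it. This is a sketch using the mount options from this guide. `add_swift_fstab` is a hypothetical helper that takes the device and the mount point as arguments.

```shell
# Hypothetical helper: add the XFS mount for a Swift disk to fstab unless
# the mount point is already listed there.
# Usage: add_swift_fstab DEVICE MOUNT_POINT [FSTAB]
add_swift_fstab() {
  local dev=$1 mnt=$2 fstab=${3:-/etc/fstab}
  # Field 2 of a non-comment fstab line is the mount point
  if ! awk -v m="$mnt" '$1 !~ /^#/ && $2 == m { found = 1 } END { exit !found }' "$fstab"; then
    printf '%s %s xfs noatime,nodiratime,nobarrier,logbufs=8 0 2\n' "$dev" "$mnt" >> "$fstab"
  fi
}
```

Pass the device and the mount point created above, then run mount for that mount point.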

vi /etc/rsyncd.conf

uid = swift

gid = swift

log file = /var/log/rsyncd.log

pid file = /var/run/rsyncd.pid

address = 172.17.80.213

[account]

max connections = 2

path = /srv/node/

read only = false

lock file = /var/lock/account.lock

[container]

max connections = 2

path = /srv/node/

read only = false

lock file = /var/lock/container.lock

[object]

max connections = 2

path = /srv/node/

read only = false

lock file = /var/lock/object.lock

# systemctl enable rsyncd.service

# systemctl start rsyncd.service

Install the packages:

# yum install -y openstack-swift-account openstack-swift-container openstack-swift-object

# curl -o /etc/swift/account-server.conf \
  https://raw.githubusercontent.com/openstack/swift/stable/juno/etc/account-server.conf-sample

# curl -o /etc/swift/container-server.conf \
  https://raw.githubusercontent.com/openstack/swift/stable/juno/etc/container-server.conf-sample

# curl -o /etc/swift/object-server.conf \
  https://raw.githubusercontent.com/openstack/swift/stable/juno/etc/object-server.conf-sample

vi /etc/swift/account-server.conf

[DEFAULT]

...

bind_ip = 172.17.80.213

bind_port = 6002

user = swift

swift_dir = /etc/swift

devices = /srv/node

[pipeline:main]

pipeline = healthcheck recon account-server

[filter:recon]

...

recon_cache_path = /var/cache/swift

vi /etc/swift/container-server.conf

[DEFAULT]

...

bind_ip =172.17.80.213

bind_port = 6001

user = swift

swift_dir = /etc/swift

devices = /srv/node

[pipeline:main]

pipeline = healthcheck recon container-server

[filter:recon]

...

recon_cache_path = /var/cache/swift

vi /etc/swift/object-server.conf

[DEFAULT]

...

bind_ip = 172.17.80.213

bind_port = 6000

user = swift

swift_dir = /etc/swift

devices = /srv/node

[pipeline:main]

pipeline = healthcheck recon object-server

[filter:recon]

...

recon_cache_path = /var/cache/swift

# chown -R swift:swift /srv/node

# mkdir -p /var/cache/swift

# chown -R swift:swift /var/cache/swift

8.3 On the controller node

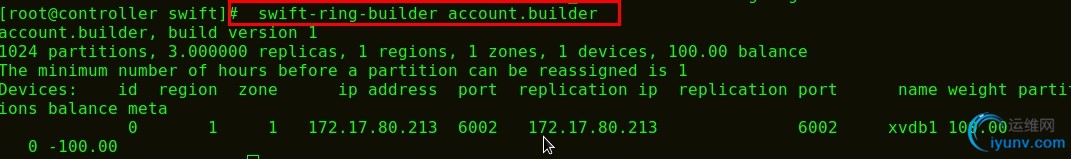

8.3.1 Account ring

# cd /etc/swift/

Create the base account.builder file:

# swift-ring-builder account.builder create 10 3 1

Add each storage node to the ring:

# swift-ring-builder account.builder add r1z1-172.17.80.213:6002/xvdb1 100

Verify the ring contents:

# swift-ring-builder account.builder

# swift-ring-builder account.builder rebalance

8.3.2 Container ring

# cd /etc/swift

# swift-ring-builder container.builder create 10 3 1

# swift-ring-builder container.builder add r1z1-172.17.80.213:6001/xvdb1 100

# swift-ring-builder container.builder

# swift-ring-builder container.builder rebalance

8.3.3 Object ring

# cd /etc/swift

# swift-ring-builder object.builder create 10 3 1

# swift-ring-builder object.builder add r1z1-172.17.80.213:6000/xvdb1 100

# swift-ring-builder object.builder

# swift-ring-builder object.builder rebalance
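The three rings differ only in the builder name and the port, so the commands can be generated in one loop. This is a sketch that only prints the commands (a dry run) and assumes a single storage node with the ports configured in 8.2. `print_ring_commands` is a hypothetical helper. The device argument must be the directory name under /srv/node on the storage node.

```shell
# Hypothetical helper: print the create/add/rebalance commands for the
# account, container and object rings (part power 10, 3 replicas,
# min_part_hours 1, region 1, zone 1, weight 100, as in 8.3).
# Usage: print_ring_commands STORAGE_IP DEVICE
print_ring_commands() {
  local ip=$1 dev=$2 entry name port
  for entry in account:6002 container:6001 object:6000; do
    name=${entry%%:*}
    port=${entry#*:}
    echo "swift-ring-builder $name.builder create 10 3 1"
    echo "swift-ring-builder $name.builder add r1z1-$ip:$port/$dev 100"
    echo "swift-ring-builder $name.builder rebalance"
  done
}
```

Review the output first, then execute it in /etc/swift, for example: print_ring_commands 172.17.80.213 xvdb1 | (cd /etc/swift && sh)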

Distribute ring configuration files

Copy the account.ring.gz, container.ring.gz, and object.ring.gz files to the /etc/swift directory on each storage node and any additional nodes running the proxy service.

Finalize the installation

8.4 On the object storage node

Configure the hash path values and the default storage policy:

# curl -o /etc/swift/swift.conf \
  https://raw.githubusercontent.com/openstack/swift/stable/juno/etc/swift.conf-sample

vi /

[swift-hash]

swift_hash_path_suffix = test01

swift_hash_path_prefix = test01

[storage-policy:0]

name = Policy-0

default = yes
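A guessable value such as test01 is a weak choice for swift_hash_path_prefix and swift_hash_path_suffix. The values should be secret, and they must not change once the cluster holds data. This sketch draws random hex strings from /dev/urandom. `random_hex` is a hypothetical helper; the `openssl rand -hex 10` command from 2.1 works equally well.

```shell
# Hypothetical helper: print N random bytes (default 10) as lowercase hex.
random_hex() {
  local bytes=${1:-10}
  # -An: no offsets, -tx1: one-byte hex values; strip spaces and newlines
  od -An -N"$bytes" -tx1 /dev/urandom | tr -d ' \n'
}
```

For example: echo "swift_hash_path_suffix = $(random_hex)". Generate the values once and copy the same swift.conf to every node.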

Copy the swift.conf file to the /etc/swift directory on each storage node and any additional nodes running the proxy service.

# chown -R swift:swift /etc/swift    # on all nodes
