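This document is adapted from the installation guide for the E (Essex) release. If you run into problems, feel free to leave a comment.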

The places that need to be changed are highlighted in blue. My technical skills are limited, so please point out any mistakes you find.

Step 0: Prepare the operating system

Install the final release of Ubuntu 12.04.

On Dell R410 and R610 servers, additionally do the following:

vi /boot/grub/grub.cfg

Find the kernel line that looks like the one below and append quiet rootdelay=35:

linux /boot/vmlinuz-3.2.0-20-generic root=UUID=c8eca5b3-2334-4a87-87c8-48e3a3544c4a ro quiet rootdelay=35
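Edits made directly in /boot/grub/grub.cfg are lost whenever update-grub regenerates that file (for example after a kernel update). A more durable alternative is to add the option to GRUB_CMDLINE_LINUX_DEFAULT in /etc/default/grub and then run update-grub. The sketch below does that; the function name add_rootdelay is hypothetical, and it assumes GNU sed (as shipped with Ubuntu).

```shell
#!/bin/sh
# Sketch: append rootdelay=35 to GRUB_CMDLINE_LINUX_DEFAULT so that it
# survives update-grub. The file path defaults to the Ubuntu location.
add_rootdelay() {
  local file="${1:-/etc/default/grub}"
  # Idempotent: do nothing if a rootdelay option is already present.
  if grep -q 'rootdelay=' "$file"; then
    return 0
  fi
  # Insert " rootdelay=35" just before the closing quote of the variable.
  sed -i 's/^\(GRUB_CMDLINE_LINUX_DEFAULT="[^"]*\)"/\1 rootdelay=35"/' "$file"
}

# Usage (as root):
#   add_rootdelay /etc/default/grub && update-grub
```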

Add the official Folsom package source:

apt-get install python-software-properties

apt-add-repository ppa:openstack-ubuntu-testing/folsom-trunk-testing

apt-get update

Step 1: Prepare your System

Install NTP by issuing this command on the command line:

apt-get install ntp

Then, open /etc/ntp.conf in your favourite editor and add these lines:

server ntp.ubuntu.com iburst

server 127.127.1.0

fudge 127.127.1.0 stratum 10
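If you prefer not to edit ntp.conf by hand, a small idempotent helper like the one below appends exactly these three lines and skips any that are already present, so it can be re-run safely. The function name add_ntp_servers is hypothetical; the lines themselves are the ones shown above.

```shell
#!/bin/sh
# Sketch: append the three NTP lines to ntp.conf unless already present.
add_ntp_servers() {
  local conf="${1:-/etc/ntp.conf}" line
  while IFS= read -r line; do
    # -x: match the whole line, -F: treat it as a fixed string.
    grep -qxF "$line" "$conf" 2>/dev/null || printf '%s\n' "$line" >> "$conf"
  done <<'EOF'
server ntp.ubuntu.com iburst
server 127.127.1.0
fudge 127.127.1.0 stratum 10
EOF
}

# Usage (as root):
#   add_ntp_servers && service ntp restart
#   ntpq -p    # lists the configured peers and their state
```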

Restart NTP by issuing the command

service ntp restart

to finish this part of the installation. Next, install tgt, which provides an iSCSI target (we'll need it for nova-volume):

apt-get install tgt

Then start it with

service tgt start

Given that we'll be running nova-compute on this machine as well, we'll also need the open-iscsi client. Install it with:

apt-get install -y open-iscsi open-iscsi-utils

Next, we need to make sure that our network is working as expected. As pointed out earlier, the machine we're doing this on has two network interfaces, eth0 and eth1. eth0 is the machine's link to the outside world; eth1 is the interface we'll be using for our virtual machines. Nova will also bridge the VMs' traffic to the internet via eth0. To achieve this kind of setup, first create the corresponding network configuration in /etc/network/interfaces (assuming that you are not using NetworkManager). An example could look like this:

auto lo

iface lo inet loopback

auto eth0

iface eth0 inet static

address 10.42.0.6

network 10.42.0.0

netmask 255.255.255.0

broadcast 10.42.0.255

gateway 10.42.0.1

auto eth1

iface eth1 inet static

address 192.168.33.1

network 192.168.33.0

netmask 255.255.255.0

broadcast 192.168.33.255

As you can see, the "public" network here is 10.42.0.0/24, while the "private" network (within which our VMs will be residing) is 192.168.33.0/24. This machine's IP address in the public network is 10.42.0.6 (the configuration files later in this guide use 127.0.0.1 instead, since all services run on this one machine; MySQL in particular is always reached via 127.0.0.1). After changing your network interfaces definition accordingly, make sure that the bridge-utils package is installed. Should it be missing on your system, install it with

apt-get install -y bridge-utils

Then, restart your network with

/etc/init.d/networking restart
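A malformed netmask, such as one with only three octets, is easy to miss in /etc/network/interfaces. The hypothetical helper below converts a dotted-quad netmask into its prefix length and fails on malformed or non-contiguous masks, which gives a quick sanity check for the values you entered.

```shell
#!/bin/sh
# Sketch: print the prefix length of a dotted-quad netmask
# (255.255.255.0 -> 24); return 1 if the mask is malformed.
mask_to_prefix() {
  local old_ifs="$IFS" n=0 octet inv prefix=32
  # Split the mask on dots into $1..$4.
  IFS=.
  set -- $1
  IFS="$old_ifs"
  [ "$#" -eq 4 ] || return 1
  for octet in "$@"; do
    case "$octet" in ''|*[!0-9]*) return 1 ;; esac
    [ "$octet" -le 255 ] || return 1
    n=$(( n * 256 + octet ))
  done
  # The host part (inverted mask) must be all ones, i.e. inv + 1 is a power of two.
  inv=$(( 4294967295 - n ))
  [ $(( inv & (inv + 1) )) -eq 0 ] || return 1
  # Each host bit reduces the prefix length by one.
  while [ "$inv" -gt 0 ]; do
    inv=$(( inv / 2 ))
    prefix=$(( prefix - 1 ))
  done
  echo "$prefix"
}

# Usage:
#   mask_to_prefix 255.255.255.0    # prints 24
```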

We'll also need RabbitMQ, an AMQP implementation that all OpenStack components use to communicate with each other, and memcached:

apt-get install -y rabbitmq-server memcached python-memcache

As we'll also want to run KVM virtual machines on this very same host, we'll need KVM and libvirt, which OpenStack uses to control virtual machines. Install these packages with:

apt-get install -y kvm libvirt-bin

Last but not least, make sure you have an LVM volume group called nova-volumes; the nova-volume service will need such a VG later on.
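If you do not have such a VG yet, the sketch below shows one way to create it on a spare partition with the standard LVM tools pvcreate and vgcreate. The device name /dev/sdb1 in the usage comment is purely hypothetical, and by default the function only prints the commands it would run, because pvcreate destroys whatever is on the partition.

```shell
#!/bin/sh
# Sketch: create the nova-volumes volume group on a spare partition.
# DRY_RUN=1 (the default) only prints the commands; DRY_RUN=0 runs them.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

create_nova_vg() {
  local dev="$1"
  [ -n "$dev" ] || { echo "usage: create_nova_vg DEVICE" >&2; return 2; }
  run pvcreate "$dev"
  run vgcreate nova-volumes "$dev"
}

# Usage (as root, after double-checking the device name):
#   create_nova_vg /dev/sdb1              # preview only
#   DRY_RUN=0 create_nova_vg /dev/sdb1    # really create the VG
#   vgs nova-volumes                      # verify
```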

Step 2: Install MySQL and create the necessary databases and users

Nova and glance will use MySQL to store their runtime data. To make sure they can do that, we'll install and set up MySQL. Do this:

apt-get install -y mysql-server python-mysqldb

When the package installation is done and you want other machines (read: OpenStack computing nodes) to be able to talk to that MySQL database, too, open up /etc/mysql/my.cnf in your favourite editor and change this line:

bind-address = 127.0.0.1

to look like this:

bind-address = 0.0.0.0

Then, restart MySQL:

service mysql restart

Now log in to MySQL as root (mysql -u root -p), create the databases and grant the user account access to them:

CREATE DATABASE keystone;

CREATE DATABASE nova;

CREATE DATABASE glance;

GRANT ALL PRIVILEGES ON *.* TO 'folsom'@'%' IDENTIFIED BY 'password';

Check the database privileges carefully here: problems with them can cause errors where the folsom user cannot connect to the database.
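One possible cause of such connection errors is that a fresh MySQL installation may contain anonymous accounts (''@'localhost'), which MySQL can match before 'folsom'@'%' for local connections. The sketch below, a hypothetical gen_openstack_sql function, prints SQL that grants the user access to the three databases only (instead of ON *.*), both from any host and explicitly from localhost.

```shell
#!/bin/sh
# Sketch: print the CREATE DATABASE / GRANT statements for the OpenStack
# databases. Passwords containing a single quote are not escaped here.
gen_openstack_sql() {
  local user="$1" password="$2" db host
  for db in keystone nova glance; do
    printf 'CREATE DATABASE IF NOT EXISTS %s;\n' "$db"
    for host in '%' localhost; do
      printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'%s' IDENTIFIED BY '%s';\n" \
        "$db" "$user" "$host" "$password"
    done
  done
}

# Usage:
#   gen_openstack_sql folsom password | mysql -u root -p
#   mysql -u folsom -ppassword -h 127.0.0.1 nova -e 'SELECT 1'   # test login
```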

Step 3: Install and configure Keystone

We can finally get to OpenStack now, and we'll start by installing the Identity component, codenamed Keystone. Install the corresponding packages:

apt-get install keystone python-keystone python-keystoneclient python-yaml

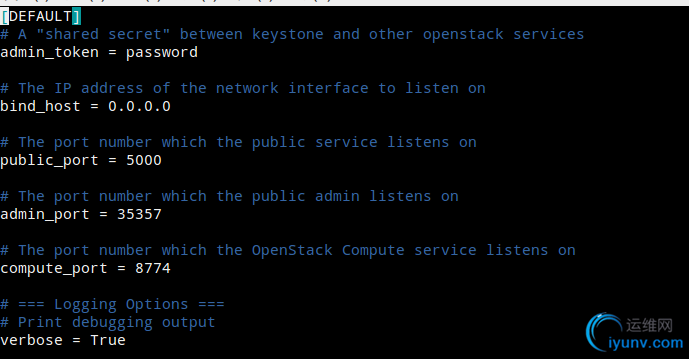

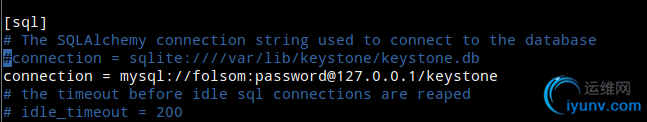

Edit /etc/keystone/keystone.conf as shown above.
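For this setup, the main change in keystone.conf is usually pointing the SQL backend at the keystone database created in step 2. As an assumption (check your own file), that is the connection option in the [sql] section. The hypothetical set_conf_value helper below rewrites such a "key = value" line in place; after switching to MySQL you will probably also need to create the tables with keystone-manage db_sync.

```shell
#!/bin/sh
# Sketch: replace the value of every uncommented "KEY = ..." line in FILE.
# Returns 1 if no such line exists. "|" is used as the sed delimiter because
# values such as database URLs contain "/"; values containing "|", "&" or
# "\" would need escaping and are not handled here.
set_conf_value() {
  local file="$1" key="$2" value="$3"
  grep -q "^${key}[[:space:]]*=" "$file" || return 1
  sed -i "s|^${key}[[:space:]]*=.*|${key} = ${value}|" "$file"
}

# Usage (as root):
#   set_conf_value /etc/keystone/keystone.conf connection \
#       mysql://folsom:password@127.0.0.1/keystone
#   keystone-manage db_sync
```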

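Download the following two files:

wget https://raw.githubusercontent.com/nimbis/keystone-init/master/config.yaml

wget https://raw.githubusercontent.com/nimbis/keystone-init/master/keystone-init.py

Edit config.yaml; its contents are simple and should be self-explanatory.

Restart the keystone service:

service keystone restart

After editing config.yaml, make the script executable and run it:

chmod +x keystone-init.py

./keystone-init.py config.yaml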

Step 4: Install and configure Glance

The next step on our way to OpenStack is its Image Service, codenamed Glance. First, install the packages necessary for it:

apt-get install glance glance-api glance-client glance-common glance-registry python-glance

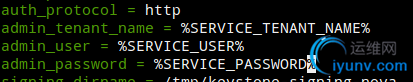

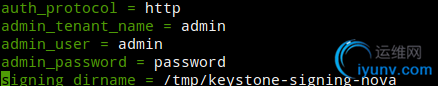

When that is done, open /etc/glance/glance-api-paste.ini in an editor and scroll down to the end of the document. You'll see these three lines at its very end:

admin_tenant_name= %SERVICE_TENANT_NAME%

admin_user = %SERVICE_USER%

admin_password = %SERVICE_PASSWORD%

Fill in values here appropriate for your setup: the tenant, user and password must match what you created in Keystone with config.yaml. (The guide this one is based on used a keystone_data.sh script, after which admin_tenant_name and admin_user were both admin and admin_password was the ADMIN_PASSWORD value from that script.) In this example, we'll use admin, admin and password.

Ensure that the glance-api pipeline section includes authtoken:

[pipeline:glance-api]

pipeline = versionnegotiation authtoken auth-context apiv1app

After this, open /etc/glance/glance-registry-paste.ini and scroll to that file's end, too. Adapt it in the same way you adapted /etc/glance/glance-api-paste.ini earlier.
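Since both paste files contain the same three placeholders, the substitution can be scripted. The sketch below, a hypothetical fill_auth_placeholders function, replaces %SERVICE_TENANT_NAME%, %SERVICE_USER% and %SERVICE_PASSWORD% with the values you pass in and fails if any %SERVICE_ placeholder is left over.

```shell
#!/bin/sh
# Sketch: fill in the Keystone credential placeholders in a paste file.
# Values containing "|", "&" or "\" are not escaped here.
fill_auth_placeholders() {
  local file="$1" tenant="$2" user="$3" password="$4"
  sed -i \
    -e "s|%SERVICE_TENANT_NAME%|${tenant}|g" \
    -e "s|%SERVICE_USER%|${user}|g" \
    -e "s|%SERVICE_PASSWORD%|${password}|g" \
    "$file"
  # Report failure if any placeholder survived.
  ! grep -q '%SERVICE_' "$file"
}

# Usage (as root):
#   for f in /etc/glance/glance-api-paste.ini /etc/glance/glance-registry-paste.ini; do
#     fill_auth_placeholders "$f" admin admin password
#   done
```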

Ensure that the glance-registry pipeline section includes authtoken:

[pipeline:glance-registry]

#pipeline = context registryapp

# NOTE: use the following pipeline for keystone

pipeline = authtoken auth-context context registryapp

Please open /etc/glance/glance-registry.conf now and scroll down to the line starting with sql_connection. This is where we tell Glance to use MySQL; according to the MySQL configuration we created earlier, the sql_connection-line for this example would look like this:

sql_connection = mysql://folsom:password@127.0.0.1/glance

Because MySQL runs on this same machine, 127.0.0.1 works here; if your database server runs on another host, use that host's IP instead. After this, scroll down until the end of the document and add these two lines:

[paste_deploy]

flavor = keystone

These two lines instruct the Glance Registry to use Keystone for authentication, which is what we want. Now we need to do the same for the Glance API. Open /etc/glance/glance-api.conf and add these two lines at the end of the document:

[paste_deploy]

flavor = keystone

Afterwards, you need to restart Glance:

service glance-api restart && service glance-registry restart

Now, what's the best way to verify that Glance is working as expected? The glance command-line utility can do that for us, but to work properly, it needs to know how we want to authenticate ourselves to Glance (and, subsequently, Keystone). This is a very good moment to define four environment variables that we'll need continuously when working with OpenStack: OS_TENANT_NAME, OS_USERNAME, OS_PASSWORD and OS_AUTH_URL. Here's what they should look like in our example scenario:

export OS_TENANT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=password

export OS_AUTH_URL="http://localhost:5000/v2.0/"
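Because these variables are needed in every new shell, it can be convenient to keep them in a file and load it with the "." command. The sketch below writes such a file; the default path ~/openrc and the function name write_openrc are arbitrary choices, and the values are the example ones from above.

```shell
#!/bin/sh
# Sketch: store the four OpenStack credentials in a file that can be
# loaded with ". FILE" in each new shell.
write_openrc() {
  local file="${1:-$HOME/openrc}"
  cat > "$file" <<'EOF'
export OS_TENANT_NAME=admin
export OS_USERNAME=admin
export OS_PASSWORD=password
export OS_AUTH_URL="http://localhost:5000/v2.0/"
EOF
  # The file contains a password, so make it readable by its owner only.
  chmod 600 "$file"
}

# Usage:
#   write_openrc ~/openrc
#   . ~/openrc
```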

The first three entries are identical to what you inserted into Glance's API configuration files earlier, and the entry for OS_AUTH_URL is mostly generic and should just work. After exporting these variables, you should be able to do

glance index

and get no output at all in return (but the return code will be 0; check with echo $?). If that's the case, Glance is setup correctly and properly connects with Keystone. Now let's add our first image!

We'll be using an Ubuntu UEC image for this. Download one:

wget http://uec-images.ubuntu.com/releases/11.10/release/ubuntu-11.10-server-cloudimg-amd64-disk1.img

Then add this image to Glance:

glance add name="Ubuntu 11.10 cloudimg amd64" is_public=true container_format=ovf disk_format=qcow2 < ubuntu-11.10-server-cloudimg-amd64-disk1.img

After this, if you do

glance index

once more, you should be seeing the freshly added image.

Optionally, you can also add the ttylinux test image, which consists of a separate kernel, ramdisk and machine image:

wget http://smoser.brickies.net/ubuntu/ttylinux-uec/ttylinux-uec-amd64-12.1_2.6.35-22_1.tar.gz

tar -zxvf ttylinux-uec-amd64-12.1_2.6.35-22_1.tar.gz

Obtain an authentication token with curl (this step can be skipped; replace the user name, password and IP with your own):

curl -s -d '{"auth": {"passwordCredentials": {"username": "adminUser", "password": "password"}}}' -H "Content-type: application/json" http://10.10.10.117:5000/v2.0/tokens | python -c "import sys, json; tok = json.loads(sys.stdin.read()); print(tok['access']['token']['id'])"

glance add name="tty-linux-kernel" disk_format=aki container_format=aki < ttylinux-uec-amd64-12.1_2.6.35-22_1-vmlinuz

glance add name="tty-linux-ramdisk" disk_format=ari container_format=ari < ttylinux-uec-amd64-12.1_2.6.35-22_1-loader

Then add the machine image, replacing the kernel_id and ramdisk_id values below with the IDs reported by the two previous commands:

glance add name="tty-linux" disk_format=ami container_format=ami kernel_id=599907ff-296d-4042-a671-d015e34317d2 ramdisk_id=7d9f0378-1640-4e43-8959-701f248d999d < ttylinux-uec-amd64-12.1_2.6.35-22_1.img
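To avoid copying the ttylinux kernel and ramdisk IDs by hand, you can capture them from the output of glance add. The sketch below assumes that glance add prints the ID of the image it just created (the legacy glance client prints a line like "Added new image with ID: ..."); extract_image_id is a hypothetical helper that simply picks the first UUID from its input.

```shell
#!/bin/sh
# Sketch: print the first UUID found on stdin (empty output if none).
extract_image_id() {
  grep -oE '[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}' | head -n 1
}

# Usage:
#   KERNEL_ID=$(glance add name="tty-linux-kernel" disk_format=aki container_format=aki \
#       < ttylinux-uec-amd64-12.1_2.6.35-22_1-vmlinuz | extract_image_id)
#   RAMDISK_ID=$(glance add name="tty-linux-ramdisk" disk_format=ari container_format=ari \
#       < ttylinux-uec-amd64-12.1_2.6.35-22_1-loader | extract_image_id)
#   glance add name="tty-linux" disk_format=ami container_format=ami \
#       kernel_id="$KERNEL_ID" ramdisk_id="$RAMDISK_ID" < ttylinux-uec-amd64-12.1_2.6.35-22_1.img
```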

Step 5: Install and configure Nova

Submitted by martin on Fri, 2012-03-23 22:35 GMT

OpenStack Compute, codenamed Nova, is by far the most important and the most substantial OpenStack component. Whatever you do when it comes to managing VMs will be done by Nova in the background. The good news is: Nova is basically controlled by one configuration file, /etc/nova/nova.conf. Get started by installing all Nova-related components:

apt-get install nova-api nova-cert nova-common nova-compute nova-compute-kvm nova-doc nova-network nova-objectstore nova-scheduler nova-consoleauth novnc python-novnc nova-volume python-nova python-novaclient python-numpy

In the /etc/nova/api-paste.ini file, change the following:

to:

Then, open /etc/nova/nova.conf and replace everything in there with these lines:

[DEFAULT]

dhcpbridge_flagfile=/etc/nova/nova.conf

dhcpbridge=/usr/bin/nova-dhcpbridge

logdir=/var/log/nova

state_path=/var/lib/nova

lock_path=/var/lock/nova

force_dhcp_release=True

iscsi_helper=tgtadm

libvirt_use_virtio_for_bridges=True

connection_type=libvirt

verbose=True

root_helper = sudo nova-rootwrap /etc/nova/rootwrap.conf

ec2_private_dns_show_ip=True

api_paste_config=/etc/nova/api-paste.ini

allow_admin_api=true

use_deprecated_auth=false

auth_strategy=keystone

scheduler_driver=nova.scheduler.simple.SimpleScheduler

s3_host=127.0.0.1

ec2_host=127.0.0.1

rabbit_host=127.0.0.1

cc_host=127.0.0.1

nova_url=http://127.0.0.1:8774/v1.1/

#multi_host remove

#routing_source_ip=127.0.0.1

glance_api_servers=127.0.0.1:9292

image_service=nova.image.glance.GlanceImageService

iscsi_ip_prefix=192.168.20.0

sql_connection=mysql://folsom:password@127.0.0.1/nova

ec2_url=http://127.0.0.1:8773/services/Cloud

keystone_ec2_url=http://127.0.0.1:5000/v2.0/ec2tokens

libvirt_type=kvm

start_guests_on_host_boot=true

resume_guests_state_on_host_boot=true

#novnc

vnc_enabled=true

novnc_enabled=true

vncserver_proxyclient_address=127.0.0.1

vncserver_listen=127.0.0.1

novncproxy_base_url=http://127.0.0.1:6080/vnc_auto.html

xvpvncproxy_base_url=http://127.0.0.1:6081/console

xvpvncproxy_port=6081

xvpvncproxy_host=127.0.0.1

#novncproxy_port=6080

#novncproxy_host=0.0.0.0

# network specific settings

ec2_dmz_host=127.0.0.1

multi_host=True

enabled_apis=ec2,osapi_compute,osapi_volume,metadata

network_manager=nova.network.manager.FlatDHCPManager

public_interface=eth0

flat_interface=eth1

flat_network_bridge=br100

fixed_range=192.168.22.0/27

floating_range=10.0.0.0/27

network_size=32

flat_network_dhcp_start=192.168.22.2

flat_injected=False

verbose=false

#scheduler

max_cores=400

max_gigabytes=10000

skip_isolated_core_check=TRUE

ram_allocation_ratio = 10.0

reserved_host_disk_mb = 100000

reserved_host_memory_mb = 8192

As you can see, many of the entries in this file are self-explanatory; the trickiest part to get right is the network configuration at the end of the file. We're using Nova's FlatDHCP network mode; 192.168.22.0/27 (fixed_range) is the fixed range from which our future VMs will get their IP addresses, with DHCP starting at 192.168.22.2 (flat_network_dhcp_start). Our flat interface is eth1 (nova-network will bridge it into a bridge named br100), and our public interface is eth0. An additional floating range is defined at 10.0.0.0/27 (for those VMs that we want to have a "public IP").

Attention: this configuration uses 127.0.0.1 wherever the original guide used the IP of the author's machine (10.42.0.6). That only works for clients running on this same machine; novncproxy_base_url, for example, is opened by the user's web browser. If other machines need to reach these services, replace 127.0.0.1 with the actual IP of the box you are running this on (for example, 192.168.0.1 if that is your machine's local IP address).

After saving nova.conf, open /etc/nova/api-paste.ini in an editor and scroll down to the end of the file. Adapt it according to the changes you made to Glance's paste files in step 4.

Then, restart all nova services to make the configuration file changes take effect:

for a in libvirt-bin nova-network nova-compute nova-api nova-objectstore nova-scheduler nova-volume nova-vncproxy; do service "$a" stop; done

for a in libvirt-bin nova-network nova-compute nova-api nova-objectstore nova-scheduler nova-volume nova-vncproxy; do service "$a" start; done
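The same stop-all/start-all sequence is needed again later in this step, so it may be convenient to wrap it in a function. The sketch below keeps the service list from the loops above (trim it to the services actually installed on your machine); the names NOVA_SERVICES and restart_nova_services are hypothetical, and with the default DRY_RUN=1 it only prints the commands.

```shell
#!/bin/sh
# Sketch: stop all listed services first, then start them all again.
# DRY_RUN=1 (the default) only prints the commands; DRY_RUN=0 runs them.
NOVA_SERVICES="libvirt-bin nova-network nova-compute nova-api nova-objectstore nova-scheduler nova-volume nova-vncproxy"

restart_nova_services() {
  local action s
  for action in stop start; do
    for s in $NOVA_SERVICES; do
      if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "service $s $action"
      else
        service "$s" "$action"
      fi
    done
  done
}

# Usage (as root):
#   DRY_RUN=0 restart_nova_services
```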

The next step will create all databases Nova needs in MySQL. While we are at it, we can also create the network we want to use for our VMs in the Nova databases. Do this:

nova-manage db sync

nova-manage network create private --fixed_range_v4=192.168.33.0/27 --num_networks=1 --bridge=br100 --bridge_interface=eth1 --network_size=32 --multi_host=T
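--network_size is the number of addresses per network, so for a single network it is consistent to use the size of the --fixed_range_v4 block (nova.conf above also sets network_size=32 for a /27). The hypothetical helper below computes that size from a CIDR string.

```shell
#!/bin/sh
# Sketch: print the number of addresses in an IPv4 CIDR block.
cidr_size() {
  local prefix="${1#*/}"
  # Reject input without a numeric "/prefix" part.
  case "$prefix" in ''|*[!0-9]*) return 1 ;; esac
  [ "$prefix" -le 32 ] || return 1
  echo $(( 1 << (32 - prefix) ))
}

# Usage:
#   cidr_size 192.168.33.0/27    # prints 32
```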

Also, make sure that all files in /etc/nova belong to the nova user and the nova group:

chown -R nova:nova /etc/nova

Then, restart all nova-related services again:

for a in libvirt-bin nova-network nova-compute nova-api nova-objectstore nova-scheduler nova-volume nova-vncproxy; do service "$a" stop; done

for a in libvirt-bin nova-network nova-compute nova-api nova-objectstore nova-scheduler nova-volume nova-vncproxy; do service "$a" start; done

You should now see all these nova-* processes when doing ps auxw. And you should be able to use the numerous nova commands. For example,

nova list

should give you a list of all currently running VMs (none, the list should be empty). And

nova image-list

should show a list of the images you uploaded to Glance in the previous step. If that's the case, Nova is working as expected and you can carry on with starting your first VM.

Step 6: Your first VM

Submitted by martin on Fri, 2012-03-23 22:37 GMT

Once Nova works as desired, starting your first own cloud VM is easy. As we're using an Ubuntu image for this example, which allows SSH key-based login only, we first need to store a public SSH key for our admin user in the OpenStack database. Upload the file containing your SSH public key onto the server (I'll assume the file is called id_rsa.pub) and do this:

nova keypair-add --pub_key id_rsa.pub key1

This will add the key to OpenStack Nova and store it with the name "key1". The only thing left to do after this is firing up your VM. Find out what ID your Ubuntu image has, you can do this with:

nova image-list

When starting a VM, you also need to define the flavor it is supposed to use. Flavors are pre-defined hardware schemes in OpenStack with which you can define what resources your newly created VM has. OpenStack comes with five pre-defined flavors; you can get an overview of the existing flavors with

nova flavor-list

Flavors are referenced by their ID, not by their name. That's important for the actual command to execute to start your VM. That command's syntax basically is this:

nova boot --flavor ID --image Image-UUID --key_name key-name vm_name

So let's assume you want to start a VM with the m1.tiny flavor, which has the ID 1. Let's further assume that your image's UUID in Glance is 9bab7ce7-7523-4d37-831f-c18fbc5cb543 and that you want to use the SSH key key1. Last but not least, you want your new VM to have the name superfrobnicator. Here's the command you would need to start that particular VM:

nova boot --flavor 1 --image 9bab7ce7-7523-4d37-831f-c18fbc5cb543 --key_name key1 superfrobnicator
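Instead of copying the image UUID from nova image-list by hand, you can pick it out of the table by name. The awk-based sketch below, image_id_by_name (a hypothetical name), assumes the usual nova client table layout with the ID in the first column and the Name in the second.

```shell
#!/bin/sh
# Sketch: read "nova image-list" output on stdin and print the ID of the
# first row whose Name column equals NAME exactly (empty output if none).
image_id_by_name() {
  awk -F'|' -v name="$1" '
    NF >= 4 {
      id = $2; n = $3
      gsub(/^[ \t]+|[ \t]+$/, "", id)
      gsub(/^[ \t]+|[ \t]+$/, "", n)
      if (n == name) { print id; exit }
    }'
}

# Usage:
#   IMAGE_ID=$(nova image-list | image_id_by_name "Ubuntu 11.10 cloudimg amd64")
#   nova boot --flavor 1 --image "$IMAGE_ID" --key_name key1 superfrobnicator
```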

After hitting the Enter key, Nova will show you a summary with all important details concerning the new VM. After some seconds, issue the command

nova show superfrobnicator

In the line with the private_network keyword, you'll see the IP address that Nova has assigned to this particular VM. As soon as the VM's status is ACTIVE, you should be able to log into that VM by issuing

ssh -i Private-Key ubuntu@IP

Of course, Private-Key needs to be replaced with the path to your SSH private key, and IP needs to be replaced with the VM's actual IP. If you're using SSH agent forwarding, you can leave out the "-i" parameter altogether.
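Rather than re-running nova show until the status changes, you can poll it. The sketch below assumes nova show prints a two-column Property/Value table containing a status row; the function names vm_status and wait_for_active, the 30 tries and the 5-second delay are all arbitrary choices.

```shell
#!/bin/sh
# Sketch: print the value of the "status" row from "nova show NAME".
vm_status() {
  nova show "$1" | awk -F'|' '{
      k = $2; v = $3
      gsub(/^[ \t]+|[ \t]+$/, "", k)
      gsub(/^[ \t]+|[ \t]+$/, "", v)
      if (k == "status") { print v; exit }
    }'
}

# Poll until the VM is ACTIVE. Returns 1 on ERROR or after TRIES checks.
wait_for_active() {
  local name="$1" tries="${2:-30}" delay="${3:-5}" i=0 status
  while [ "$i" -lt "$tries" ]; do
    status=$(vm_status "$name")
    case "$status" in
      ACTIVE) return 0 ;;
      ERROR) echo "$name is in ERROR state" >&2; return 1 ;;
    esac
    i=$(( i + 1 ))
    sleep "$delay"
  done
  echo "$name did not become ACTIVE after $tries checks" >&2
  return 1
}

# Usage:
#   wait_for_active superfrobnicator && nova show superfrobnicator
```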

Step 7: The OpenStack Dashboard

We can use Nova to start and stop virtual machines now, but up to this point, we can only do it on the command line. That's not good, because typically, we'll want users without high-level administrator skills to be able to start new VMs. There's a solution for this in the OpenStack ecosystem called Dashboard, codenamed Horizon. Horizon is OpenStack's main configuration interface and is based on Django.

Let's get going with it:

apt-get install libapache2-mod-wsgi openstack-dashboard lessc

Make sure that you install at least the version 2012.1~rc2-0ubuntu1 of the openstack-dashboard package as this version contains some important fixes that are necessary for Horizon to work properly.

Then, open /etc/openstack-dashboard/local_settings.py in an editor. Go to the line starting with CACHE_BACKEND and make sure it looks like this:

CACHE_BACKEND = 'memcached://127.0.0.1:11211/'

Then carry out the following steps; otherwise the dashboard does not load its styles and looks very ugly:

mkdir -p /usr/share/openstack-dashboard/bin/less

ln -s $(which lessc) /usr/share/openstack-dashboard/bin/less/lessc

ln -s /usr/share/openstack-dashboard/openstack_dashboard/static /usr/share/openstack-dashboard/static

chown -R www-data:www-data /usr/share/openstack-dashboard/openstack_dashboard/static

(Thanks to Zheng Mingyi (郑明义) from Beijing for this method; I struggled with the ugly dashboard for a long time.)

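If the dashboard cannot be reached after installation because modules are missing, check Apache's error log (/var/log/apache2/error.log) and install the missing modules with pip.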
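To collect the missing module names from the error log in one go, something like the sketch below can help. It assumes the Python 2 message format "ImportError: No module named NAME", and missing_modules is a hypothetical name. Note that the pip package name does not always match the module name (for example, the memcache module comes from the python-memcached package).

```shell
#!/bin/sh
# Sketch: read an Apache error log on stdin and print each missing
# Python module name once, sorted.
missing_modules() {
  sed -n 's/.*ImportError: No module named \([A-Za-z0-9_.]*\).*/\1/p' | sort -u
}

# Usage:
#   missing_modules < /var/log/apache2/error.log
```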

Now restart Apache with

service apache2 restart

After this, point your web browser to the Nova machine's IP address and you should see the OpenStack Dashboard login prompt. Log in as admin with the password you specified. That's it - you're in!

Step 8: Making the euca2ools work

OpenStack offers a full-blown native API for administrator interaction. However, it also has an API compatible with Amazon's AWS service. This means that on Linux you can use not only the native OpenStack clients but also the euca2ools tool suite. Using euca2ools with Keystone is possible, and most of the details are described in this document. Here's the short summary for those who are in a hurry:

export EC2_URL=$(keystone catalog --service ec2 | awk '/ publicURL / { print $4 }')

export CREDS=$(keystone ec2-credentials-create)

export EC2_ACCESS_KEY=$(echo "$CREDS" | awk '/ access / { print $4 }')

export EC2_SECRET_KEY=$(echo "$CREDS" | awk '/ secret / { print $4 }')

After that, the euca2ools should just work (if the euca2ools package is installed, of course). You can try running

euca-describe-images

or

euca-describe-instances

to find out whether it's working or not.

Step 9: Make VNC work

Note: the bridge address cannot be used as the VNC listen address.

Required packages: python-numpy, nova-consoleauth, novnc, python-novnc

VNC settings in nova.conf:

vnc_enabled=true

vncserver_proxyclient_address=127.0.0.1

novncproxy_base_url=http://127.0.0.1:6080/vnc_auto.html

xvpvncproxy_base_url=http://127.0.0.1:6081/console

vncserver_listen=127.0.0.1
