
Perhaps one of the most common types of problems we encounter here at VMware Technical Support relates to loss of network connectivity to one or more virtual machines on a host. Simple as that description sounds, it is vague enough that it isn't always clear where to even start looking for a solution.

Networking in vSphere is a far-reaching topic with many layers. You’ve got your virtual machines, their respective network stacks, Standard vSwitches, Distributed Switches, different load balancing types, physical network adapters, their respective drivers, the VMkernel itself, and we haven’t even begun to mention what lies outside of the host yet.

It may be tempting to quickly draw conclusions and dive right into packet captures and even guest operating system troubleshooting, but knowing what questions to ask can make all the difference when trying to narrow down the problem. More often than not, it winds up being something quite simple.

When it comes to troubleshooting virtual machine network connectivity, the best place to start is simply to gather information about the problem: what works and what doesn’t. Just because you can’t ping something does not mean the virtual machine is completely isolated. It’s always best not to jump to conclusions, and to be as logical and methodical as possible. Get a better view of the whole picture, and then narrow down in areas where it makes sense.

Some initial discovery troubleshooting I’d recommend:

- Is this impacting all virtual machines, or a subset of virtual machines?

- If you move this VM to another host using vMotion or Cold Migration, does the issue persist?

- Is there anything in common between the virtual machines having a problem? For example, are they all in a specific VLAN?

- Are the virtual machines able to communicate with each other on the same vSwitch and Port Group? Or is this a complete loss of network connectivity to the troubled VMs?

- Have you been able to regain connectivity by doing anything? Or is it persistent?

Let me walk you through an example of a common problem in a simple vSphere 5.0 environment utilizing vNetwork Standard Switches. In this example, we have four Ubuntu Server virtual machines on a single ESXi 5.0 host. Three of them are not exhibiting any problems, but for some unknown reason, one of them, ubuntu1, appears to have no network connectivity.

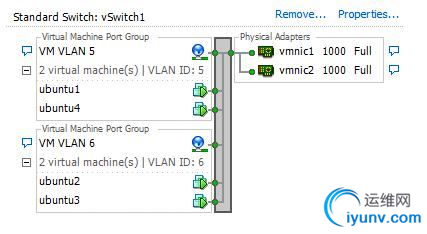

First, we’ll want to have a quick look at the host’s network configuration from the vSphere Client. As you can see below, we have four virtual machines spread across two VLANs – half of them in VLAN 5 and the other half in VLAN 6. Two gigabit network adapters are being used as uplinks in this vSwitch.

Based on this simple vSwitch depiction, we can draw several conclusions that may come in handy during our troubleshooting. First, we can see that VLAN IDs are specified for the two port groups. This indicates that we are doing VST (Virtual Switch Tagging). For this to work correctly, the physical switch ports that vmnic1 and vmnic2 in the network team connect to must be configured as 802.1Q VLAN trunks. We’ll keep this information in the back of our heads for now and move on.
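To make VST a little more concrete, here is a minimal Python sketch of what happens to a VM's traffic on a VLAN 5 port group: the guest sends an ordinary untagged Ethernet frame, and the vSwitch inserts a 4-byte 802.1Q tag (TPID 0x8100, then the priority bits and the 12-bit VLAN ID) before handing the frame to vmnic1 or vmnic2, stripping it again on the way in. The function names and the sample frame are hypothetical, and this illustrates the tag format only, not how the VMkernel implements it. It also shows why the upstream ports must be trunks: every frame arriving there carries a VLAN tag.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value that identifies an 802.1Q tag


def tag_frame(frame: bytes, vlan_id: int, pcp: int = 0) -> bytes:
    """Insert an 802.1Q tag after the destination and source MACs (egress)."""
    if not 1 <= vlan_id <= 4094:
        raise ValueError("VLAN ID must be in the range 1-4094")
    if not 0 <= pcp <= 7:
        raise ValueError("priority (PCP) must be in the range 0-7")
    # TCI: 3-bit priority, 1-bit DEI (left at 0 here), 12-bit VLAN ID.
    tci = (pcp << 13) | vlan_id
    tag = struct.pack("!HH", TPID_8021Q, tci)
    return frame[:12] + tag + frame[12:]


def untag_frame(frame: bytes) -> tuple[int, bytes]:
    """Strip the 802.1Q tag (ingress) and return (vlan_id, untagged_frame)."""
    tpid, tci = struct.unpack("!HH", frame[12:16])
    if tpid != TPID_8021Q:
        raise ValueError("frame does not carry an 802.1Q tag")
    return tci & 0x0FFF, frame[:12] + frame[16:]


# Hypothetical untagged IPv4 frame from a guest: broadcast dst, made-up src MAC.
guest_frame = bytes.fromhex("ffffffffffff" "005056000001" "0800") + b"payload"
on_the_wire = tag_frame(guest_frame, vlan_id=5)
print(on_the_wire[12:16].hex())  # 81000005
```

In the guest nothing about VLAN 5 is visible, which is why, with VST, no VLAN should be configured inside the guest operating system.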


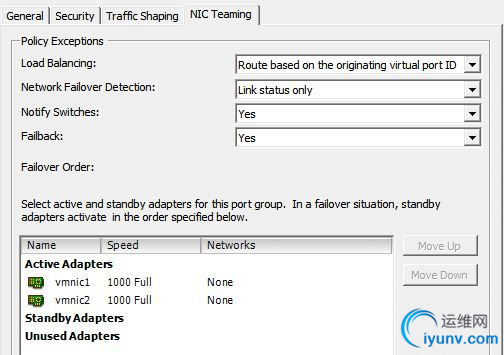

Next, since we have a pair of network adapters in the team, we need to determine the load balancing type employed (this could change the troubleshooting approach quite a bit, so you’ll want to know this right up front). As seen below, we were able to determine that the default load balancing method – Route based on the originating virtual port ID – is being used based on the vSwitch properties.

With this, we know several things about the way the network should be configured.


From a virtual switch perspective, we know that virtual machines will be spread across both network adapters, as both uplinks are in an Active state. Each virtual machine network adapter is bound to exactly one physical adapter at a time. Assuming each virtual machine has only a single virtual network card, each virtual machine will therefore be bound to a single physical adapter on the ESXi host. In theory, we should have a rough 50/50 split of virtual machines across both physical adapters in the team. It may not be a perfect 50/50 split across all VMs, but any single VM on this vSwitch has an equal chance of being on vmnic1 or vmnic2.
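To illustrate the one-uplink-per-vNIC behavior, here is a toy Python model of port ID load balancing. It assumes the uplink is picked as the virtual port ID modulo the number of active uplinks; that formula is an illustrative assumption, not ESXi's documented algorithm, and the port IDs are hypothetical.

```python
from collections import Counter


def assign_uplinks(port_ids, active_uplinks):
    """Toy model of 'Route based on originating virtual port ID'.

    ASSUMPTION: the uplink is chosen as port_id modulo the number of active
    uplinks. ESXi's actual selection logic is internal; the point here is only
    that every virtual port is pinned to exactly one uplink.
    """
    if not active_uplinks:
        raise ValueError("at least one active uplink is required")
    return {port: active_uplinks[port % len(active_uplinks)] for port in port_ids}


# Hypothetical virtual port IDs for four single-vNIC VMs.
assignment = assign_uplinks([10, 11, 12, 13], ["vmnic1", "vmnic2"])
print(assignment)                    # {10: 'vmnic1', 11: 'vmnic2', 12: 'vmnic1', 13: 'vmnic2'}
print(Counter(assignment.values()))  # Counter({'vmnic1': 2, 'vmnic2': 2})
```

The tidy 2/2 split is an artifact of the consecutive, made-up port IDs; on a real host the distribution is only roughly even. What carries over is that a given VM's traffic never leaves through more than one uplink, so a fault on one uplink hits only the VMs bound to it.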

From the physical switch perspective, each of these vmnics should connect to an independent, identically configured 802.1Q VLAN trunk port; this is important. With the default ‘Route based on originating virtual port ID’ load balancing type, the physical switch should not be configured for link aggregation (802.3ad, also known as EtherChannel in the Cisco world, or trunks in the HP world). Link aggregation or bonding should be used only with the IP hash load balancing type.

With this small amount of information, we are able to determine quite a bit about the way the environment should be configured in both ESXi and on the physical switch, and how the load balancing should be behaving.

To recap:

- Each virtual machine vNIC will be bound to exactly one physical network adapter on the host.

- We are doing virtual switch tagging, so the virtual machines should not have any VLANs configured within the guest operating system.

- On the physical switch, vmnic1 and vmnic2 should connect to two independent switch ports configured identically as 802.1Q VLAN trunks.

- Each physical switch port must be configured to allow both VLAN 5 and VLAN 6 as we are tagging both of these VLANs within the vSwitch.

- An EtherChannel or port-channel should not be configured for these two switch ports.
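The recap above amounts to a short checklist. As a sketch, here it is as a Python check over a hypothetical, hand-filled model of the two upstream switch ports; the `SwitchPort` fields, the port names and the `check_team` helper are all made up for illustration, and nothing here talks to a real switch.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class SwitchPort:
    name: str
    is_dot1q_trunk: bool
    allowed_vlans: frozenset[int]
    channel_group: Optional[int] = None  # EtherChannel/port-channel number, if any


def check_team(ports: list[SwitchPort], required_vlans: set[int],
               uses_ip_hash: bool) -> list[str]:
    """Check the switch ports behind one NIC team against the recap rules."""
    problems = []
    for port in ports:
        if not port.is_dot1q_trunk:
            problems.append(f"{port.name}: not an 802.1Q trunk")
        missing = sorted(required_vlans - port.allowed_vlans)
        if missing:
            problems.append(f"{port.name}: trunk does not allow VLAN(s) {missing}")
        # Link aggregation belongs only with IP hash load balancing.
        if port.channel_group is not None and not uses_ip_hash:
            problems.append(f"{port.name}: in a port-channel, but the team is not using IP hash")
    # Every port in the team should be configured identically.
    settings = {(p.is_dot1q_trunk, p.allowed_vlans, p.channel_group) for p in ports}
    if len(settings) > 1:
        problems.append("team ports are not configured identically")
    return problems


team = [
    SwitchPort("port-a", True, frozenset({5, 6})),
    SwitchPort("port-b", True, frozenset({5, 6})),
]
print(check_team(team, {5, 6}, uses_ip_hash=False))  # []
```

An empty list means the modeled ports match every point in the recap; each string otherwise names the rule that was broken.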

From here, we should now try to determine more about the virtual machine’s problem. We’ll start by doing some basic ICMP ping testing between various devices we know to be online:

From ubuntu1:

- Pinging the default gateway: Fails.

- Pinging another virtual machine in VLAN 5 on another ESXi host: Fails.

- Pinging ubuntu2 on the same host: Fails.

- Pinging ubuntu4 on the same host: Succeeds.

This very simple test provides us with a wealth of additional information that will help us narrow things down. From the four pings we just ran, we can determine the following:

Pinging the default gateway fails

Because other VMs, including ubuntu4, can ping the default gateway, we know that it is responding to ICMP requests. A VM’s default gateway should always be in the same subnet/VLAN as the VM, so in this case the core switch interface being pinged is also in VLAN 5, and no routing is needed to reach it. If the VM can’t communicate with its gateway, anything outside of VLAN 5 will be unable to communicate with it, because the VM has no working path to a router.

Pinging another virtual machine in VLAN 5 on another ESXi host

This confirms that the VM is unable to communicate with anything on the physical network and that it is not just a VM to gateway communication problem. Since this target VM being pinged is also in VLAN 5, no routing is required and this appears to be a lower-level problem.

Pinging ubuntu2 on the same host

Even though these two VMs are on the same host, they are in different VLANs and different IP subnets. vSwitches are layer-2 only and do not perform any routing. Communication between VLANs on the same vSwitch requires routing, so all traffic has to go out to a router on the physical network for this to work. Since ubuntu1 can’t reach its gateway on the physical network, this will obviously not work. Be careful when pinging between VMs on the same host: if they are not in the same VLAN, routing will be required, and all traffic between the VMs will go out and back in via the physical adapters.
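To check whether a given pair of VMs should stay on the vSwitch or be routed, compare their VLANs and subnets. Below is a minimal Python sketch using the standard `ipaddress` module. The function name and all IP addresses are hypothetical, since the VMs' addressing isn't shown here, and it assumes each VLAN maps to a single IP subnet, as in this example.

```python
import ipaddress


def path_between(src: str, src_vlan: int, dst: str, dst_vlan: int) -> str:
    """Rough model of how traffic between two VMs on the same vSwitch travels.

    src/dst are interface addresses with prefix length, e.g. "192.168.5.11/24".
    The vSwitch is layer 2 only, so traffic stays on it only when both VMs share
    a VLAN and an IP subnet; anything else has to be routed on the physical network.
    """
    src_if = ipaddress.ip_interface(src)
    dst_if = ipaddress.ip_interface(dst)
    if src_vlan == dst_vlan and dst_if.ip in src_if.network:
        return "switched locally on the vSwitch"
    return "routed via the physical network"


# Hypothetical addressing for ubuntu1 (VLAN 5), ubuntu4 (VLAN 5), ubuntu2 (VLAN 6).
print(path_between("192.168.5.11/24", 5, "192.168.5.14/24", 5))  # ubuntu1 -> ubuntu4
print(path_between("192.168.5.11/24", 5, "192.168.6.12/24", 6))  # ubuntu1 -> ubuntu2
```

Only the first pair can succeed while ubuntu1's uplink path is broken; the second depends on the same gateway that ubuntu1 cannot reach.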

Pinging ubuntu4 on the same host

Pinging ubuntu4 in the same VLAN, in the same IP subnet and in the same vSwitch works correctly. This piece of information is very useful because it confirms that the VM’s network stack in the guest operating system is indeed working to some extent. Because ubuntu1 and ubuntu4 share the same portgroup, all communication remains within the vSwitch and does not need to traverse the physical network.

But why is ubuntu1 not working correctly when ubuntu4 is? This is a rhetorical question, by the way. Let’s think back to what we saw earlier: we have two physical NICs and four virtual machines employing ‘Route based on the originating virtual port ID’ load balancing. The ubuntu1 VM cannot communicate on the physical network, but can communicate on the vSwitch. Clearly something is preventing this virtual machine from communicating out to the physical network.

Let’s recap what we’ve learned to this point:

- We know the VM’s guest operating system networking stack is working correctly.

- We know that the vSwitch is configured correctly, as ubuntu4 works fine and is in the same VLAN and Port Group.

- The problem lies in ubuntu1’s inability to communicate out to the physical network.
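The reasoning so far can be condensed into a small decision helper. This is a hypothetical sketch, not a VMware tool. It assumes each ping target is known to be up and answering ICMP, and it only uses the three tests that don't need routing; the cross-VLAN ping to ubuntu2 is left out because it depends on the gateway anyway.

```python
def locate_fault(local_same_vlan_ok: bool, gateway_ok: bool,
                 remote_same_vlan_ok: bool) -> str:
    """Heuristic: turn three ping results from the troubled VM into a next step.

    Assumes every ping target is known to be up and answering ICMP.
    """
    if not local_same_vlan_ok:
        return "check the guest OS network stack, vNIC and port group"
    if not gateway_ok and not remote_same_vlan_ok:
        return "check the path out of the host: uplink, cabling, physical switch port"
    if not gateway_ok:
        return "check the gateway itself"
    if not remote_same_vlan_ok:
        return "check the remote VM or its host"
    return "no layer-2 fault visible from these tests"


# ubuntu1's results: ubuntu4 answers, the gateway and the remote VLAN 5 VM do not.
print(locate_fault(True, False, False))
```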

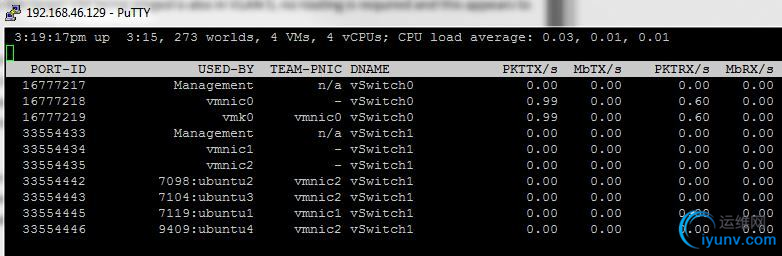

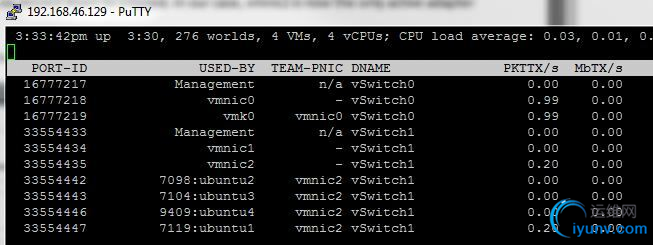

When thinking about this problem from an end-to-end perspective, the next logical place to look would be the physical network adapters. We know that we have two adapters in a NIC team, but which NIC is ubuntu1 actually using? Which NIC is ubuntu4 using? To determine this, we’ll need to connect to the host using SSH or via the Local Tech Support Mode console and use a tool called esxtop. From within esxtop, we simply hit ‘n’ for the networking view and immediately obtain some very interesting information:

As you can see above, this view tells us exactly which physical network adapter each virtual machine is currently bound to. A very clear pattern emerges almost immediately: ubuntu1 is the only virtual machine currently using vmnic1. This is unlikely to be a mere coincidence, but most vSphere administrators will need more evidence before approaching their network teams.
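Once you've noted each VM's binding from esxtop's network view (on the ESXi 5.x builds I'm familiar with, the USED-BY and TEAM-PNIC columns; check yours), spotting the pattern is simple grouping. The helper names below are hypothetical, and the rows are typed in by hand from the situation described here. The name ubuntu3 for the fourth VM is assumed.

```python
from collections import defaultdict


def vms_by_uplink(rows):
    """Group (vm_name, uplink) pairs, as transcribed from esxtop's network view."""
    groups = defaultdict(list)
    for vm, uplink in rows:
        groups[uplink].append(vm)
    return dict(groups)


def suspect_uplinks(rows, broken_vms):
    """Uplinks that carry only broken VMs, provided some other uplink is healthy."""
    groups = vms_by_uplink(rows)
    broken = set(broken_vms)
    only_broken = {u for u, vms in groups.items() if set(vms) <= broken}
    if len(only_broken) == len(groups):
        return []  # every uplink looks bad, so the pattern singles nothing out
    return sorted(only_broken)


# Bindings as described above: ubuntu1 alone on vmnic1, the rest on vmnic2.
rows = [("ubuntu1", "vmnic1"), ("ubuntu2", "vmnic2"),
        ("ubuntu3", "vmnic2"), ("ubuntu4", "vmnic2")]
print(suspect_uplinks(rows, {"ubuntu1"}))  # ['vmnic1']
```

With only one broken VM, a single matching uplink is suggestive rather than conclusive, which is why the next step is to move the VM and retest.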


Assuming there really is a problem with vmnic1 or its associated physical switch port, we would expect the VM to regain connectivity if we forced it to use vmnic2 instead. Let’s confirm this theory.

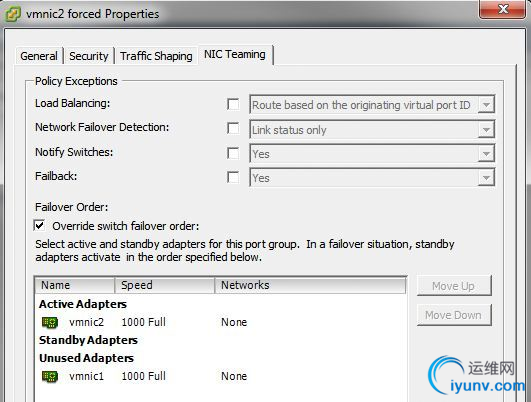

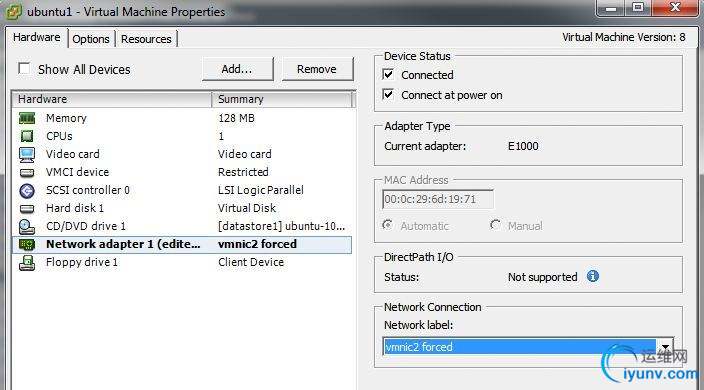

The easiest way to accomplish this would be to create a temporary port group identical to VM VLAN 5, but configured to use only vmnic2. That way when we put ubuntu1’s virtual NIC into that port group, it will have no choice but to use vmnic2. In our example, I created a new port group called ‘vmnic2 forced’:

After creating this new temporary port group, you simply need to check the ‘Override switch failover order’ checkbox in the NIC Teaming tab and ensure that only the desired adapter is listed as Active. All other adapters should be moved down to Unused. In our case, vmnic2 is now the only active adapter associated with this port group called ‘vmnic2 forced’. We can now edit the settings of ubuntu1 and configure its virtual network card to connect to this new port group:


Immediately after making this change – even if the VM is up and running – we should see esxtop reflect the new configuration:


As you can see above, ubuntu1 is now forced onto vmnic2, the same uplink used by the other VMs that are not having a problem.
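The steps above use the vSphere Client. If you'd rather stay in the SSH session you already opened for esxtop, the same temporary port group can likely be built with esxcli. The sketch below only prints the commands. I believe the `esxcli network vswitch standard portgroup` subcommands and options used here are available on ESXi 5.x, but treat that as an assumption and confirm with `--help` on your build. The helper name and the vSwitch name are hypothetical. Afterwards, check in the NIC Teaming tab that vmnic2 is the only active adapter for the port group.

```python
import shlex


def pinned_portgroup_commands(portgroup: str, vswitch: str, vlan_id: int,
                              uplink: str) -> list[str]:
    """Build esxcli commands for a temporary port group pinned to one uplink."""
    pg = shlex.quote(portgroup)  # the port group name contains a space
    vs = shlex.quote(vswitch)
    up = shlex.quote(uplink)
    base = "esxcli network vswitch standard portgroup"
    return [
        f"{base} add --portgroup-name={pg} --vswitch-name={vs}",
        f"{base} set --portgroup-name={pg} --vlan-id={vlan_id}",
        # Overrides the vSwitch failover order with a single active uplink.
        f"{base} policy failover set --portgroup-name={pg} --active-uplinks={up}",
    ]


# "vSwitch0" is an assumed name; use the vSwitch that holds the VLAN 5 port group.
for cmd in pinned_portgroup_commands("vmnic2 forced", "vSwitch0", 5, "vmnic2"):
    print(cmd)
```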

We can then repeat our ping tests:

From ubuntu1:

- Pinging the default gateway: Success.

- Pinging another virtual machine in VLAN 5 on another ESXi host: Success.

- Pinging ubuntu2 on the same host: Success.

- Pinging ubuntu4 on the same host: Success.

And there you have it. We’ve essentially proven that there is some kind of problem communicating out of vmnic1. Moving further along the communication path, it would now be a good idea to examine the physical switch configuration for the two ports associated with vmnic1 and vmnic2. For those of you who work in a large corporation, I’d say you now have enough proof to present a case to your network administration team for investigation on the physical side.

In this example, our ESXi 5.0 host is connected to a single Cisco 2960G gigabit switch and we’ll assume that we’ve been able to physically confirm that vmnic1 plugs into port g1/0/10 and that vmnic2 plugs into port g1/0/11. Finding and confirming the correct upstream physical switch ports is outside of the scope of this example today, but we’ll just assume we’re 100% certain.

Examining the configuration of these switch ports, we see the following:

```
# sh run int g1/0/10
switchport trunk encapsulation dot1q
switchport mode trunk
switchport trunk allowed vlan 6,7,8,9
spanning-tree portfast trunk
no shutdown
```

```
# sh run int g1/0/11
switchport trunk encapsulation dot1q
switchport mode trunk
switchport trunk allowed vlan 5,6,7,8,9
spanning-tree portfast trunk
no shutdown
```

Even if you are not familiar with Cisco IOS commands and switch port configuration, it isn’t difficult to see that there is indeed a difference between g1/0/10 and g1/0/11. It appears that only g1/0/11 is configured to allow VLAN 5. So this problem may have gone unnoticed for some time as it would only impact virtual machines in VLAN 5, and there is about a 50/50 chance they would fall on vmnic1. To make matters worse, certain operations may actually cause a troubled VM to switch from vmnic1 to vmnic2, which can sometimes add to the confusion.
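With more than a couple of team ports, or trunks carrying long VLAN lists, a difference like this is easy to miss by eye. The following Python sketch pulls the allowed VLANs out of `show run` output and reports which required VLANs each port is missing. It is a simplification with hypothetical function names: it only understands plain `switchport trunk allowed vlan` lists and ranges (not the `add`/`remove`/`except`/`all`/`none` forms), and it treats a trunk with no such line as allowing all VLANs, which is the IOS default.

```python
import re
from typing import Optional

ALLOWED_RE = re.compile(r"switchport trunk allowed vlan ([\d,\-]+)")


def allowed_vlans(config: str) -> Optional[set[int]]:
    """VLAN IDs permitted by 'switchport trunk allowed vlan' lines in `config`.

    Returns None when no such line exists (IOS trunks then allow all VLANs).
    Simplification: lines using add/remove/except/all/none are not parsed.
    """
    matches = ALLOWED_RE.findall(config)
    if not matches:
        return None
    vlans: set[int] = set()
    for vlan_list in matches:
        for part in vlan_list.split(","):
            if "-" in part:
                start, end = part.split("-")
                vlans.update(range(int(start), int(end) + 1))
            elif part:
                vlans.add(int(part))
    return vlans


def missing_vlans(configs: dict[str, str], required: set[int]) -> dict[str, set[int]]:
    """For each port, the required VLANs that its trunk does not allow."""
    result = {}
    for port, config in configs.items():
        allowed = allowed_vlans(config)
        if allowed is None:
            continue  # unrestricted trunk
        missing = required - allowed
        if missing:
            result[port] = missing
    return result


# The two interfaces shown above, abbreviated to the relevant lines.
configs = {
    "g1/0/10": "switchport mode trunk\nswitchport trunk allowed vlan 6,7,8,9",
    "g1/0/11": "switchport mode trunk\nswitchport trunk allowed vlan 5,6,7,8,9",
}
print(missing_vlans(configs, {5, 6}))  # {'g1/0/10': {5}}
```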

It is not uncommon that vSphere administrators try several things to get the VM to come online again. Some of the actions that may cause the VM to become bound to a different vmnic include:

- vMotion to another host, and then back again.

- Powering off, and then powering on the VM again.

- Removing a virtual network card and then adding it back, whether it’s the same type or a different one.

Any of the above tasks gives you roughly a 50% chance that connectivity will be restored when dealing with two physical network cards. You may try one of the above actions and the problem persists; you may try it again and things start to work. This often leads administrators to the false assumption that it was just something quirky with the VM’s virtual network adapter, or a problem with ESXi itself. This is why a methodical approach is ideal: it will eventually lead you to the true cause. Remember, each physical switch port associated with a network team should always be configured identically.
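The arithmetic behind that confusion is easy to sketch. Treating each vMotion, power cycle or vNIC re-add as an independent coin flip at the rough 50% figure above (a simplification; the real port assignment isn't random), the chance that a VM is still on the bad uplink shrinks quickly with each attempt. That is exactly why the problem appears to fix itself. The function names are hypothetical.

```python
from fractions import Fraction


def still_broken_after(attempts: int, p_bad: Fraction = Fraction(1, 2)) -> Fraction:
    """Chance the VM is still on the bad uplink after `attempts` rebinds.

    ASSUMPTION: each attempt independently lands the VM on the bad uplink
    with probability p_bad (1/2 with two uplinks).
    """
    return p_bad ** attempts


def expected_attempts_to_recover(p_bad: Fraction = Fraction(1, 2)) -> Fraction:
    """Mean number of attempts until the VM lands on a good uplink (geometric)."""
    return 1 / (1 - p_bad)


for n in range(1, 4):
    print(n, still_broken_after(n))  # 1 1/2, then 2 1/4, then 3 1/8
print(expected_attempts_to_recover())  # 2
```

None of these attempts touches the real fault: the misconfigured switch port is still there for the next VLAN 5 VM that lands on vmnic1.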

Well, that’s it for today. I’m hoping to provide some other example scenarios that lead to other root problems, or ones that deal with different load balancing types. Thanks for reading.

This entry was posted in Datacenter, From the Trenches, How-to by Mike Da Costa.
